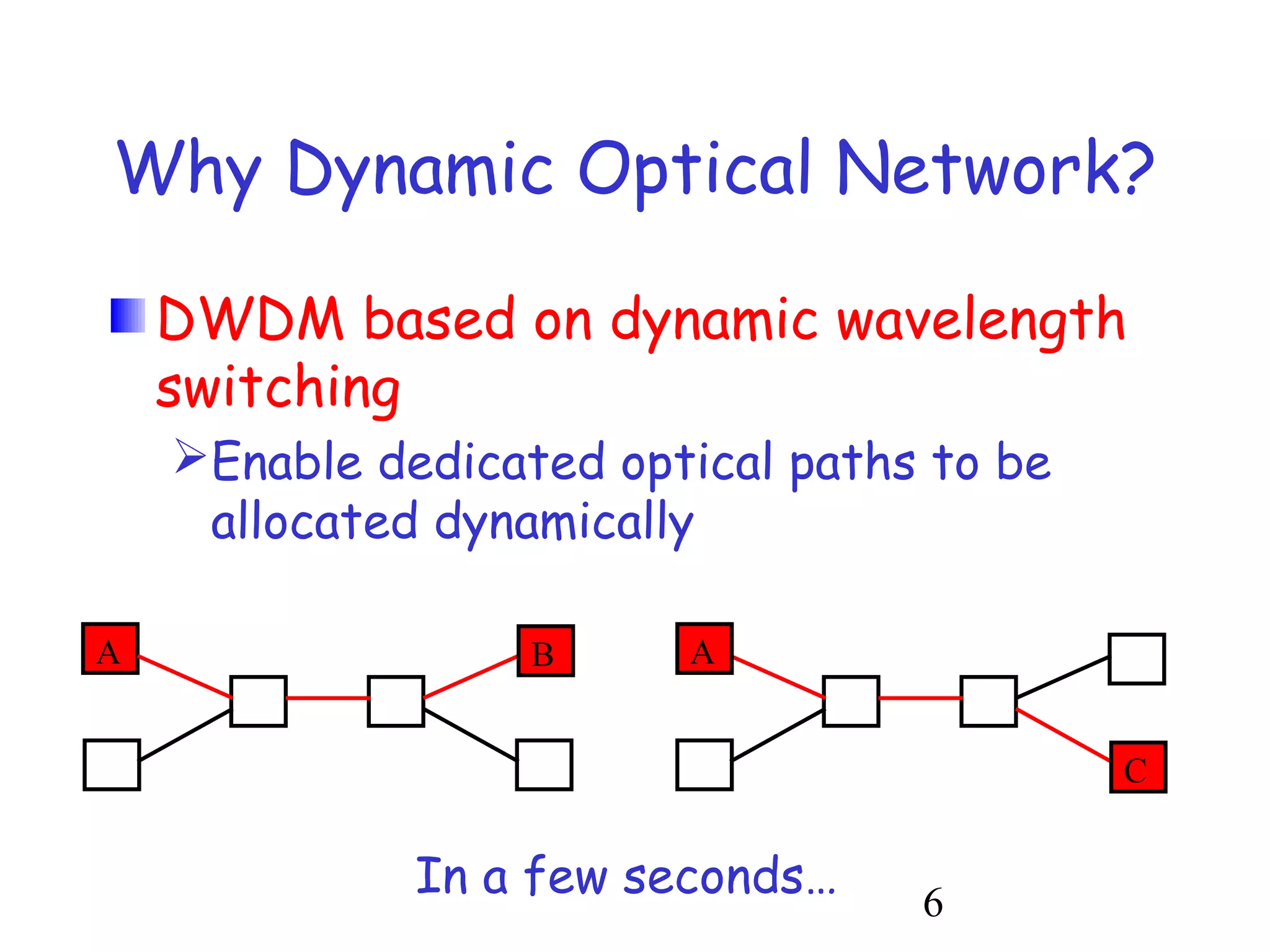

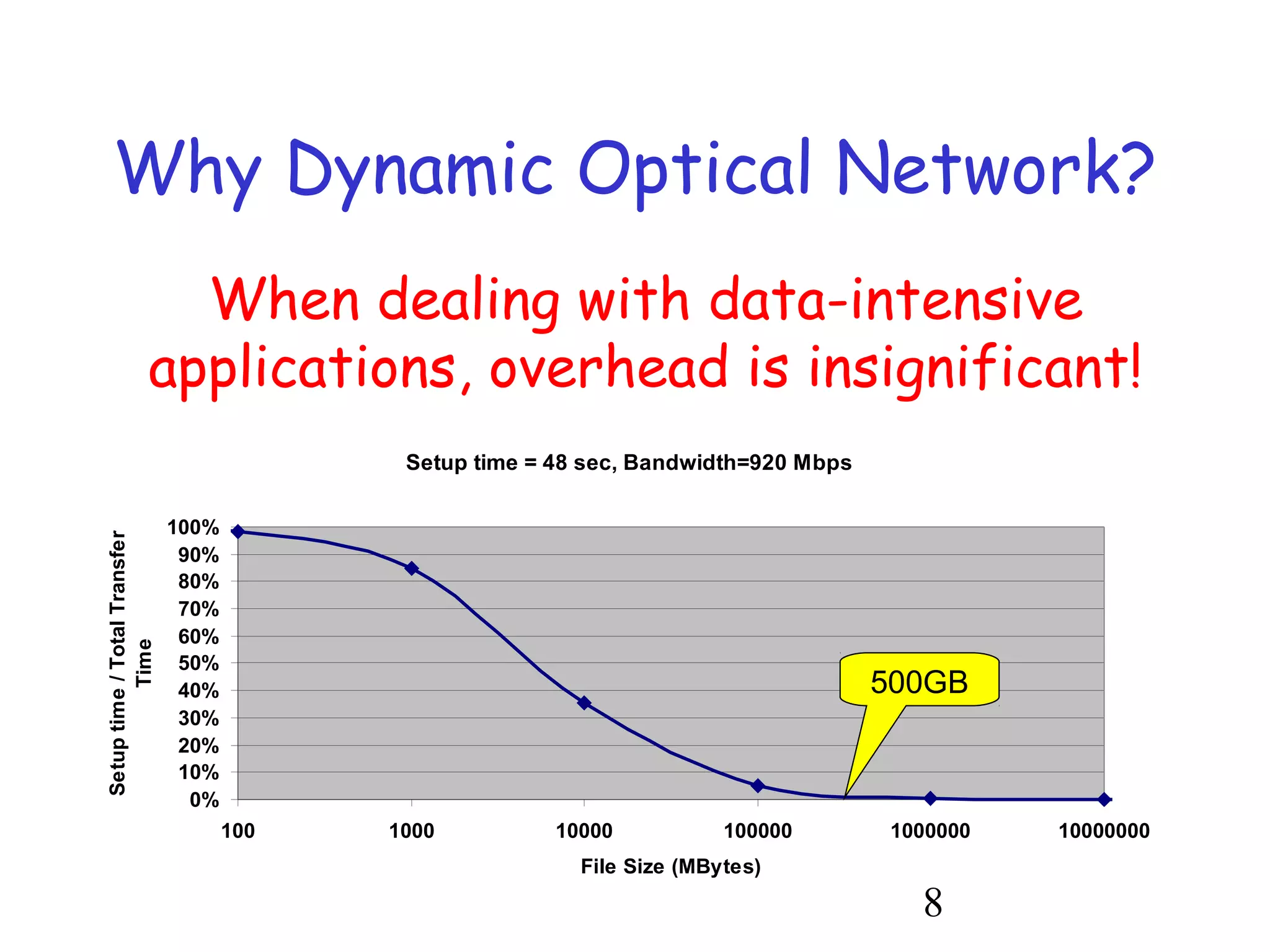

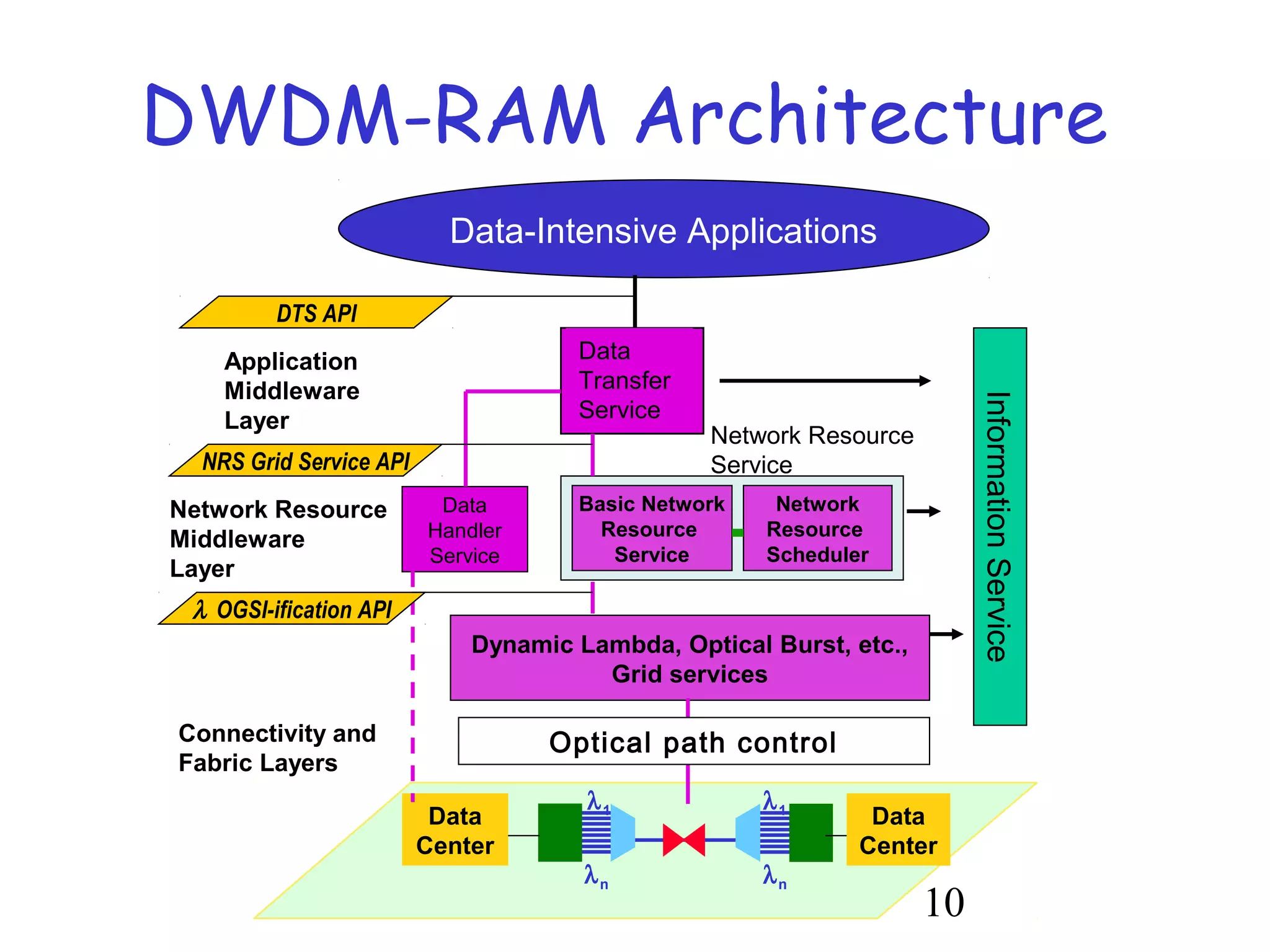

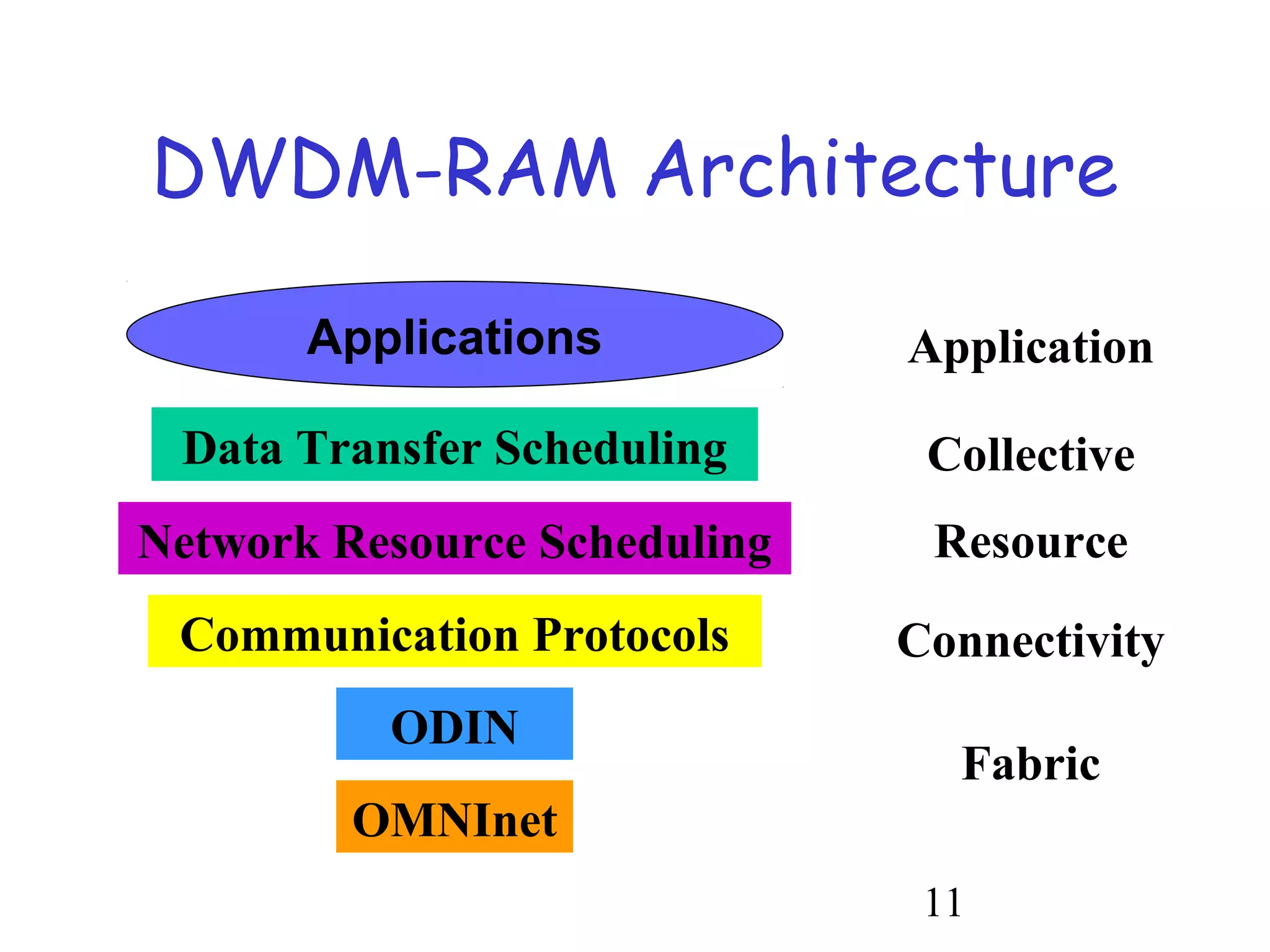

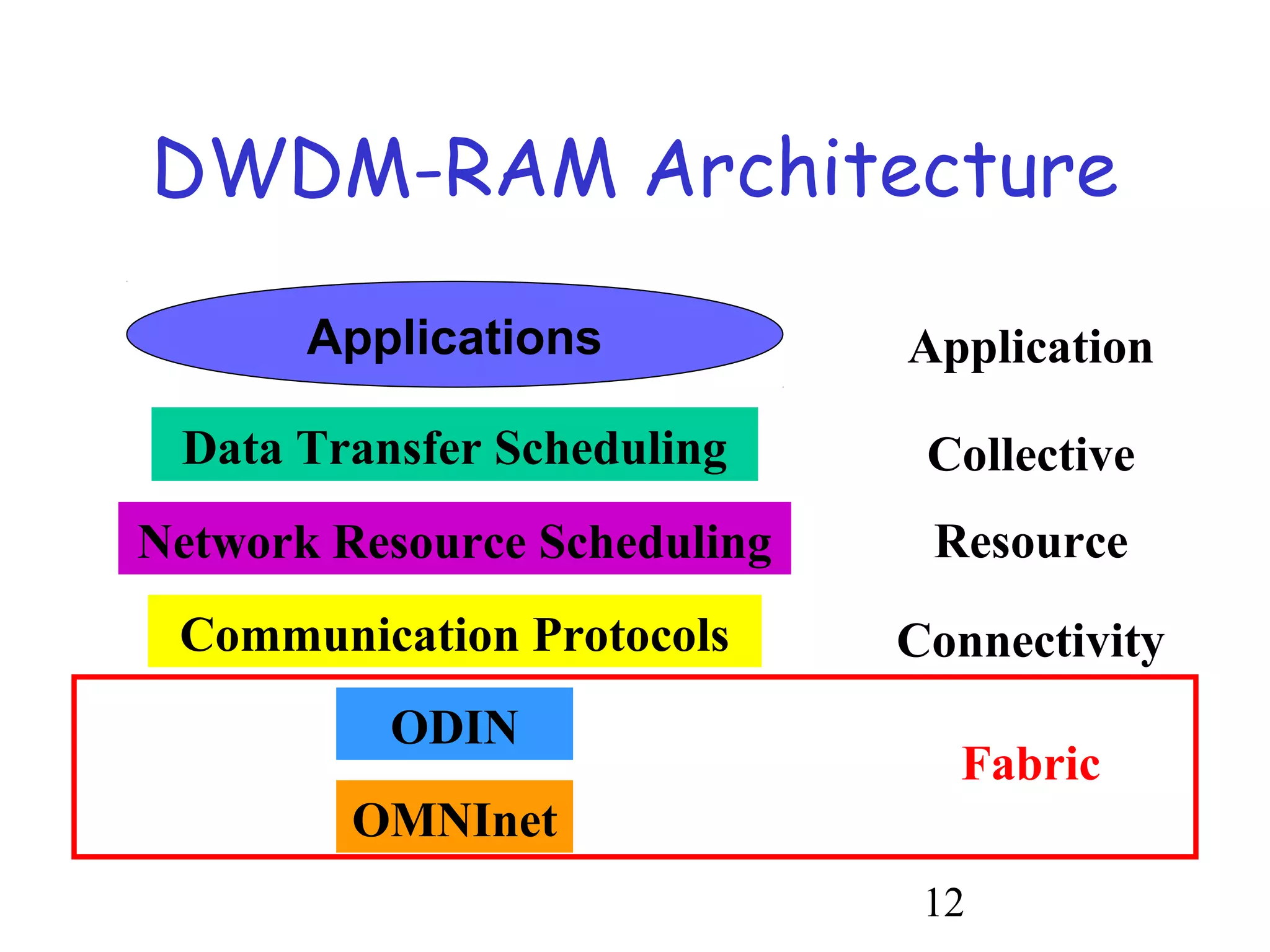

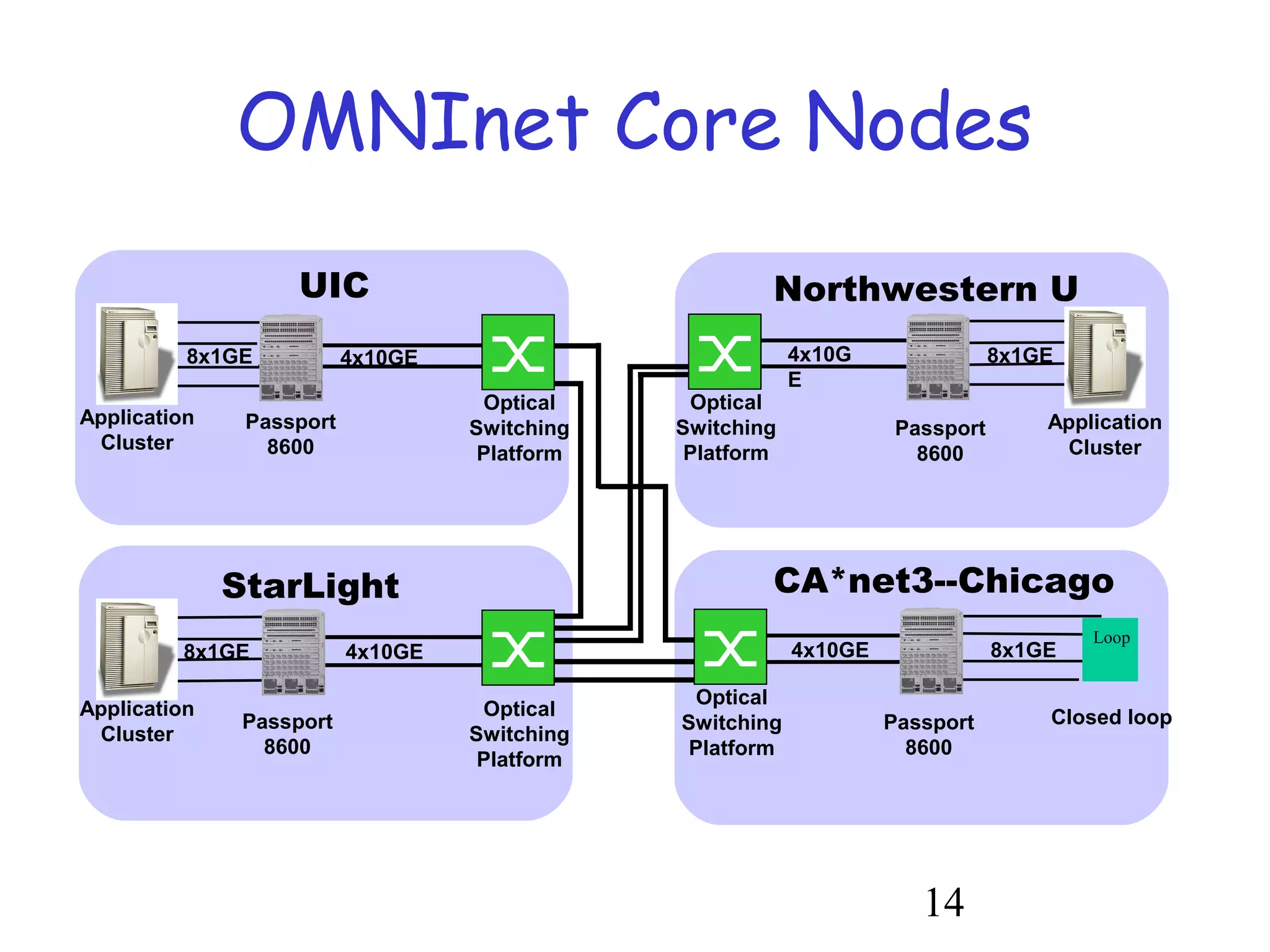

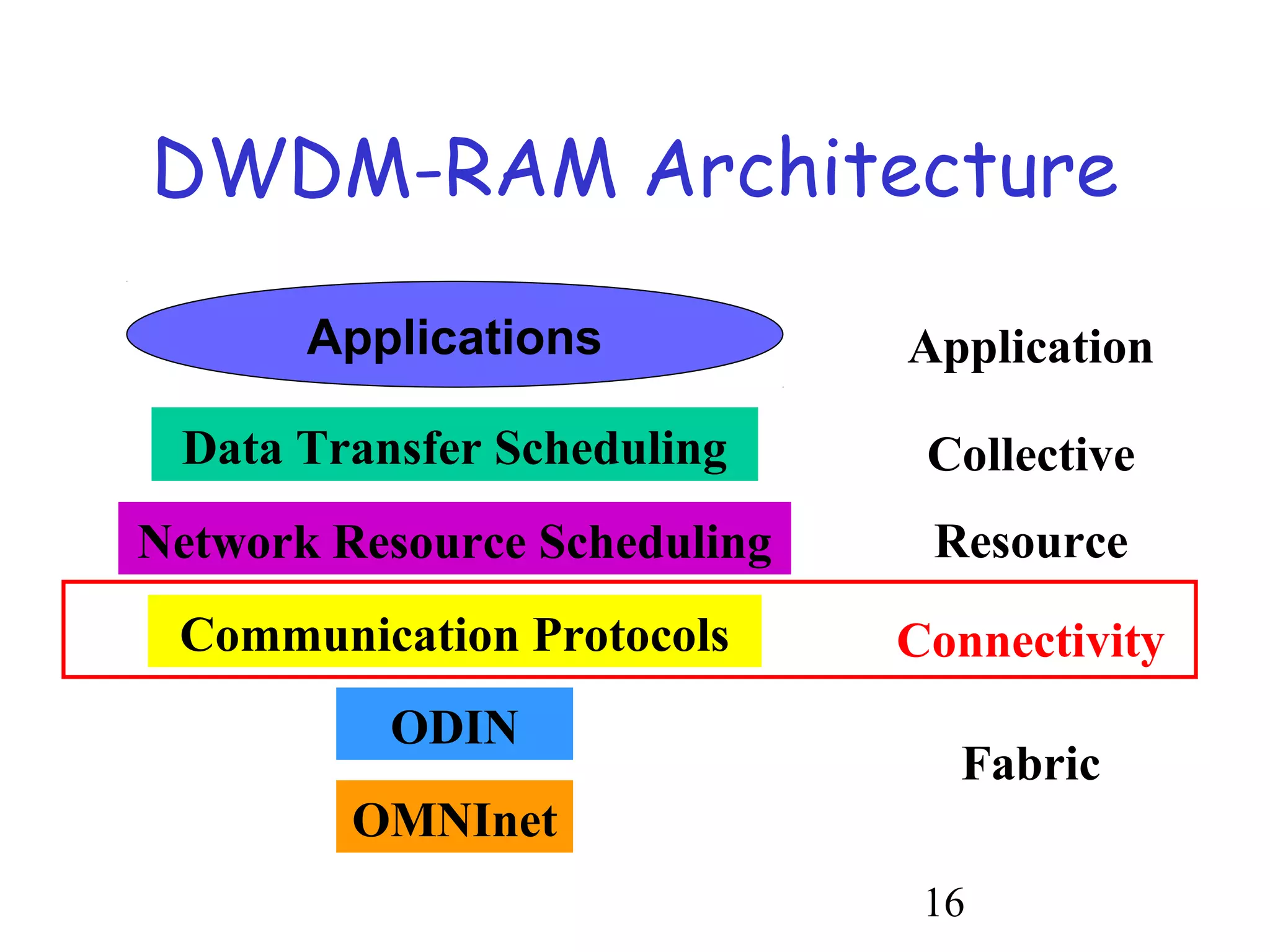

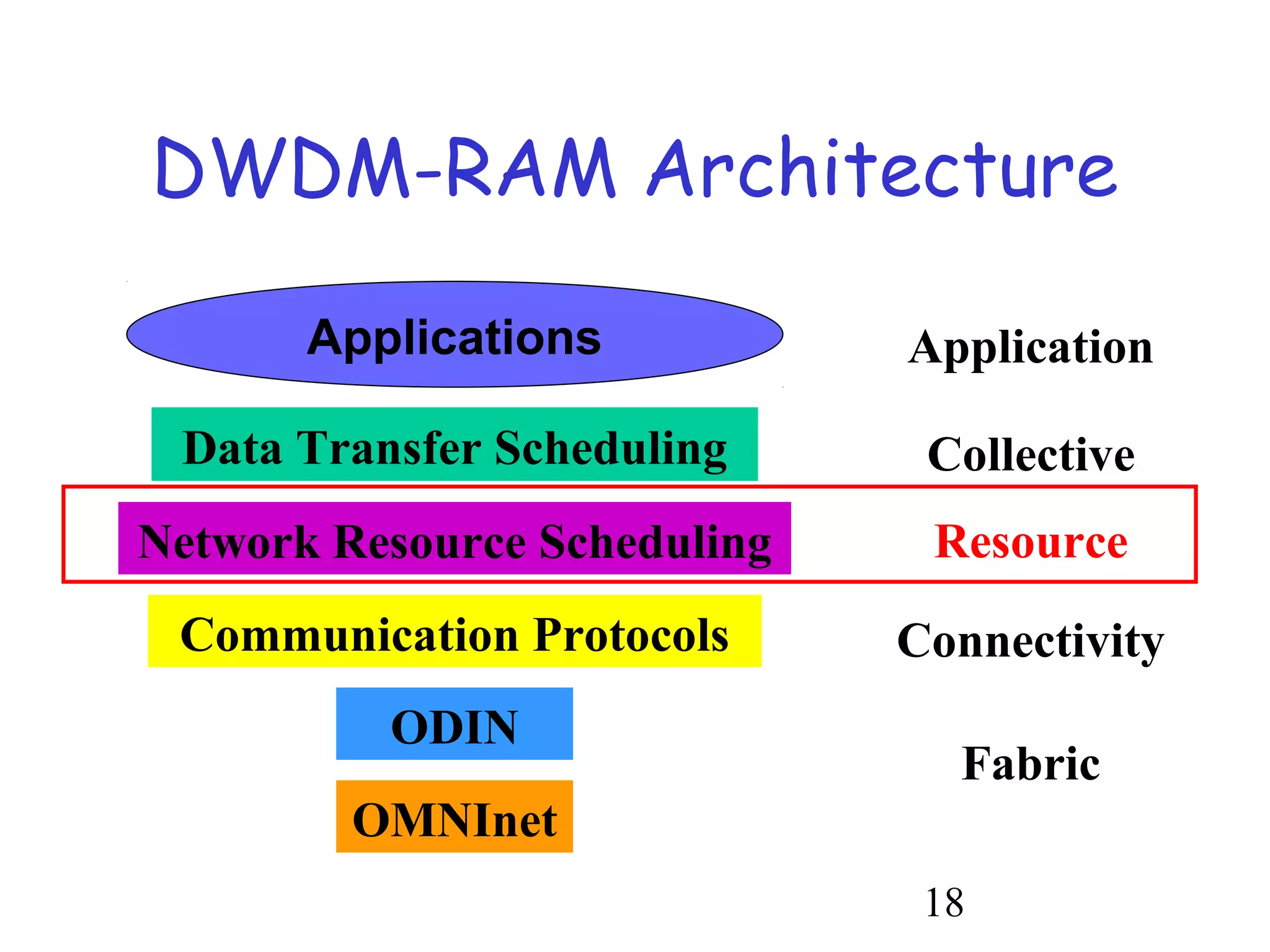

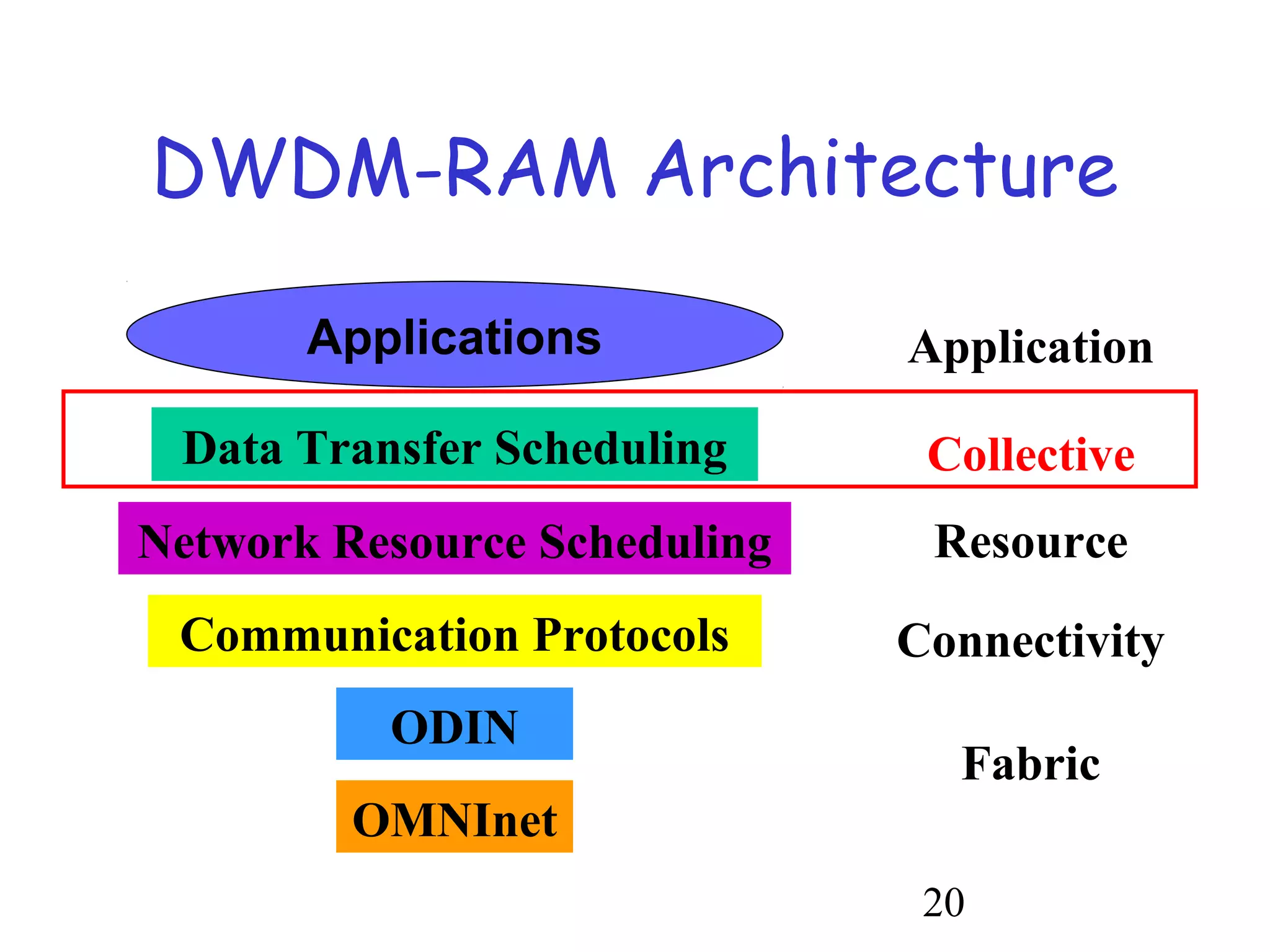

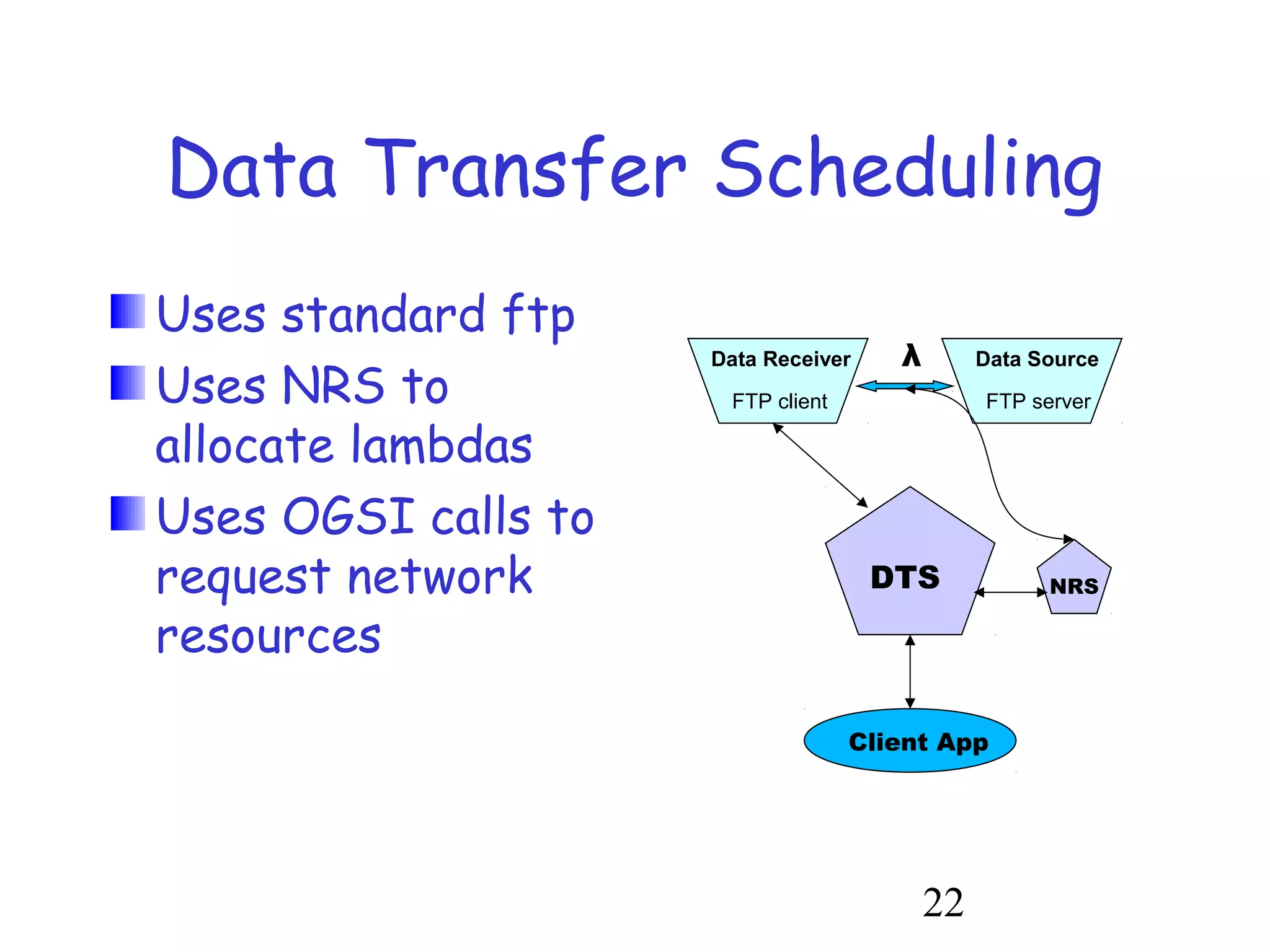

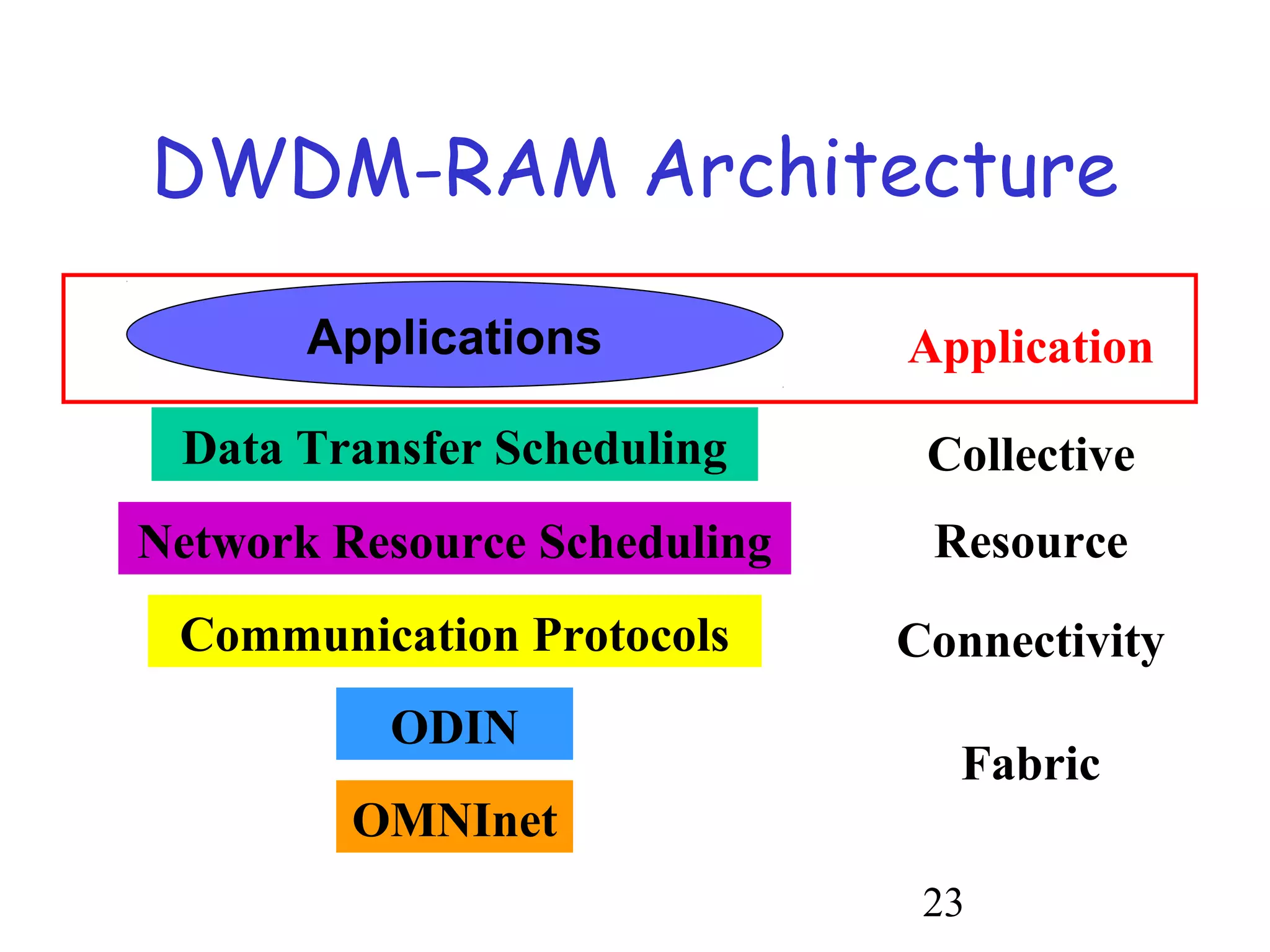

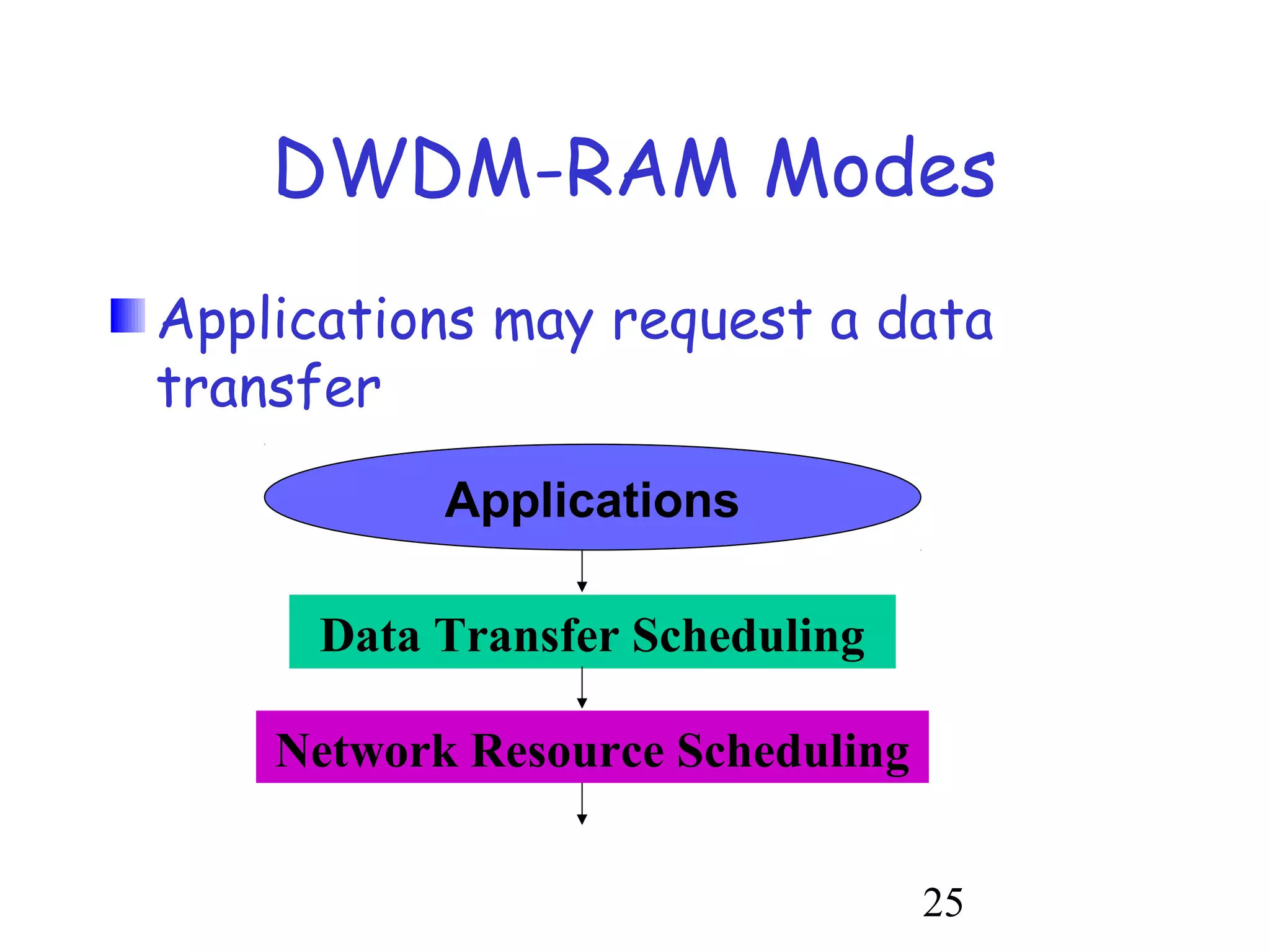

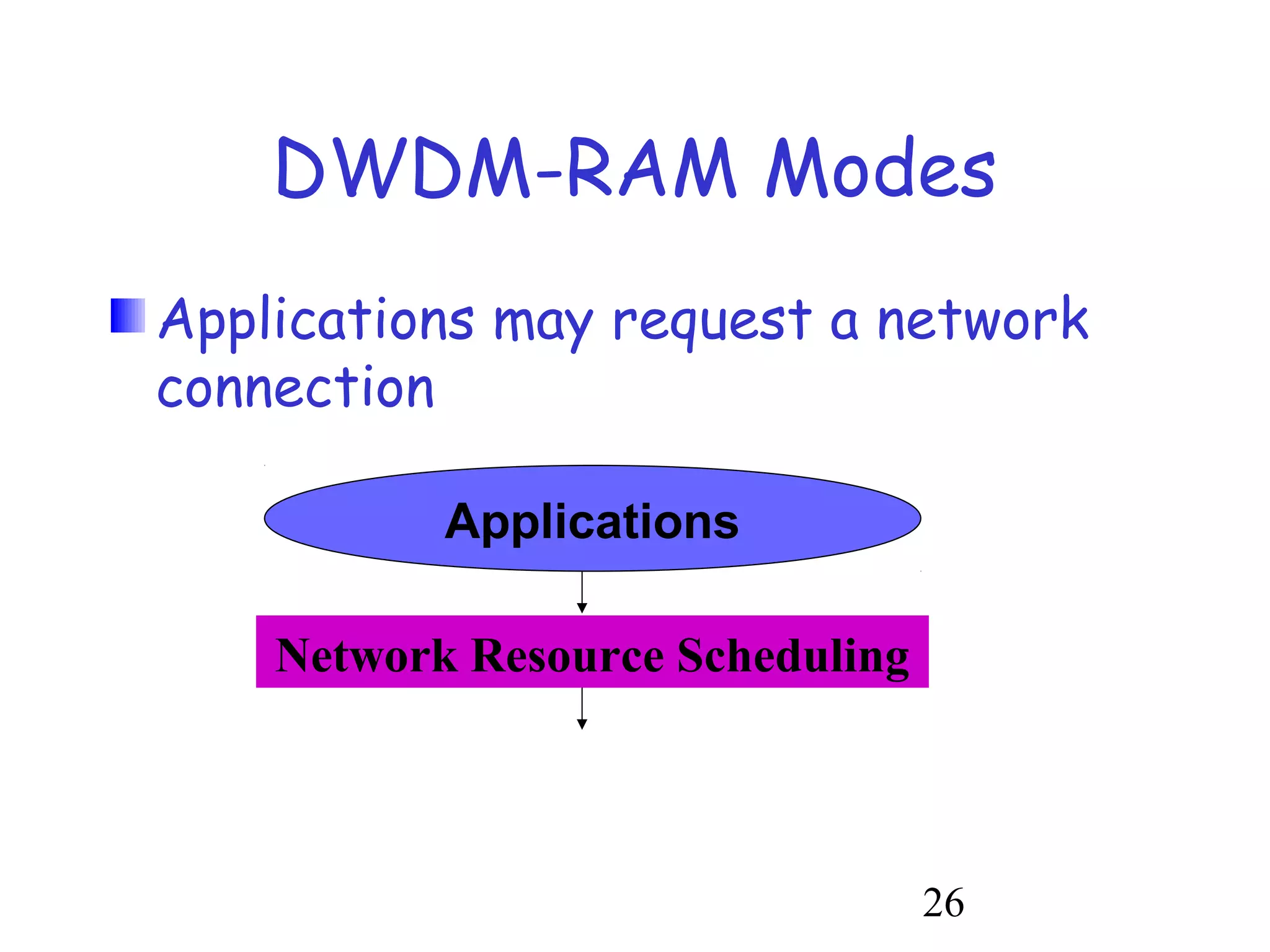

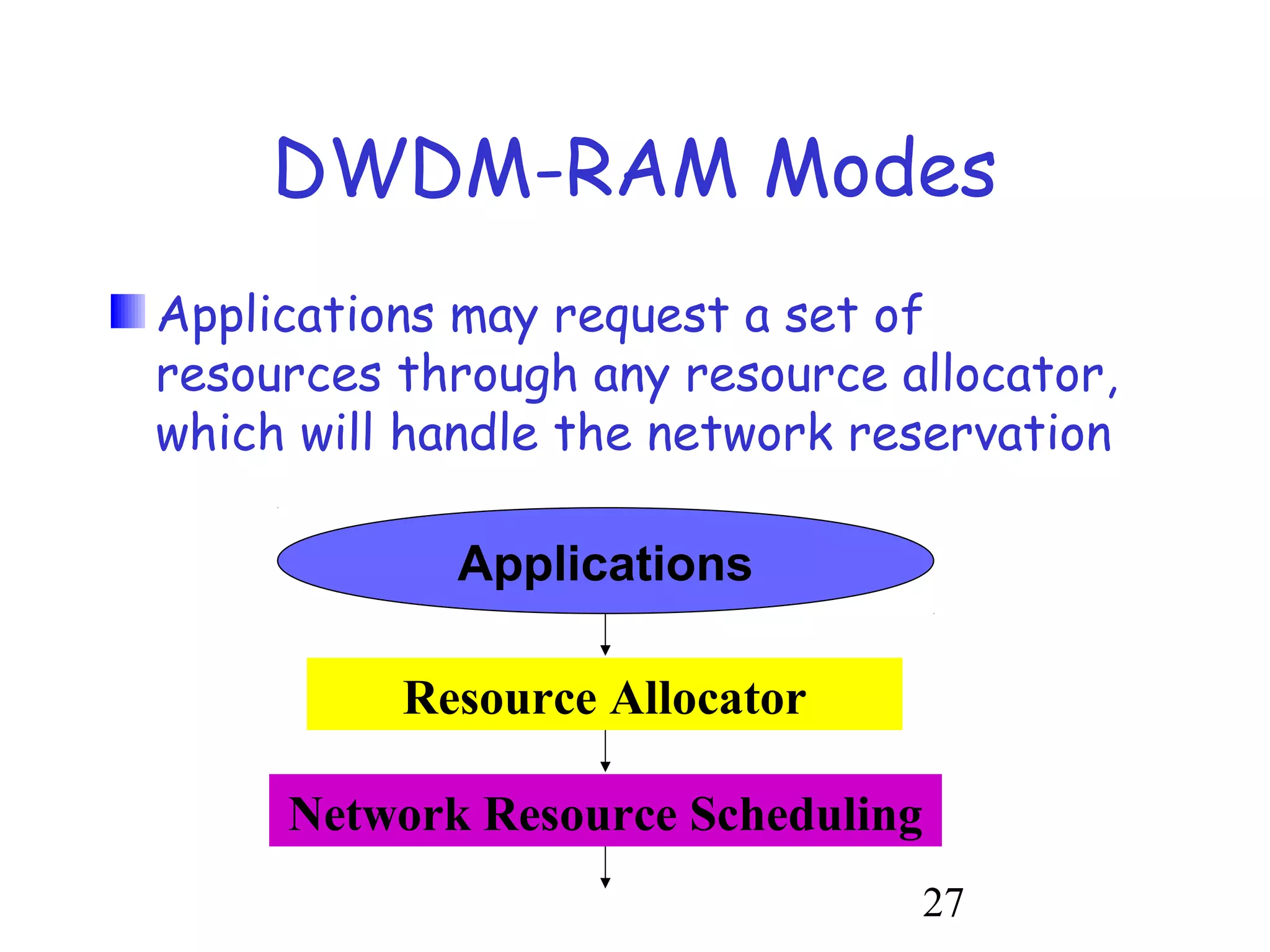

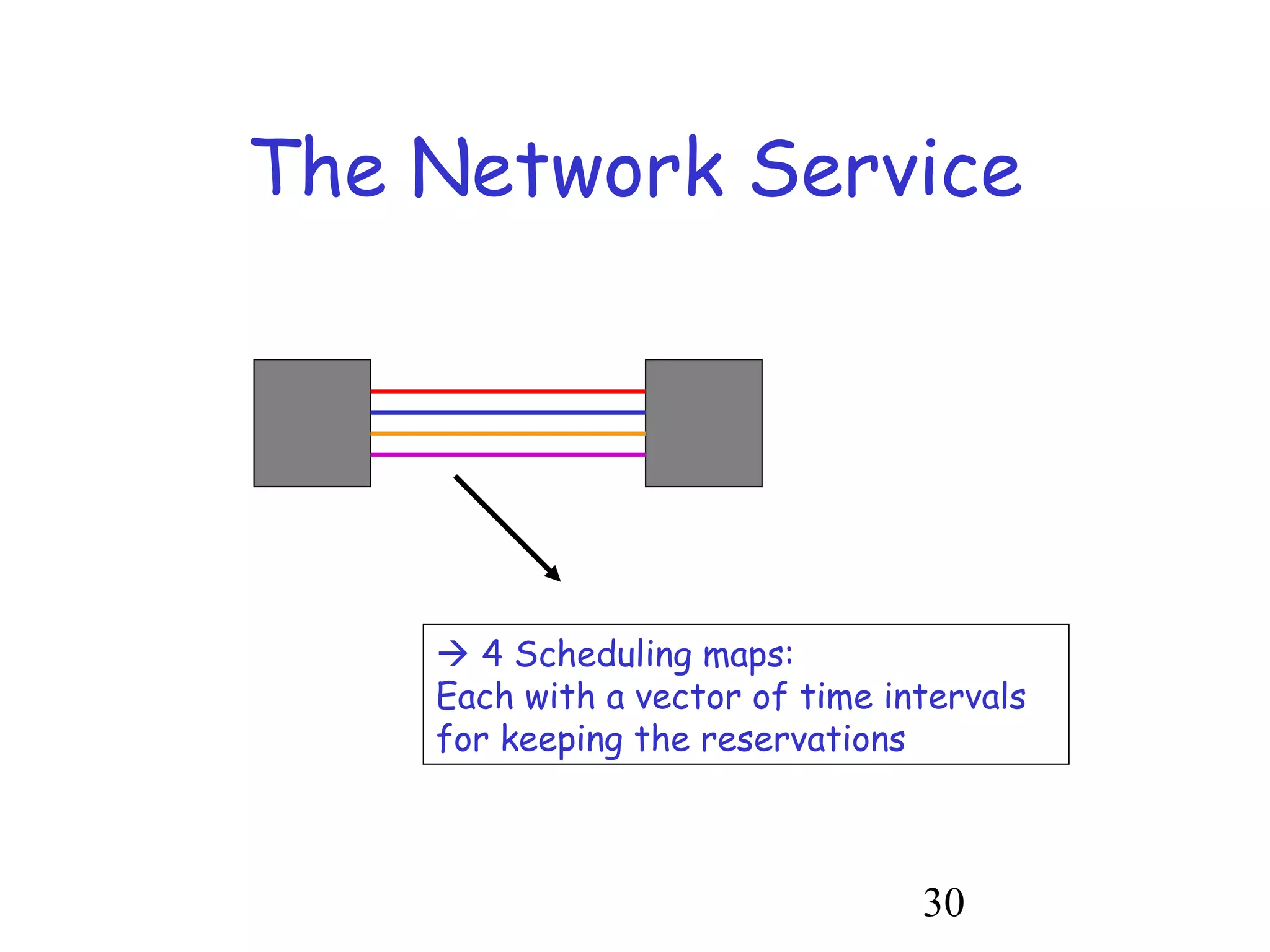

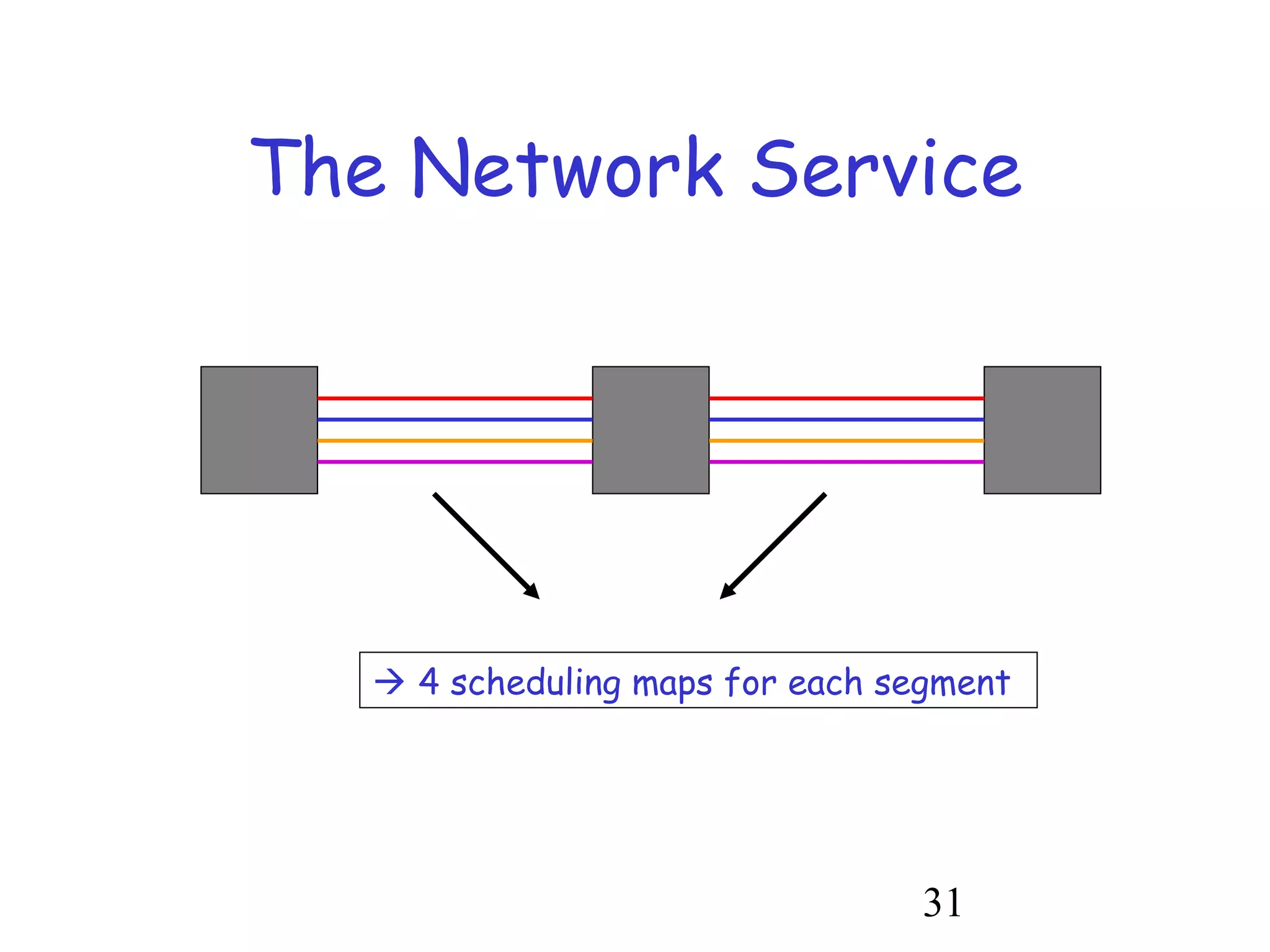

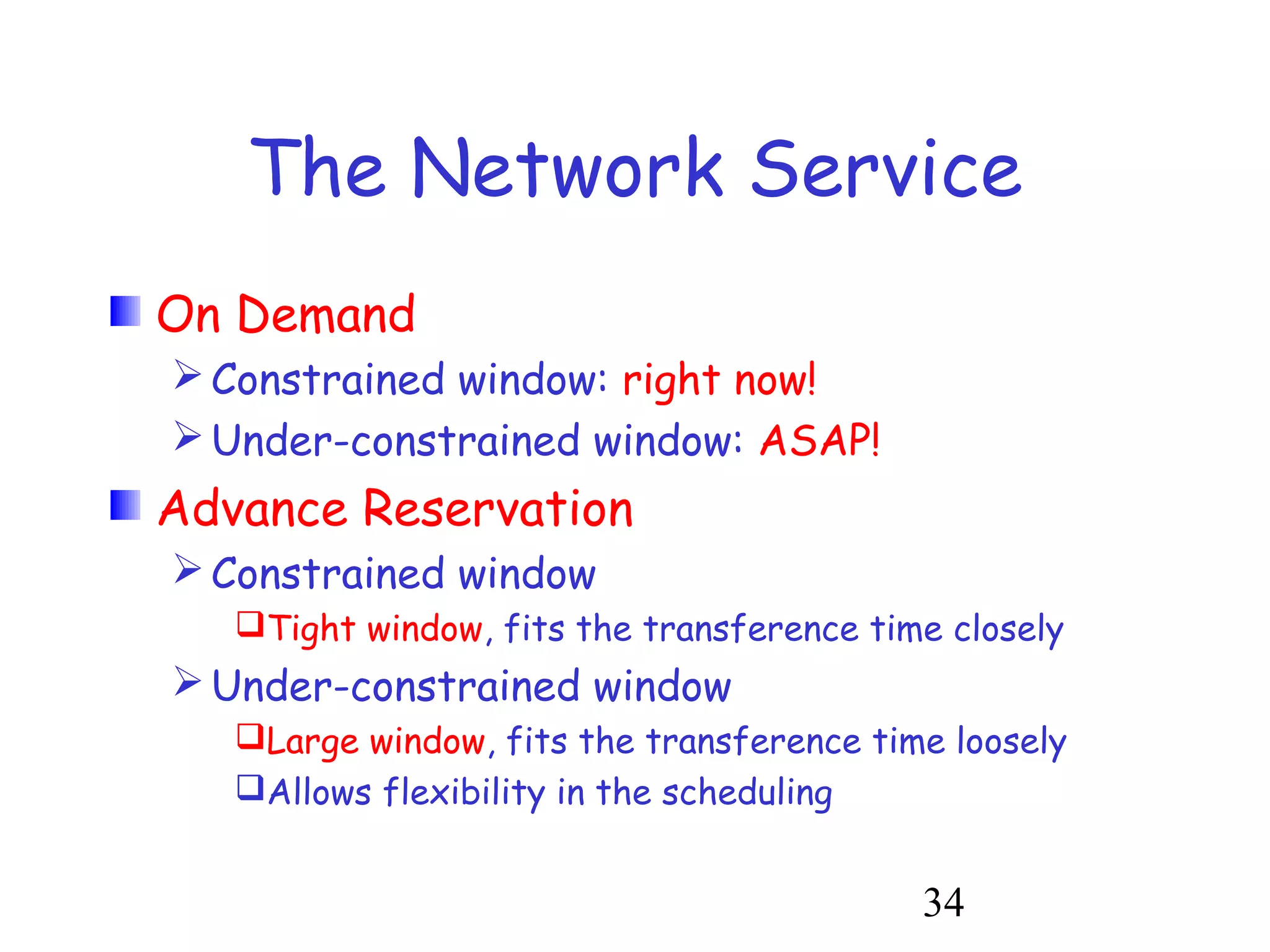

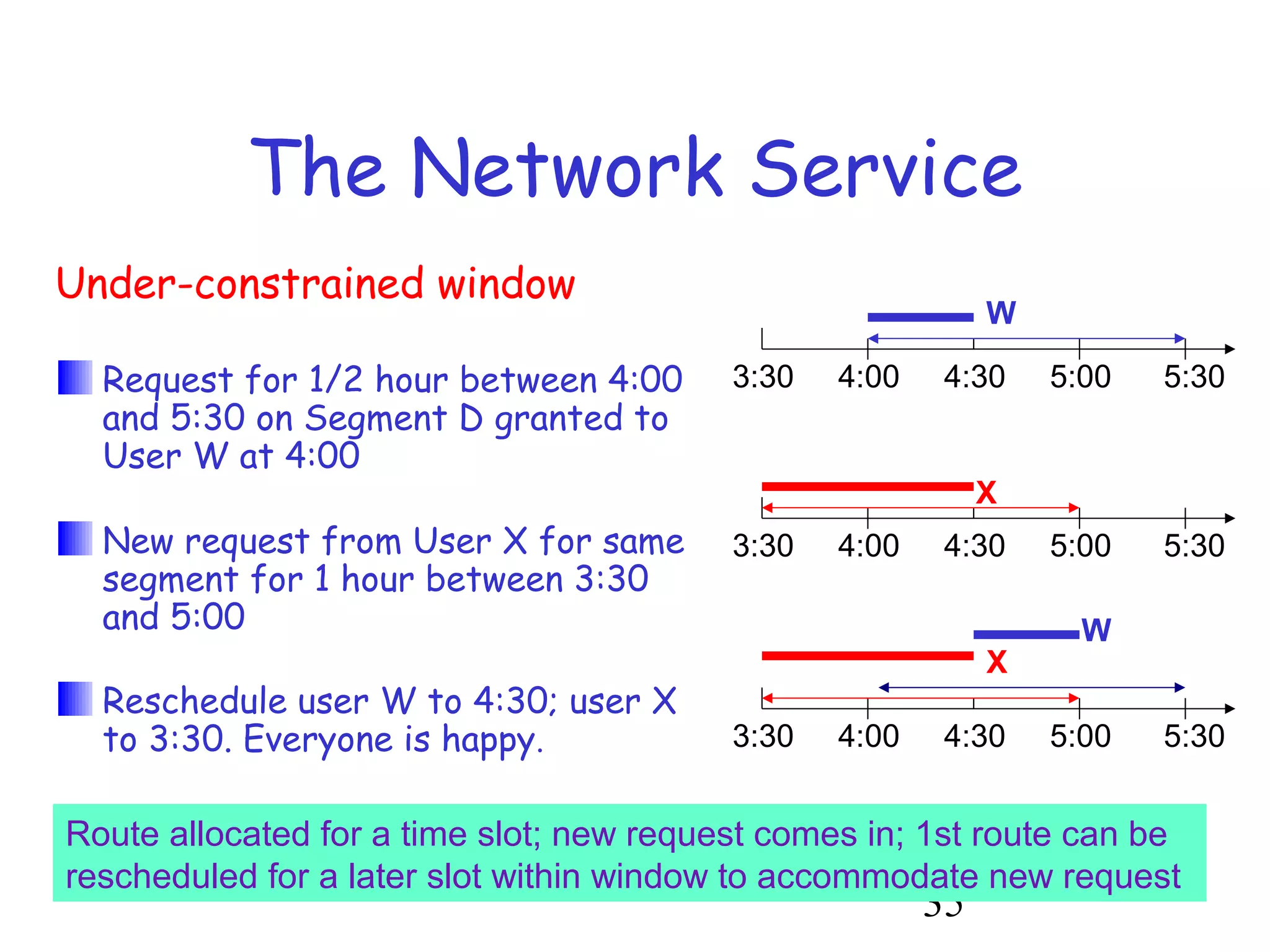

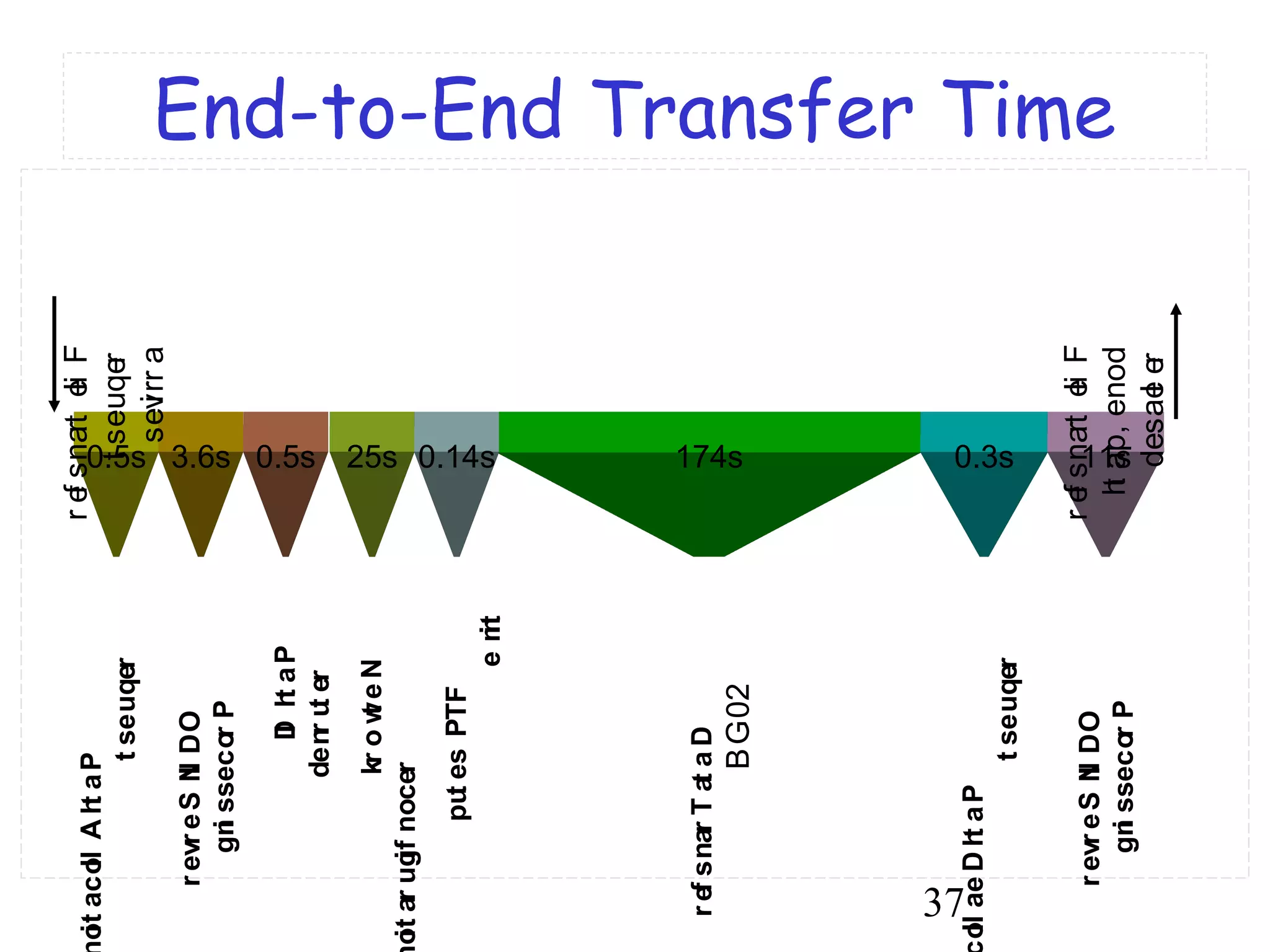

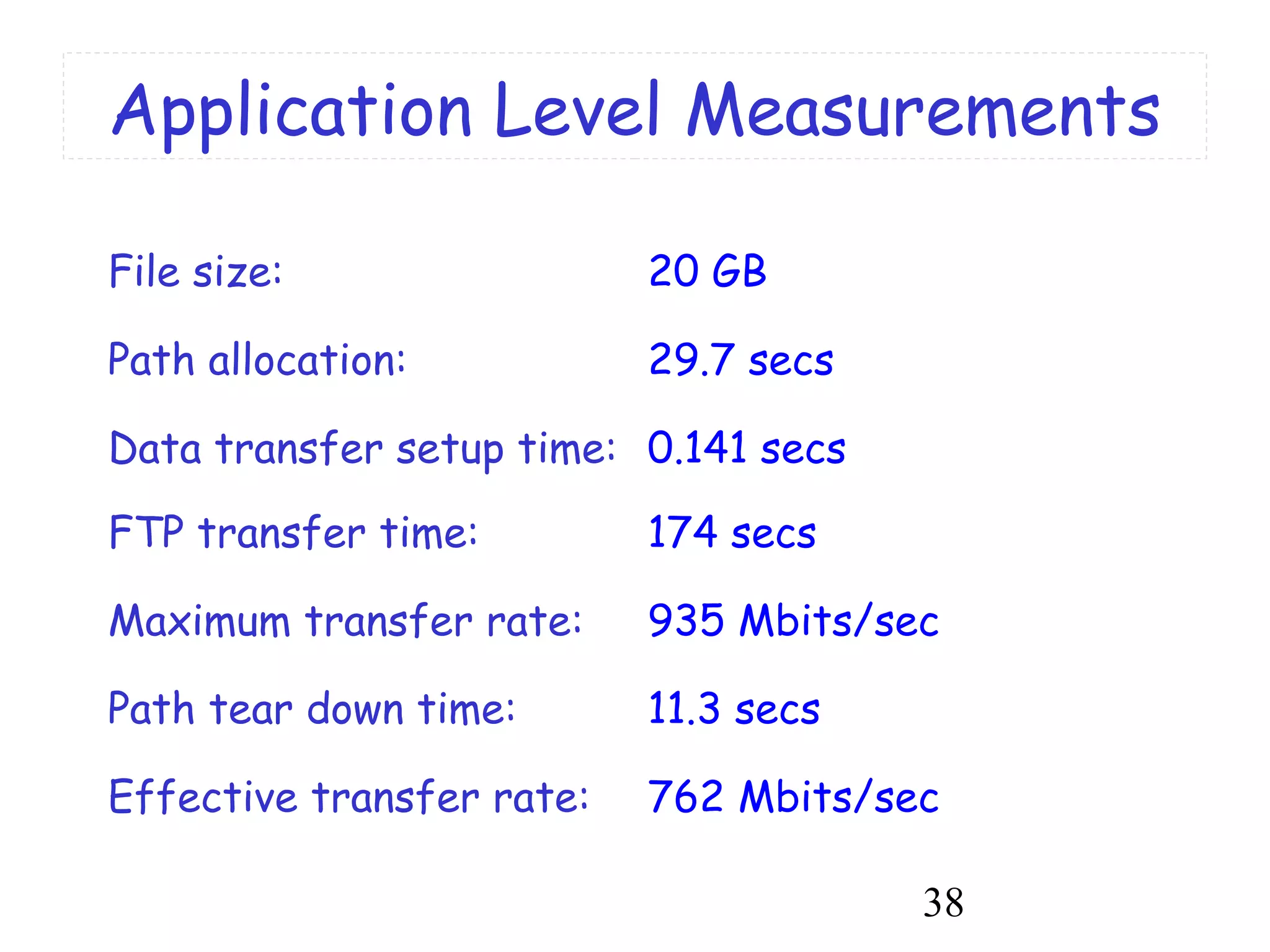

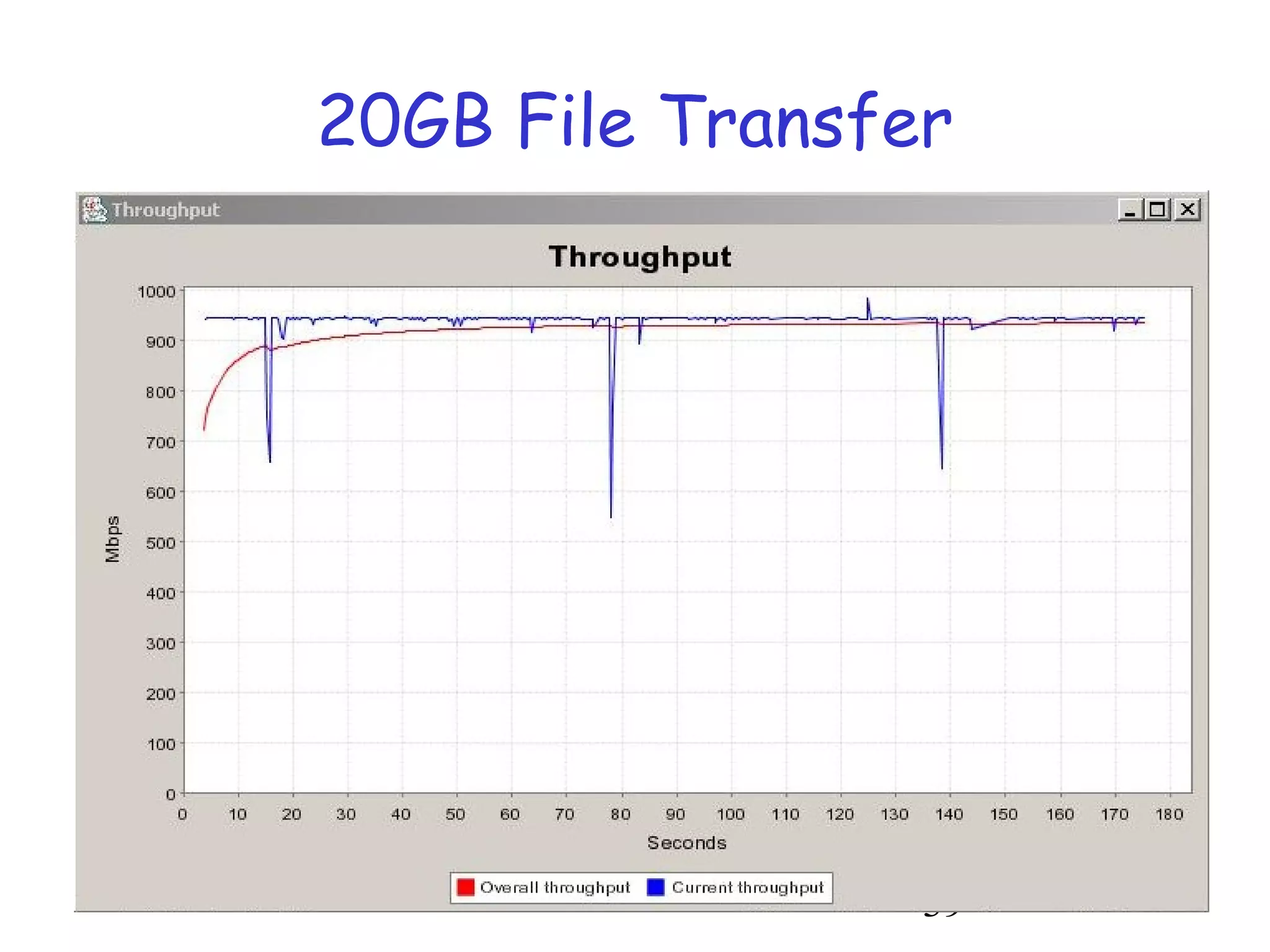

The document describes the DWDM-RAM project, which aims to enable dynamic optical networks for data-intensive grid applications. It outlines the architecture and services designed to provide lightpaths and emphasizes the advantages of dynamic wavelength switching for applications with high bandwidth needs. The project focuses on improving resource management and scheduling within optical networks so that data can be transferred efficiently across a range of applications.