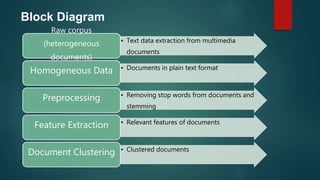

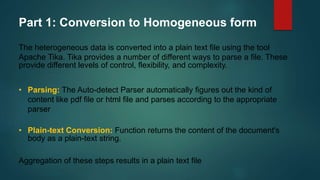

This document discusses document clustering. It begins with an introduction that defines document clustering as aiming to minimize within-cluster distances and maximize between-cluster distances. It then shows a block diagram of the clustering process, which includes preprocessing documents by removing stop words and stemming, extracting relevant features, and performing document clustering. The document clustering techniques are then described in three parts: converting heterogeneous documents to homogeneous plain text, extracting features like n-grams and part-of-speech tags, and performing k-means clustering on the feature space to group the documents.