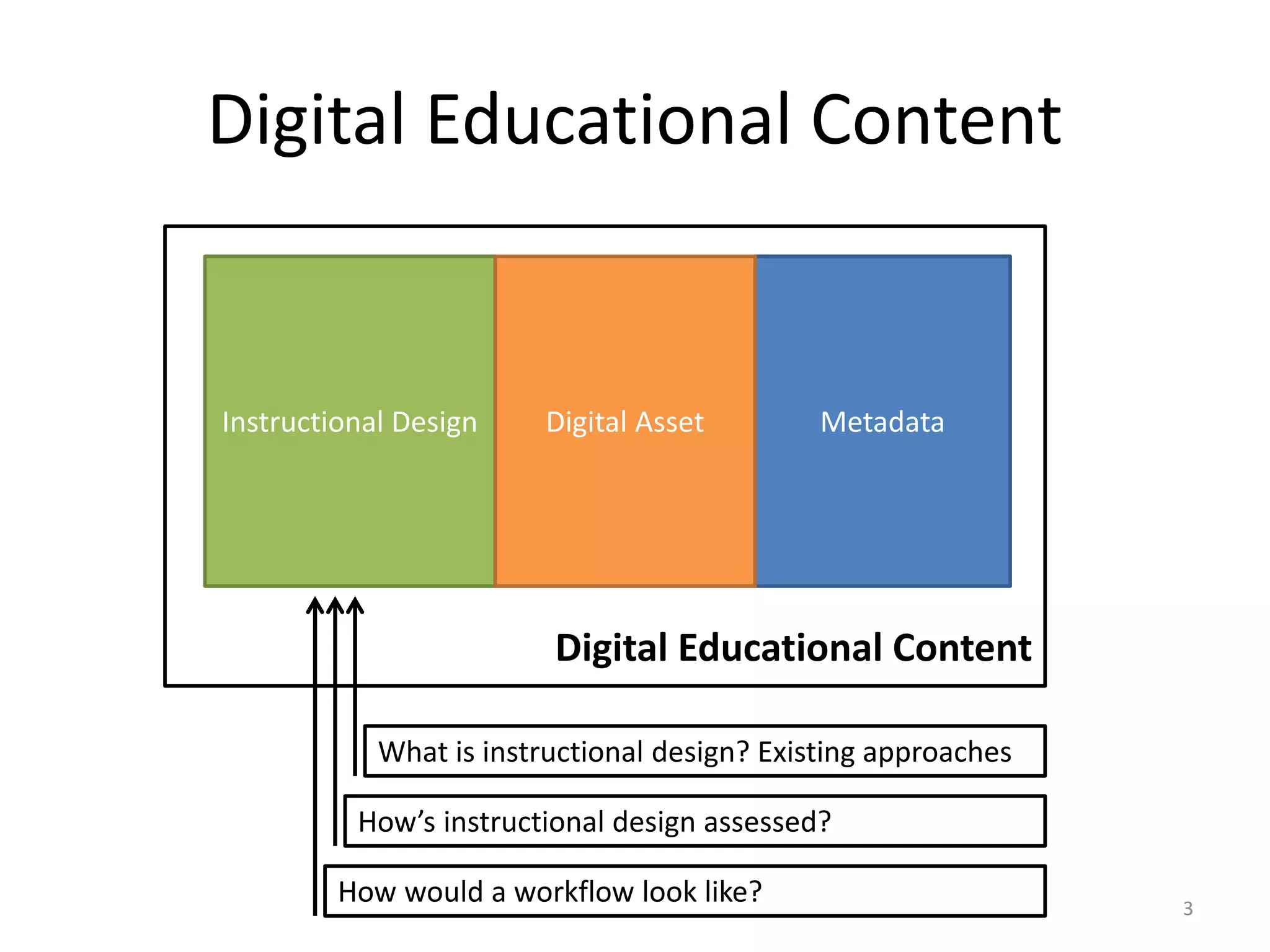

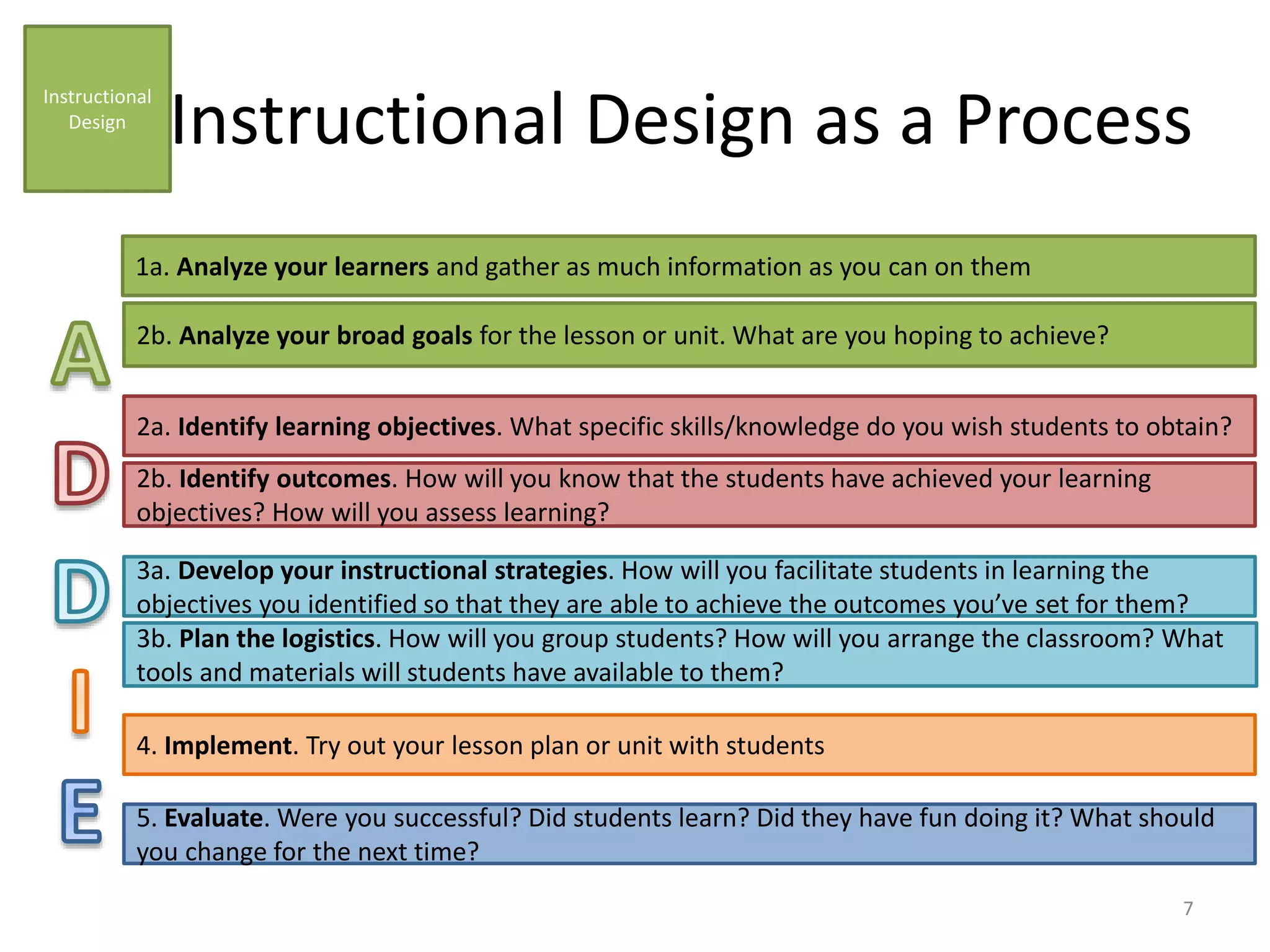

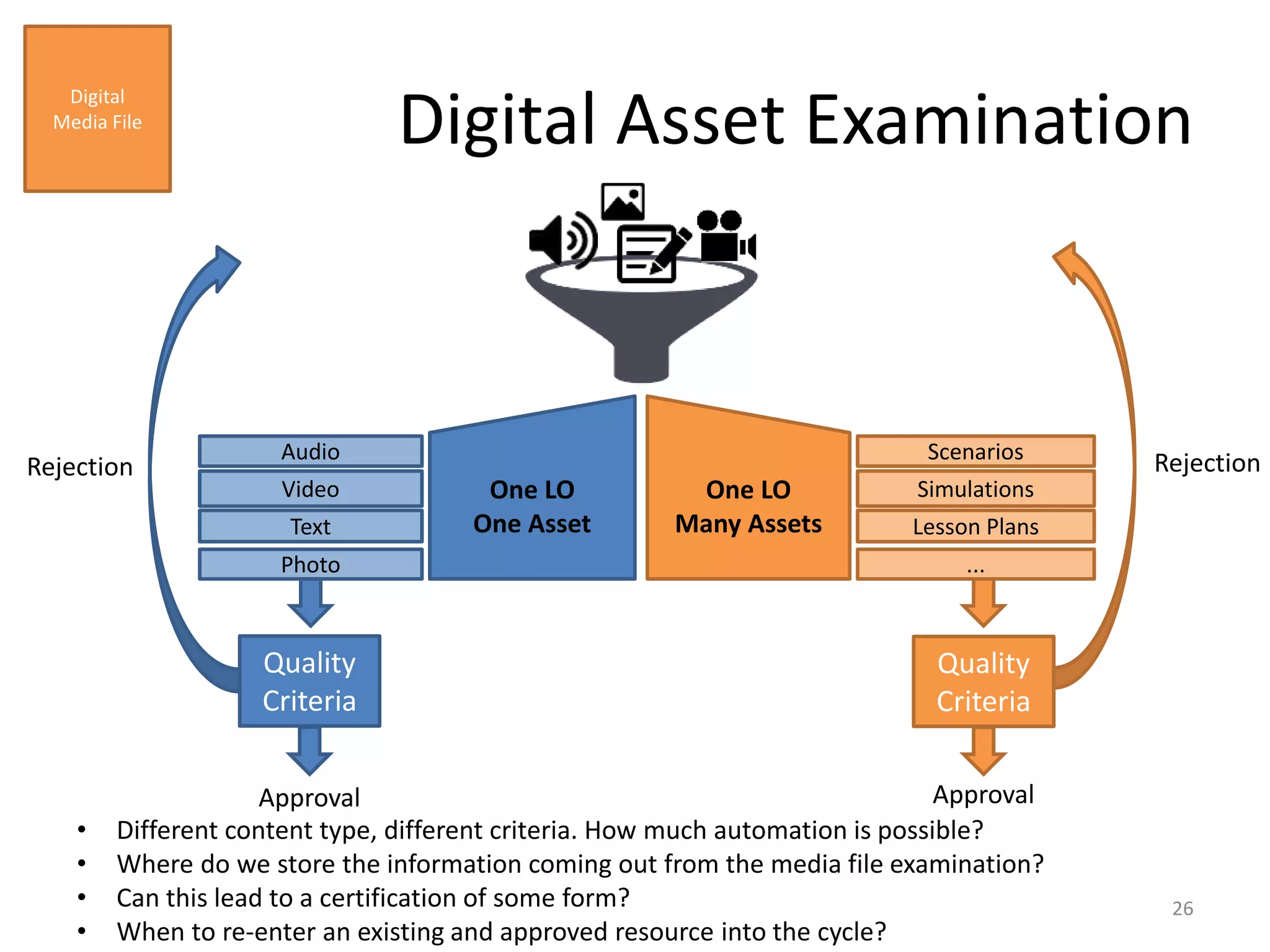

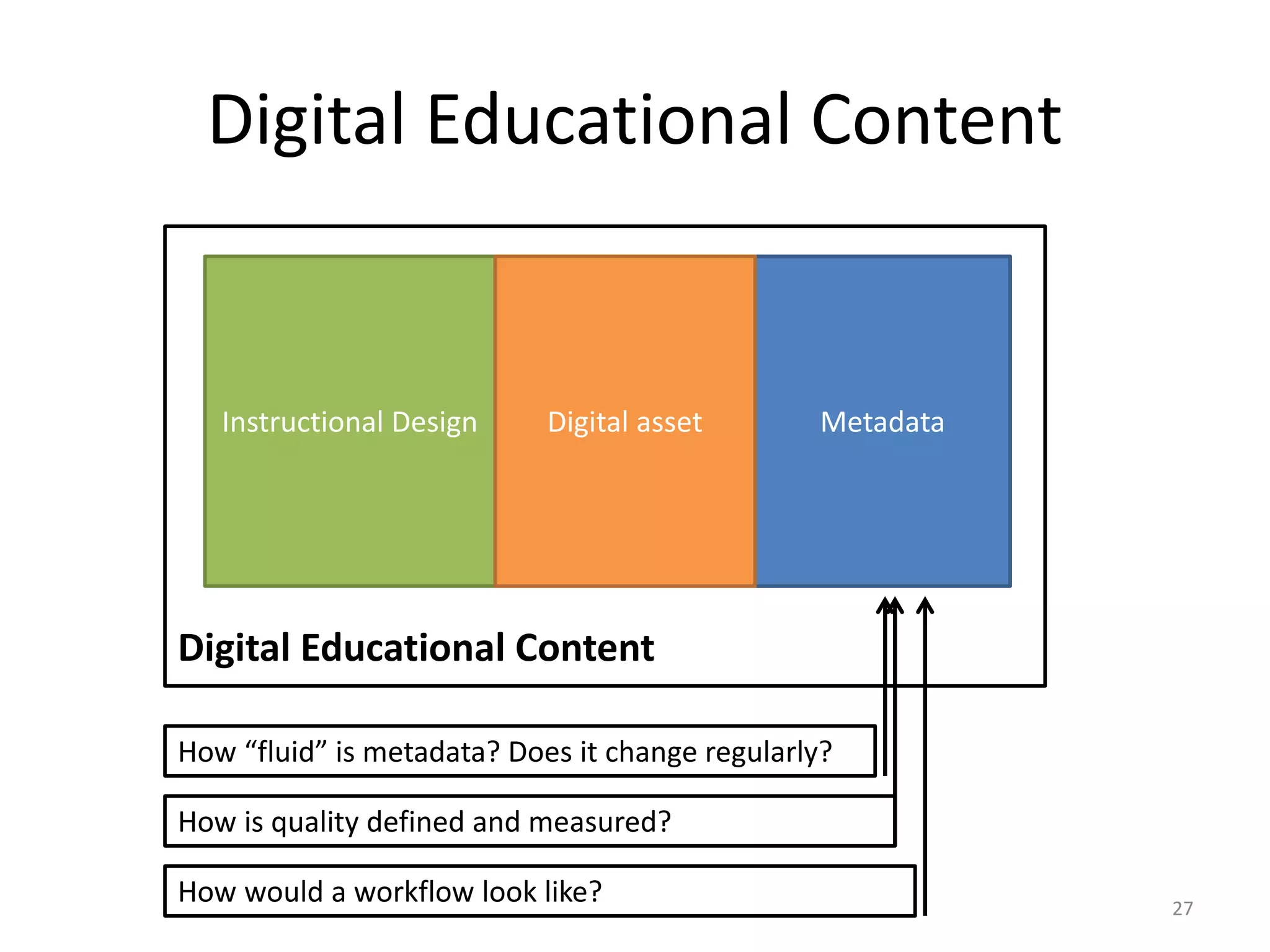

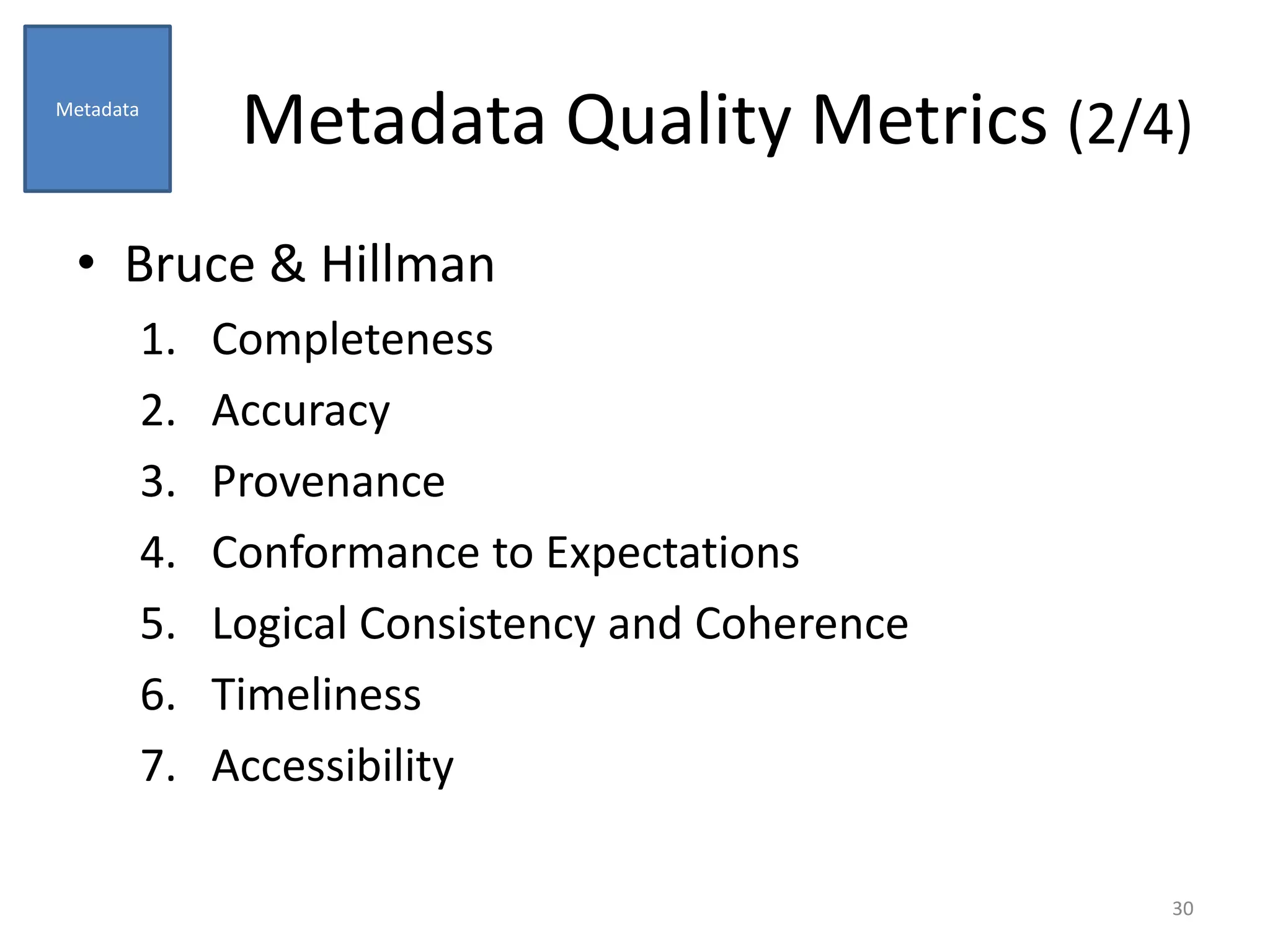

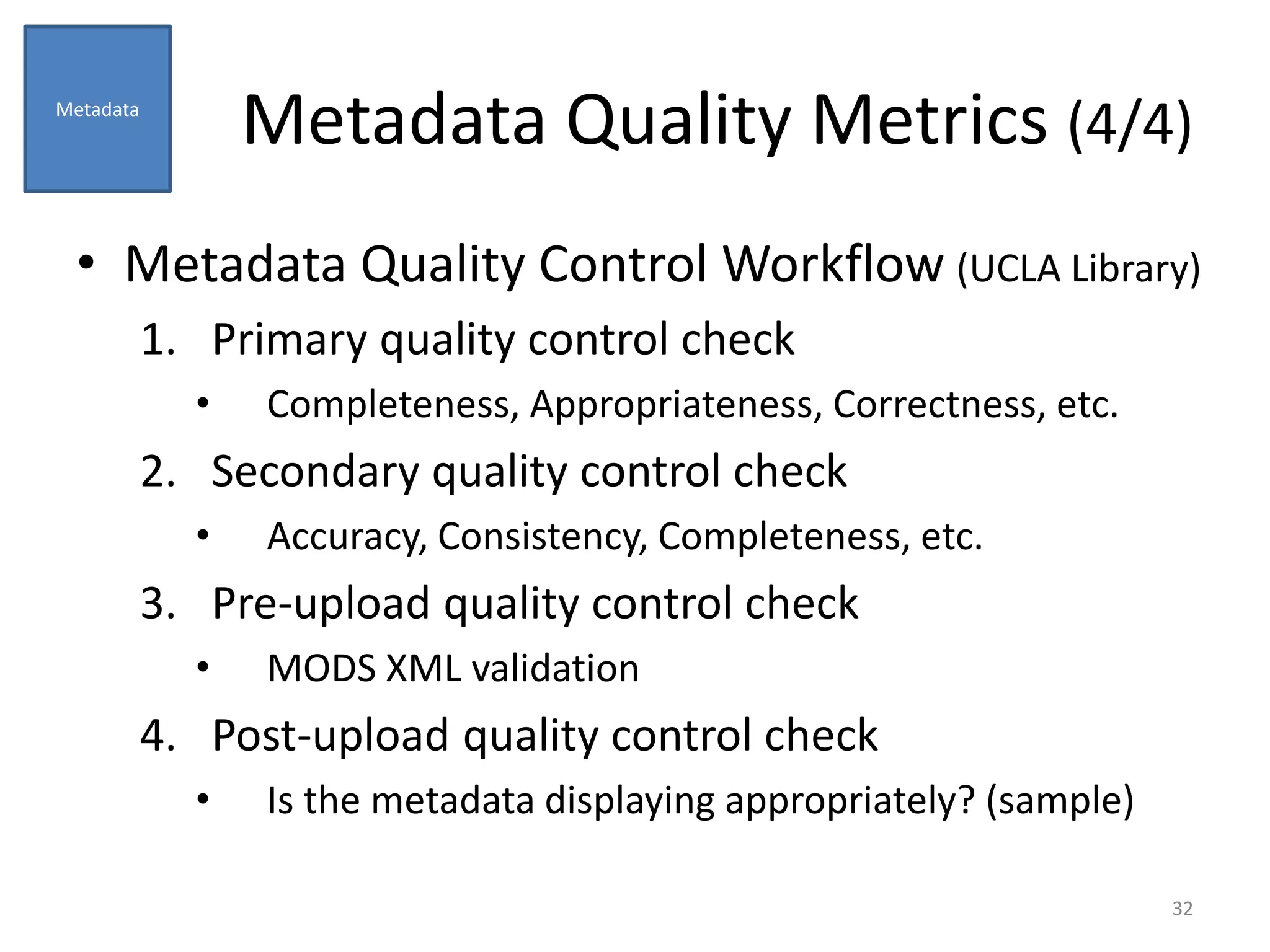

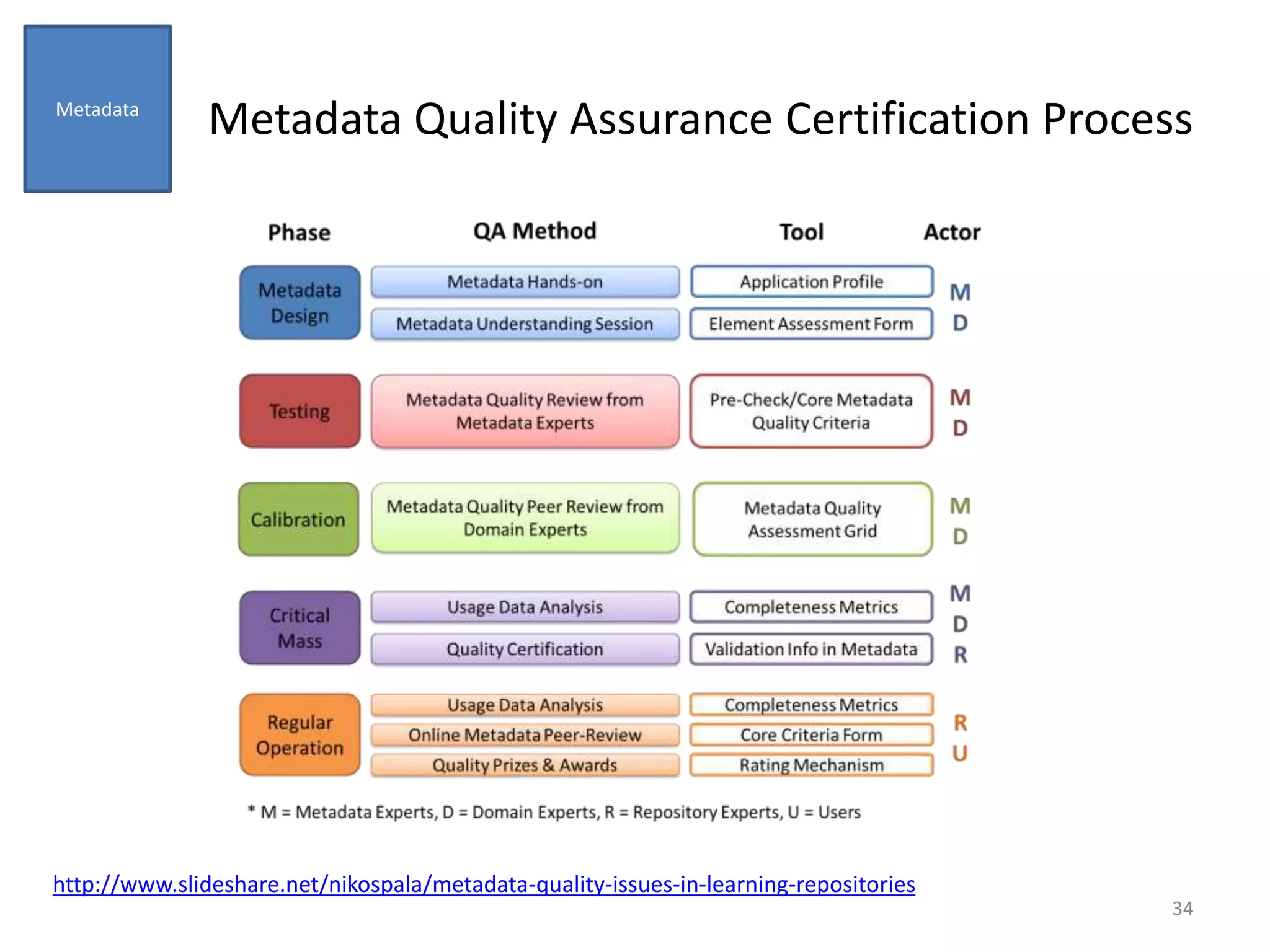

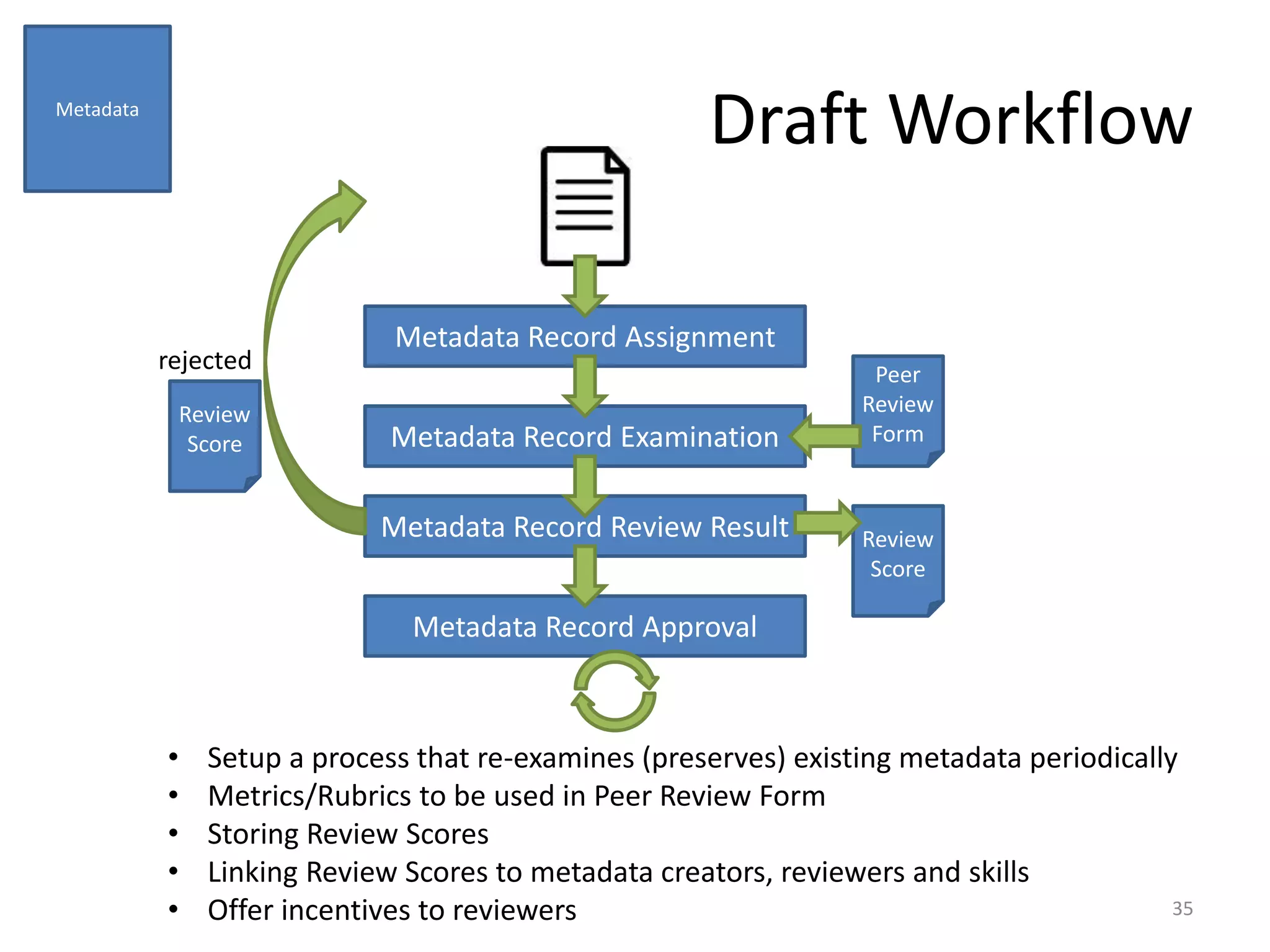

The document discusses quality assurance processes for digital educational content, focusing on aspects such as instructional design, digital assets, and metadata. It outlines quality criteria and models used in instructional design, including the ADDIE model (Analysis, Design, Development, Implementation, Evaluation), and emphasizes the importance of effective design principles and thorough evaluation methods. The document also addresses metadata quality and its influence on the usability and accessibility of educational resources.