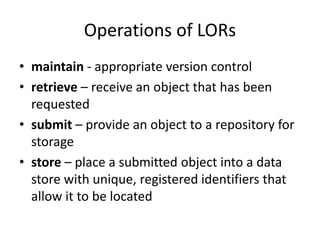

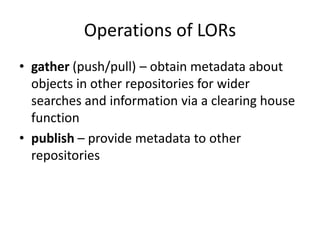

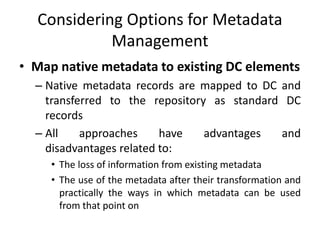

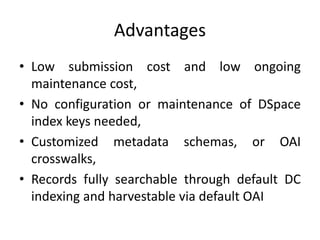

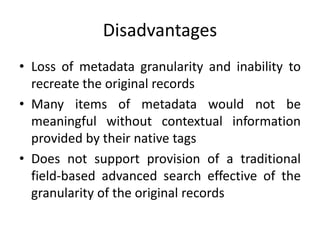

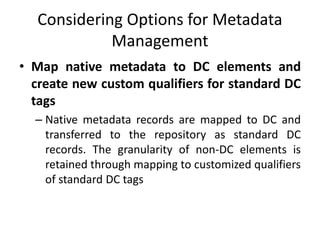

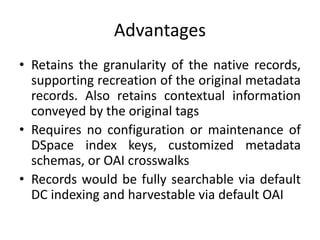

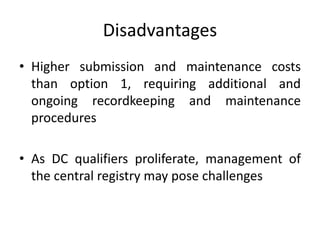

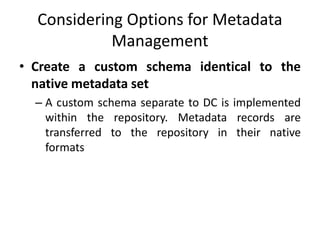

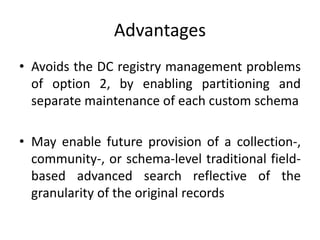

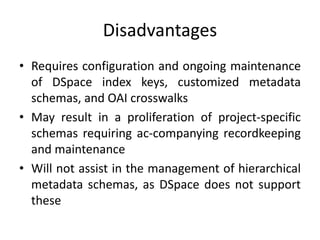

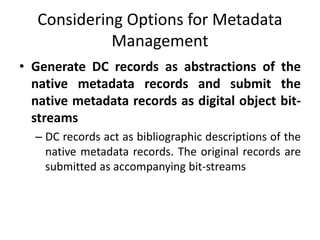

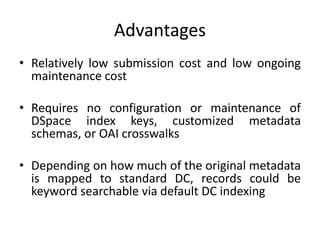

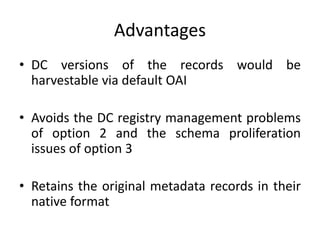

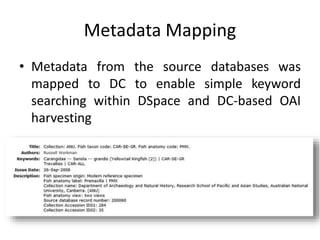

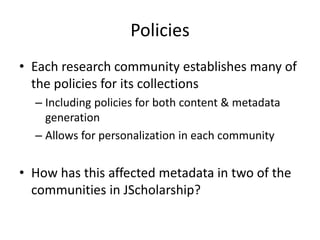

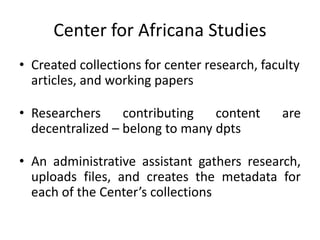

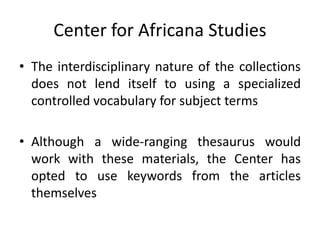

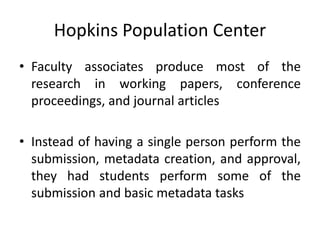

The document discusses the operational structure of Learning Object Repositories (LORs), including technologies for federation, harvesting, and metadata management, and presents related case studies. It draws on the University of Sydney Library and JScholarship at Johns Hopkins University, detailing their approaches to metadata creation, their submission processes, and their guidelines for managing repository collections. It also highlights the challenges and advantages of various metadata management strategies, emphasizing the importance of preserving granularity and context in metadata records.