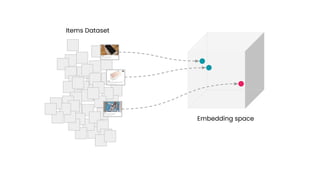

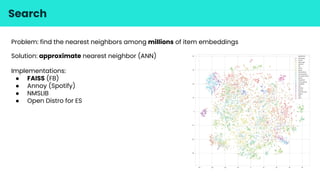

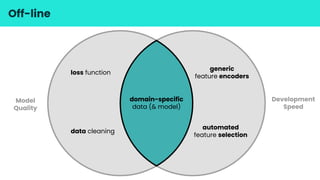

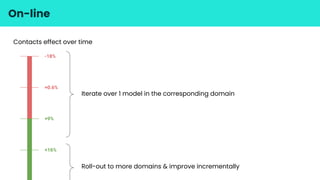

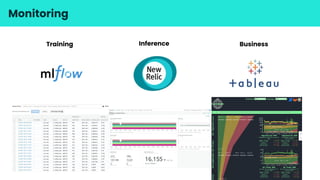

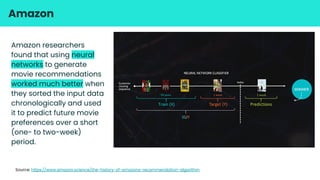

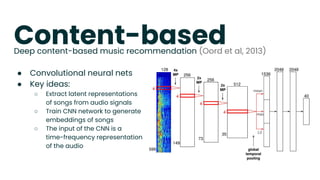

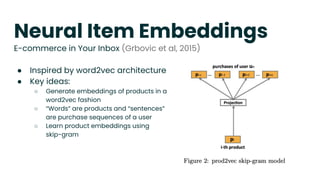

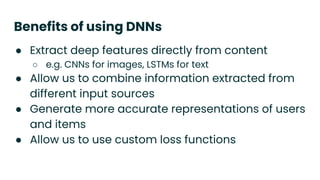

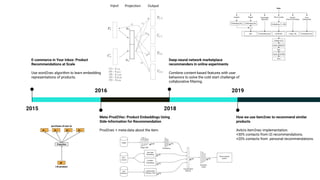

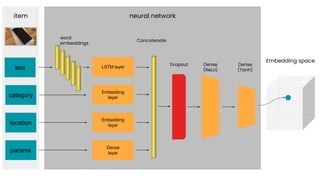

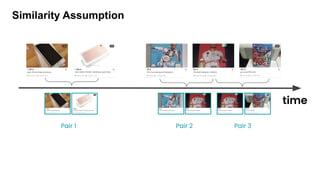

This document discusses taking deep learning recommender systems from prototypes to production. It gives an overview of modern recommender systems and shows how deep learning techniques such as neural item embeddings, similarity search, and experimentation can improve them. The key points are:

1. Deep learning can extract features from different data sources and produce accurate user and item representations.
2. Neural item embeddings, for example learned with word2vec, map items to vectors so that similar items can be found.
3. Similarity search techniques such as approximate nearest neighbor (ANN) search make nearest-neighbor lookup efficient in large embedding spaces.
4. Experimentation, through offline and online testing including A/B tests, is essential for evaluating models and improving recommendations.
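To make points 2 and 3 concrete, here is a minimal sketch of the lookup that ANN indexes approximate: exact (brute-force) nearest-neighbor search over item embeddings by cosine similarity. The item names and vectors are hypothetical toy values standing in for embeddings learned by a word2vec-style model, and `cosine` and `most_similar` are illustrative helpers, not part of any library.

```python
import math
from typing import Dict, List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def most_similar(
    query_id: str, embeddings: Dict[str, List[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Exact k-nearest neighbours of one item, ranked by cosine similarity.

    This scans every item, so query cost grows linearly with catalogue size;
    ANN indexes trade a little recall for much faster queries.
    """
    query = embeddings[query_id]
    scored = [
        (item, cosine(query, vec))
        for item, vec in embeddings.items()
        if item != query_id  # do not return the query item itself
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings, standing in for vectors learned by an embedding model.
item_vectors = {
    "running_shoes": [0.9, 0.1, 0.0],
    "trail_shoes": [0.8, 0.2, 0.1],
    "yoga_mat": [0.1, 0.9, 0.2],
    "water_bottle": [0.4, 0.4, 0.8],
}

print(most_similar("running_shoes", item_vectors, k=2))
```

With millions of items the linear scan becomes the bottleneck, which is why production systems replace `most_similar` with an ANN index while keeping the same embedding space.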

![Dot Product Loss Function: an anchor A is scored against a positive P and negatives N by dot product, and the loss is cross_entropy([<A,P>, <A,N>, ...], [1, 0, ...])](https://image.slidesharecdn.com/deeplearningrecommendersystems-datatalks-210323170901/85/Deep-Learning-Recommender-Systems-20-320.jpg)
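The slide above writes the loss as cross_entropy([<A,P>, <A,N>, ...], [1, 0, ...]). A minimal sketch of one reading of it follows: the dot products are treated as logits, and softmax cross-entropy is applied with the positive as the target class. This interpretation is an assumption; a per-pair sigmoid (binary) cross-entropy, as in word2vec negative sampling, fits the same notation. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Dot product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def softmax_cross_entropy(logits: List[float], targets: List[float]) -> float:
    """Cross-entropy between softmax(logits) and a target distribution.

    Uses the log-sum-exp trick so large logits do not overflow math.exp.
    """
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    # log softmax(x_i) = x_i - log_z
    return -sum(t * (x - log_z) for t, x in zip(targets, logits))


def dot_product_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negatives: List[Sequence[float]],
) -> float:
    """Score the anchor against one positive and several negatives by dot product,
    then apply cross-entropy against targets [1, 0, 0, ...]."""
    logits = [dot(anchor, positive)] + [dot(anchor, n) for n in negatives]
    targets = [1.0] + [0.0] * len(negatives)
    return softmax_cross_entropy(logits, targets)


anchor = [1.0, 0.0]
positive = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
print(dot_product_loss(anchor, positive, negatives))
```

Minimizing this loss pushes <A,P> up relative to every <A,N>, which is what makes nearby embeddings useful for the similarity search described earlier.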