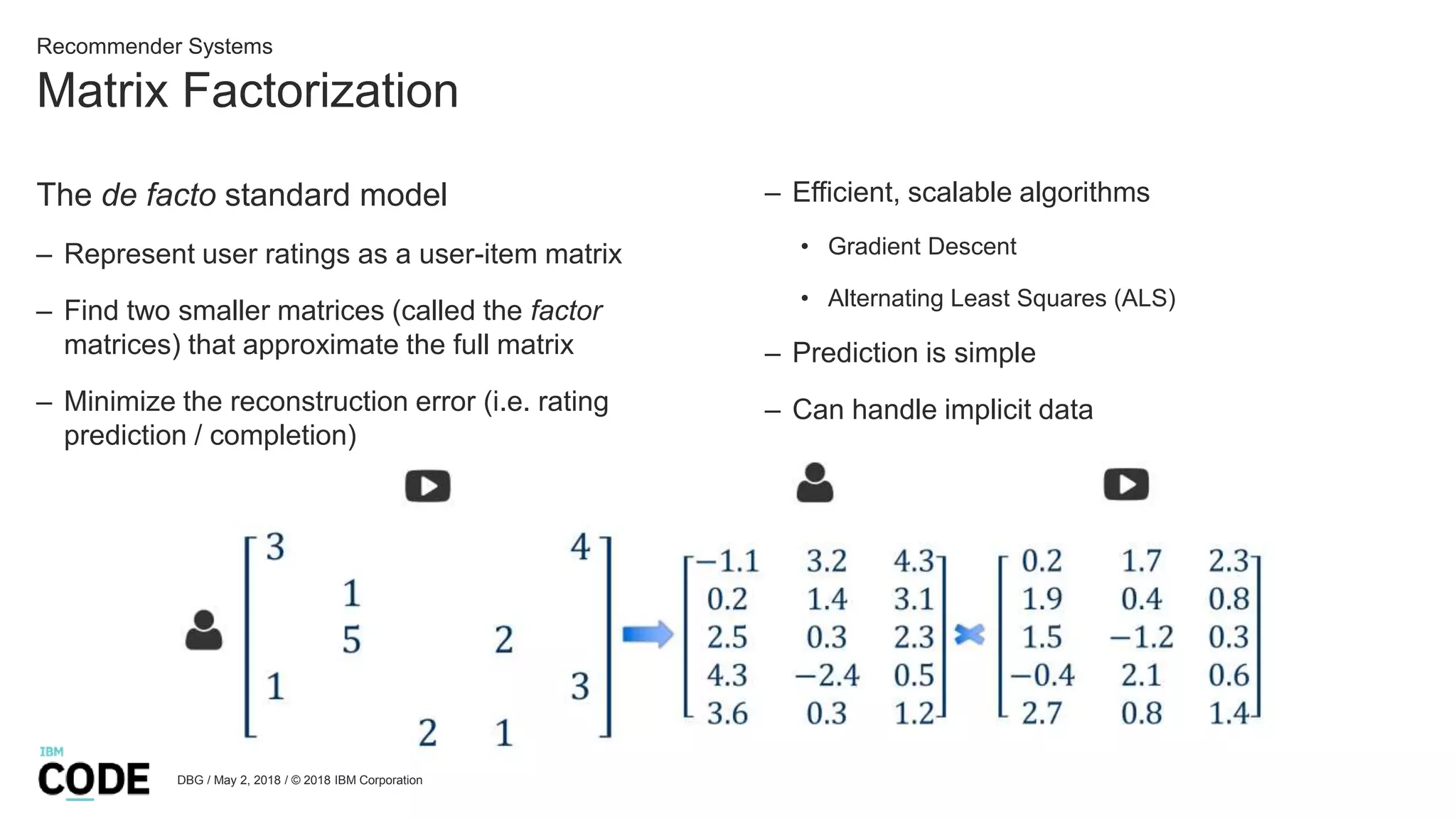

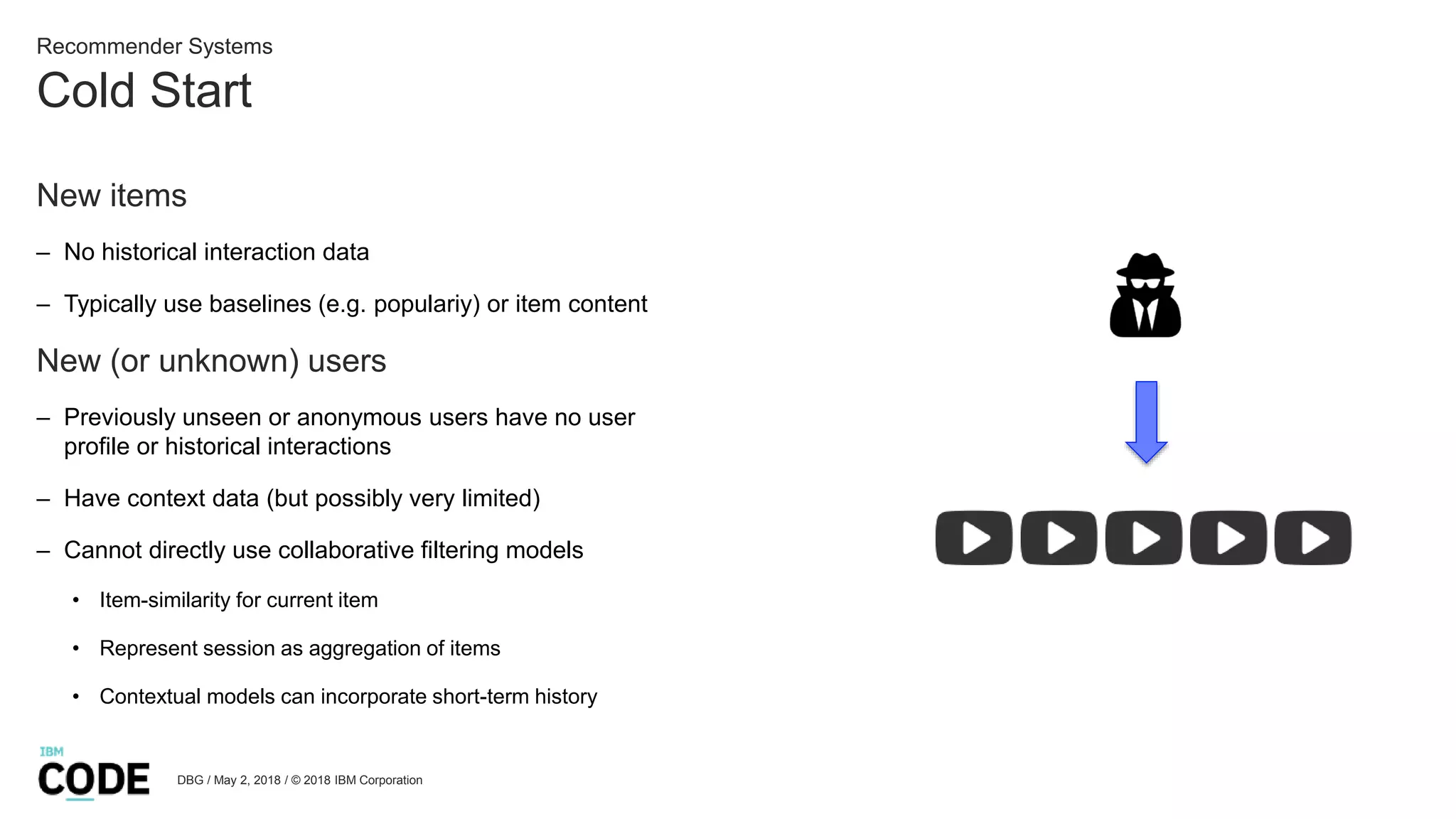

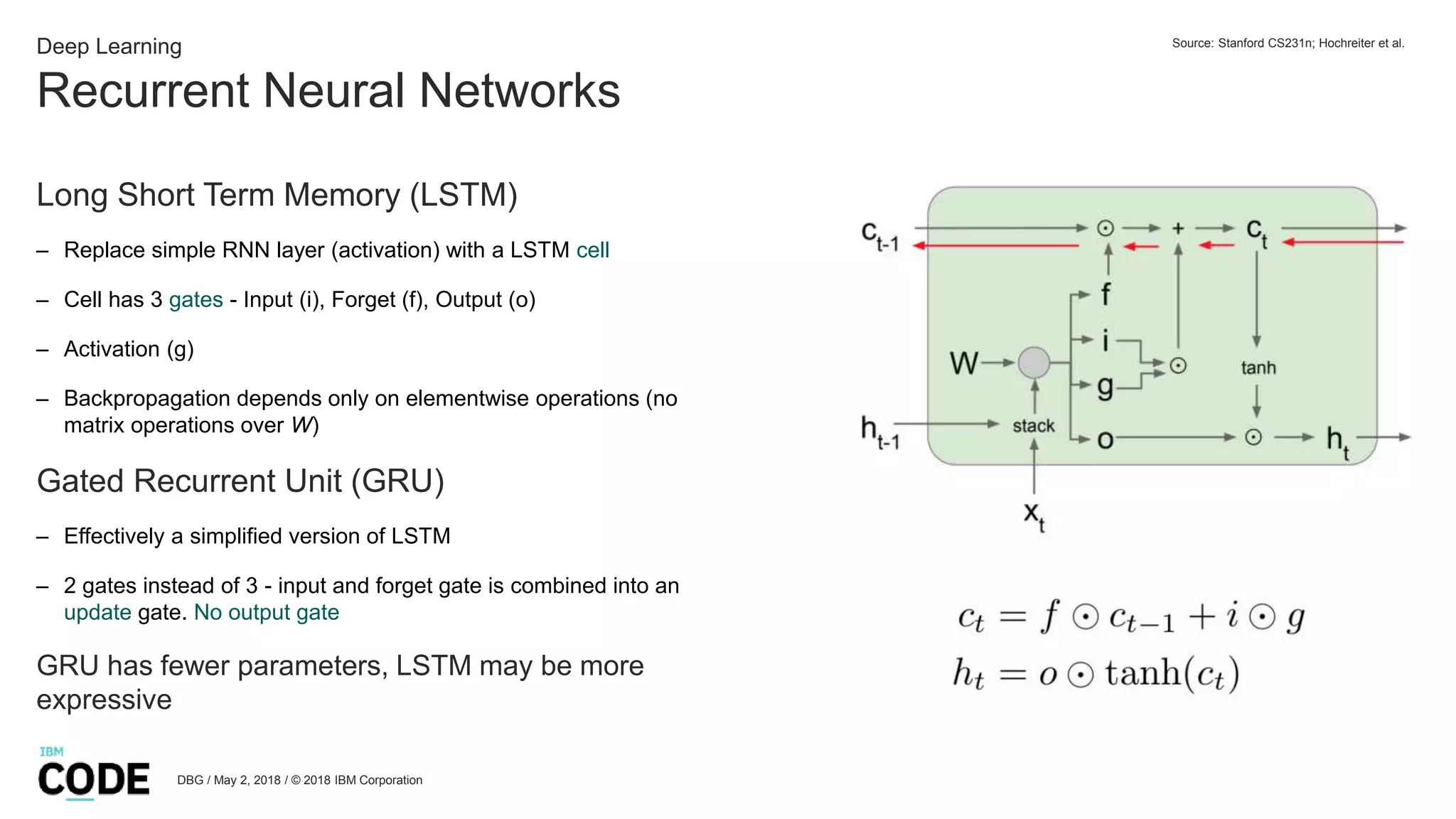

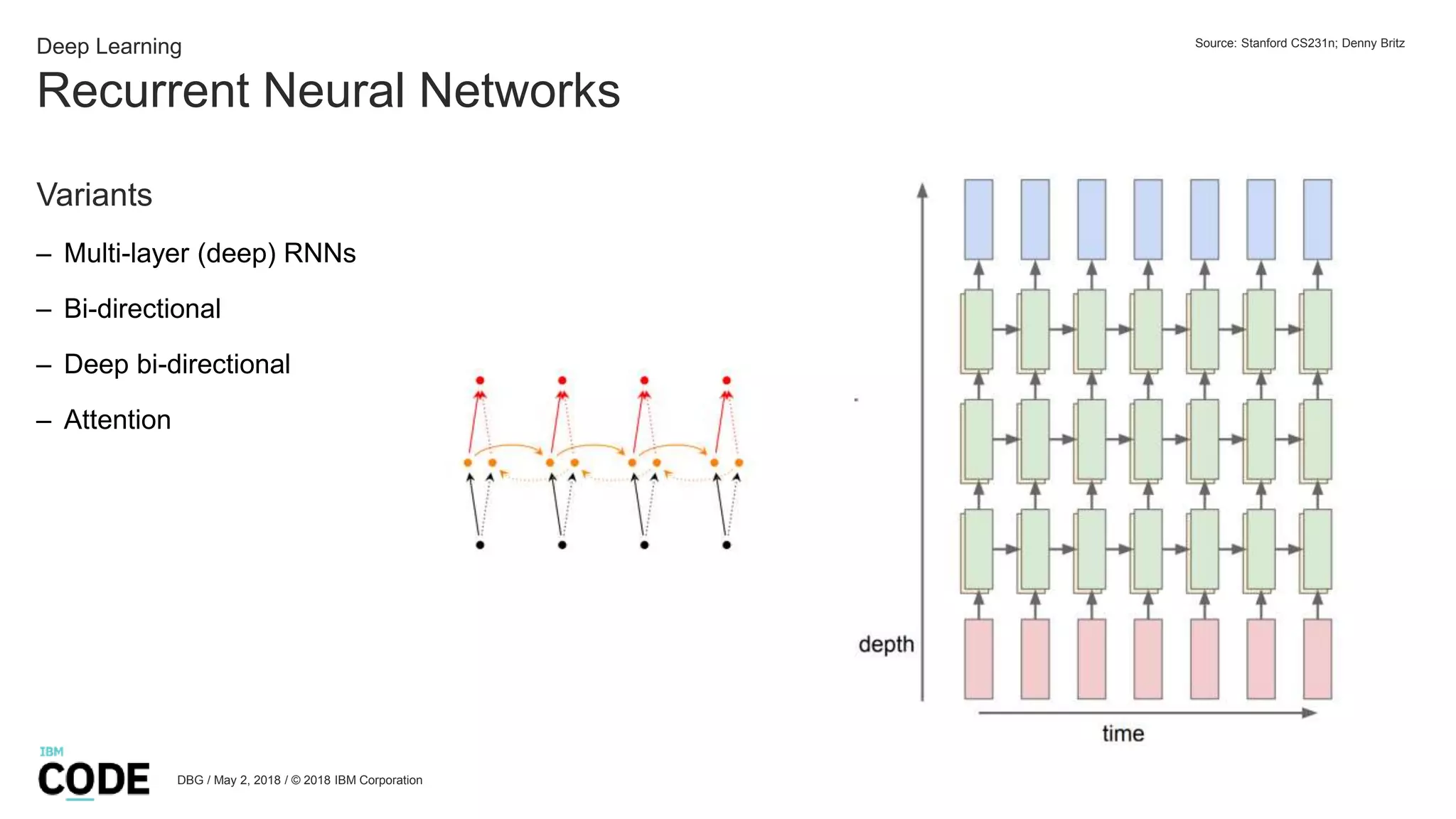

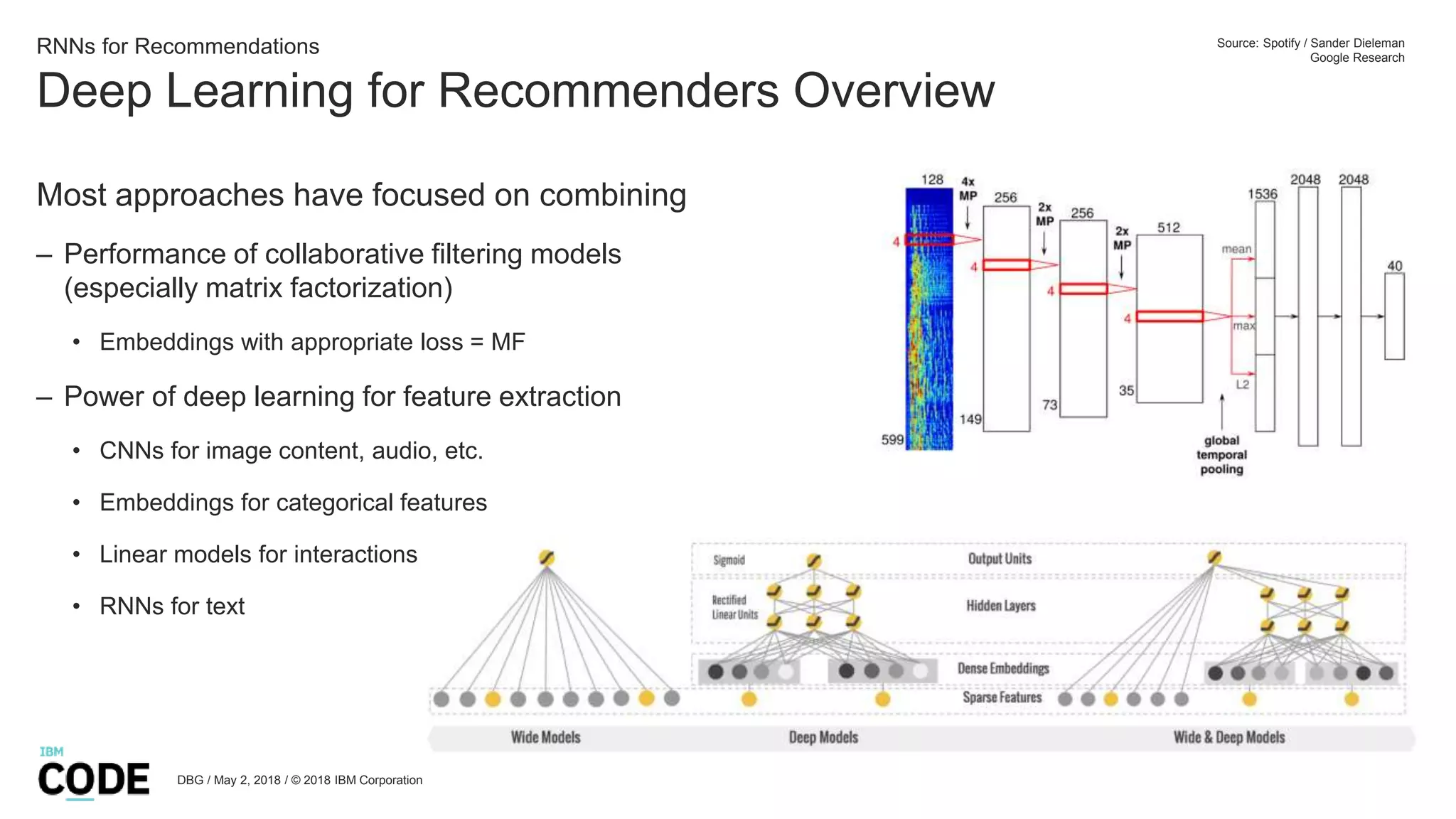

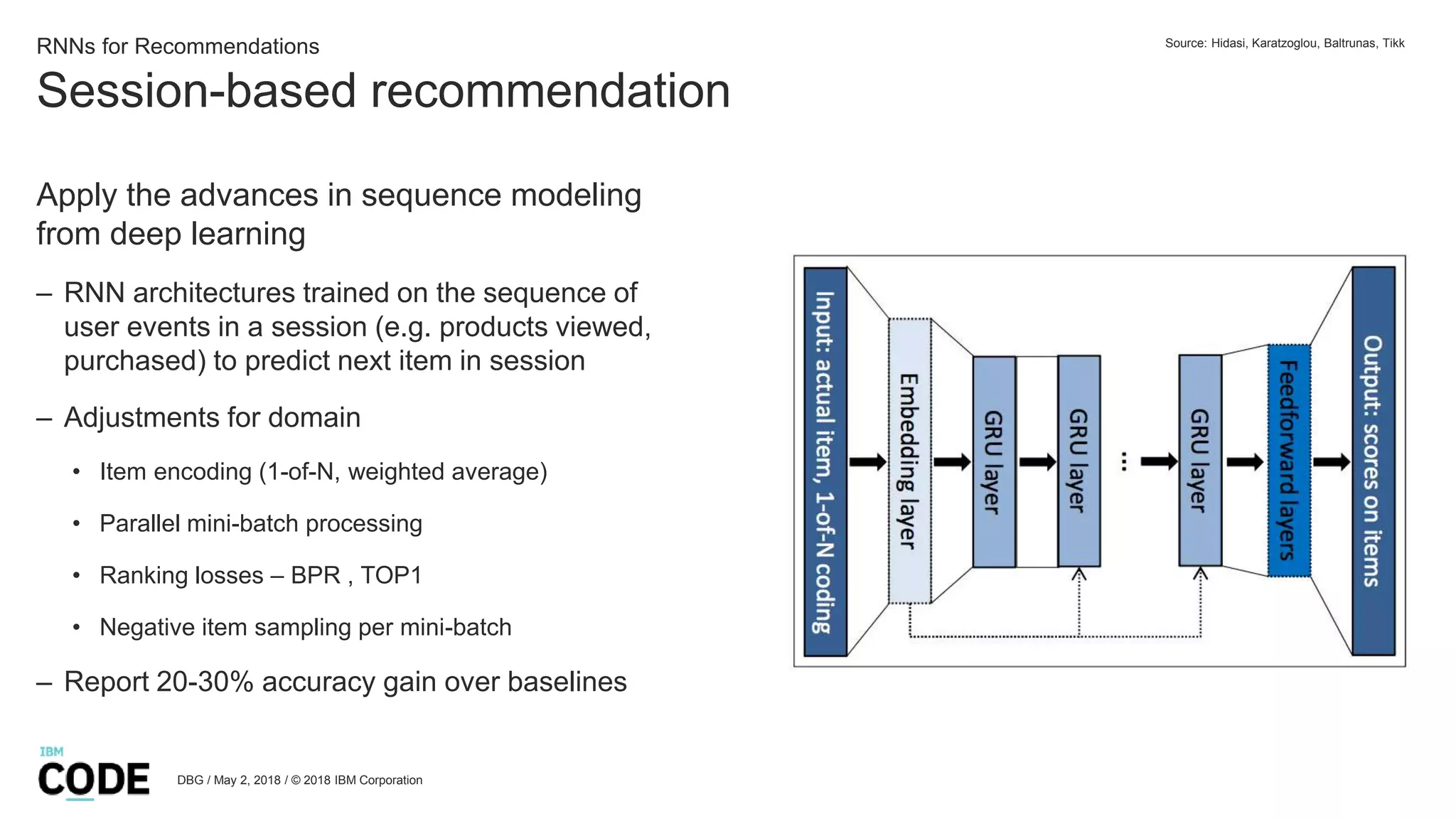

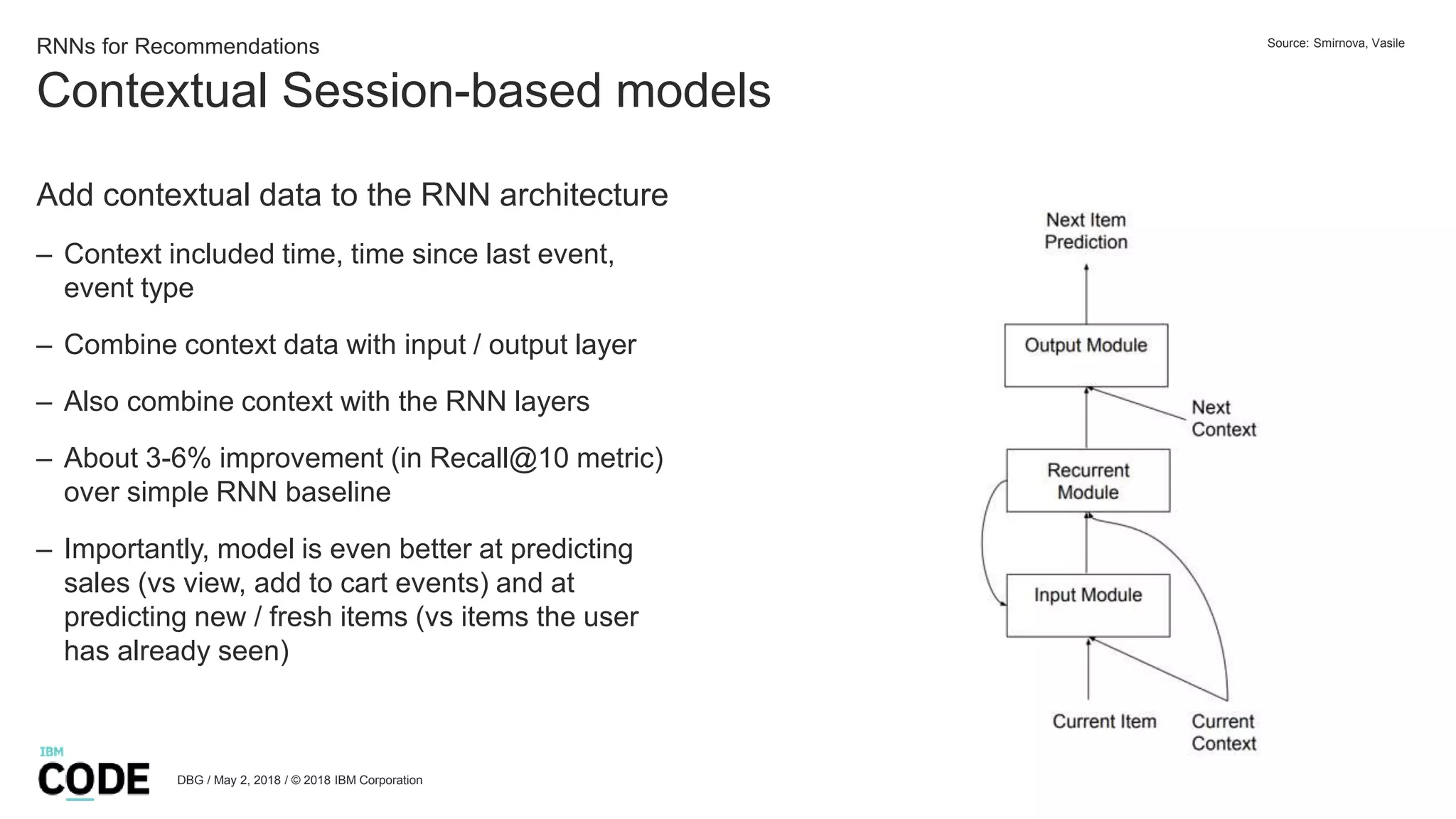

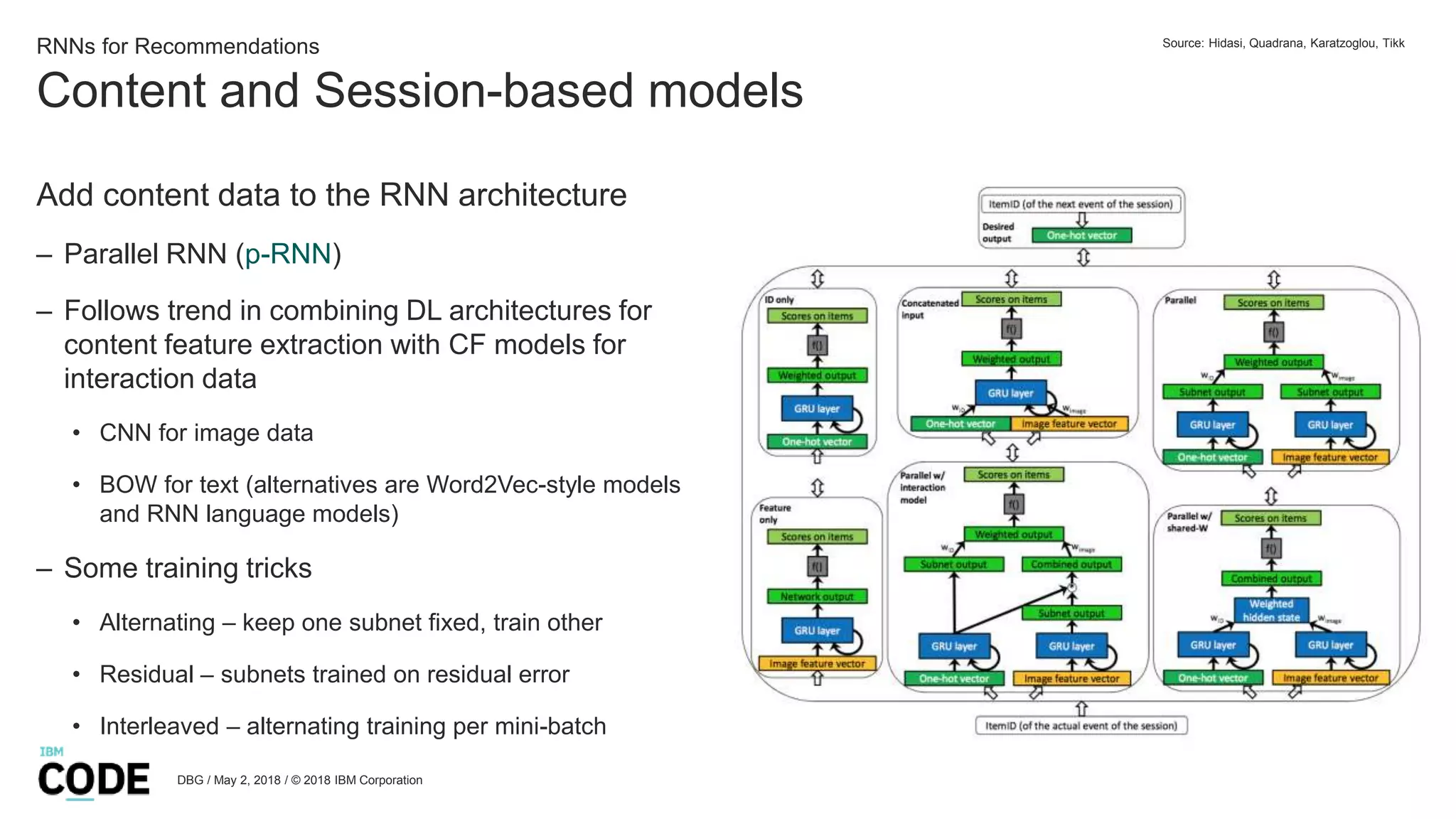

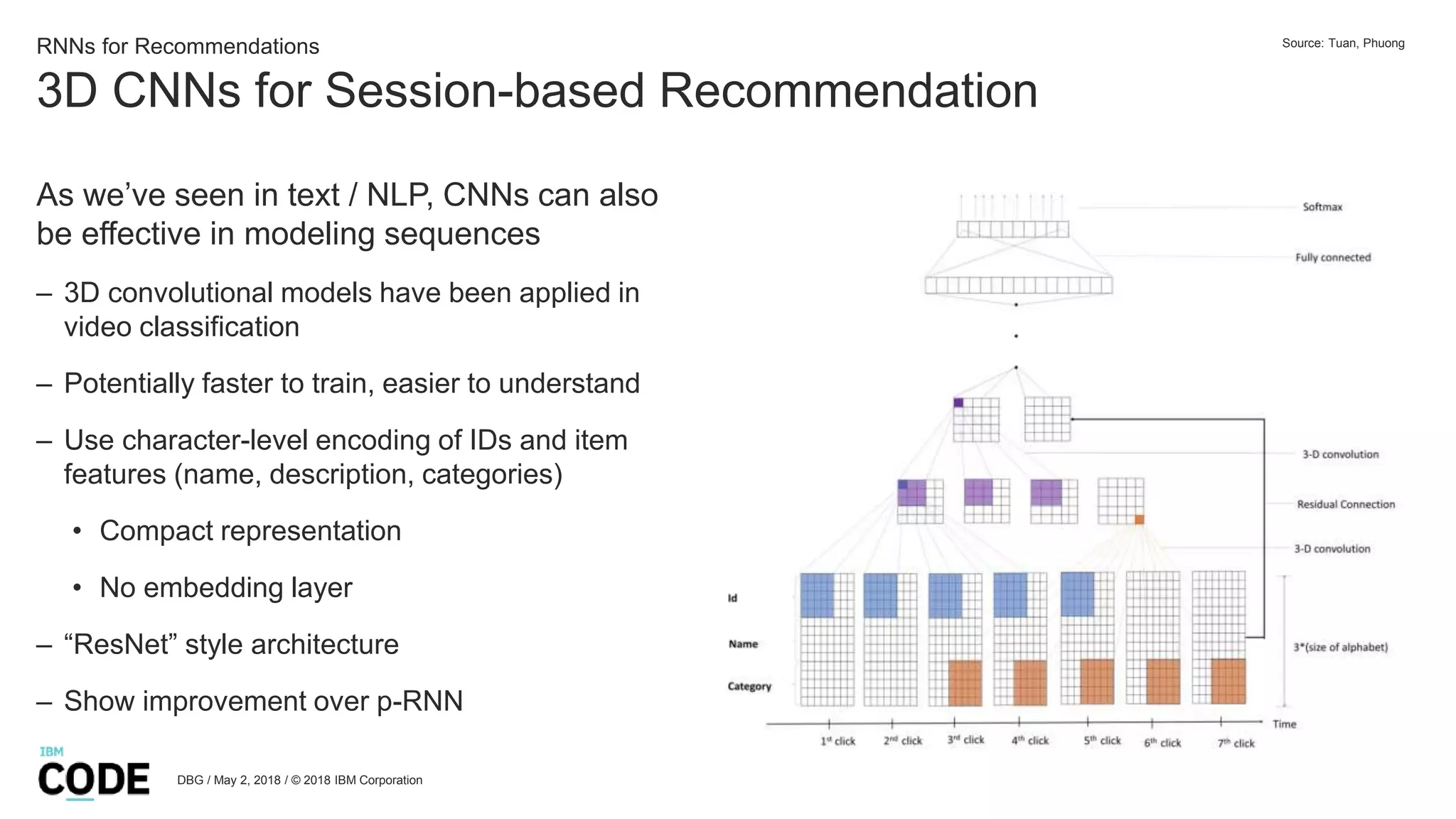

This document covers recurrent neural networks (RNNs) for recommender systems, focusing on how deep learning can be combined with traditional collaborative filtering to improve prediction accuracy. It surveys several approaches, including session-based recommendation and models that incorporate contextual and content data, and highlights challenges such as data sparsity and computational complexity. It closes with future research directions, emphasizing emerging models and techniques aimed at improving recommendation effectiveness.