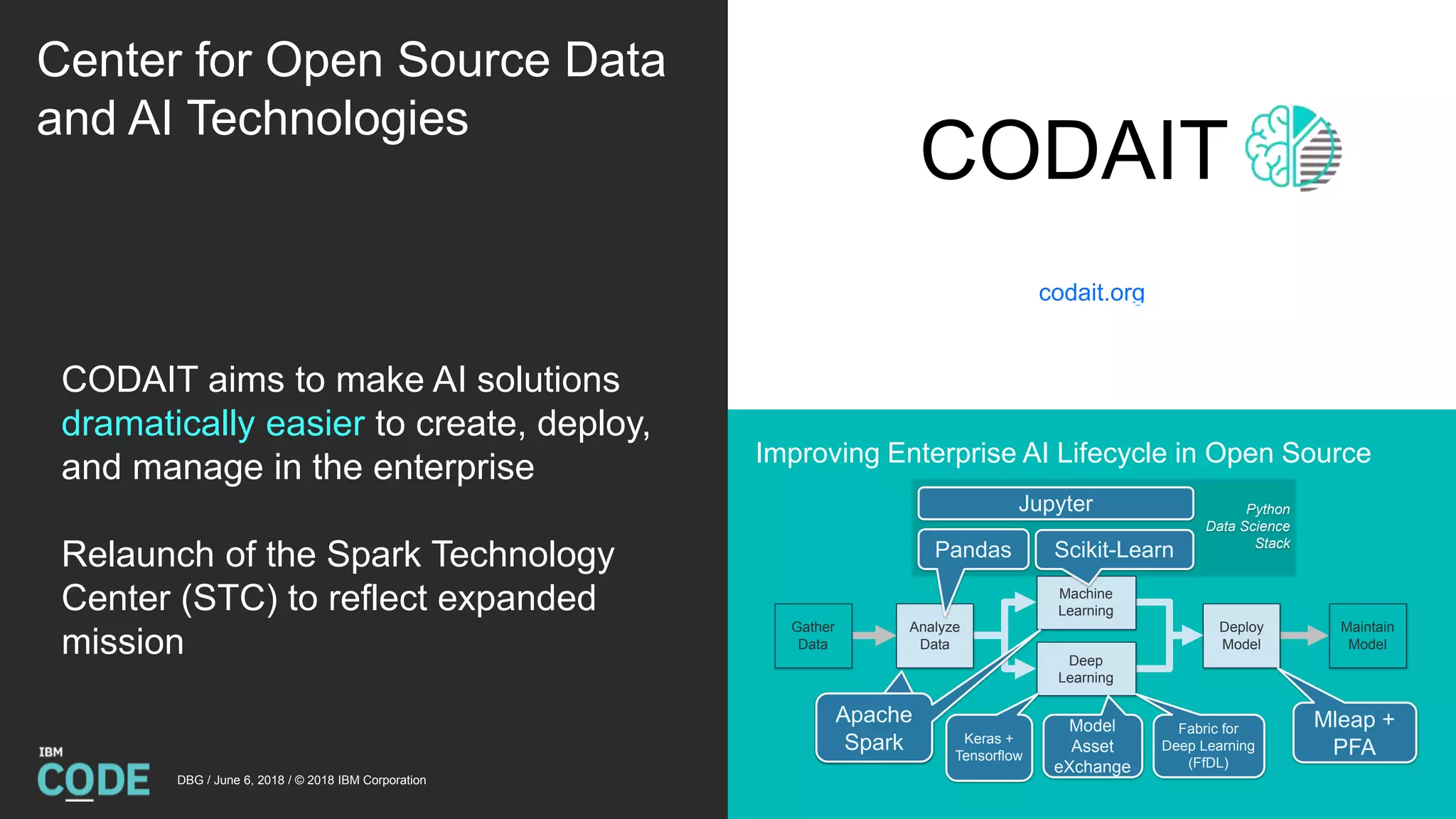

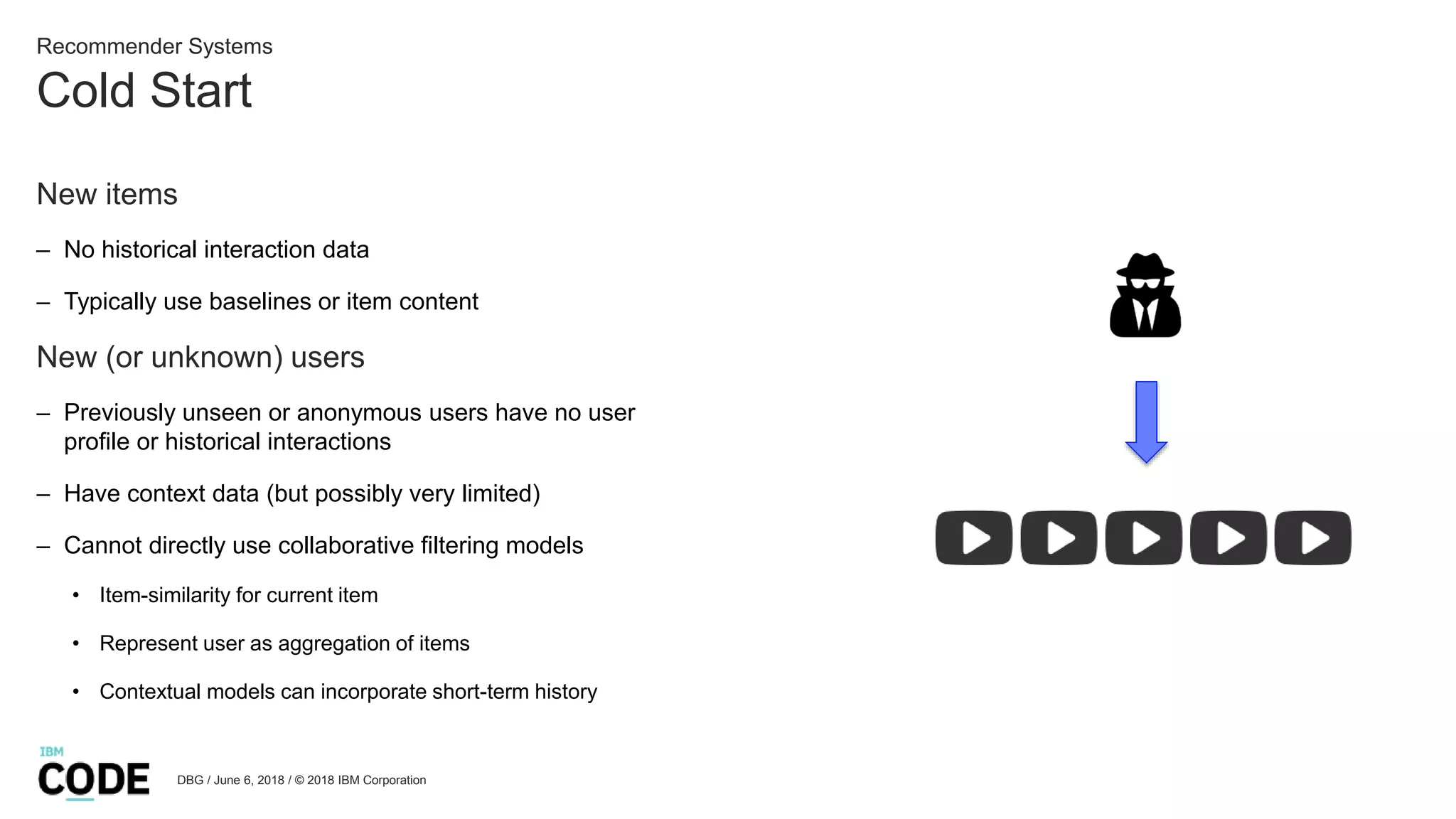

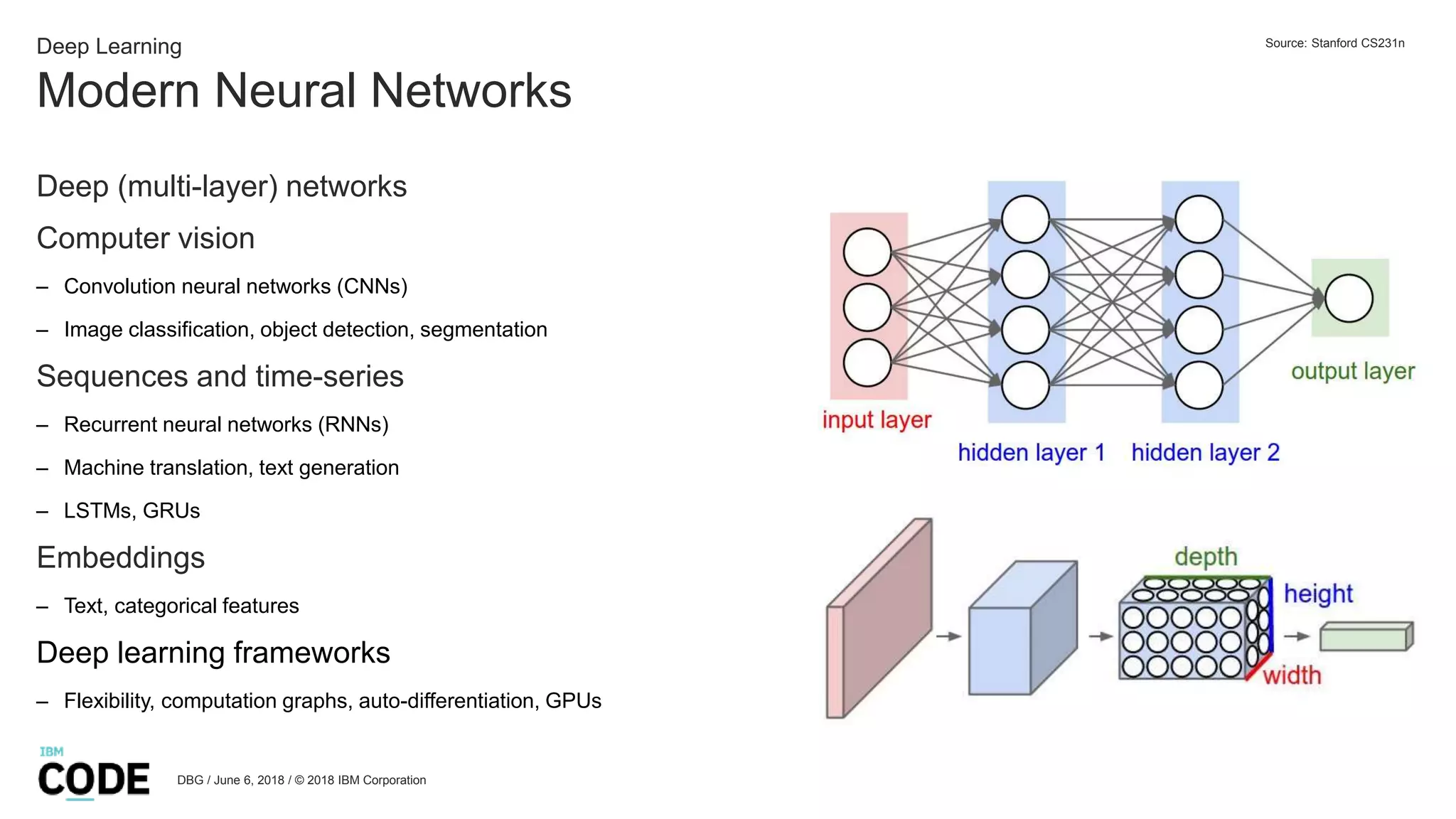

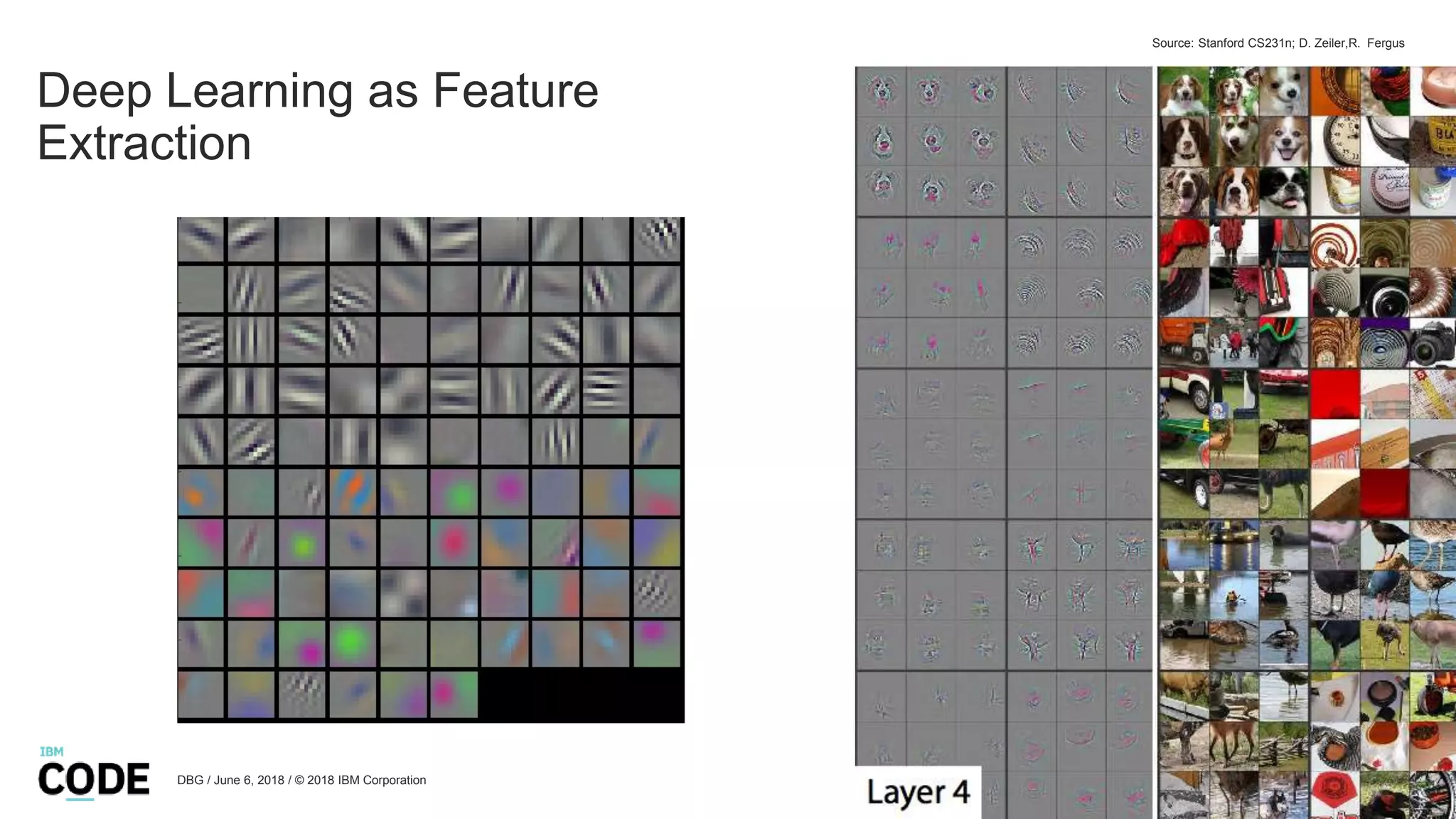

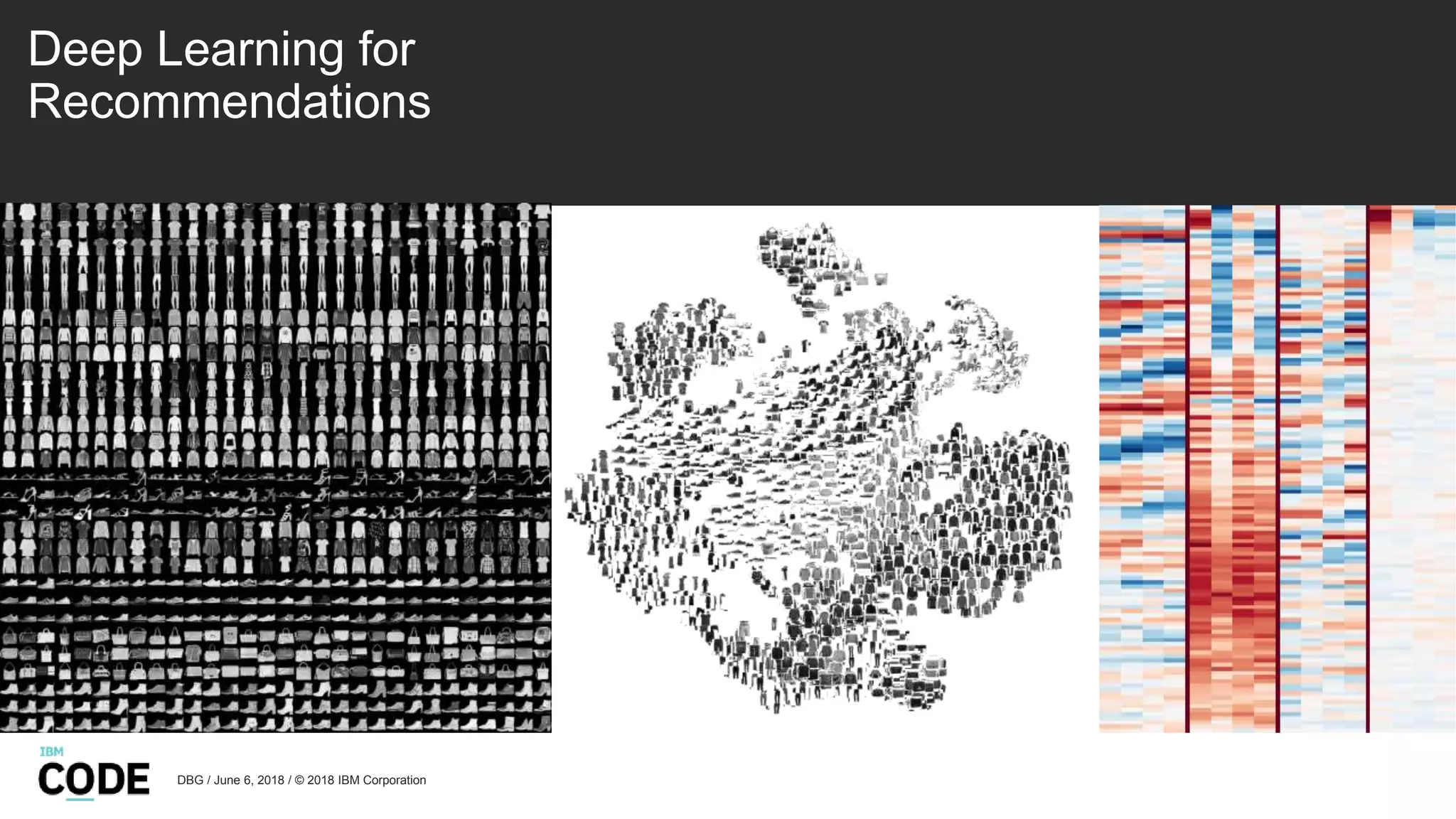

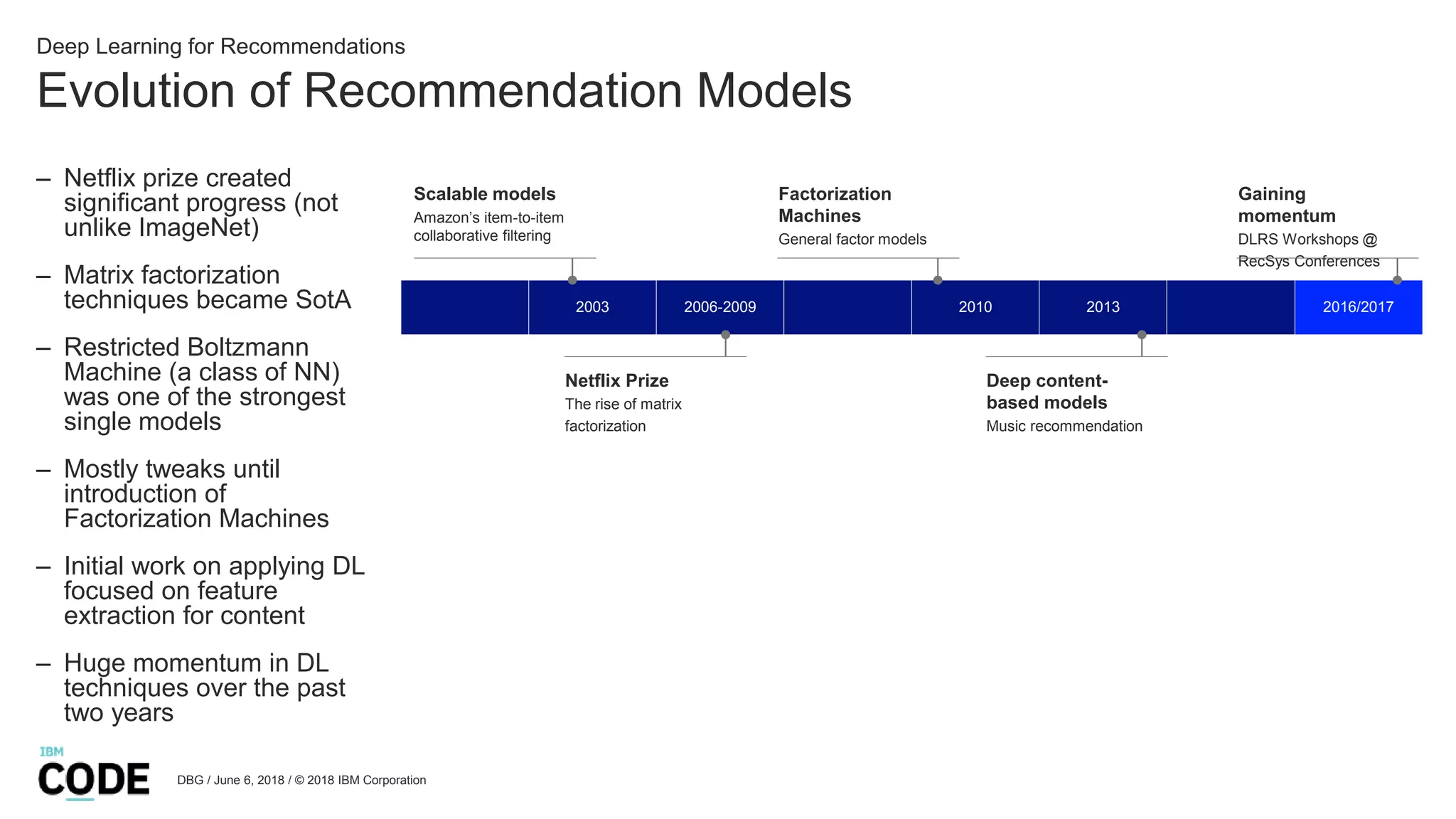

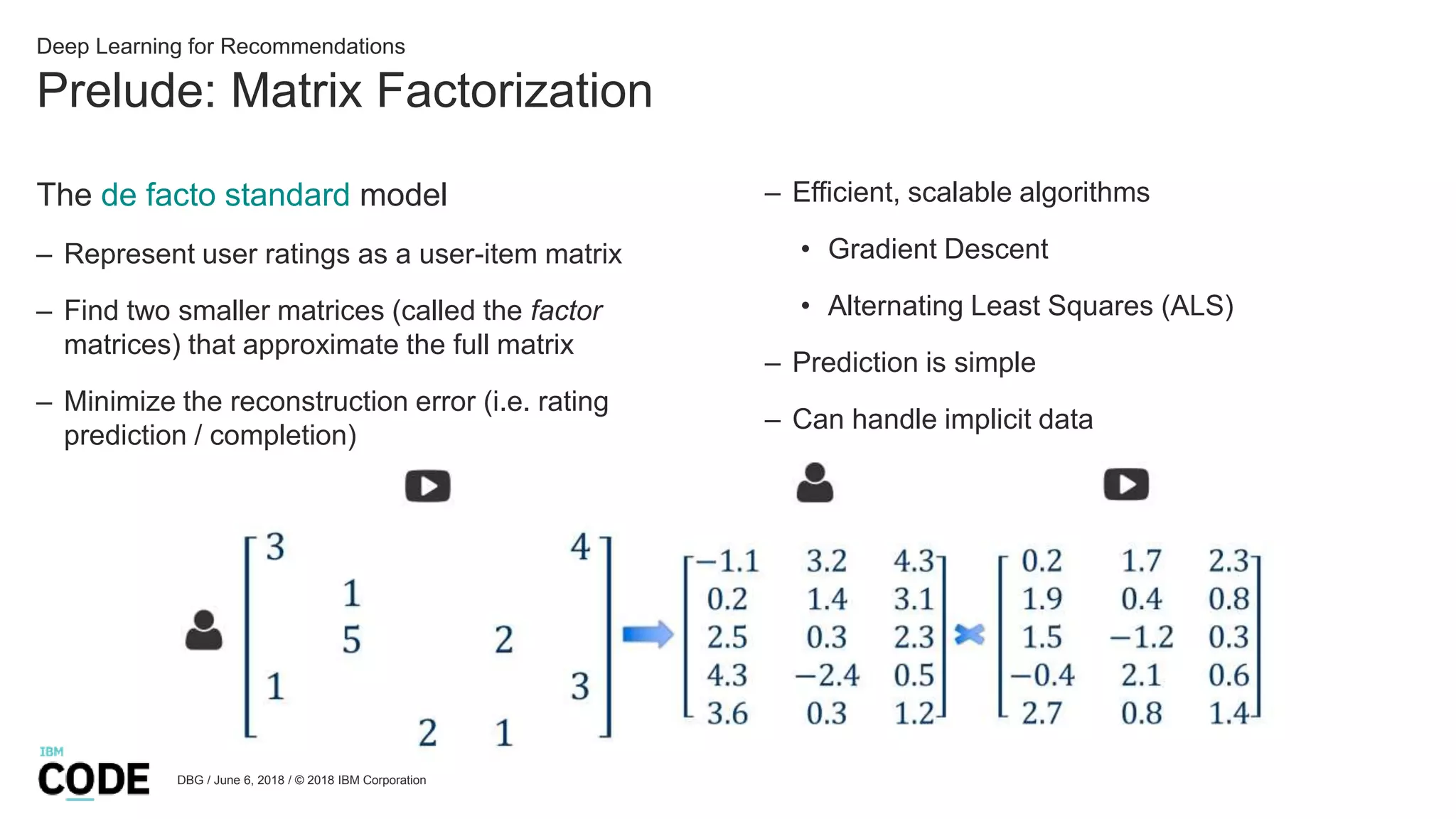

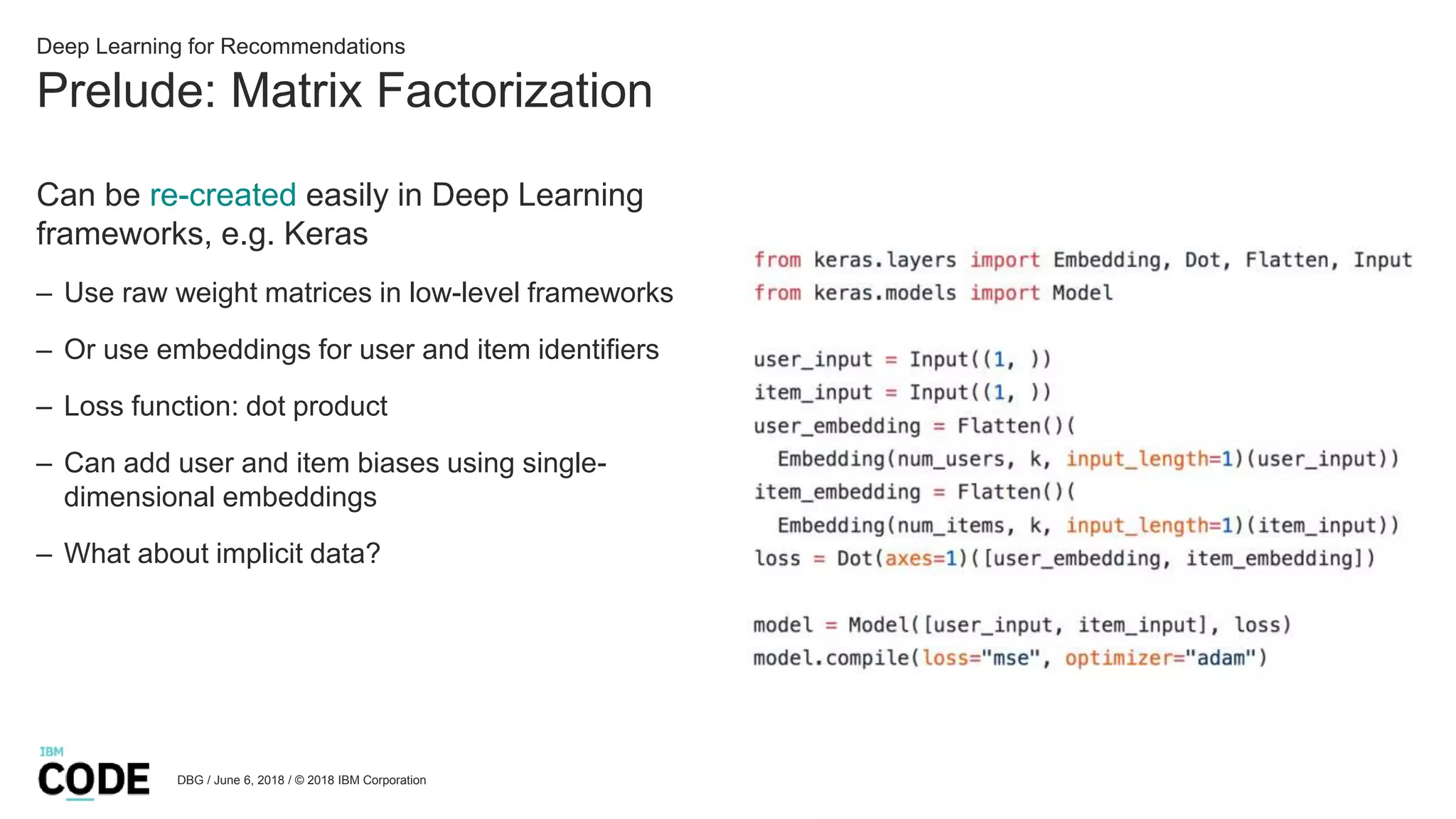

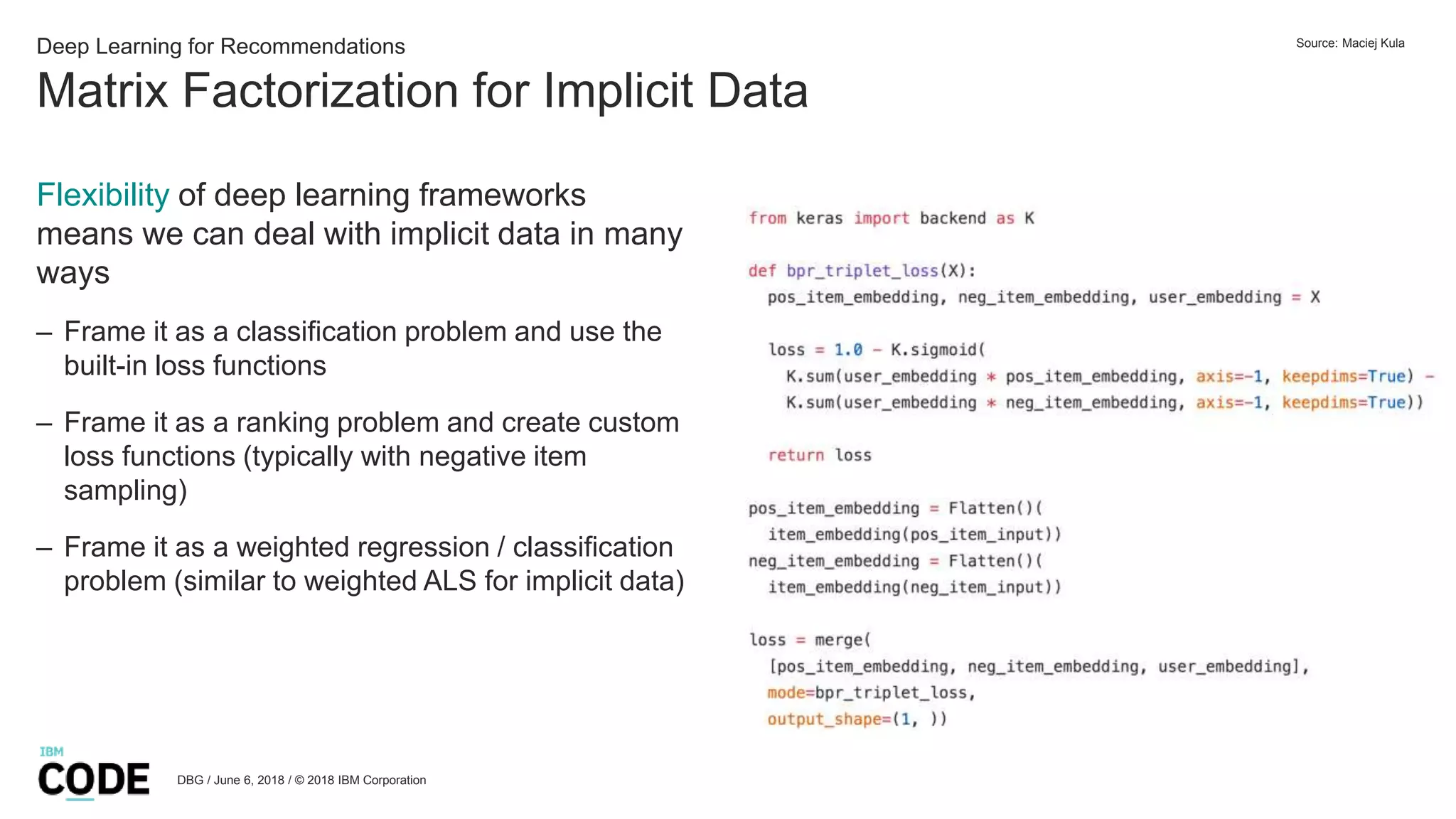

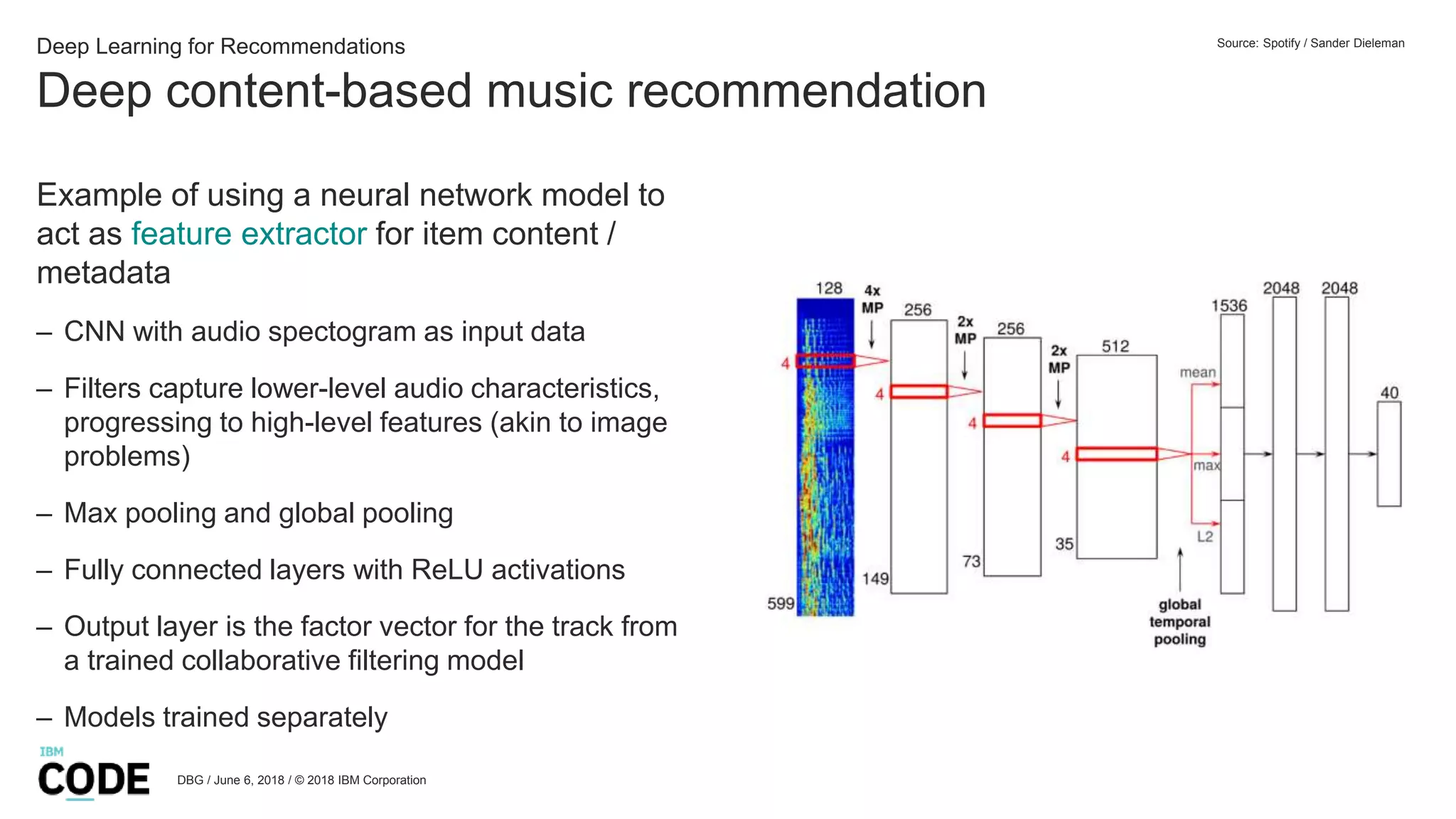

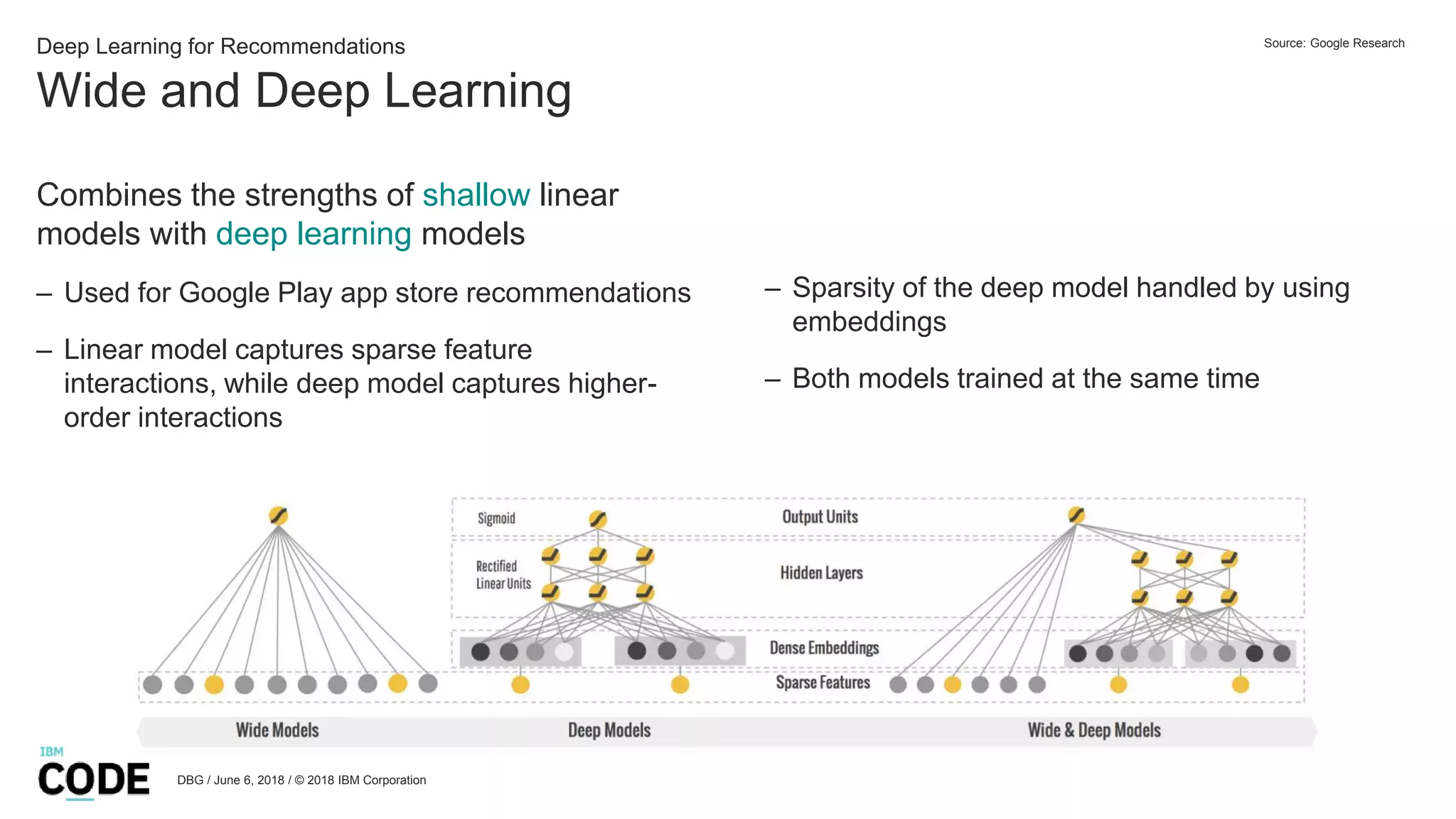

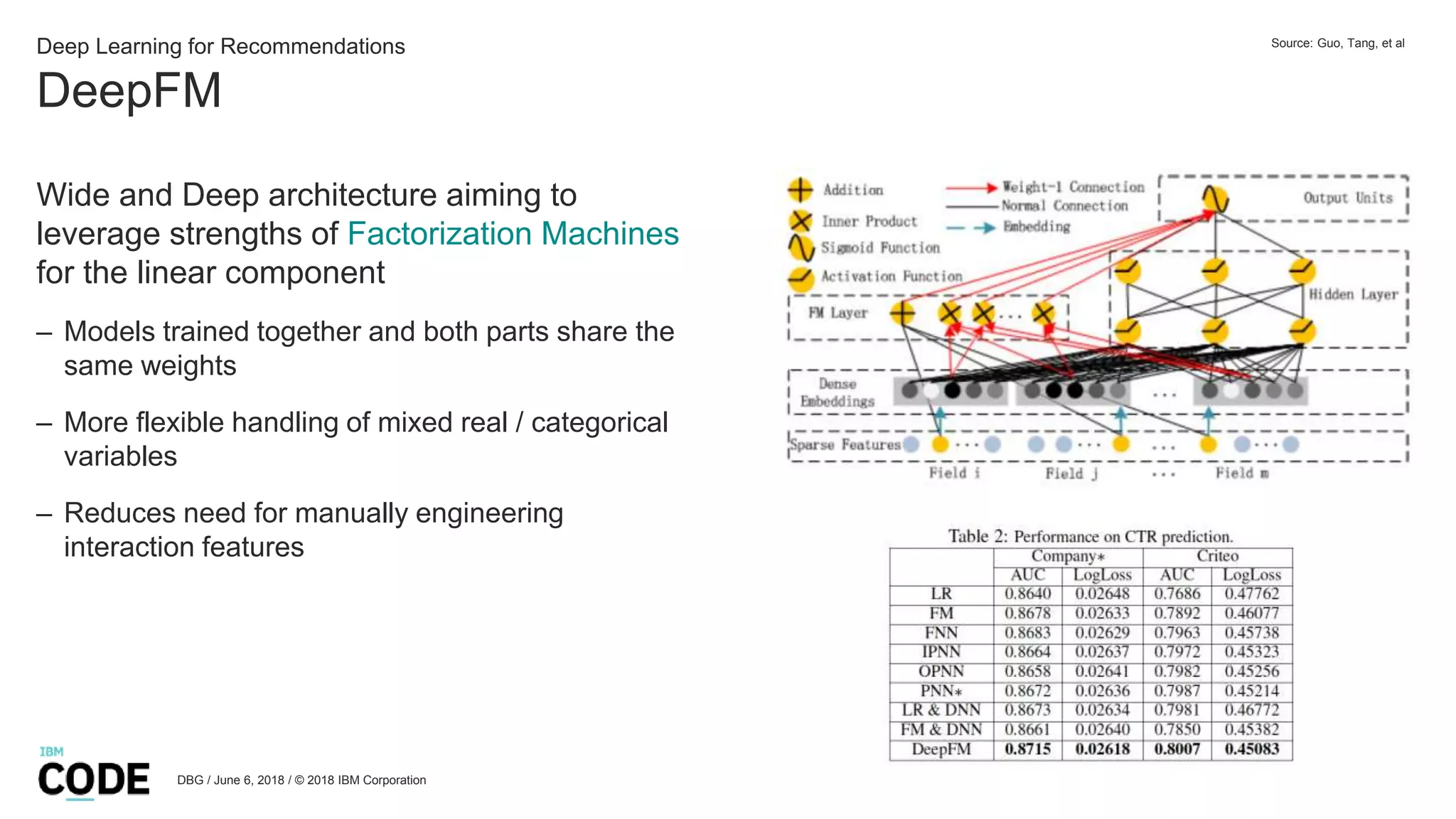

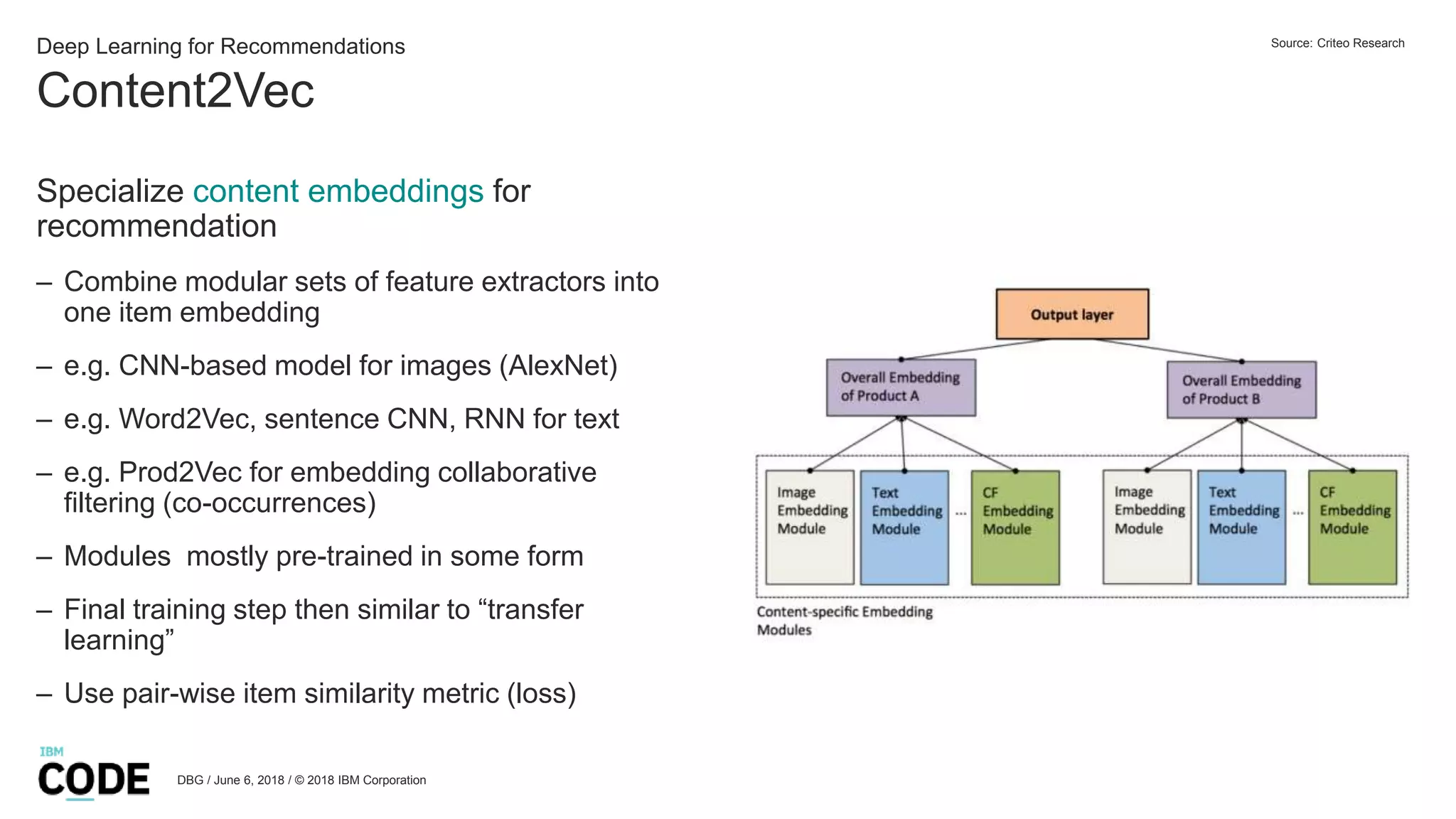

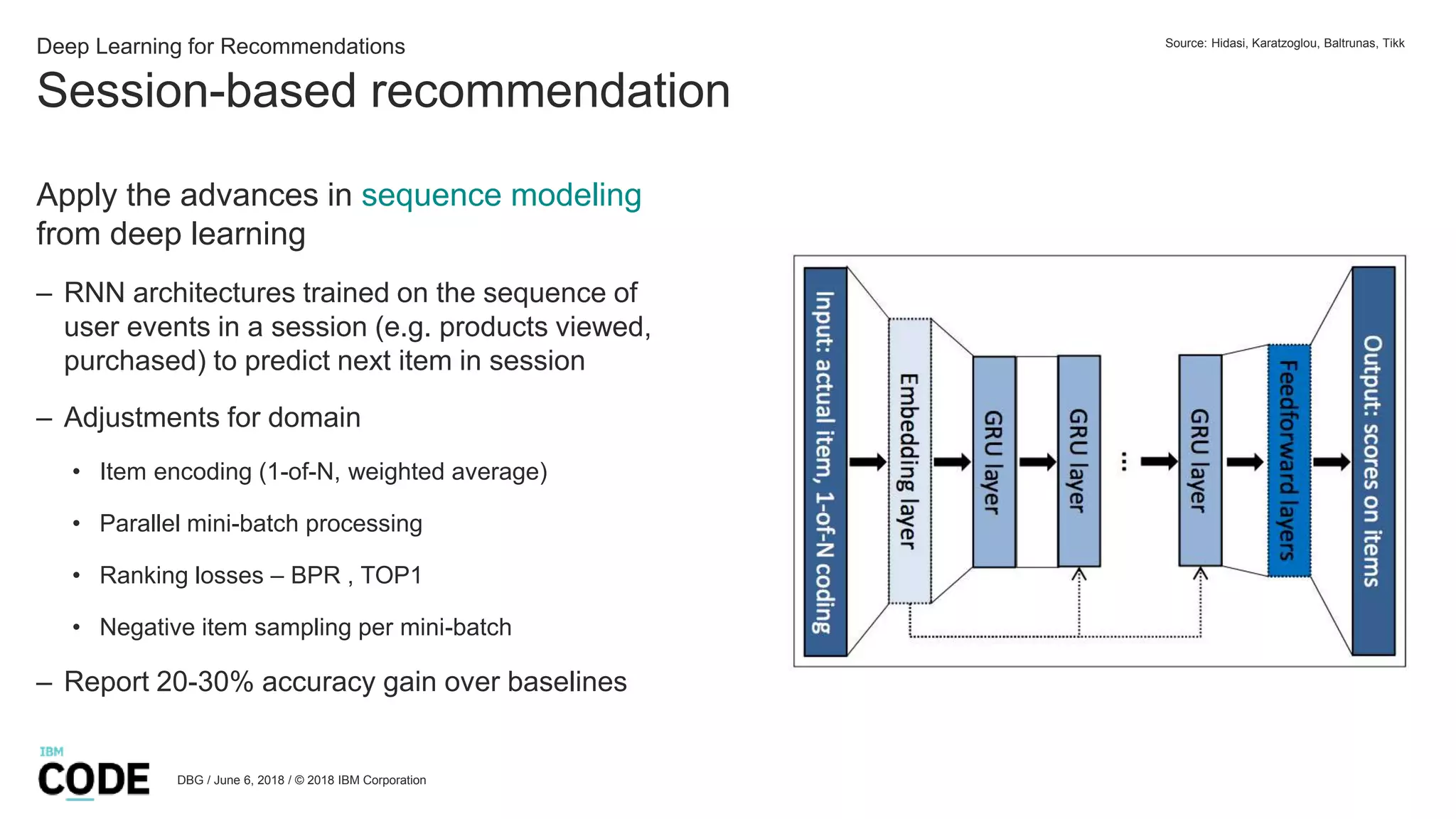

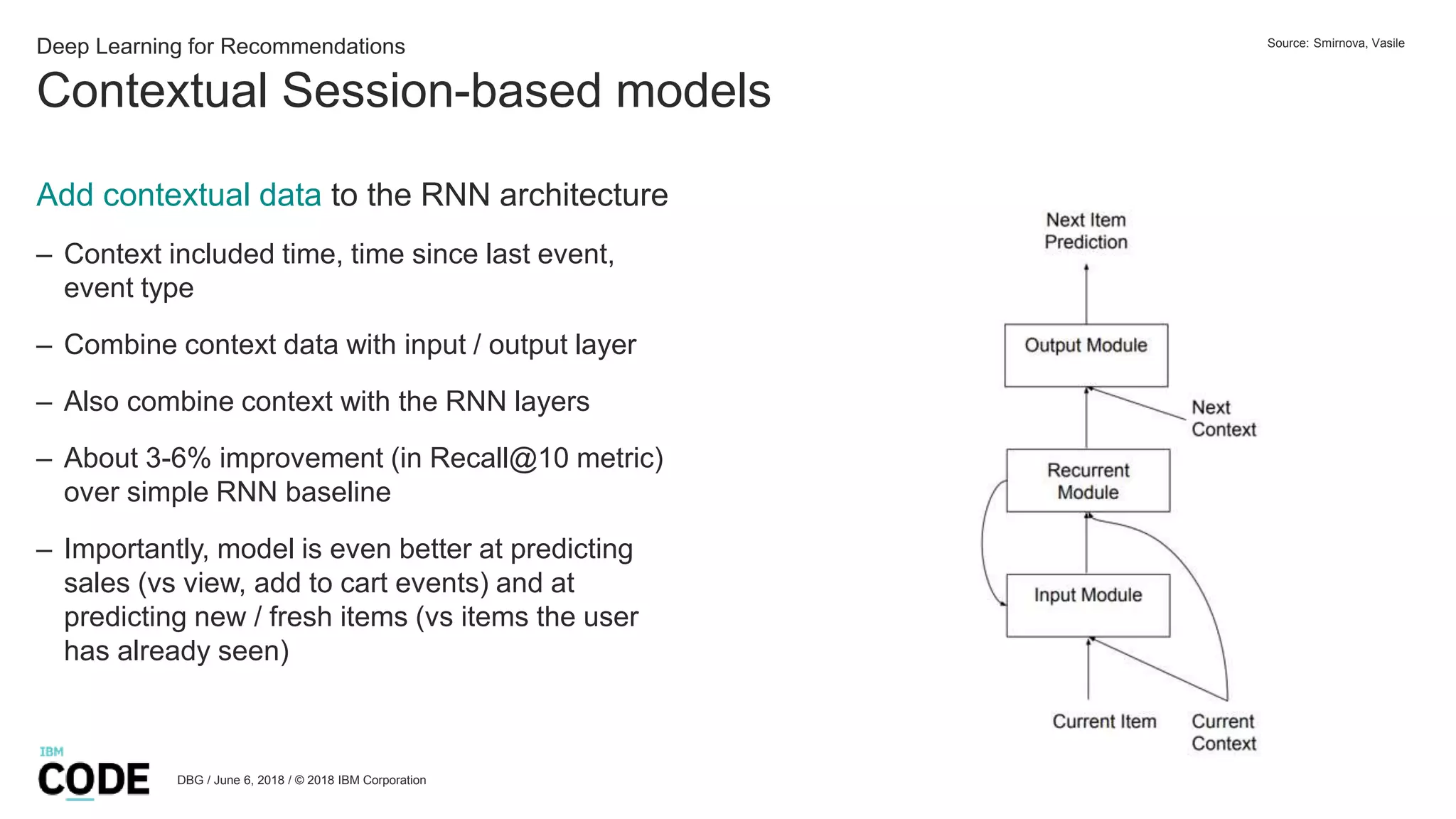

This document gives an overview of deep learning applications in recommender systems. It traces the evolution of recommendation models and describes the use and advantages of deep learning frameworks for handling both implicit and explicit user data. It also discusses methodologies, challenges, and future directions for improving recommendation accuracy and model efficiency. It emphasizes the potential for significant advances in recommendations, particularly in cold start scenarios, alongside the flexibility and incremental gains that deep learning techniques offer.