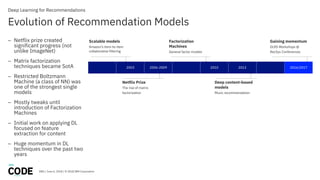

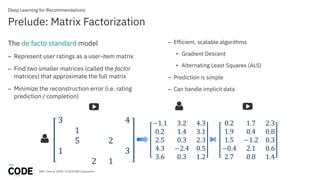

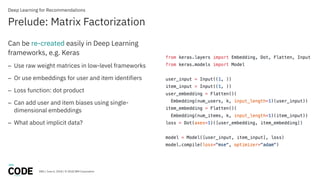

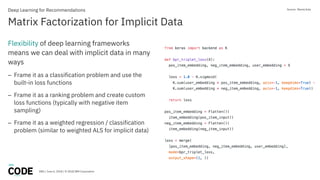

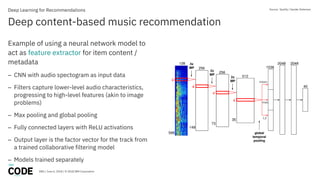

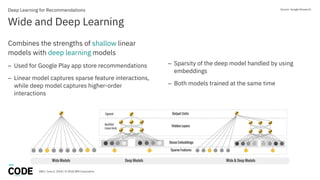

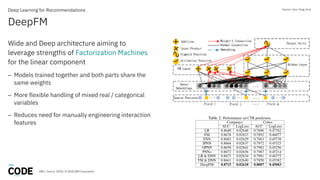

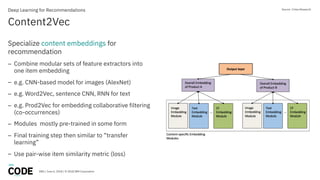

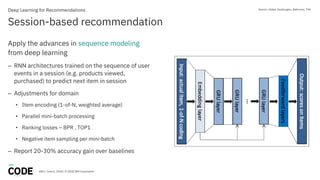

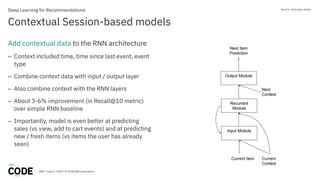

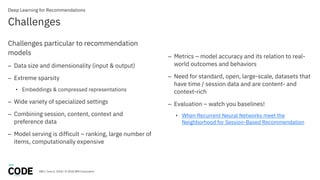

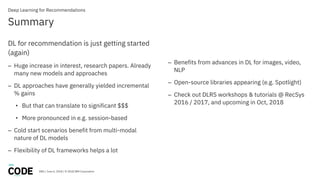

The document discusses deep learning techniques for recommender systems, outlining the evolution of recommendation models and the specific challenges they face. It highlights advancements such as deep content-based models, session-based recommendations, and contextual models, emphasizing the benefits of deep learning frameworks in improving predictive accuracy. The document concludes with future directions in research and industry, noting the growing interest in deep learning for recommendations and its potential market impact.