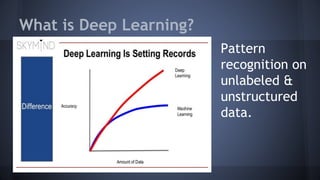

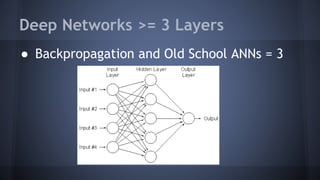

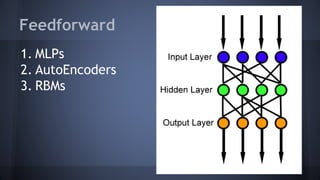

This document provides an overview of deep learning: what it is, why it is difficult, and which problems to consider. Deep learning uses neural networks with three or more layers to recognize patterns in unlabeled, unstructured data such as images and text. It is computationally intensive and typically requires large datasets and specialized hardware such as GPUs. Challenges include handling messy real-world data, scaling networks across large clusters, combining different neural network types, and tuning hyperparameters.
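To make the "three or more layers" description concrete, here is a minimal sketch of a forward pass through a small feed-forward network with an input layer, one hidden layer, and an output layer. It is an illustration only, not code from the document: the layer sizes, the ReLU and sigmoid activations, the random weight range, and the helper names (`init_layer`, `dense`, `forward`) are all assumptions chosen for brevity, and no training is performed.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for a dense layer with small random weights."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(inputs, weights, biases, activation):
    """Apply one dense layer: activation(W . inputs + b) for each output unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(inputs, layers):
    """Run inputs through each (weights, biases, activation) layer in order."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations


rng = random.Random(0)  # fixed seed so repeated runs give the same weights

# Hypothetical sizes: 4 input features, 5 hidden units, 1 output unit.
hidden = init_layer(4, 5, rng) + (relu,)
output = init_layer(5, 1, rng) + (sigmoid,)
network = [hidden, output]

prediction = forward([0.2, -0.1, 0.7, 0.05], network)
print(prediction)
```

Deeper networks follow the same pattern by appending more entries to `network`. The layer sizes and the choice of activations here are examples of the hyperparameters that the overview lists as a tuning challenge.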