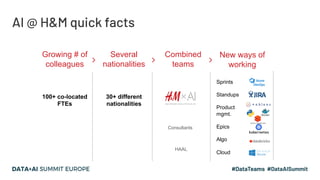

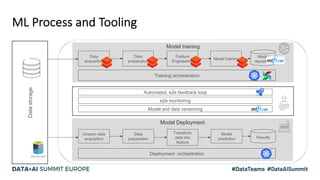

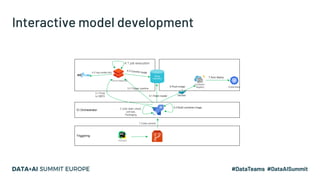

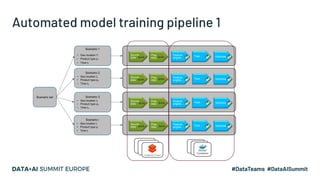

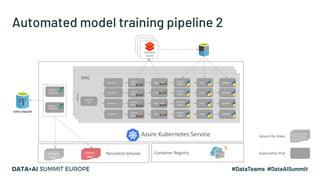

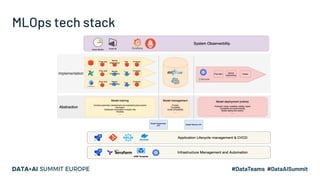

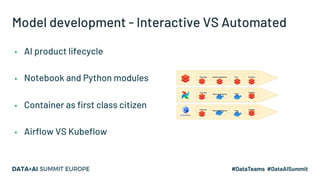

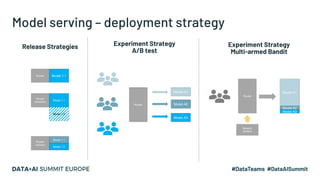

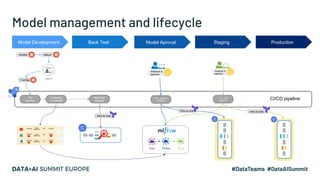

The document outlines H&M's AI journey and its implementation of MLOps at scale, tracing the evolution from the first proofs of concept in 2016 to a robust AI function established by 2019. It describes the key architectural elements of H&M's machine learning processes and the collaboration across teams needed to build and optimize AI-driven capabilities in retail. It also emphasizes model scalability, reproducibility, and continuous feedback as the means to refine these AI initiatives and ensure they deliver business value.