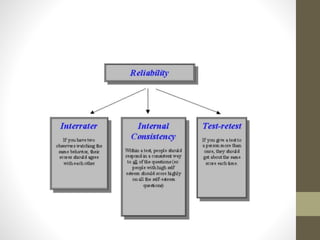

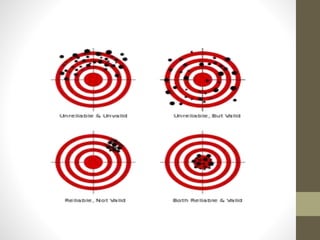

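The document discusses reliability and validity in data collection. Reliability is the consistency of a measurement: repeated or parallel measurements of the same thing should yield similar results. Validity is the degree to which a measure actually captures what it is intended to measure. The document describes three types of reliability: internal consistency (agreement among the items of a single instrument), test-retest reliability (stability of scores when the same measure is given at different times), and inter-rater reliability (agreement between independent raters or observers). It also covers four types of validity: face validity (whether the measure appears, on inspection, to assess the intended concept), content validity (whether it covers the full range of that concept), criterion-related validity (how well it agrees with an established external criterion), and construct validity (whether it behaves as the underlying theoretical construct predicts). The document stresses that research results are accurate and consistent only when the measurements behind them are both reliable and valid.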