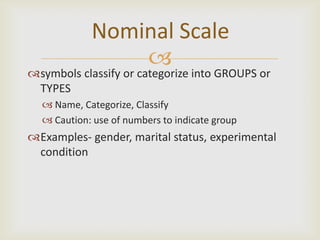

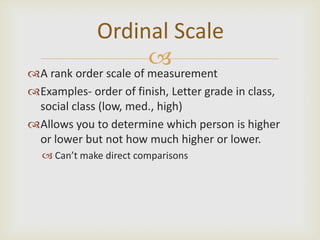

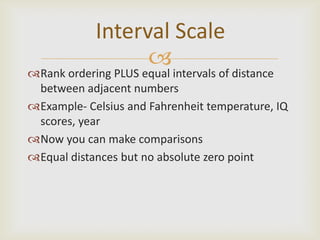

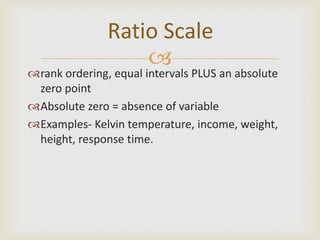

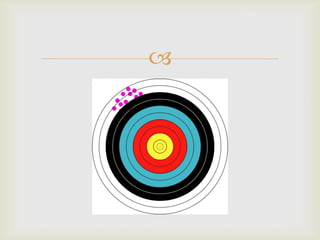

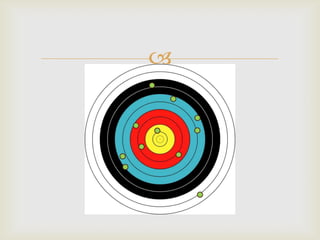

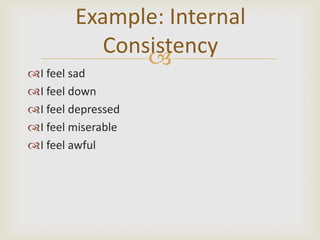

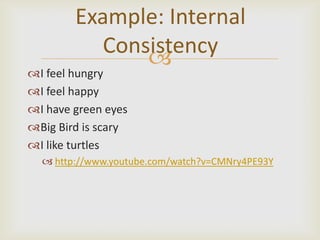

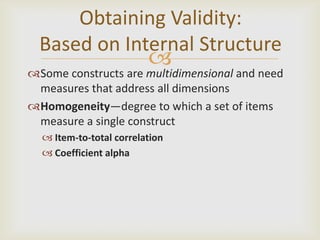

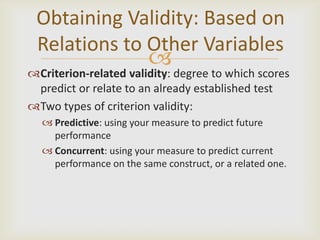

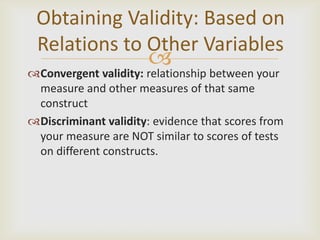

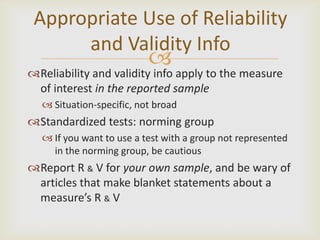

This document covers the measurement of variables and the four scales of measurement: nominal, ordinal, interval, and ratio. It also covers two psychometric properties, reliability and validity. Reliability is the consistency or stability of scores; it is assessed through test-retest reliability, equivalent-forms reliability, internal consistency, and interrater reliability. Validity is the extent to which a test measures what it is intended to measure; evidence for it comes from content validity, construct validity, and criterion validity. Reliability is necessary for validity but not sufficient: a test can yield consistent scores and still measure the wrong thing.
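As a rough illustration of how two of the reliability types above are often quantified, the sketch below computes a test-retest coefficient as a Pearson correlation between two administrations, and an internal-consistency coefficient as Cronbach's alpha. Both statistics are common choices assumed here for illustration; the summary itself does not name them. The function names and all scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` holds one list per test item; each inner list has one
    score per respondent, in the same respondent order.
    """
    k = len(items)
    # Total score per respondent, summed across all items.
    totals = [sum(scores) for scores in zip(*items)]
    sum_item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


# Hypothetical data: five people take the same test twice.
first_administration = [10, 12, 15, 18, 20]
second_administration = [11, 12, 14, 19, 20]
test_retest_r = pearson_r(first_administration, second_administration)

# Hypothetical data: three items answered by the same five people.
item_scores = [
    [3, 4, 2, 5, 4],
    [3, 5, 2, 4, 4],
    [2, 4, 3, 5, 5],
]
alpha = cronbach_alpha(item_scores)

print(f"test-retest r = {test_retest_r:.2f}")
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values near 1 on either coefficient indicate consistent scores. Neither one says anything about validity, which needs separate evidence.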