CuPy is an open-source array library that provides a NumPy-compatible API on NVIDIA GPUs, making it easy for Python users to write CPU/GPU-agnostic code. In the benchmark shown here it outperforms NumPy on large arrays (about 6x faster at 10^8 elements), and it supports a wide range of data types and advanced indexing. Recent updates include improved Windows and AMD GPU support, along with new functions and features for memory management and custom kernel creation.

![CuPy: A NumPy-Compatible Library for NVIDIA GPU

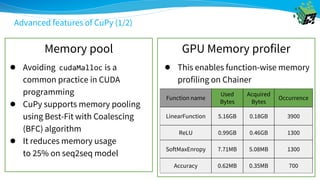

● NumPy is extensively used in Python but GPU is not supported

● GPU is getting faster and more important for scientific computing

import numpy as np

x_cpu = np.random.rand(10)

W_cpu = np.random.rand(10, 5)

y_cpu = np.dot(x_cpu, W_cpu)

import cupy as cp

x_gpu = cp.random.rand(10)

W_gpu = cp.random.rand(10, 5)

y_gpu = cp.dot(x_gpu, W_gpu)

y_gpu = cp.asarray(y_cpu)

y_cpu = cp.asnumpy(y_gpu)

for xp in [numpy, cupy]:

x = xp.random.rand(10)

W = xp.random.rand(10, 5)

y = xp.dot(x, W)

CPU/GPU-agnostic

NVIDIA GPUCPU](https://image.slidesharecdn.com/pycon2018cupy-180511165505/85/CuPy-A-NumPy-compatible-Library-for-GPU-5-320.jpg)
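The CPU/GPU-agnostic pattern on this slide can also be written as a function that picks the array module from its inputs. The following is a minimal sketch, not code from the slides: `get_xp`, `affine`, and `to_host` are hypothetical helper names, and the fallback to NumPy when CuPy cannot be imported is an assumption added so the example runs on a machine without a GPU. `cupy.get_array_module` is CuPy's own helper for this purpose.

```python
import numpy as np

try:
    import cupy as cp  # optional: only available with a CUDA-capable setup
except ImportError:
    cp = None


def get_xp(*arrays):
    """Return the array module (numpy or cupy) that matches the inputs."""
    if cp is not None:
        # Returns cupy if any argument is a cupy.ndarray, otherwise numpy.
        return cp.get_array_module(*arrays)
    return np


def affine(x, W):
    """Compute x @ W on whichever device the inputs live on."""
    xp = get_xp(x, W)
    return xp.dot(x, W)


def to_host(a):
    """Return a NumPy array, copying from the GPU if needed."""
    if cp is not None:
        return cp.asnumpy(a)
    return np.asarray(a)


# CPU usage; pass cupy arrays instead to run the same function on the GPU.
y = affine(np.ones(10), np.ones((10, 5)))
print(to_host(y))
```

Dispatching on the inputs rather than on a global flag keeps the function usable for CPU and GPU callers in the same process.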

![Performance comparison with NumPy

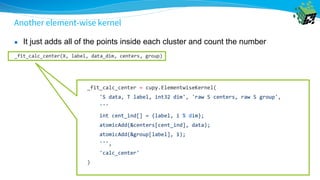

● CuPy is faster than NumPy even in simple manipulation of large matrix

Benchmark code

Size CuPy [ms] NumPy [ms]

10^4 0.58 0.03

10^5 0.97 0.20

10^6 1.84 2.00

10^7 12.48 55.55

10^8 84.73 517.17

Benchmark result

6x faster](https://image.slidesharecdn.com/pycon2018cupy-180511165505/85/CuPy-A-NumPy-compatible-Library-for-GPU-20-320.jpg)
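The benchmark code itself is not part of this transcript, so the following is only a rough sketch of how such a size sweep could be timed. The benchmarked operation (`a * 2.0 + 1.0` on a float32 array), the helper name `bench_ms`, and the repeat count are all assumptions, not the authors' benchmark. The one CuPy-specific point is that GPU kernels launch asynchronously, so the device has to be synchronized before reading the clock.

```python
import time

import numpy as np


def bench_ms(xp, size, repeat=10, sync=None):
    """Average time in milliseconds of one element-wise pass over `size` elements.

    xp   -- array module (numpy, or cupy on a GPU machine)
    sync -- optional callable that waits for pending device work to finish
    """
    a = xp.arange(size, dtype=xp.float32)
    if sync is not None:
        sync()  # make sure array creation has finished before timing
    start = time.perf_counter()
    for _ in range(repeat):
        b = a * 2.0 + 1.0  # assumed workload: a simple element-wise operation
    if sync is not None:
        sync()  # wait for the GPU before stopping the clock
    return (time.perf_counter() - start) / repeat * 1e3


for exponent in range(4, 7):
    n = 10 ** exponent
    print(f"10^{exponent}: NumPy {bench_ms(np, n):.3f} ms")

# On a machine with CuPy installed, the same function can time the GPU:
#   import cupy as cp
#   bench_ms(cp, n, sync=cp.cuda.Stream.null.synchronize)
```

Timings from a sketch like this depend on the hardware and the operation chosen, so they should not be expected to match the table above.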

![Looks very easy?

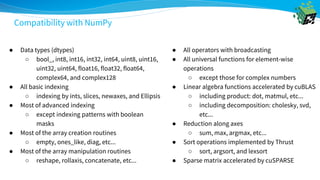

● CUDA and its libraries are not designed for Python nor NumPy

━ CuPy is not just a wrapper of CUDA libraries for Python

━ CuPy is a fast numerical computation library on GPU with NumPy-compatible API

● NumPy specification is not documented

━ We have carefully investigated some unexpected behaviors of NumPy

━ CuPy tries to replicate NumPy’s behavior as much as possible

● NumPy’s behaviors vary between different versions

━ e.g, NumPy v1.14 changed the output format of __str__

• `[ 0. 1.]` -> `[0. 1.]` (no space)](https://image.slidesharecdn.com/pycon2018cupy-180511165505/85/CuPy-A-NumPy-compatible-Library-for-GPU-24-320.jpg)
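The `__str__` change mentioned above can be observed in a current NumPy install, because NumPy keeps the old formatting available through the `legacy="1.13"` print option. This is a small illustrative sketch, assuming NumPy 1.15 or later for the `np.printoptions` context manager; it is not taken from the slides.

```python
import numpy as np

a = np.array([0.0, 1.0])

# Current formatting (NumPy >= 1.14): no padding space after the bracket.
modern = str(a)

# Legacy formatting: reproduces the pre-1.14 output, which reserves a
# leading space for the sign of each element.
with np.printoptions(legacy="1.13"):
    legacy = str(a)

print(repr(modern))
print(repr(legacy))
```

A library that aims for NumPy compatibility has to decide which of these behaviors to follow, which is the kind of version-dependent detail the slide refers to.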