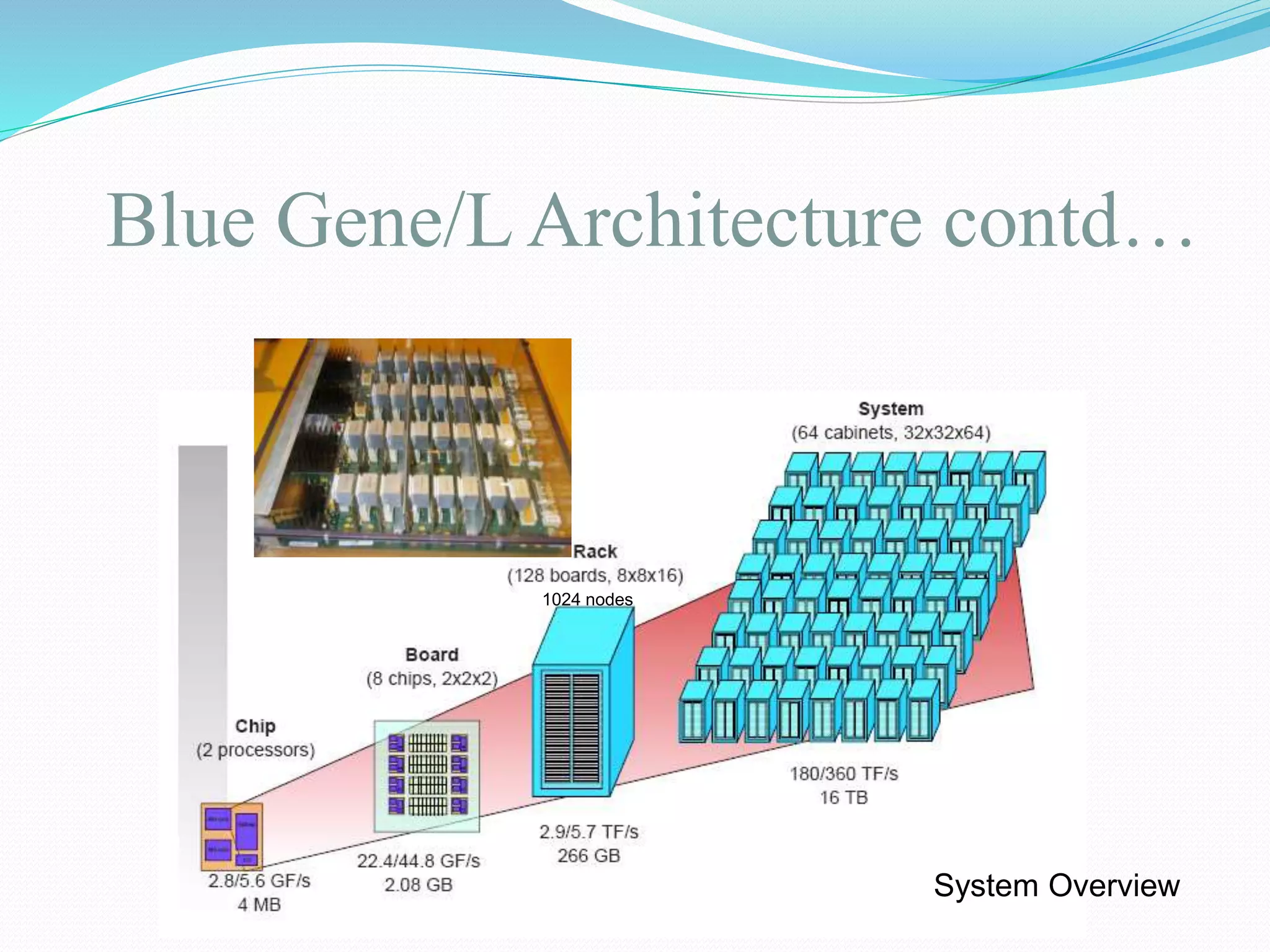

Blue Gene is a massively parallel supercomputer architecture developed by IBM and built from embedded PowerPC processors. The name combines "Blue," from IBM's corporate color, with "Gene," from the project's intended use in computational biology, particularly protein folding. Projects under the Blue Gene name include Blue Gene/L, Blue Gene/C, Blue Gene/P, and Blue Gene/Q. Blue Gene/L, the first in the series, set performance records by achieving over 280 teraflops with a large number of low-power nodes connected by a 3D torus network. The architecture keeps power consumption low by relying on many efficiency-oriented embedded processors rather than on raw per-processor speed.
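To make the 3D torus idea concrete, here is a minimal sketch of how nearest neighbors can be computed in such a network: each node has a coordinate (x, y, z), and links wrap around at the edges, so every node has six neighbors. The function name `torus_neighbors` and the 4×4×4 dimensions are illustrative assumptions, not taken from any Blue Gene software or configuration.

```python
def torus_neighbors(coord, dims):
    """Return the six nearest neighbors of a node in a 3D torus.

    coord: (x, y, z) position of the node.
    dims:  (X, Y, Z) size of the torus along each axis (hypothetical values).
    """
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            # The modulo wraps the coordinate around the edge of the torus.
            n[axis] = (n[axis] + step) % dims[axis]
            neighbors.append(tuple(n))
    return neighbors


# A corner node in an assumed 4x4x4 torus still has six neighbors,
# because the links wrap around to the opposite face.
print(torus_neighbors((0, 0, 0), (4, 4, 4)))
```

Because of the wraparound, no node sits on a boundary, so every node sees the same local topology regardless of where it is in the machine.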