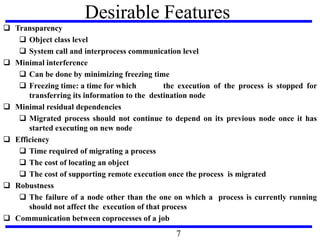

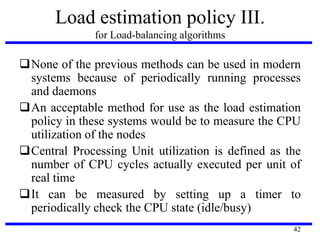

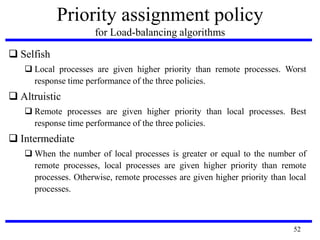

This document covers process and resource management in distributed systems, focusing on process migration, scheduling algorithms, and load-balancing approaches. It describes process migration mechanisms, distinguishing preemptive from non-preemptive migration, and lists desirable features such as transparency, efficiency, and robustness. It also discusses scheduling techniques that aim to optimize resource allocation while accounting for fairness, stability, and fault tolerance.
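
To make the distinction between the two migration types concrete, here is a minimal sketch in Python. It assumes the usual textbook reading: non-preemptive migration picks a node before a process starts executing, while preemptive migration moves a process that is already running. The names `Process`, `Node`, `place_non_preemptive`, `migrate_preemptive`, and `rebalance`, the load metric (number of resident processes), and the `threshold` parameter are all hypothetical and chosen for illustration; they are not taken from the document.

```python
from dataclasses import dataclass, field


@dataclass
class Process:
    pid: int
    started: bool = False  # True once the process has begun executing


@dataclass
class Node:
    name: str
    processes: list = field(default_factory=list)

    @property
    def load(self) -> int:
        # Assumed load metric: number of processes resident on the node.
        return len(self.processes)


def place_non_preemptive(proc: Process, nodes: list) -> Node:
    """Pick the least loaded node for a process that has not started yet."""
    if proc.started:
        raise ValueError("non-preemptive placement applies only before execution")
    target = min(nodes, key=lambda n: n.load)
    target.processes.append(proc)
    proc.started = True
    return target


def migrate_preemptive(proc: Process, source: Node, target: Node) -> None:
    """Move an already running process from source to target."""
    if not proc.started:
        raise ValueError("preemptive migration applies only to running processes")
    source.processes.remove(proc)
    # A real system would freeze the process, transfer its state, and
    # resume it on the target; this sketch only updates the bookkeeping.
    target.processes.append(proc)


def rebalance(nodes: list, threshold: int = 1) -> bool:
    """Move one process from the busiest node to the least loaded node
    when their load difference exceeds the threshold."""
    busiest = max(nodes, key=lambda n: n.load)
    idlest = min(nodes, key=lambda n: n.load)
    if busiest.load - idlest.load > threshold:
        migrate_preemptive(busiest.processes[-1], busiest, idlest)
        return True
    return False
```

The threshold in `rebalance` is one simple way to address the stability concern mentioned above: without it, two nodes with nearly equal load could pass the same process back and forth indefinitely.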