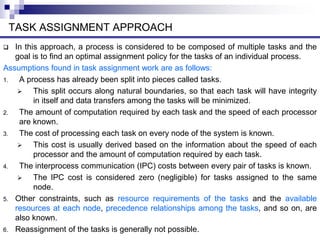

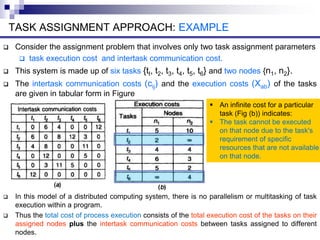

This document discusses three approaches to resource management in distributed systems: task assignment, load balancing, and load sharing. The task assignment approach treats each process as a collection of tasks and schedules those tasks across nodes to improve performance. The load balancing approach distributes processes across nodes so that workloads are roughly equal. The load sharing approach has a more modest goal: it only ensures that no node sits idle while processes wait for service at other nodes. In all three cases, effective resource management requires algorithms that make decisions quickly and with minimal overhead while optimizing resource usage and response times.
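
The difference between load balancing and load sharing can be illustrated with a minimal sketch. It is not taken from the document and rests on simplifying assumptions: each node's load is just its process count, each node runs one process at a time (so any count above 1 means a process is waiting), and a central decision maker sees every node's load. The function names `balance` and `share` are hypothetical.

```python
from typing import List


def balance(loads: List[int]) -> List[int]:
    """Load balancing (sketch): move one process at a time from the
    busiest node to the least busy node until loads differ by at most 1."""
    loads = list(loads)  # work on a copy; do not mutate the caller's list
    while max(loads) - min(loads) > 1:
        src = loads.index(max(loads))  # busiest node
        dst = loads.index(min(loads))  # least busy node
        loads[src] -= 1
        loads[dst] += 1
    return loads


def share(loads: List[int]) -> List[int]:
    """Load sharing (sketch): transfer a process only while some node is
    idle (load 0) and another node has a waiting process (load > 1)."""
    loads = list(loads)
    while 0 in loads and max(loads) > 1:
        src = loads.index(max(loads))  # node with waiting processes
        dst = loads.index(0)           # idle node
        loads[src] -= 1
        loads[dst] += 1
    return loads


if __name__ == "__main__":
    initial = [6, 0, 1, 1]
    print("balanced:", balance(initial))
    print("shared:  ", share(initial))
```

Under these assumptions, starting from loads `[6, 0, 1, 1]`, balancing performs four transfers to equalize every node, while sharing stops after a single transfer because no node is idle any longer. This is one way to see why load sharing tends to incur less overhead than full load balancing.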