This document covers several data mining and data warehousing techniques, including:

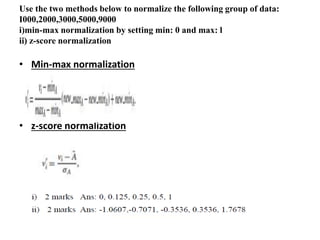

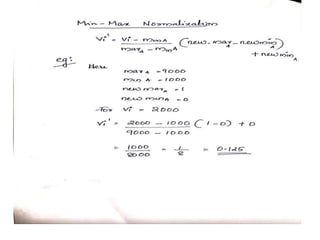

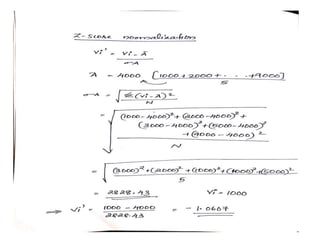

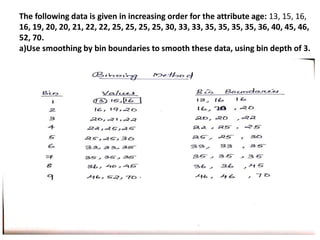

1. Normalization methods and binning for data preprocessing

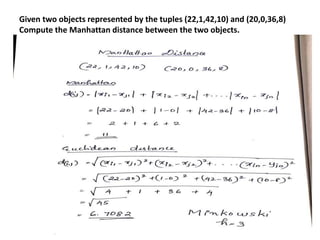

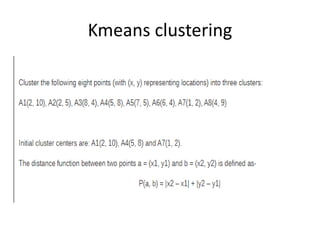

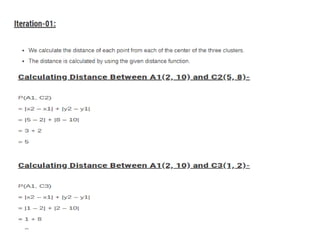

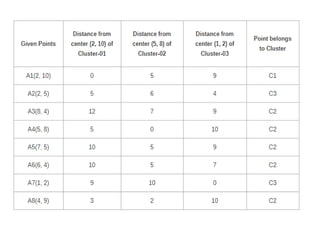

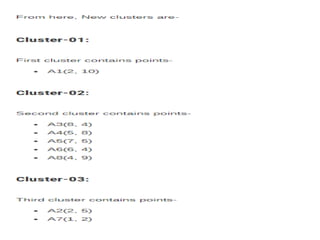

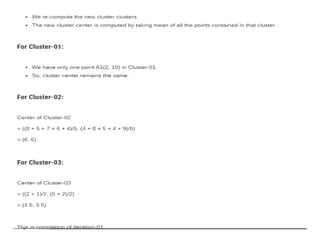

2. Computing Manhattan distance between objects

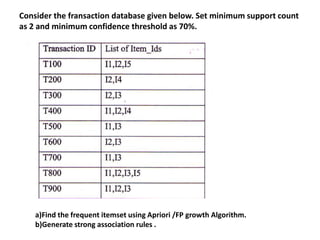

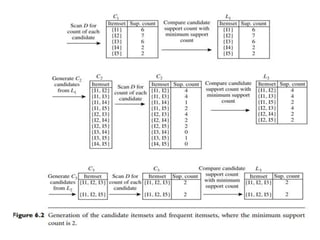

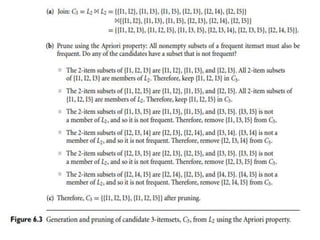

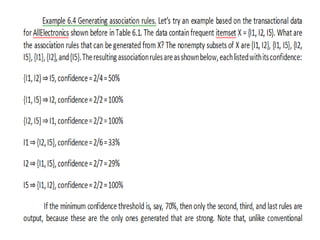

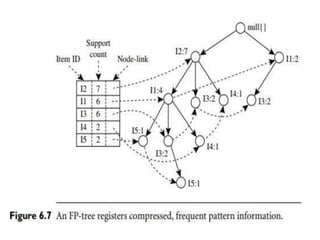

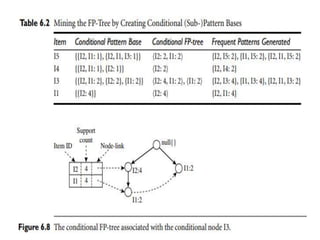

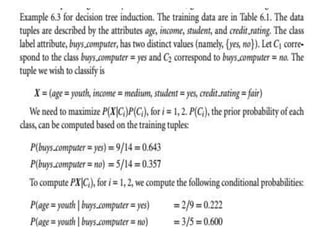

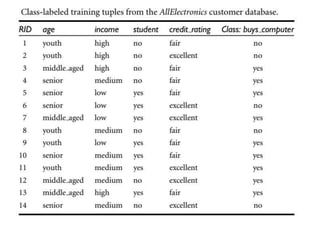

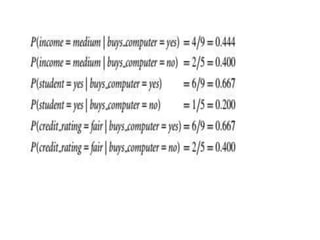

3. Using the Apriori and FP-growth algorithms to find frequent itemsets and generate association rules
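
The techniques listed above can be illustrated with a minimal Python sketch. It is not taken from the document itself: the function names (`min_max`, `z_score`, `smooth_by_bin_means`, `manhattan`, `apriori`) and all sample values are assumptions chosen for illustration. The binning shown is equal-depth binning with smoothing by bin means, and only Apriori is sketched for frequent itemsets (FP-growth finds the same itemsets but works from a prefix tree instead of generating candidates).

```python
from itertools import combinations


def min_max(values, new_min=0.0, new_max=1.0):
    """Min-max normalization: rescale values linearly into [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant attribute: map everything to new_min
        return [new_min for _ in values]
    return [(v - lo) / (hi - lo) * (new_max - new_min) + new_min for v in values]


def z_score(values):
    """Z-score normalization using the population standard deviation."""
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    if std == 0:  # constant attribute: every z-score is 0
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def smooth_by_bin_means(values, n_bins):
    """Equal-depth binning, then replace each value by its bin mean.

    Assumes len(values) is divisible by n_bins (a simplification).
    """
    ordered = sorted(values)
    size = len(ordered) // n_bins
    bins = [ordered[i * size:(i + 1) * size] for i in range(n_bins)]
    return [[sum(b) / len(b)] * len(b) for b in bins]


def manhattan(a, b):
    """Manhattan (L1) distance: sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def apriori(transactions, min_count):
    """Level-wise Apriori: return {itemset: support count} for frequent itemsets."""
    transactions = [frozenset(t) for t in transactions]
    current = {frozenset([i]) for t in transactions for i in t}
    frequent = {}
    k = 1
    while current:
        # Count the support of each candidate k-itemset.
        counts = {c: sum(1 for t in transactions if c <= t) for c in current}
        level = {c: n for c, n in counts.items() if n >= min_count}
        frequent.update(level)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into k-item candidates.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent.
        current = {
            c for c in candidates
            if all(frozenset(s) in level for s in combinations(c, k - 1))
        }
    return frequent
```

For example, `manhattan((1, 2, 3), (4, 0, 3))` sums |1 - 4| + |2 - 0| + |3 - 3| and gives 5. Association rules would then be generated from each frequent itemset by comparing its support count with that of its subsets to obtain confidence, a step this sketch leaves out.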