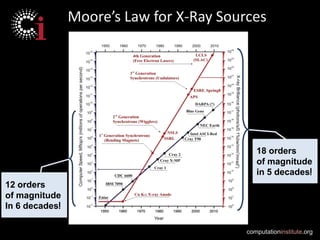

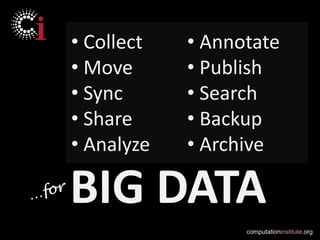

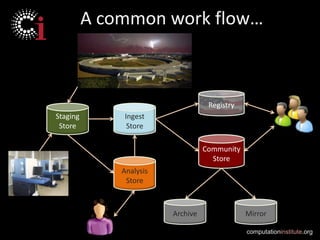

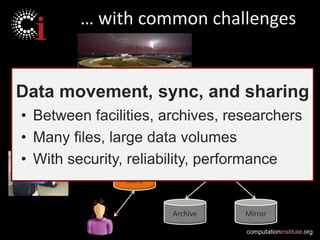

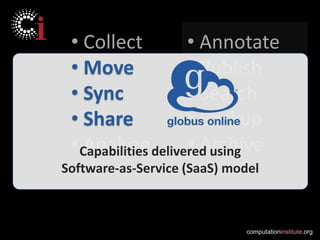

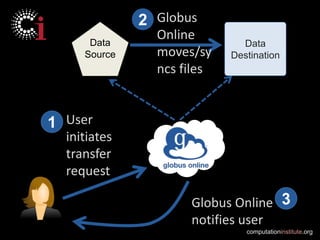

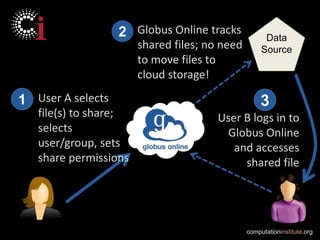

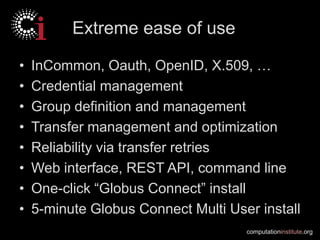

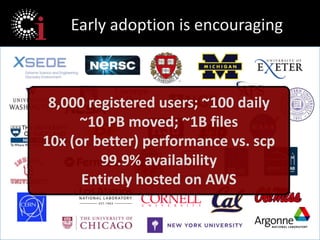

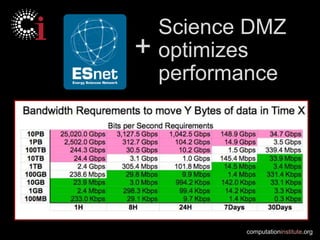

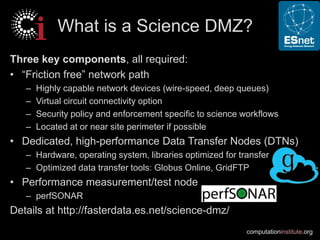

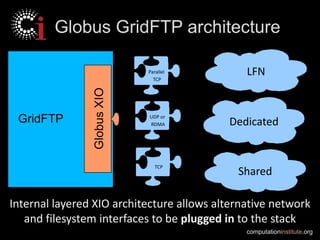

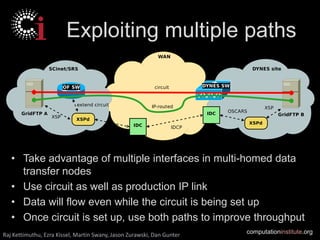

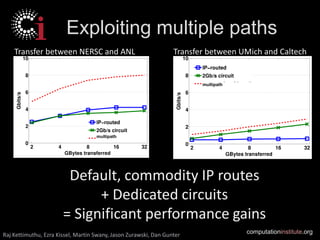

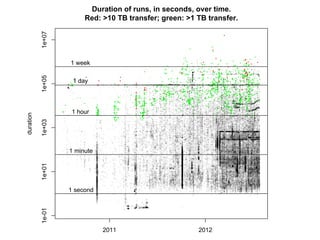

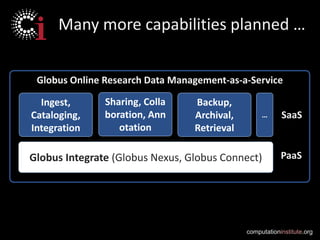

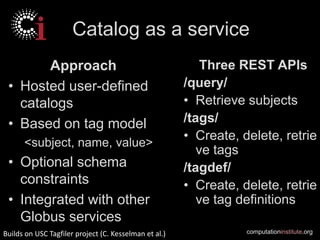

The document describes advances in cyberinfrastructure for research data management, with emphasis on user experience and high-performance infrastructure. It highlights tools such as Globus Online for data transfer and management, and introduces the 'Science DMZ' concept for optimizing network performance for scientific workflows. Overall, it outlines a vision of a 21st-century research ecosystem in which sophisticated data handling is accessible and affordable to a broader audience.