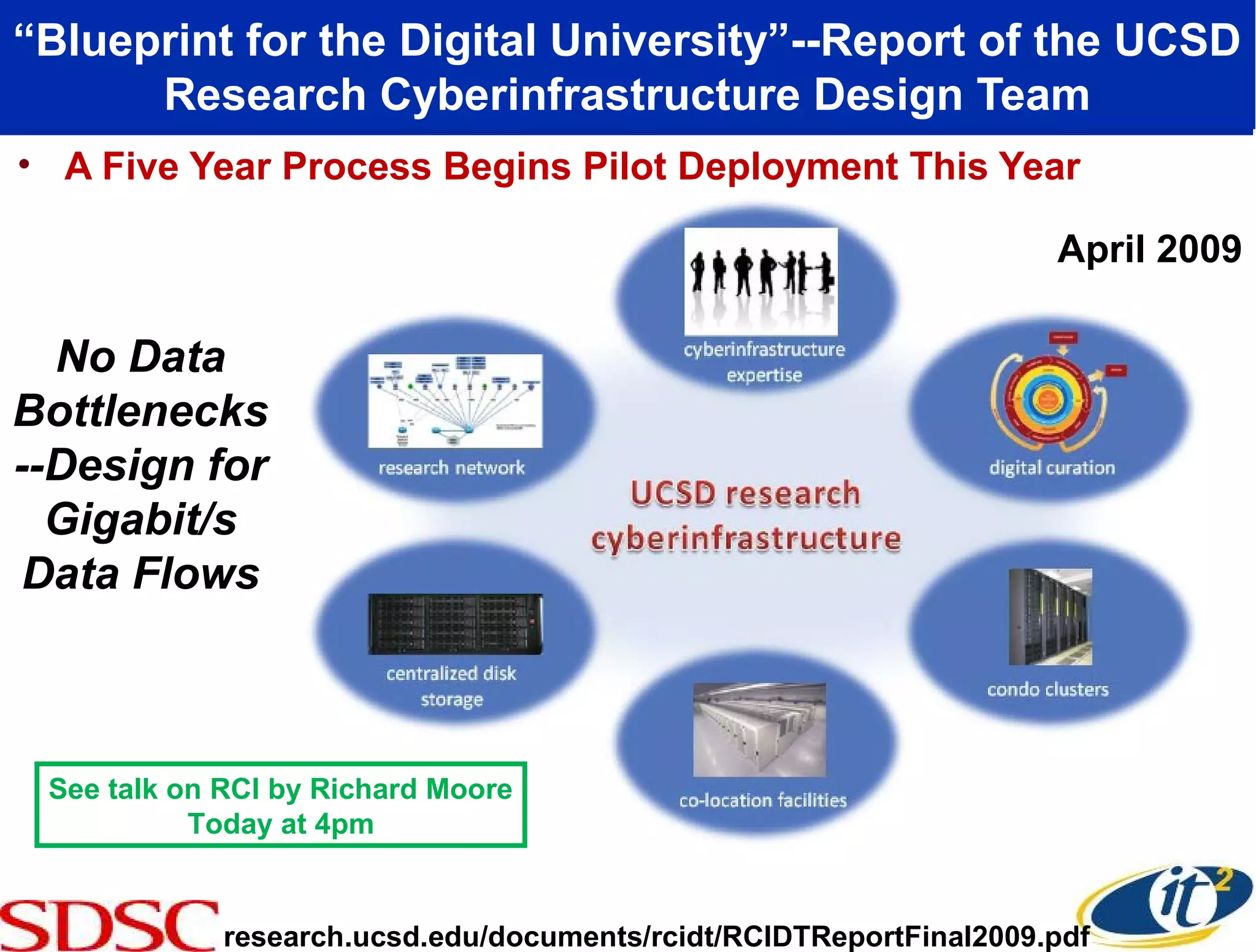

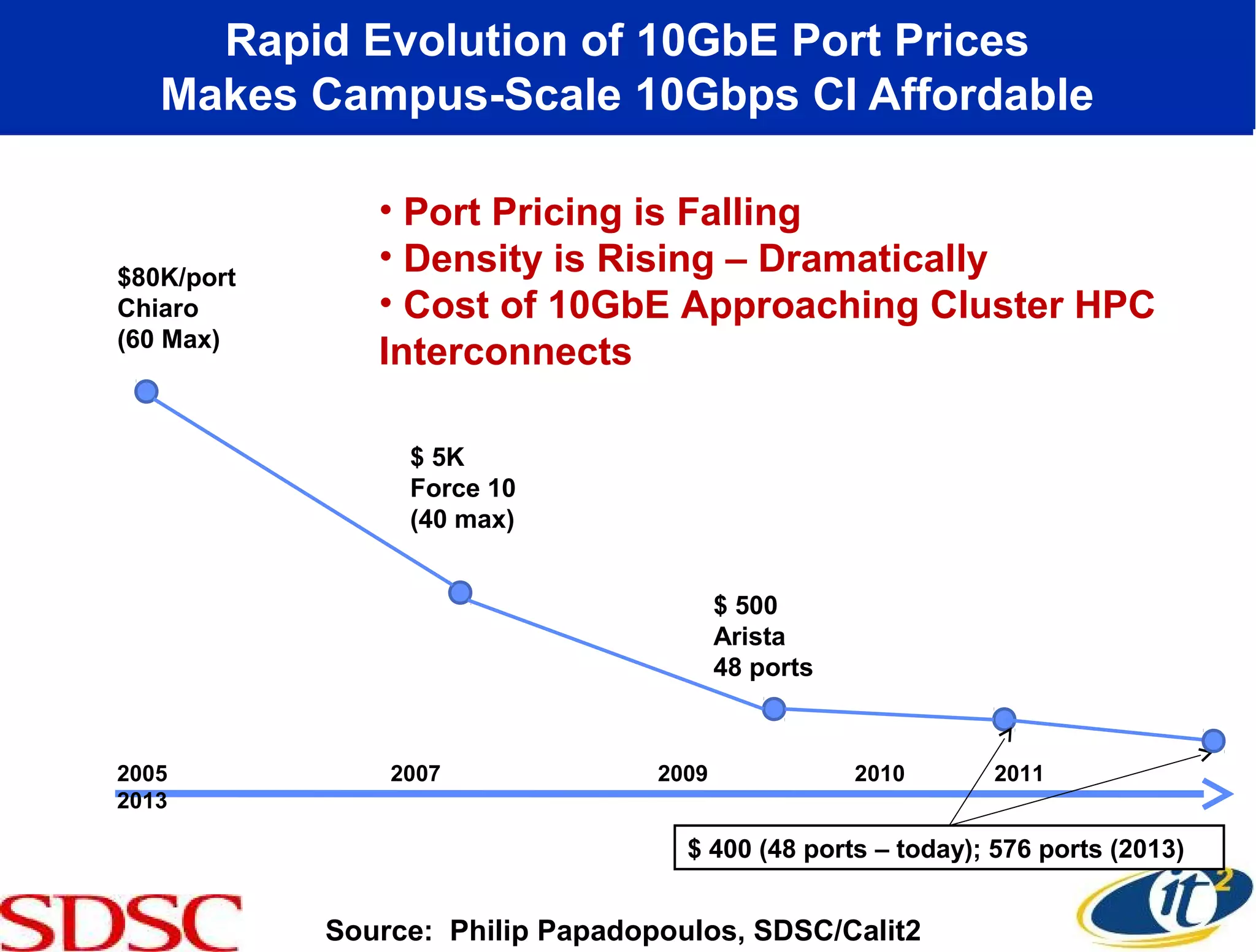

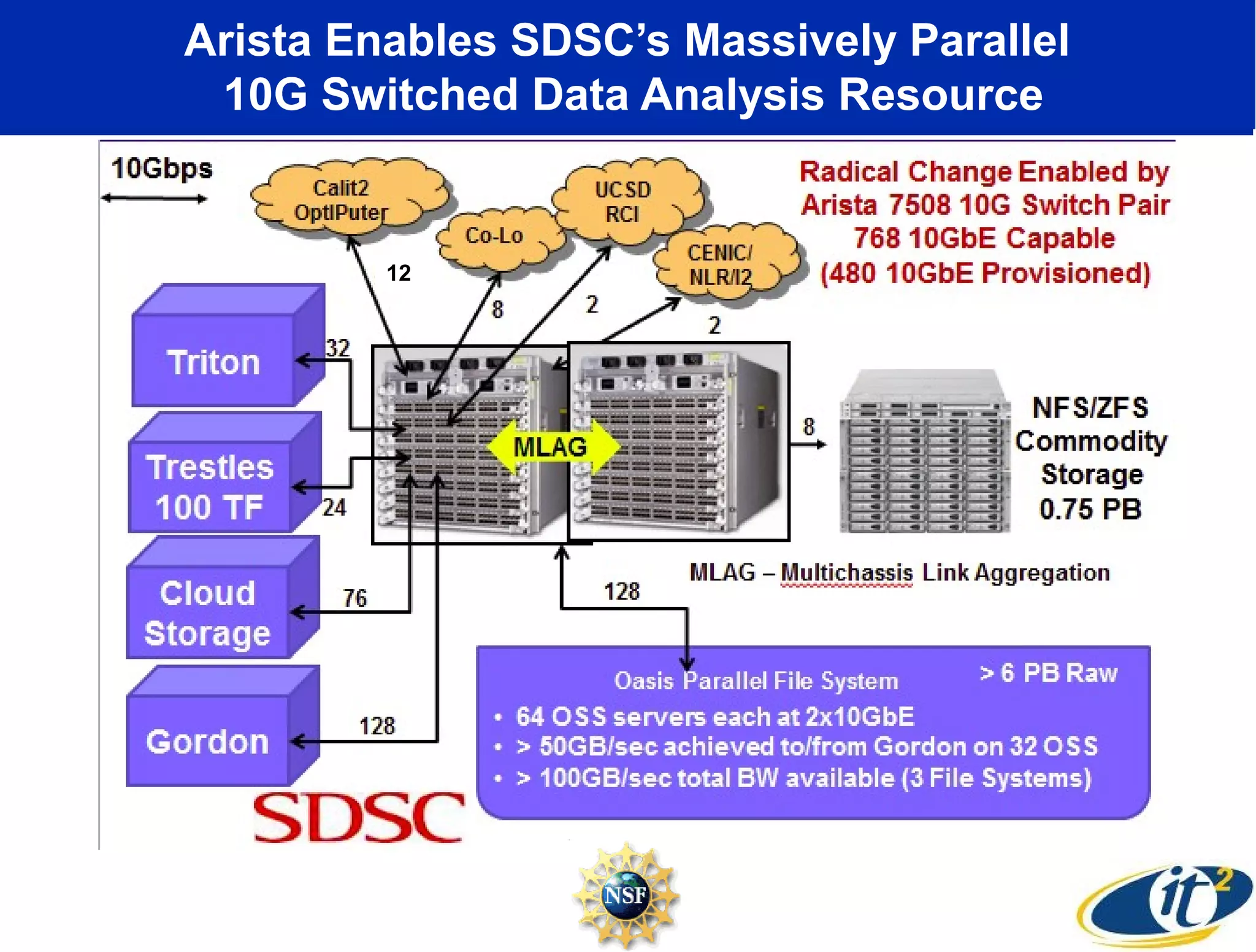

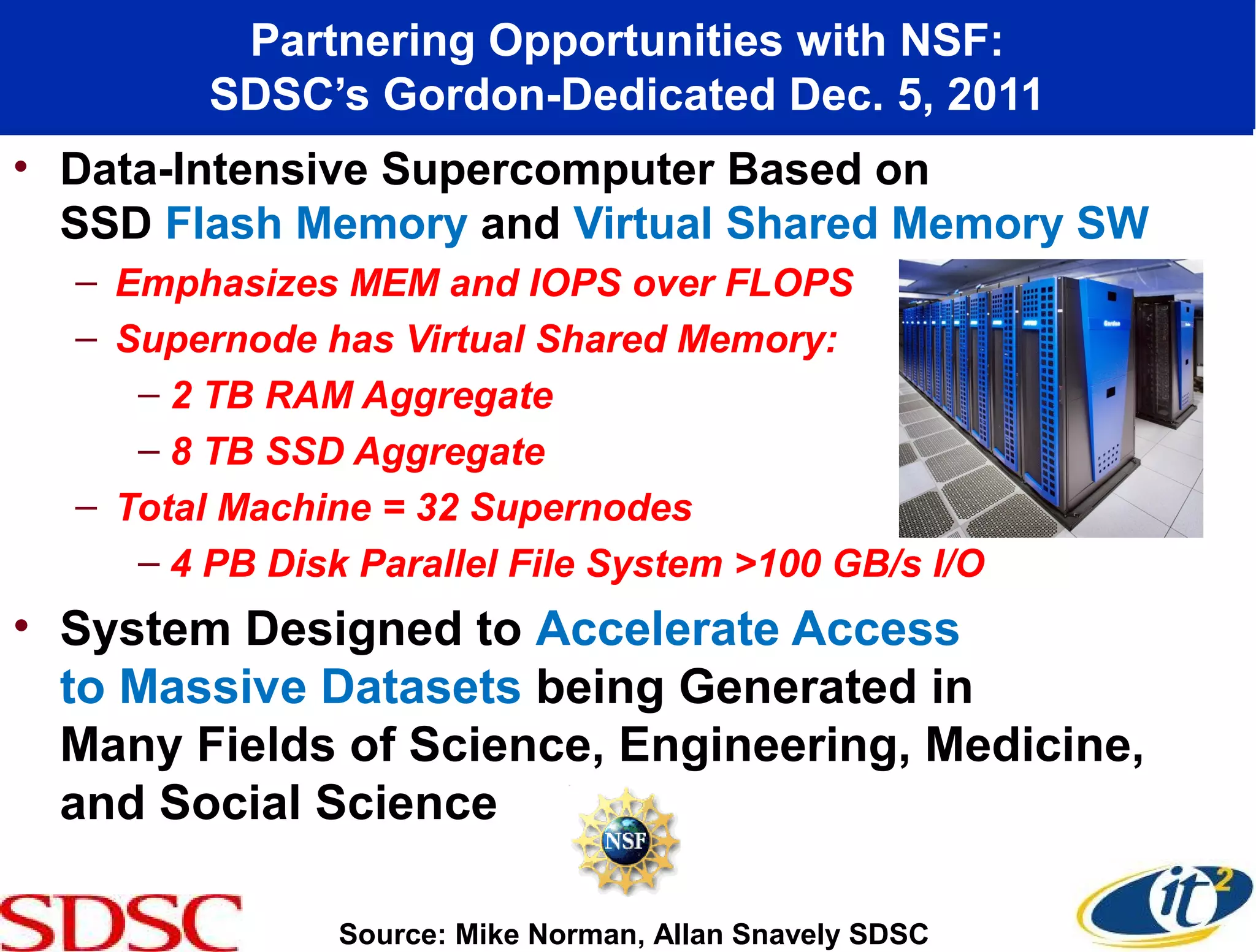

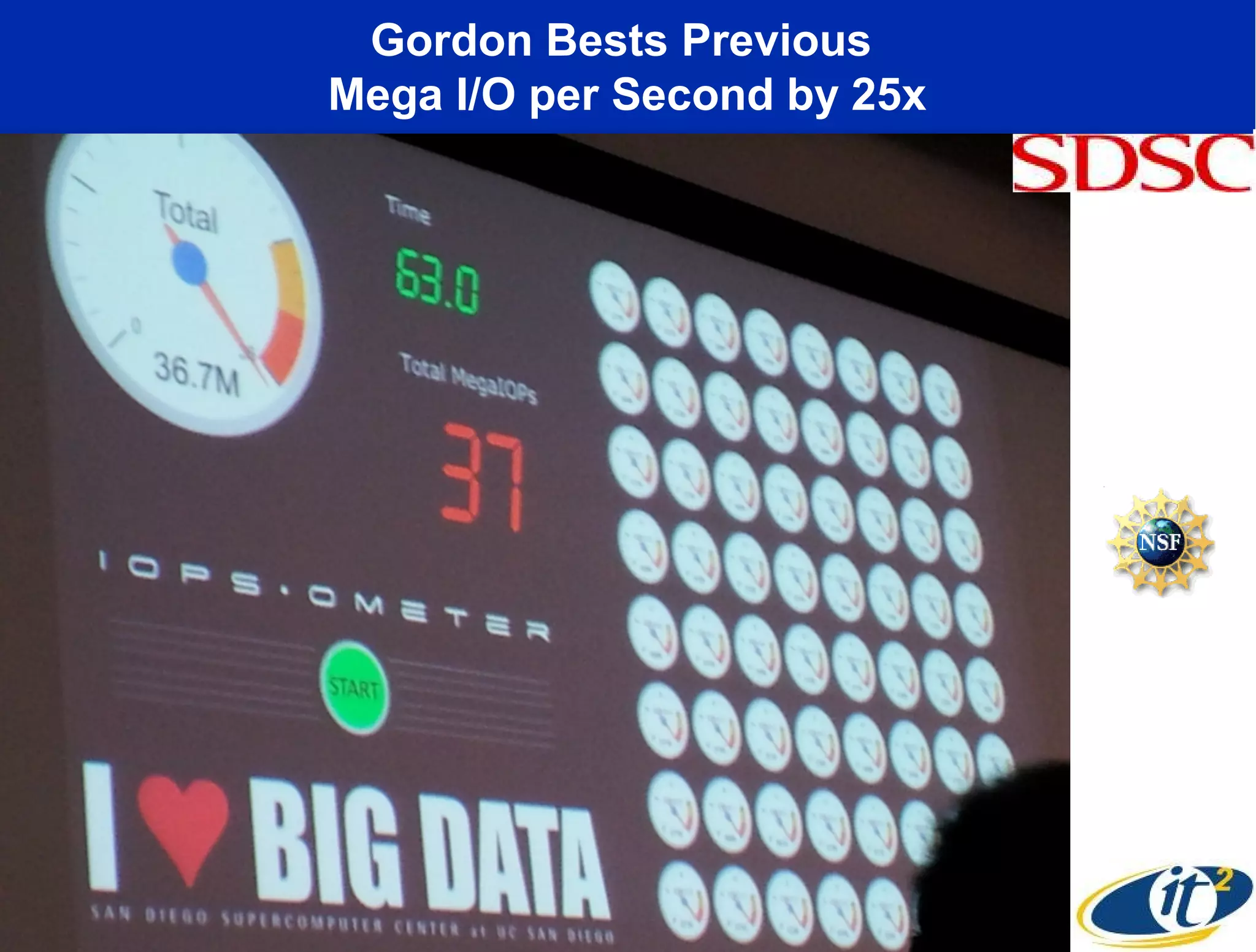

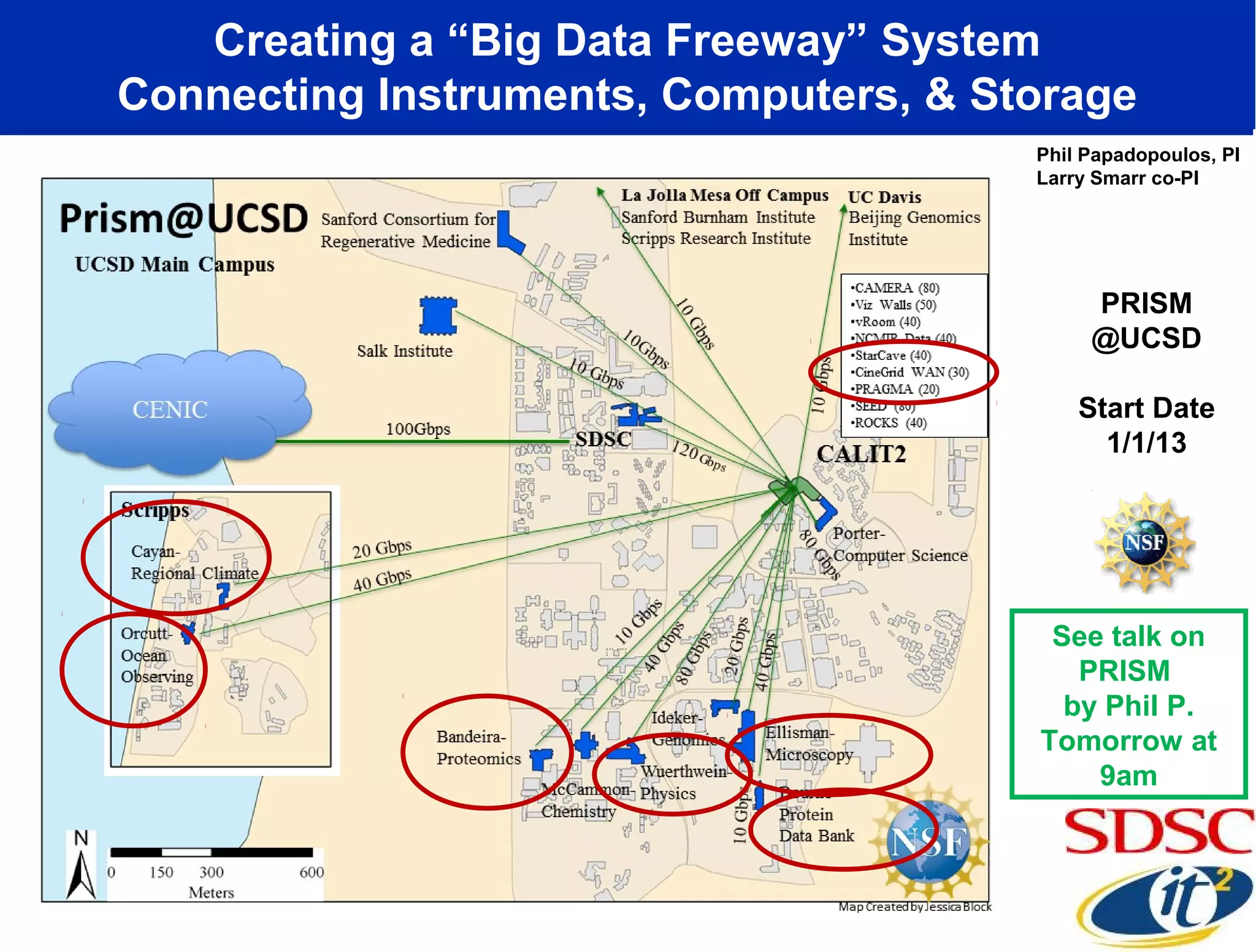

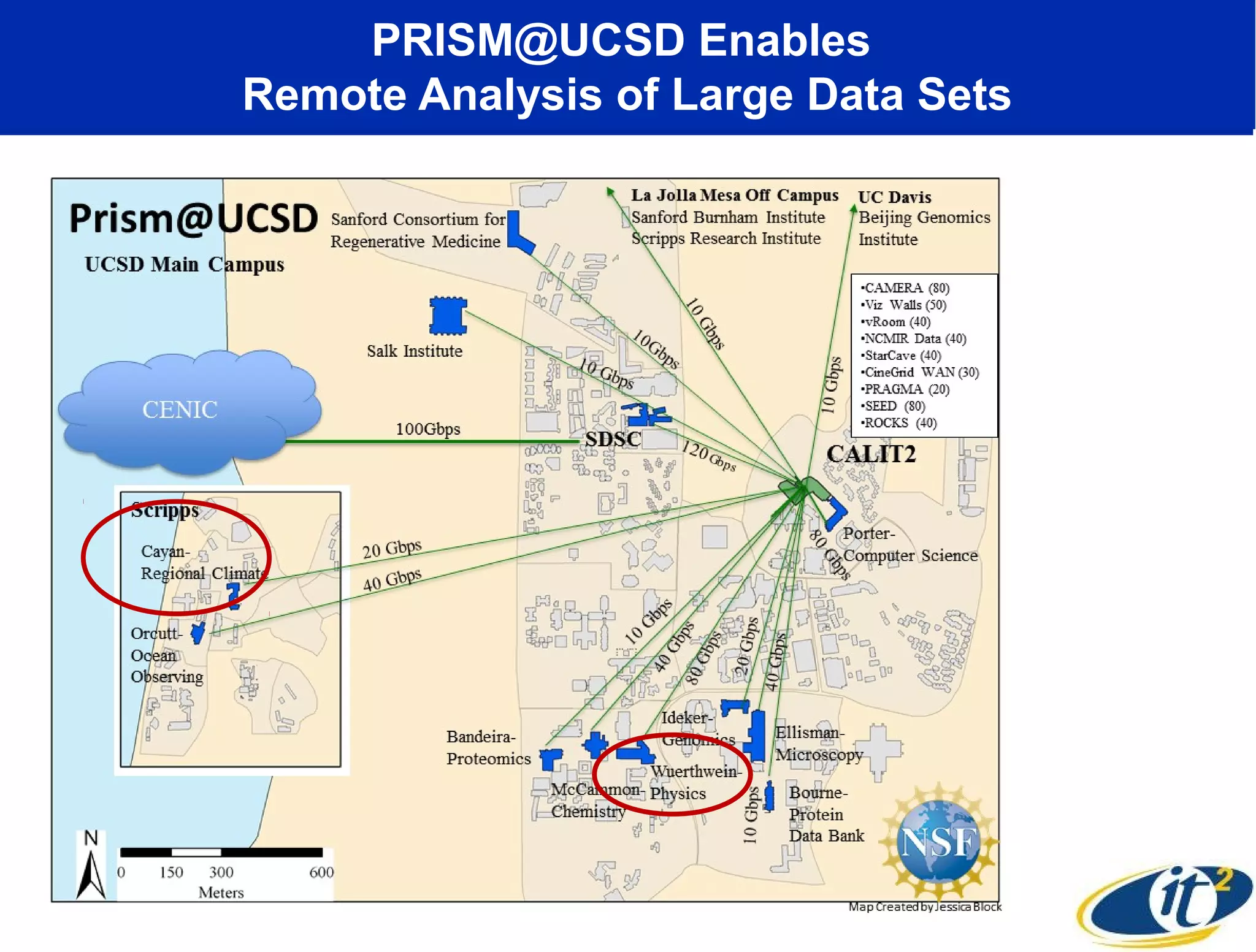

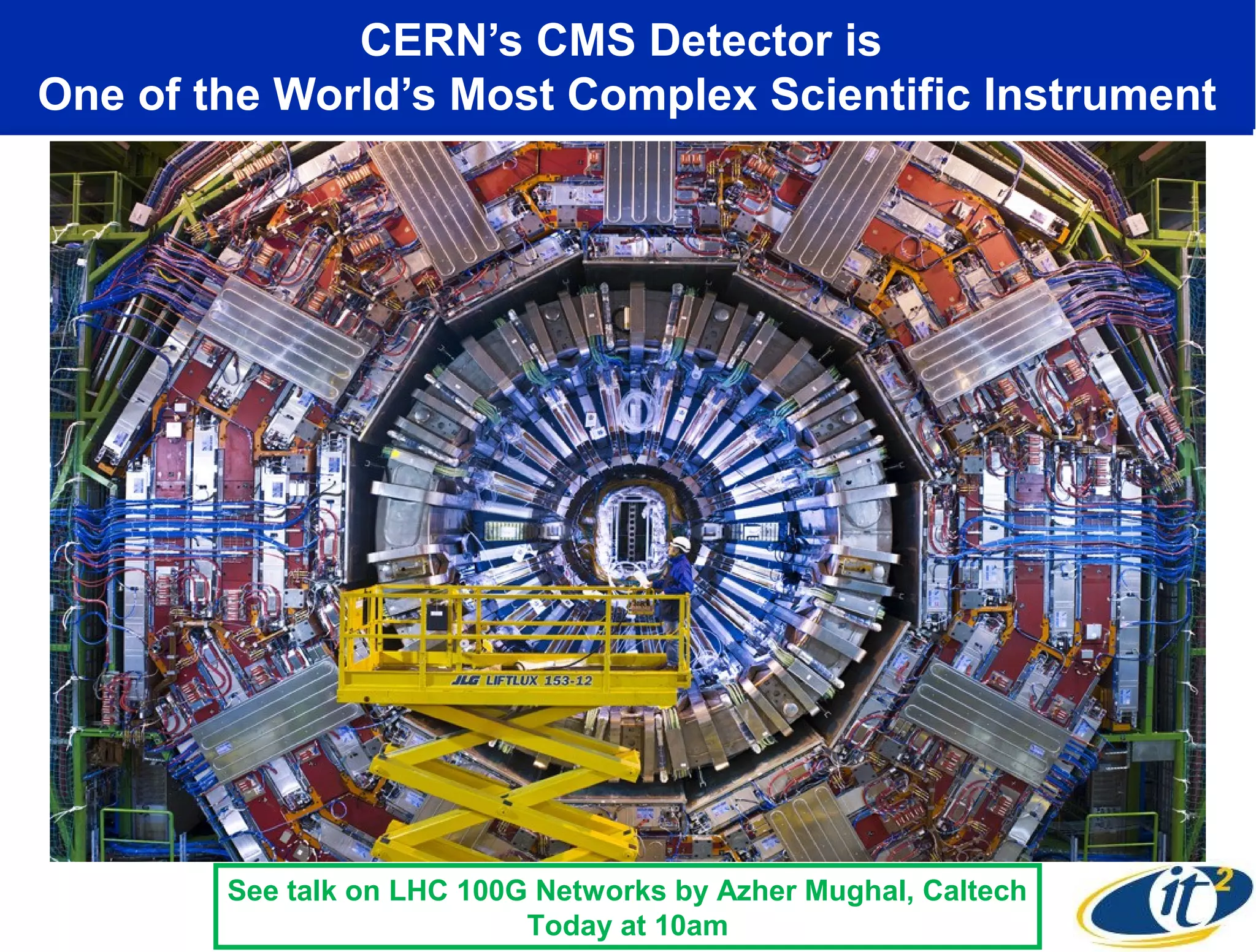

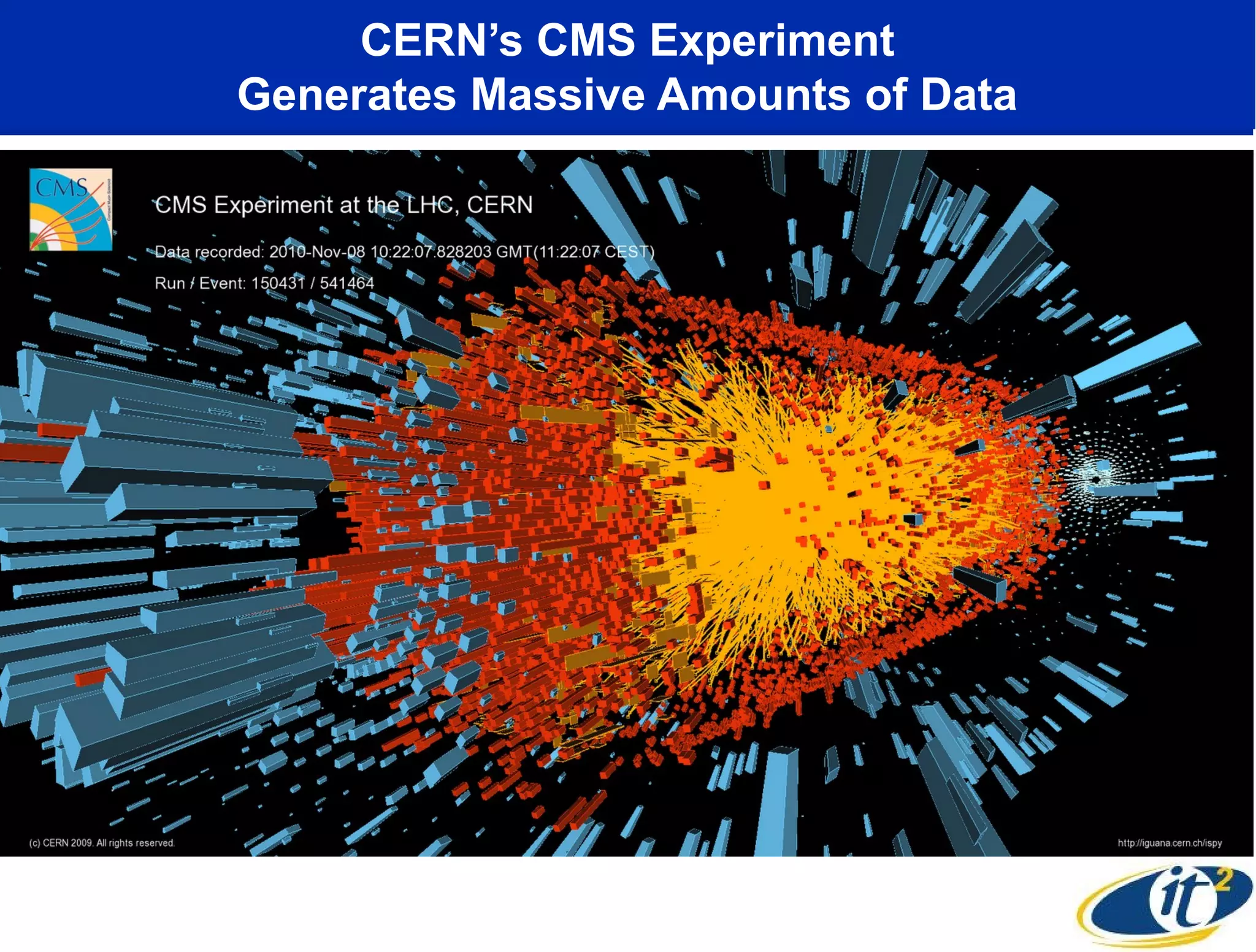

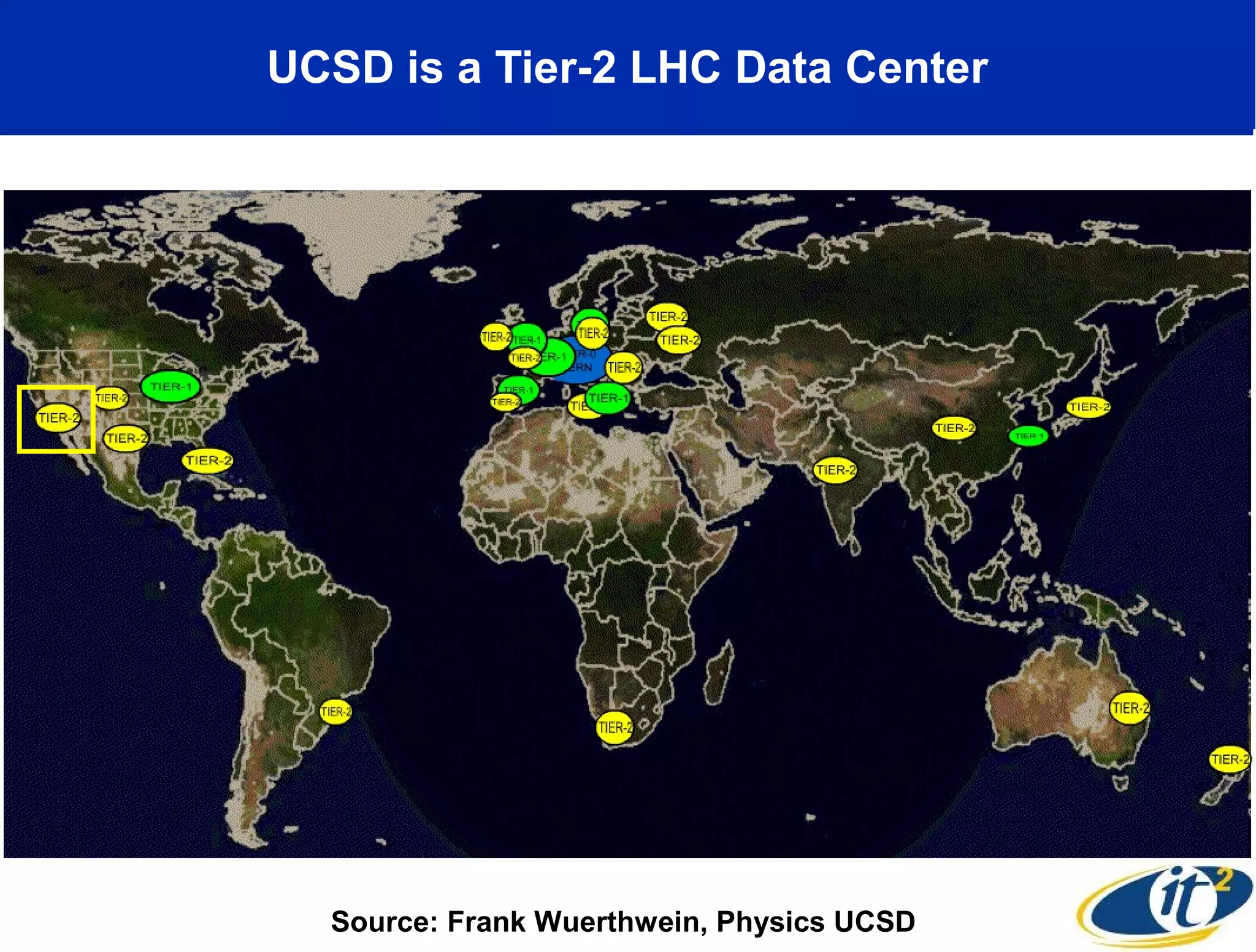

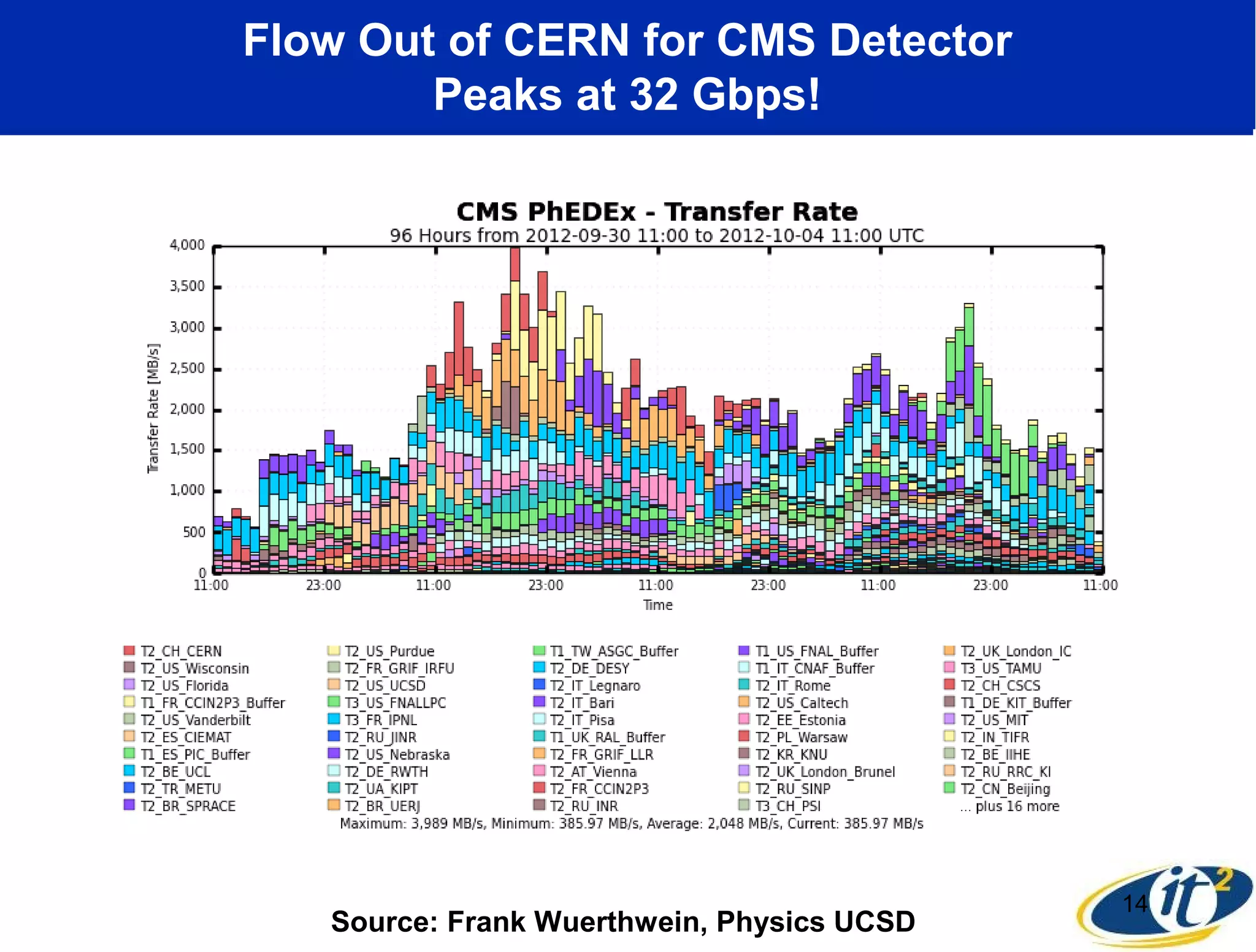

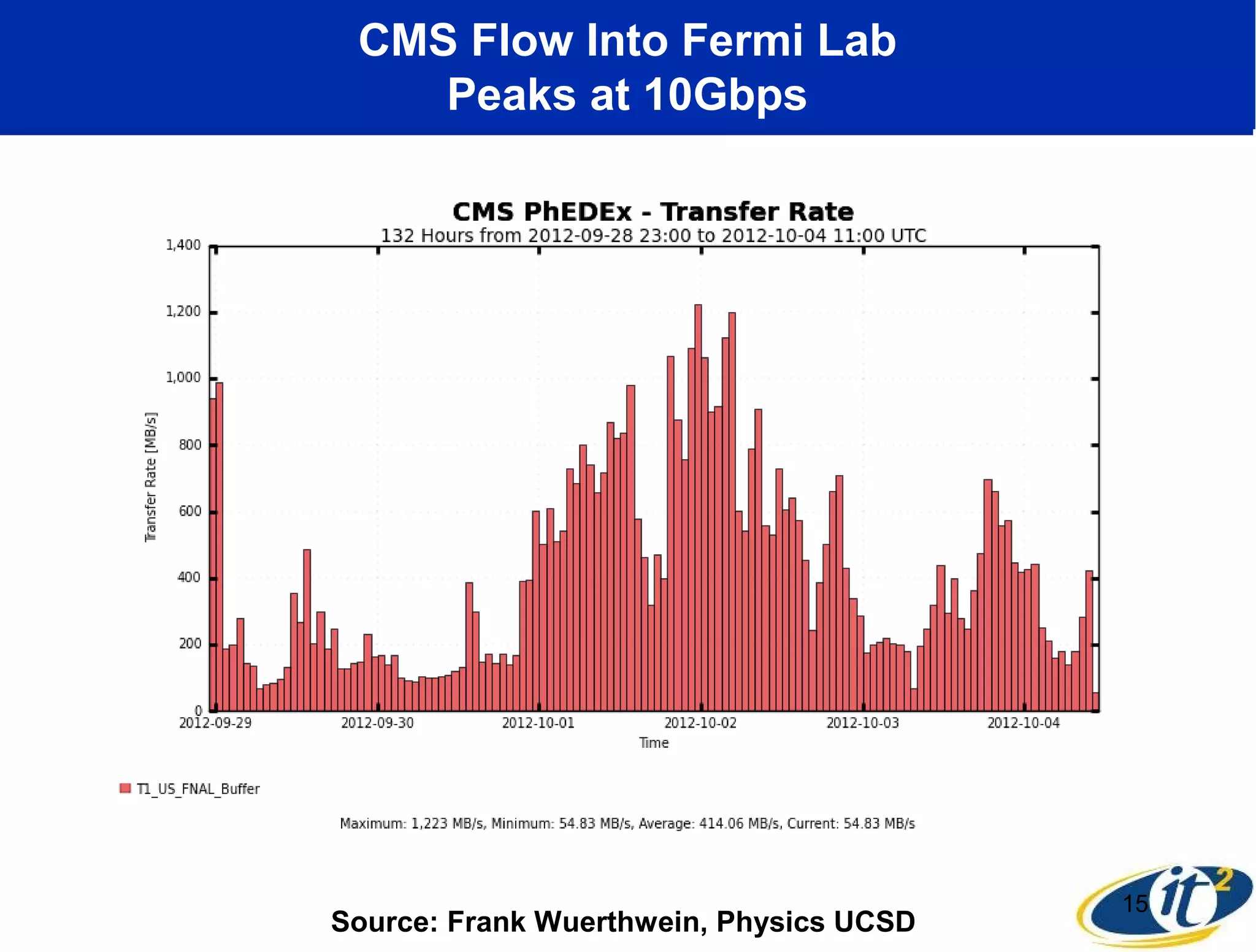

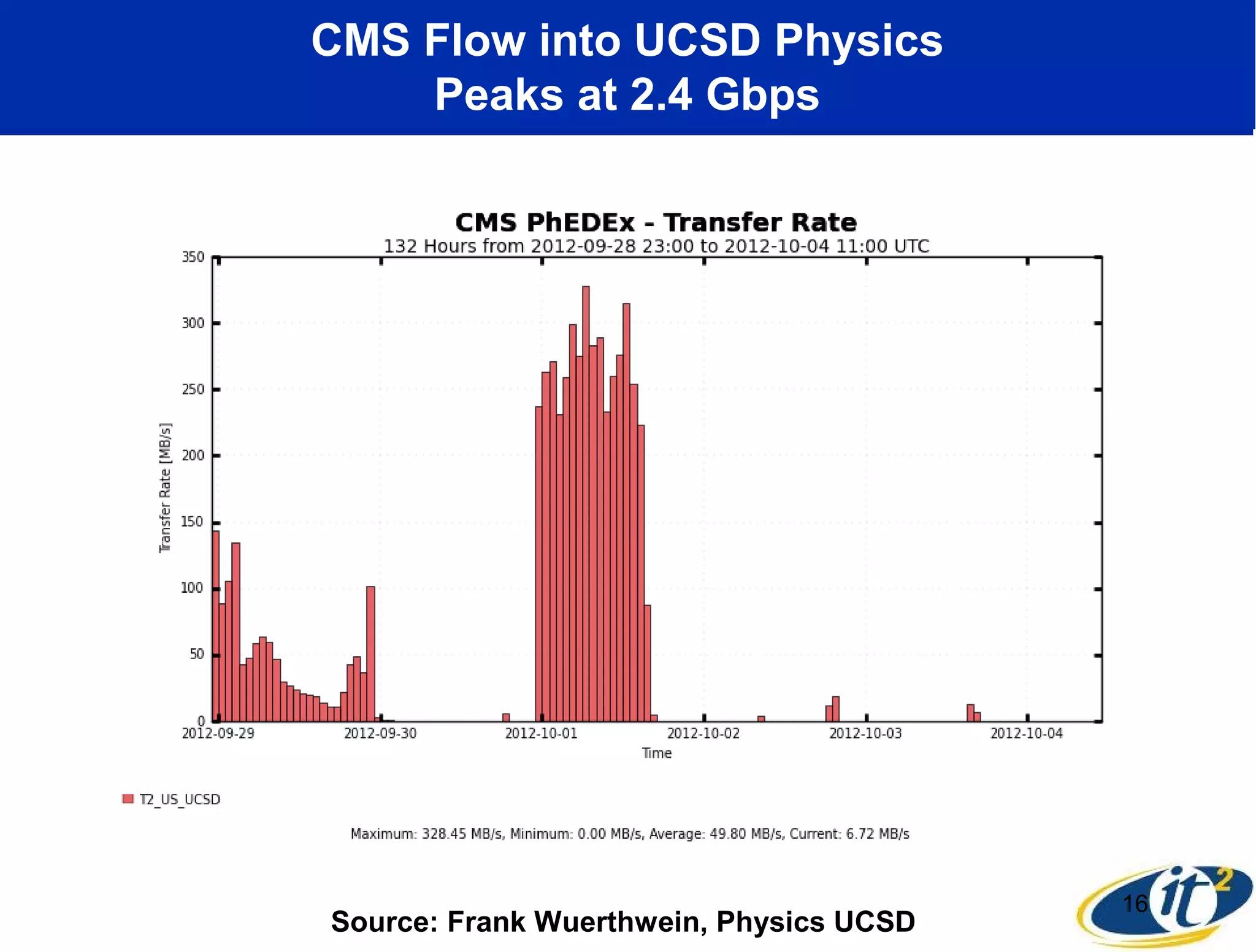

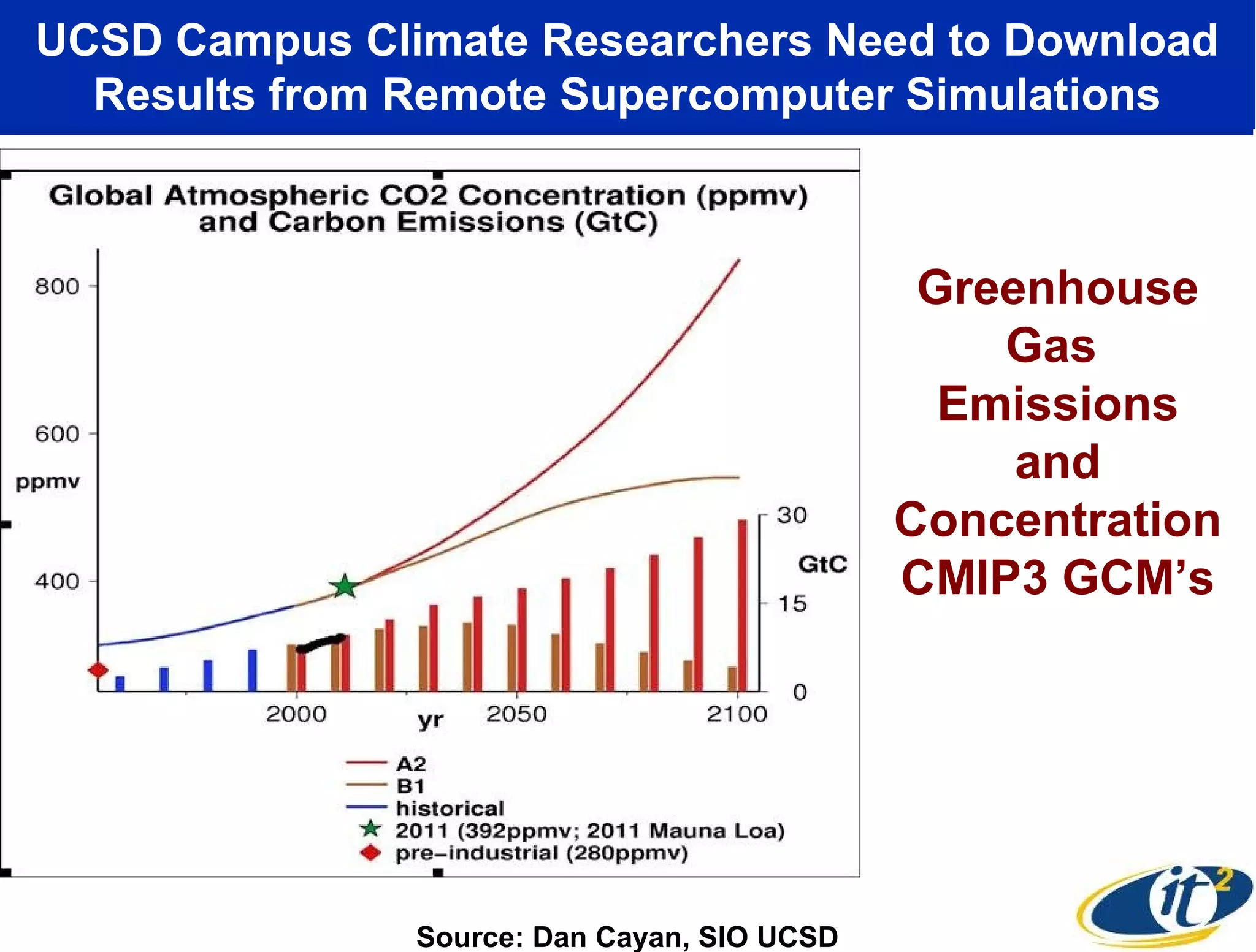

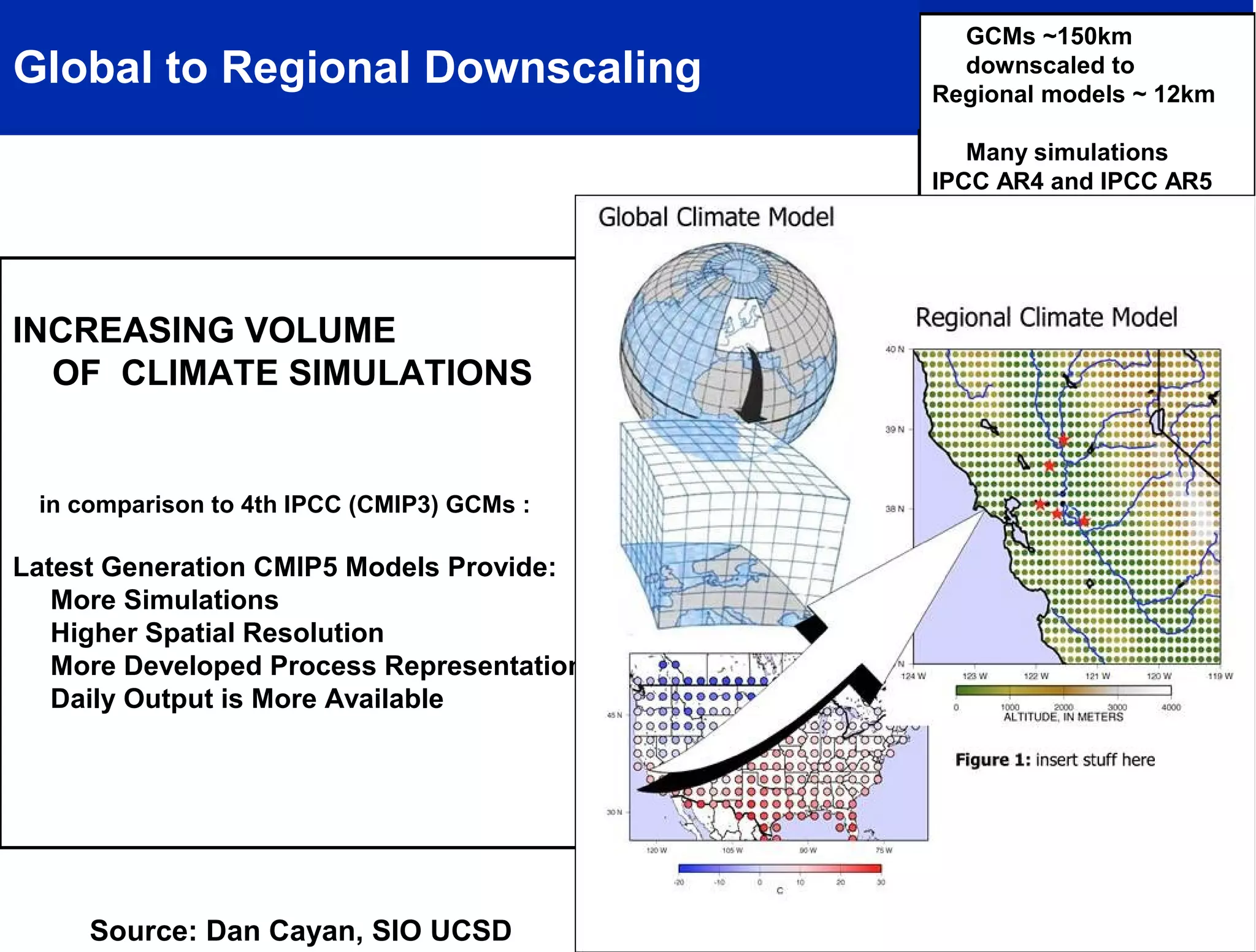

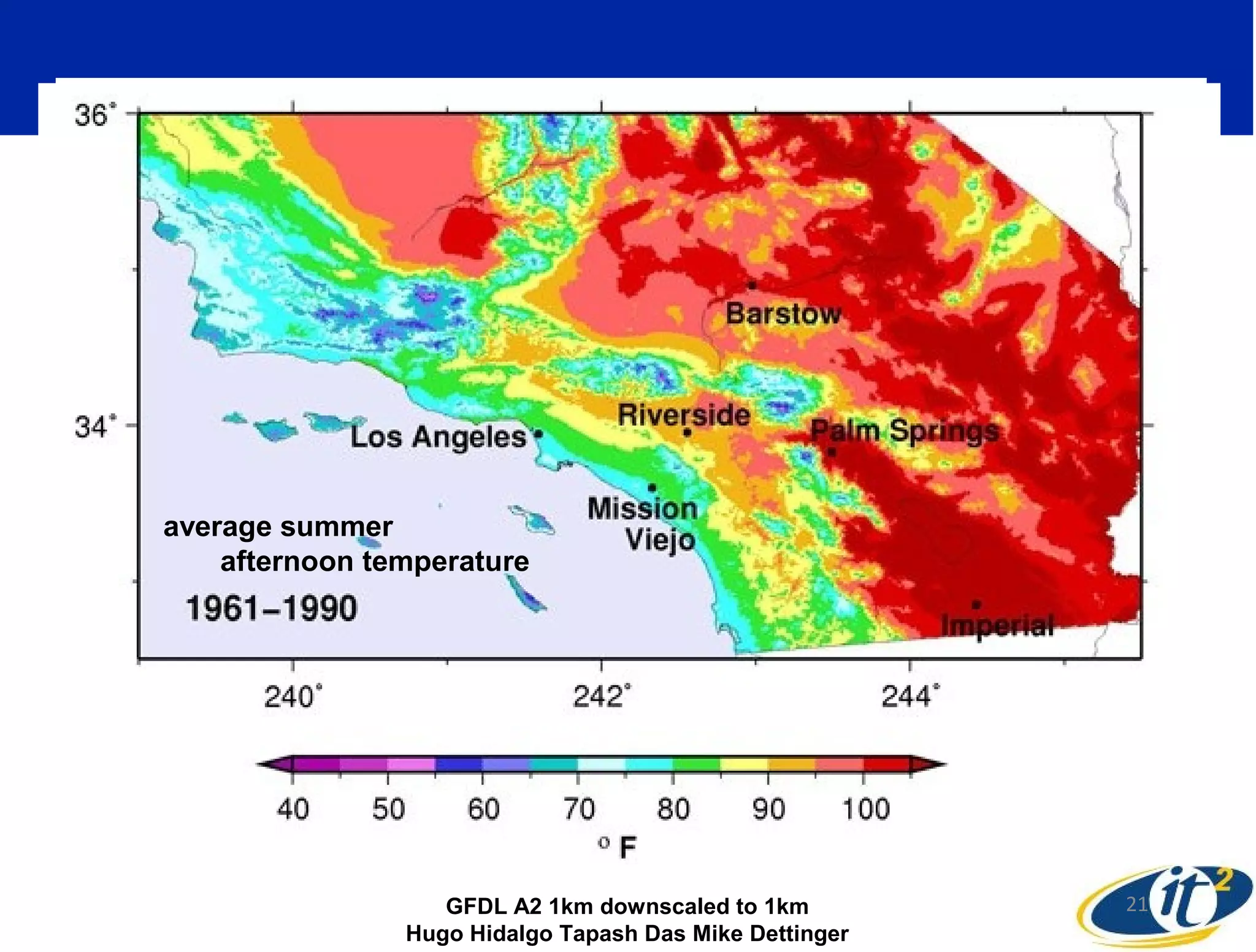

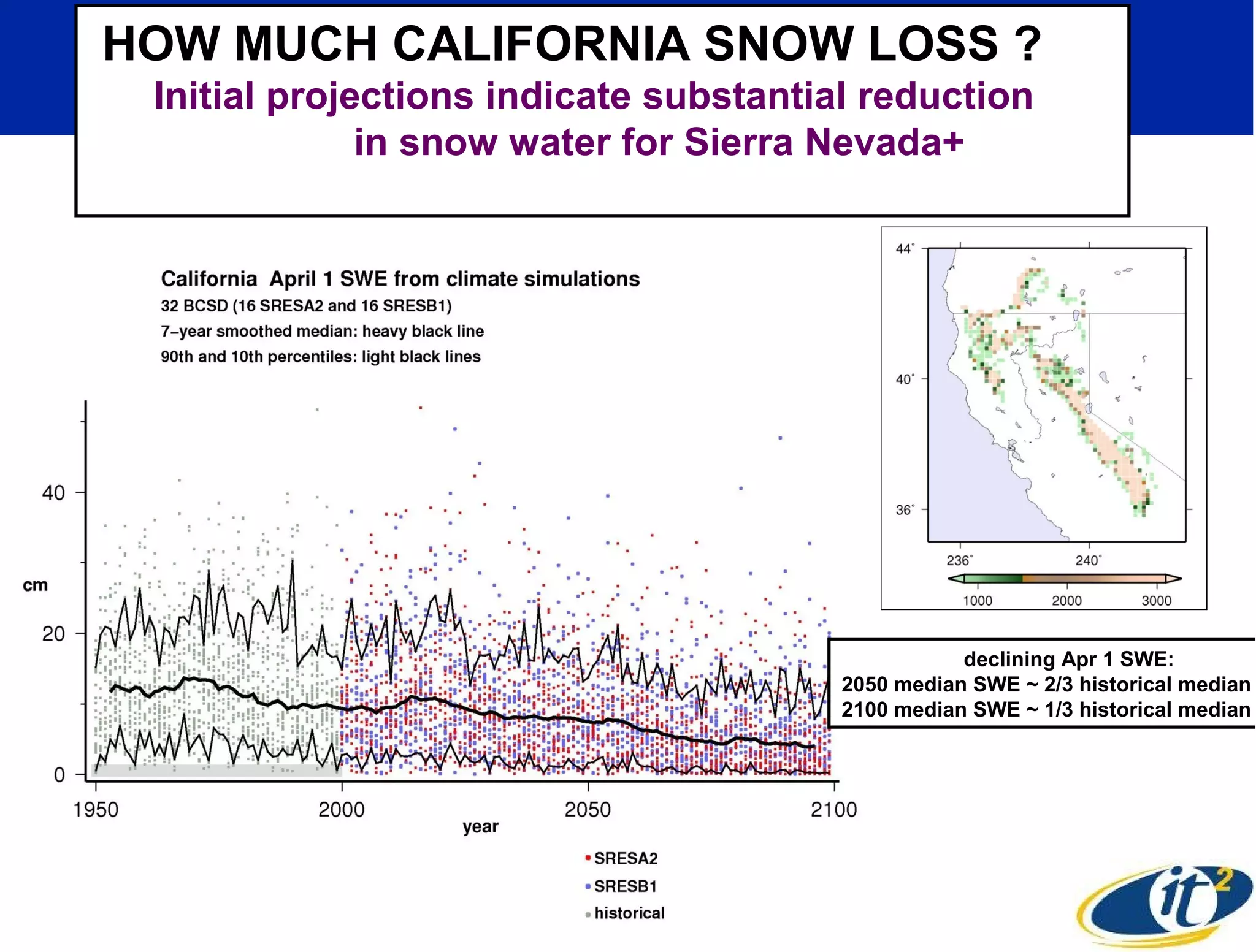

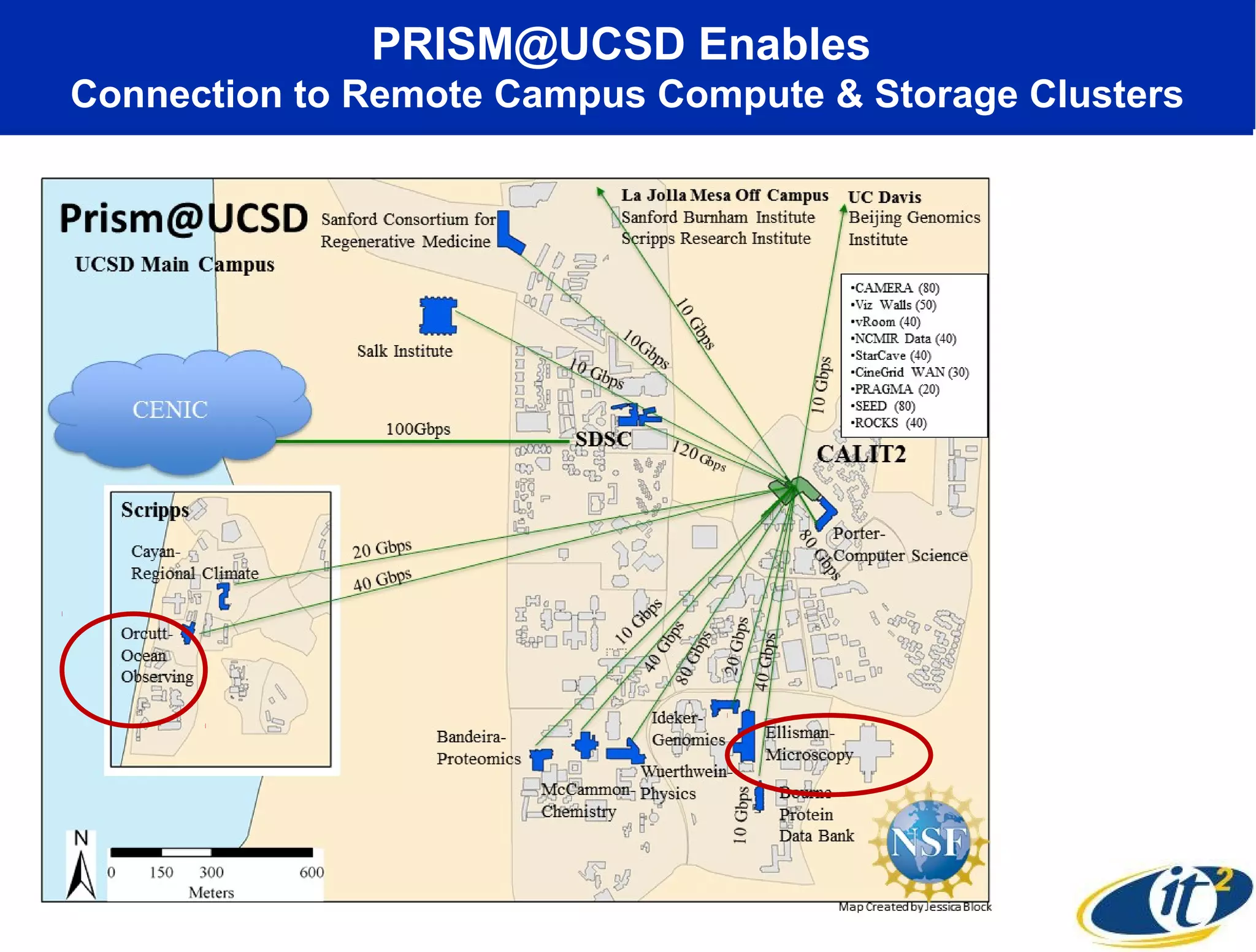

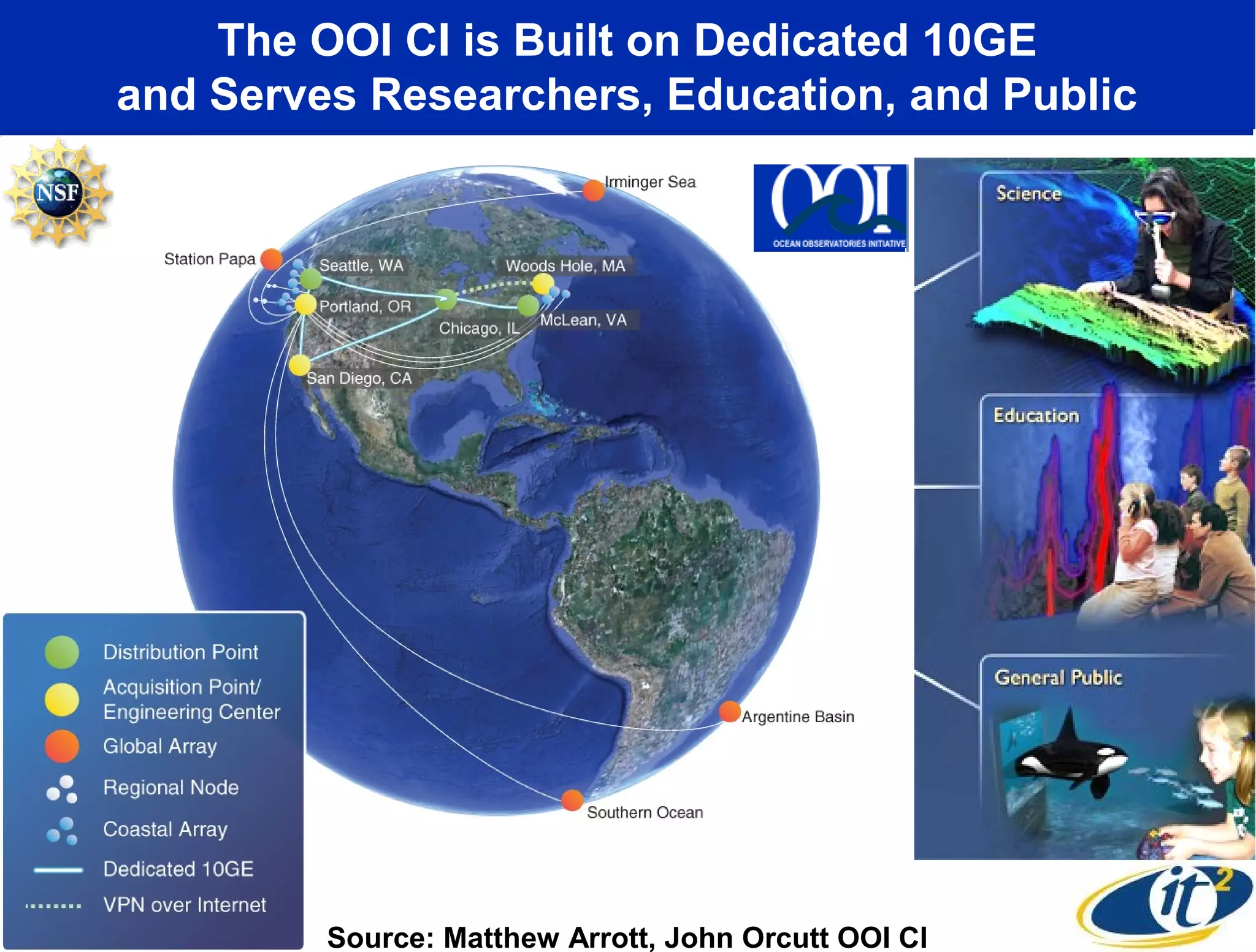

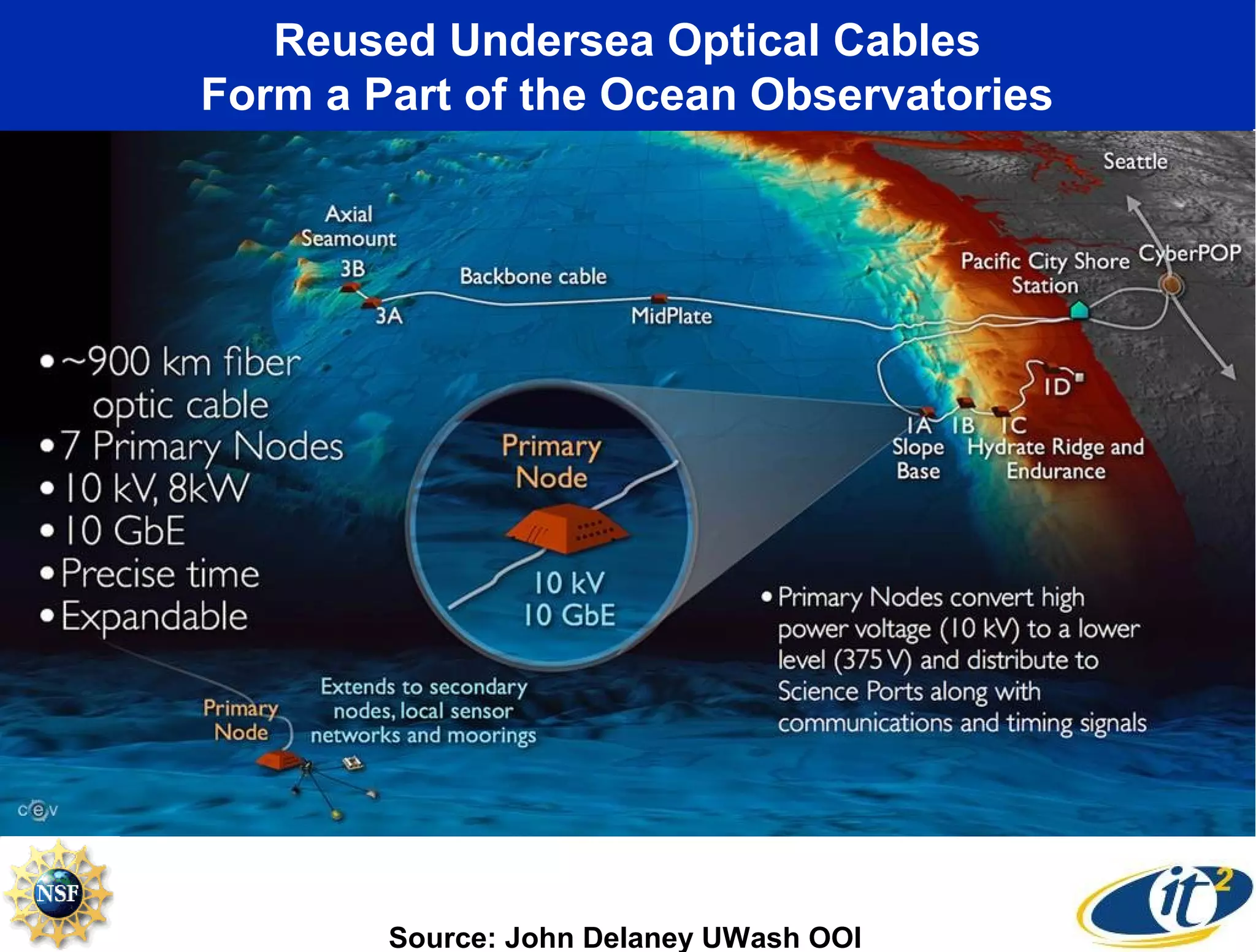

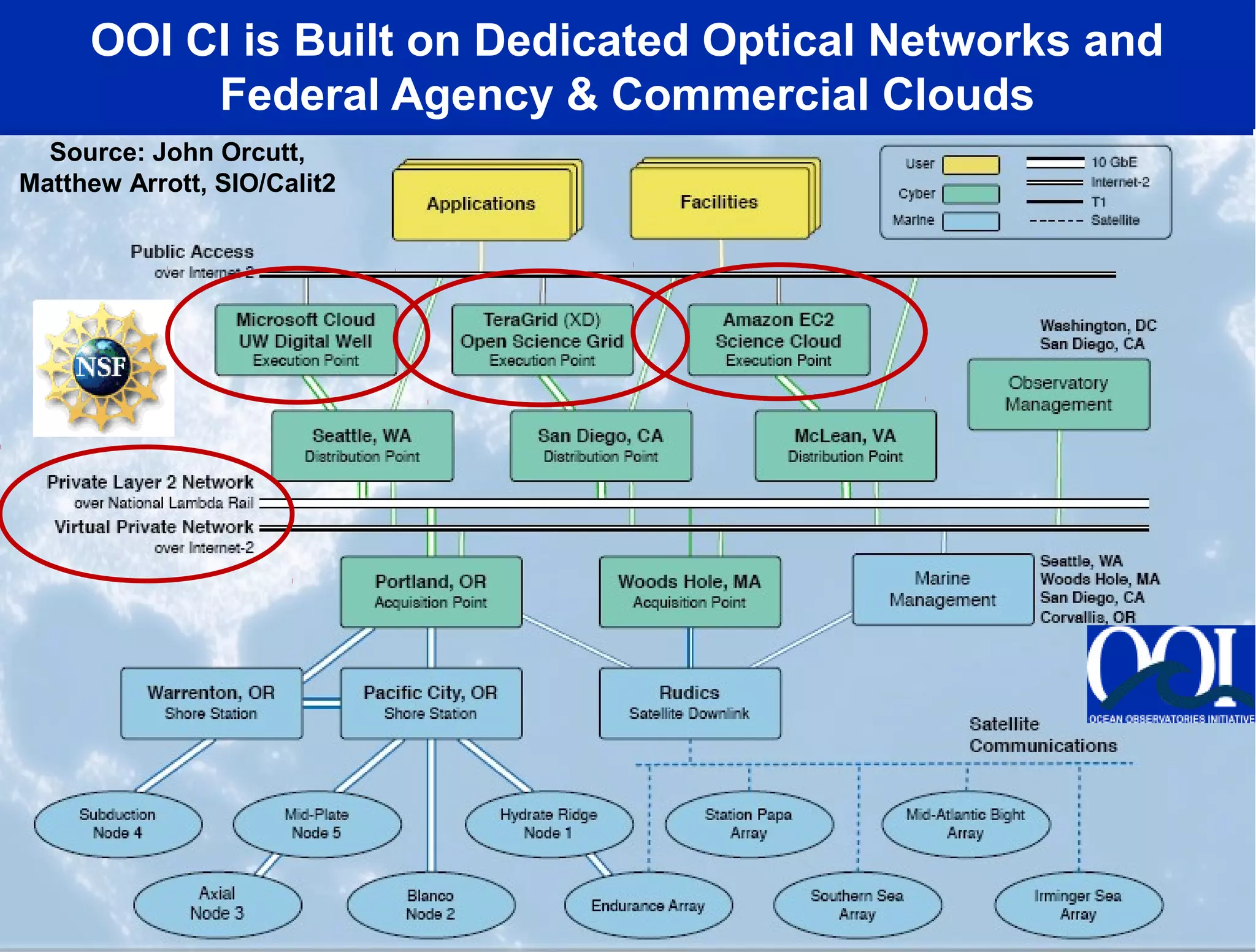

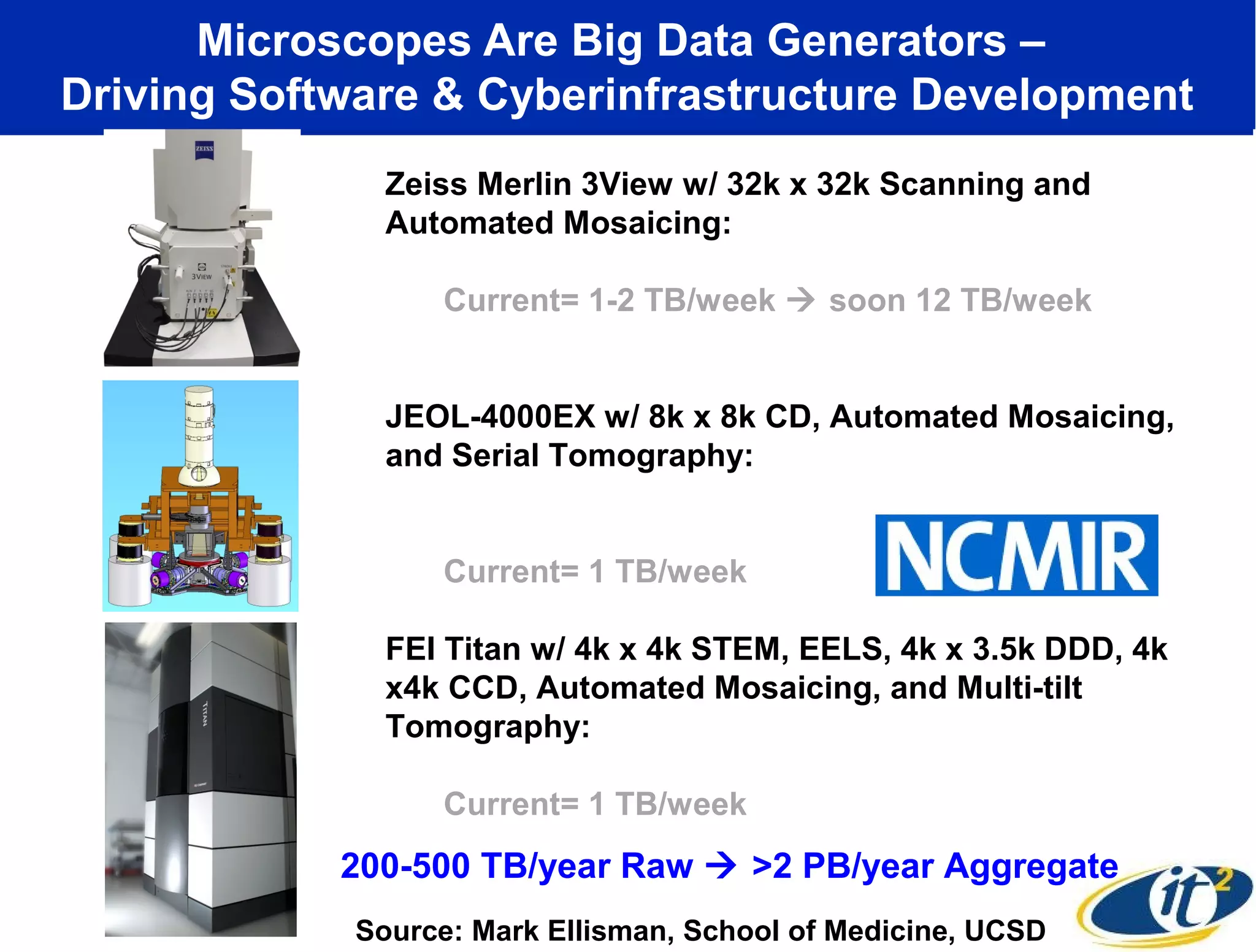

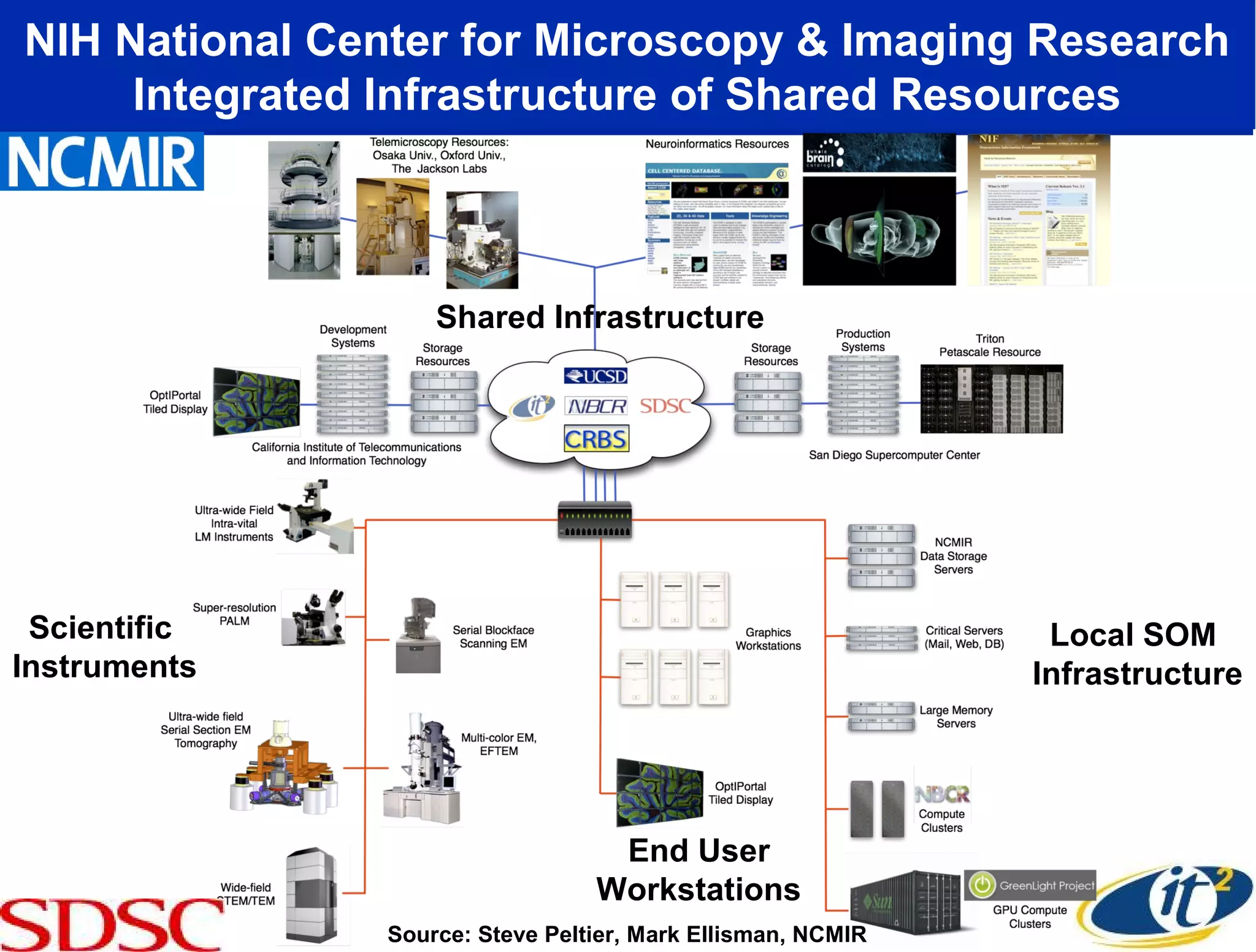

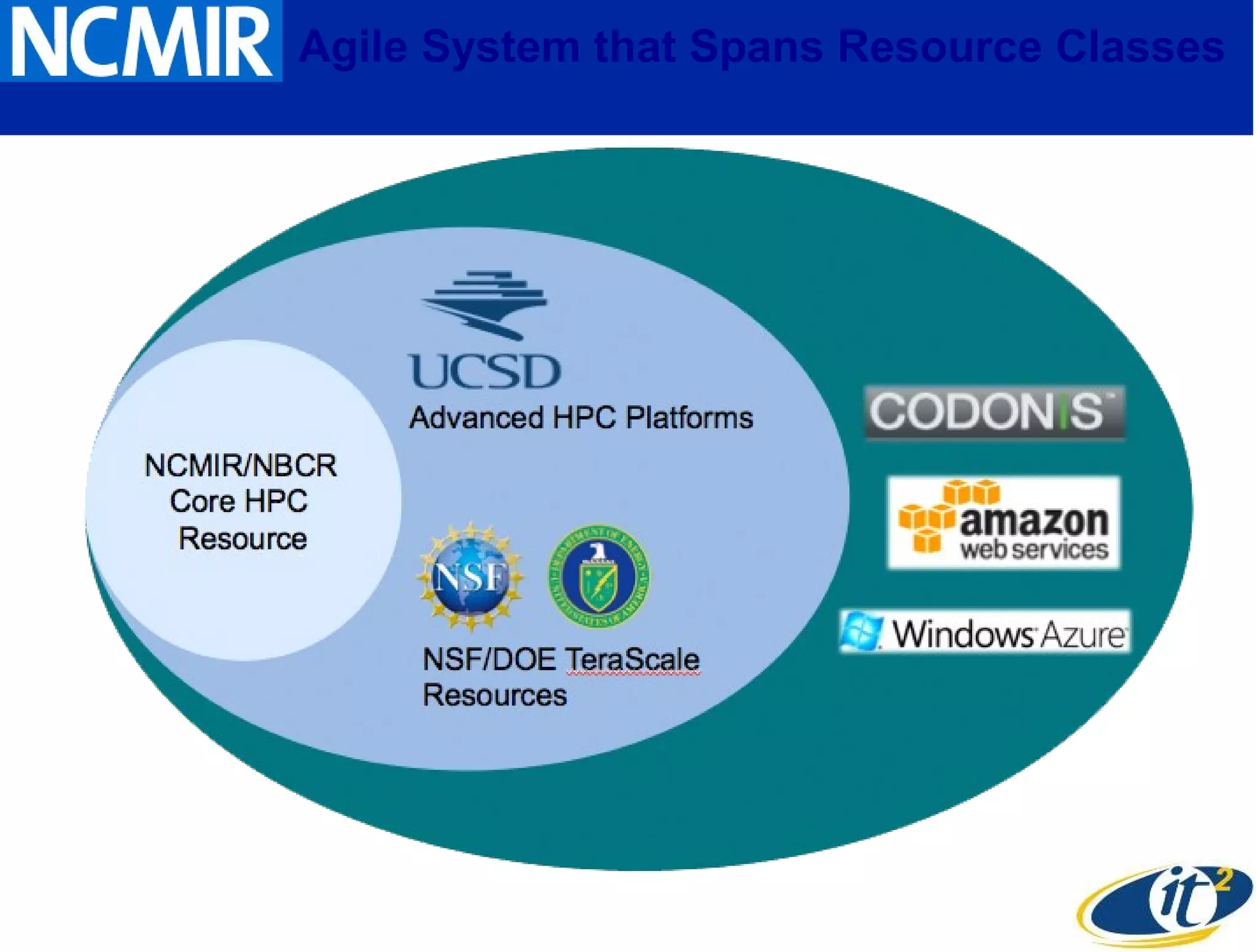

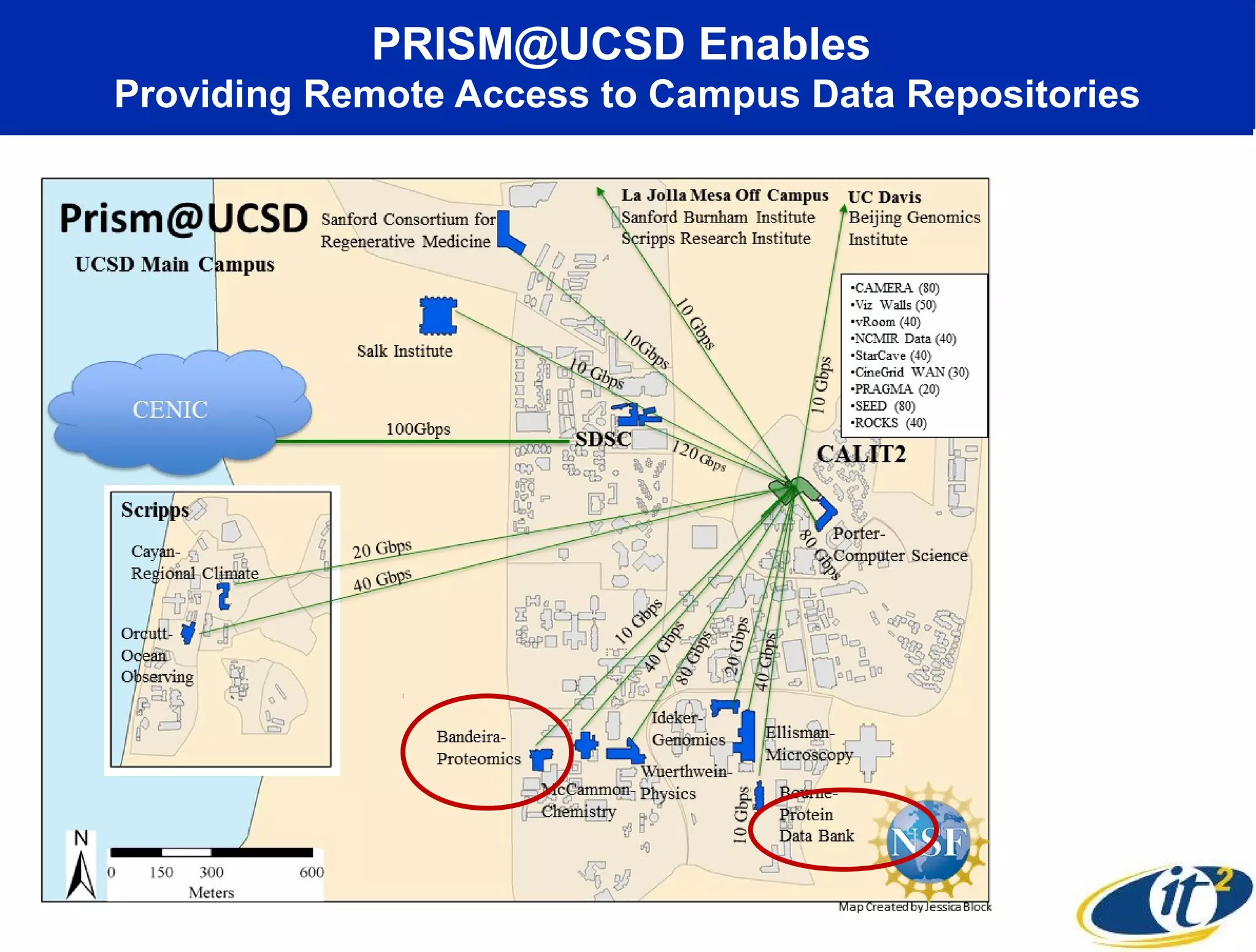

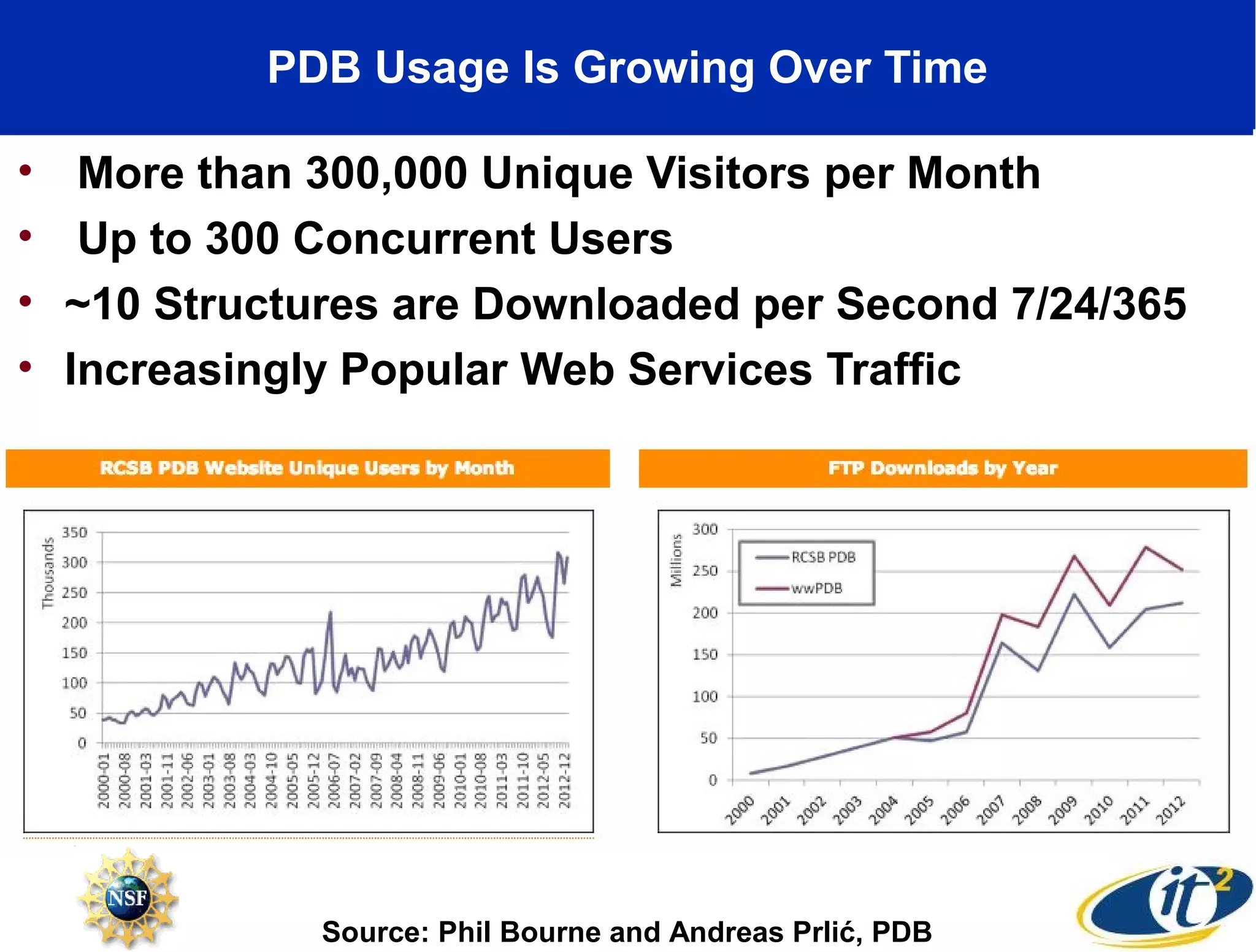

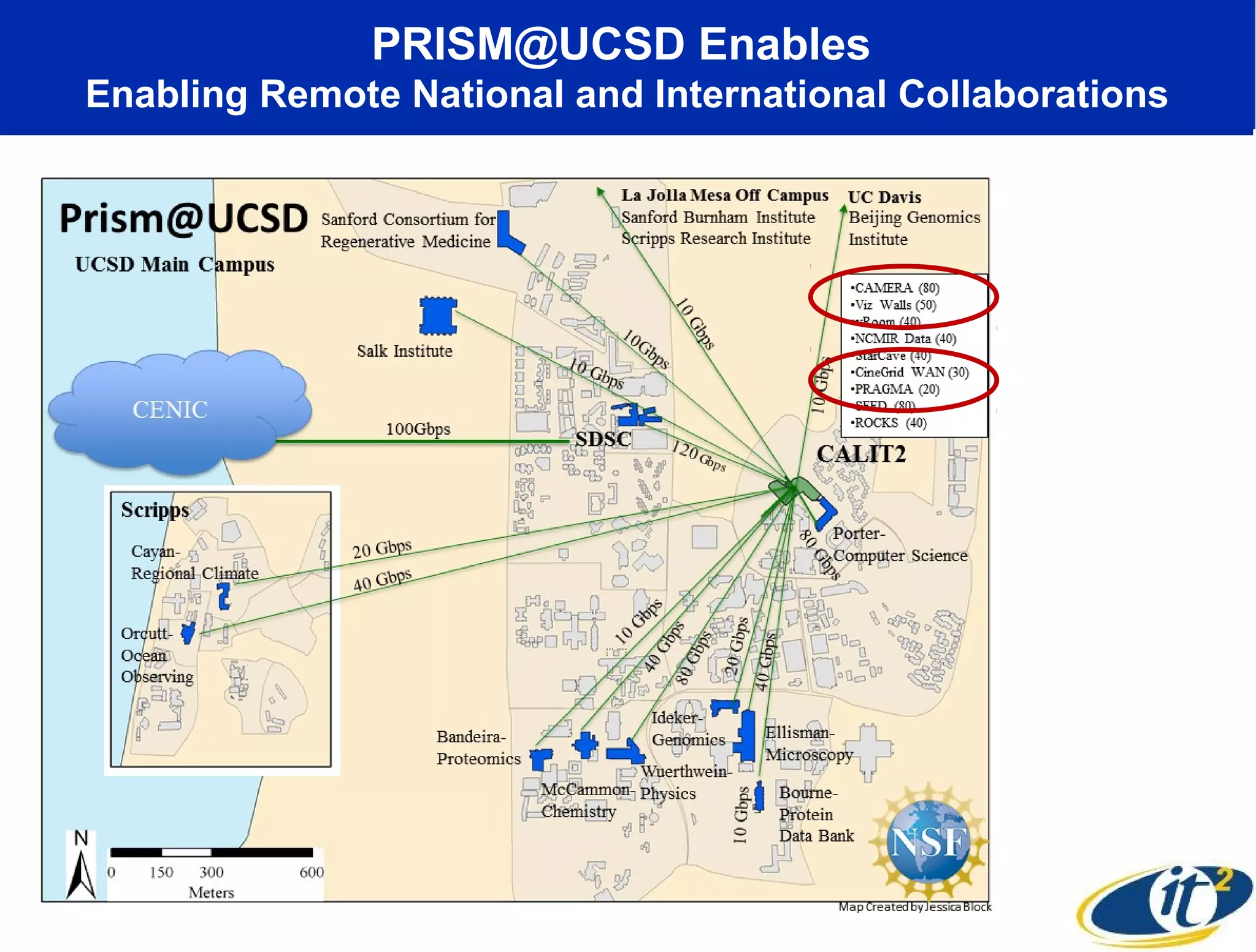

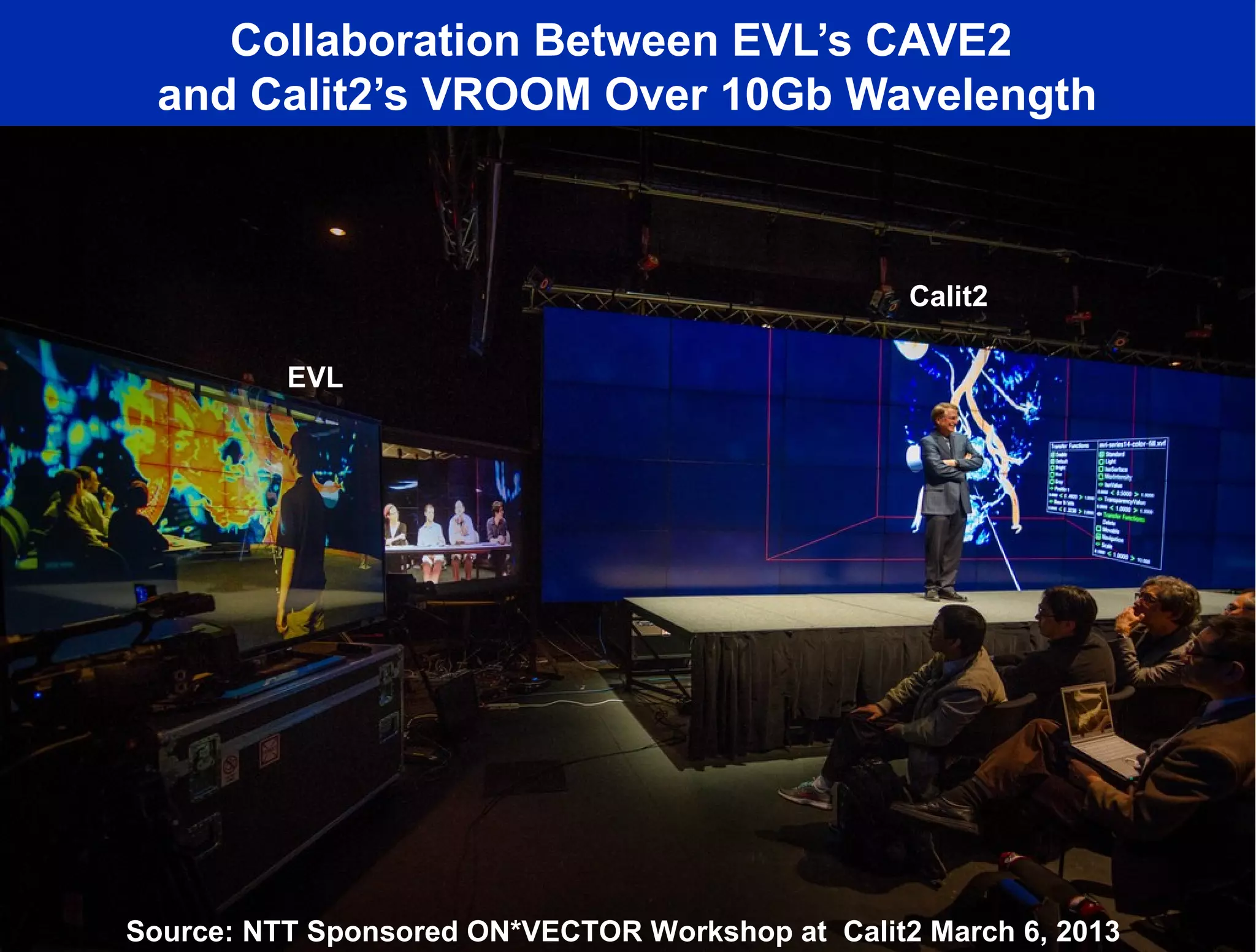

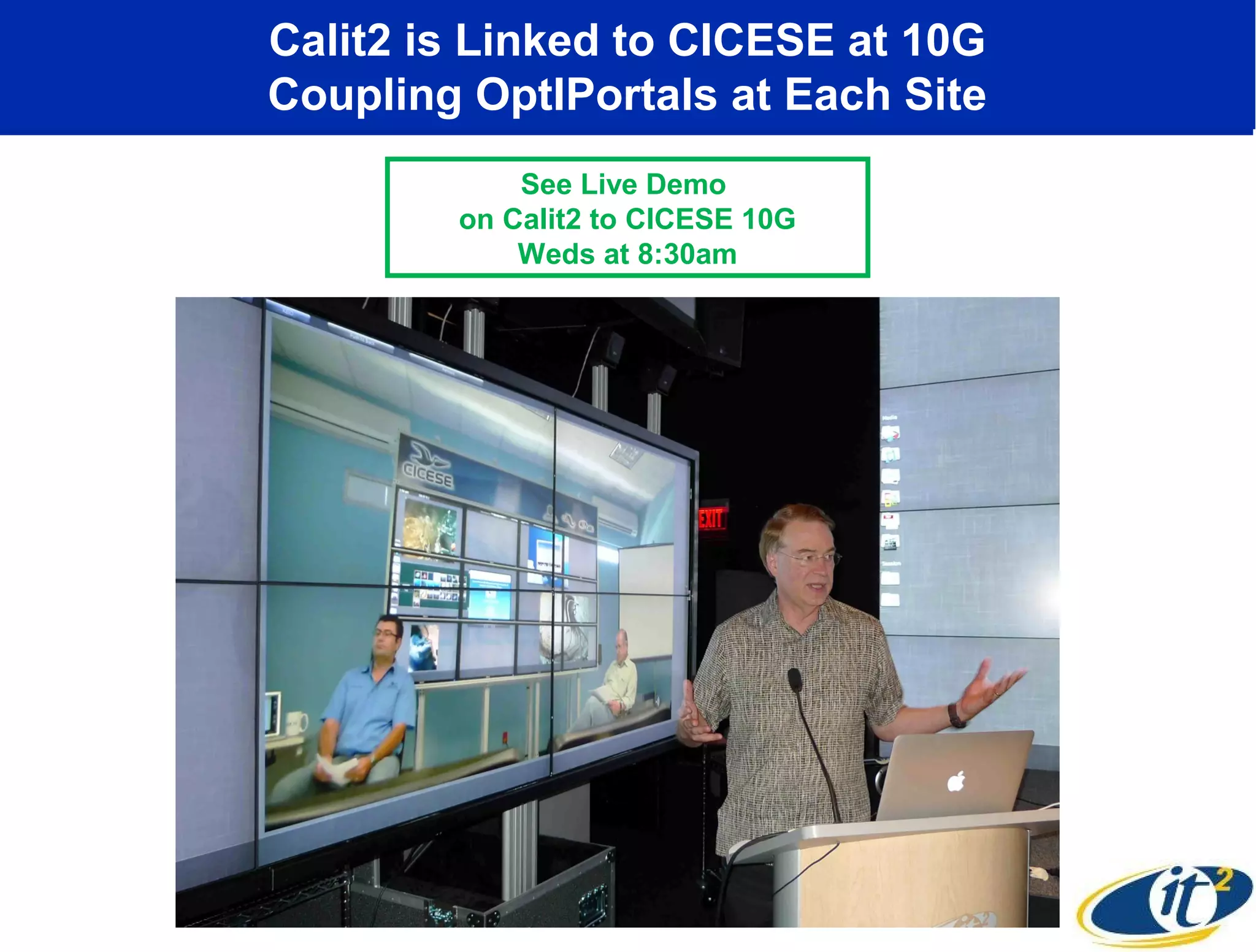

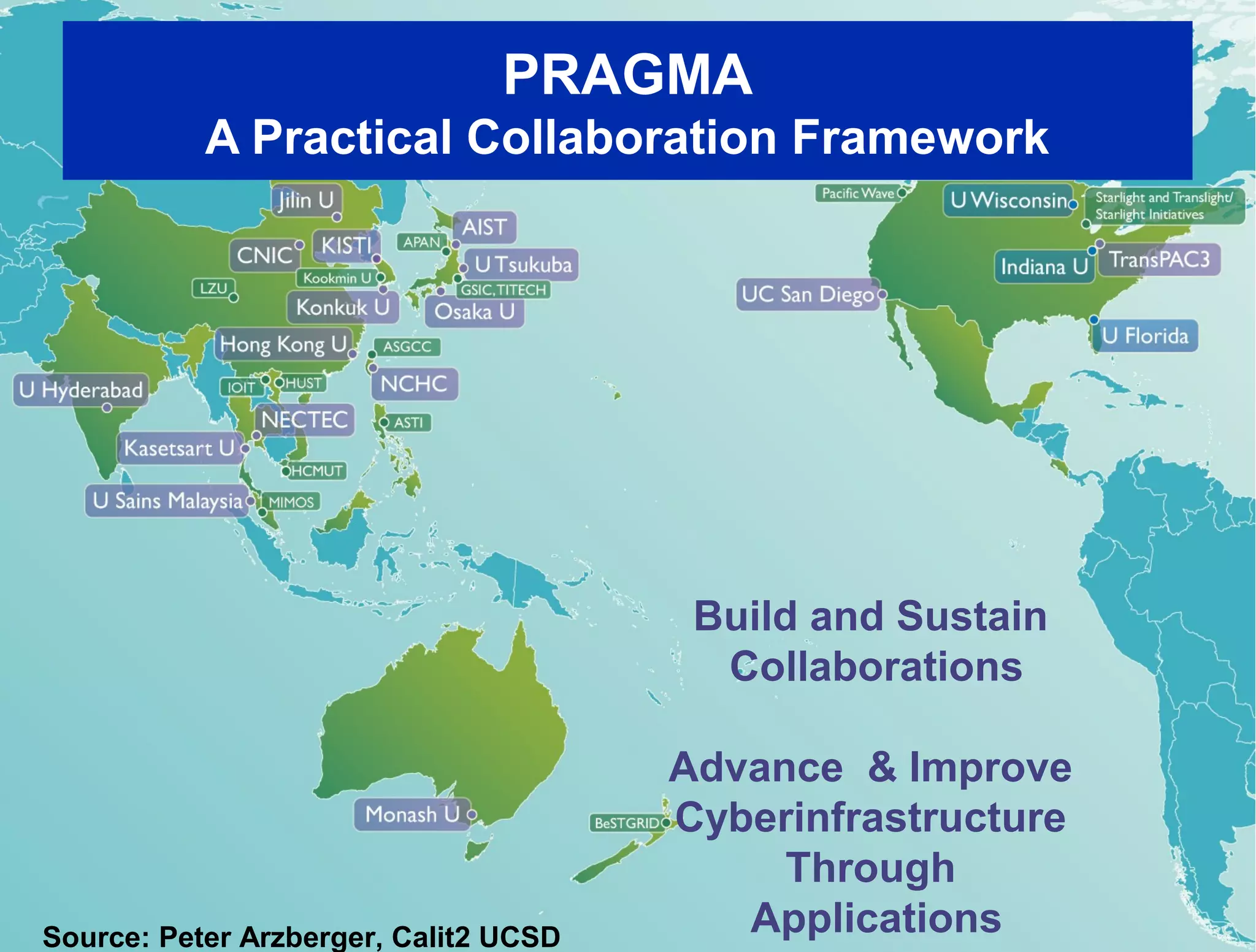

This document makes the case for a campus-scale, high-performance cyberinfrastructure at UCSD to support data-intensive research. It notes that campus research projects in areas such as particle physics, climate modeling, ocean observing, and microscopy generate massive amounts of data and require high-speed connections both on campus and to remote resources. It highlights several projects that have implemented dedicated 10 Gbps connections, while observing that bandwidth needs continue to grow rapidly. The document argues that affordable 10 Gbps networking across the entire campus is now possible and would support data-driven collaborative research.