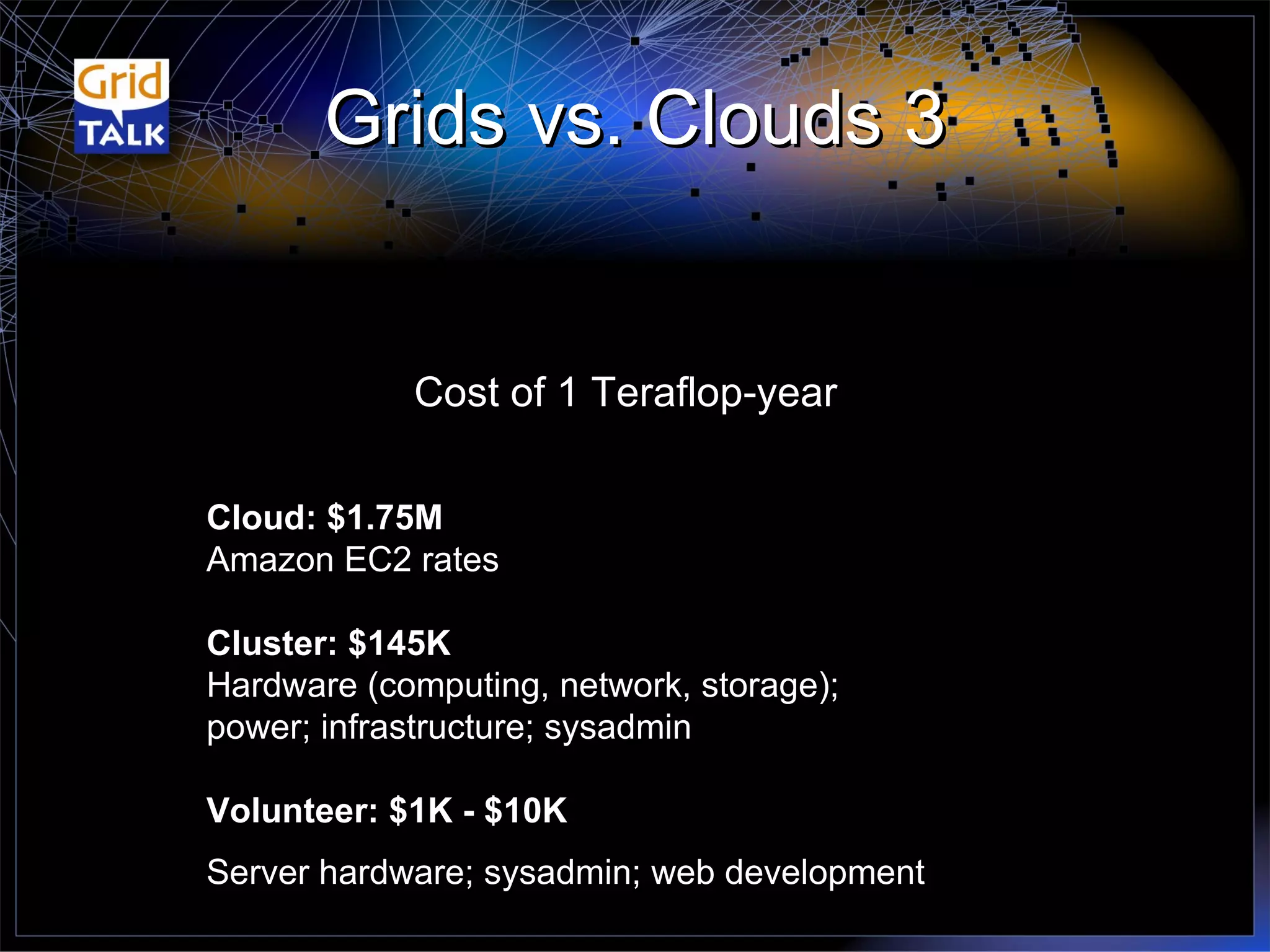

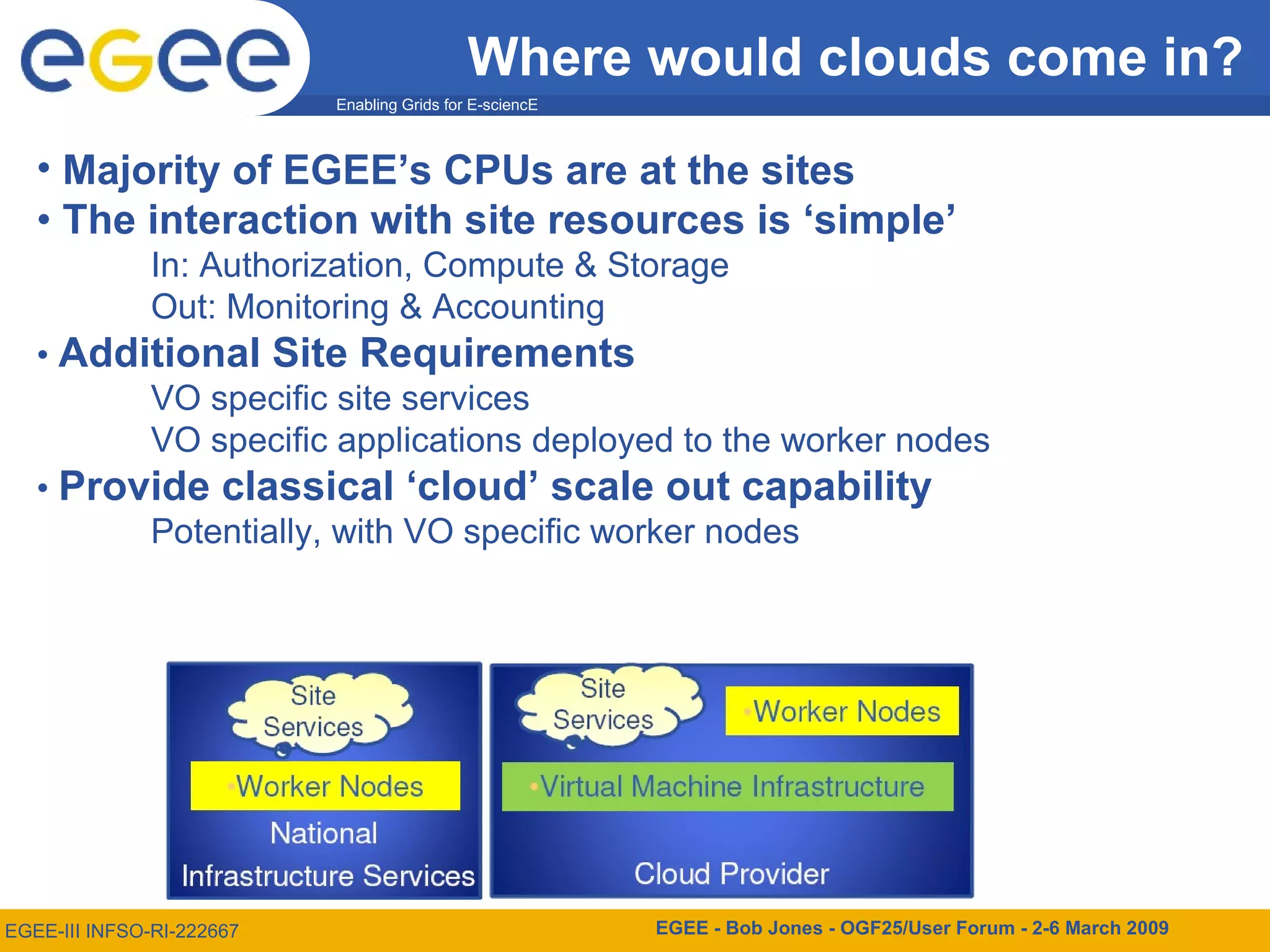

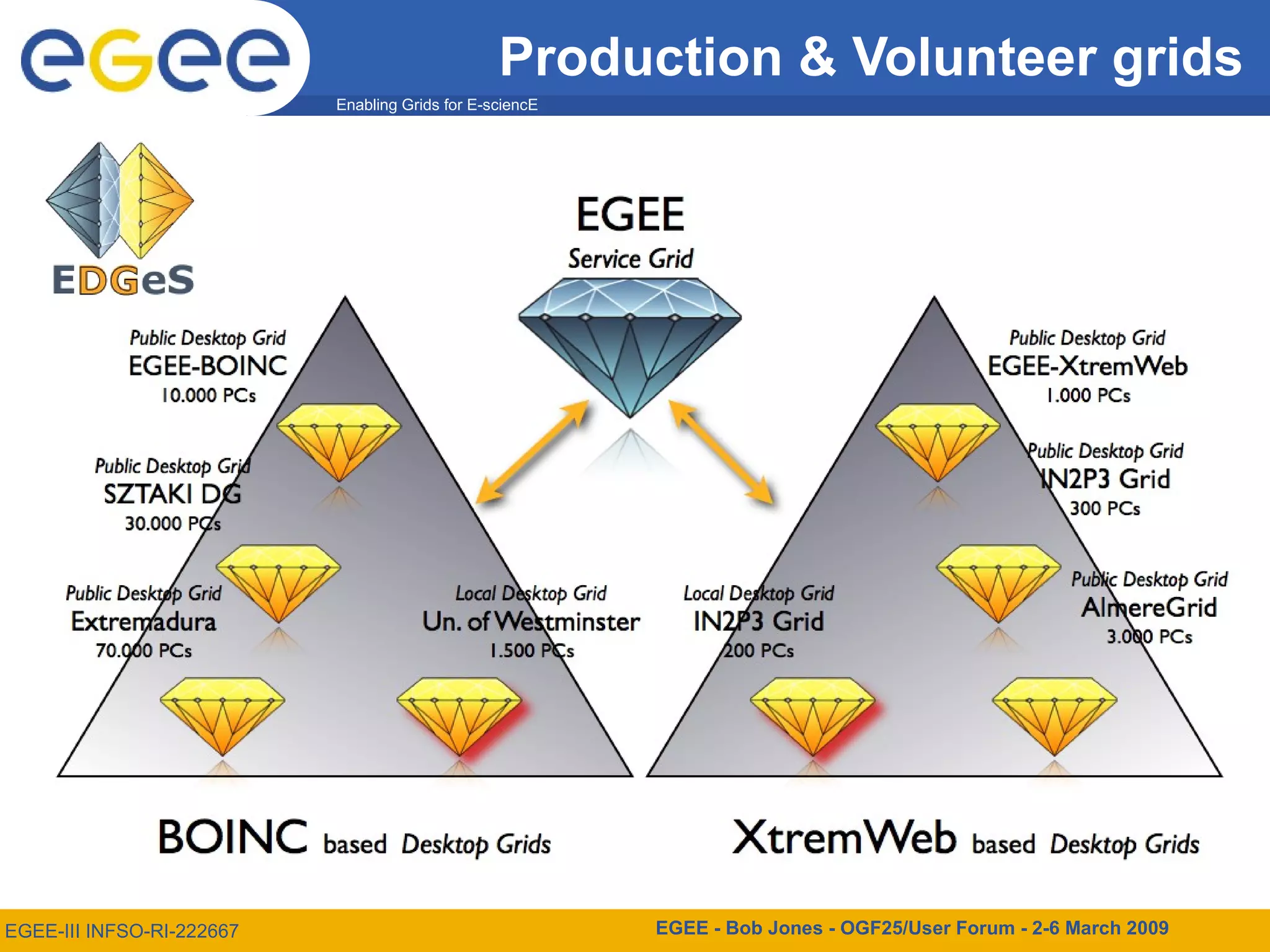

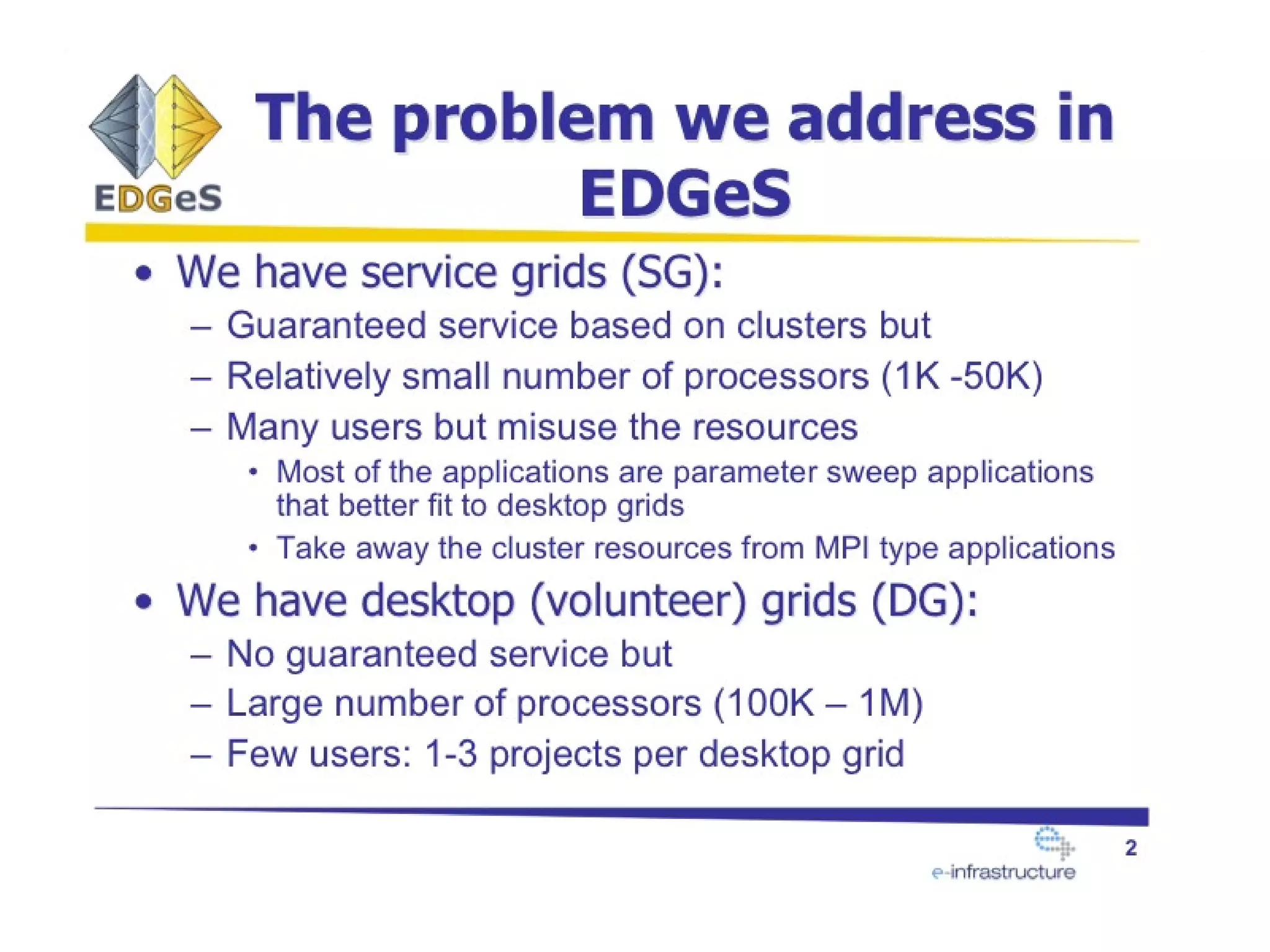

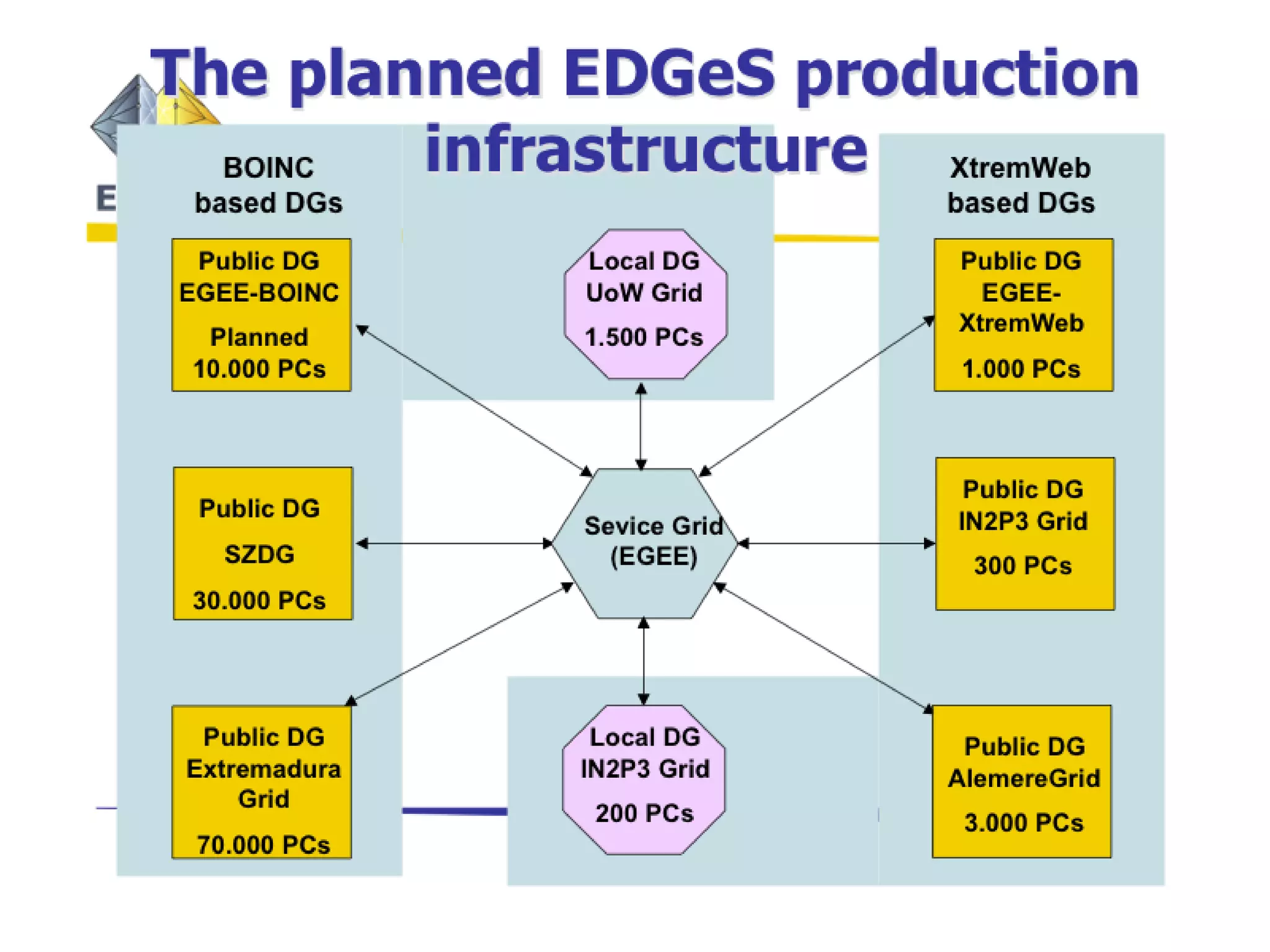

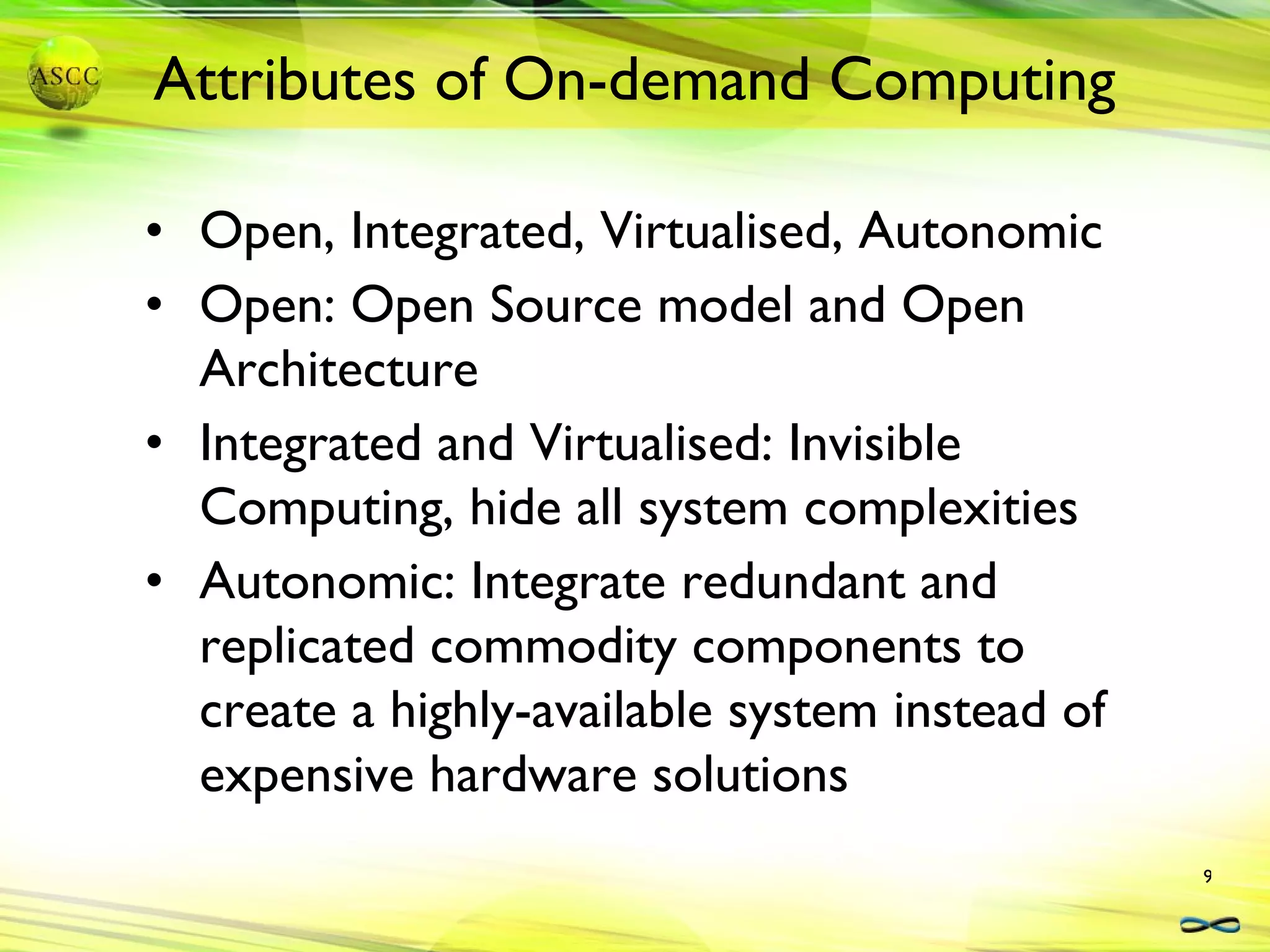

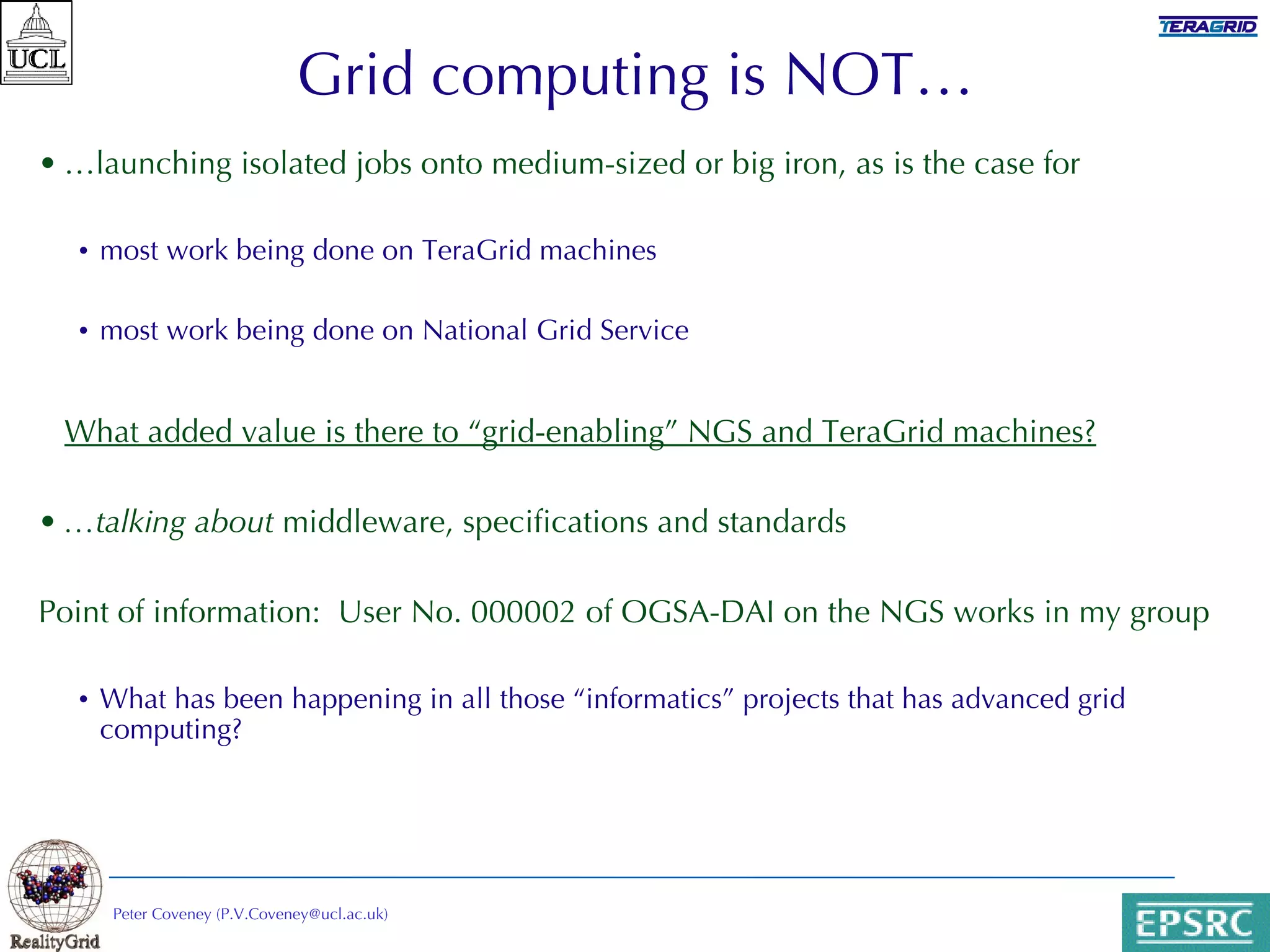

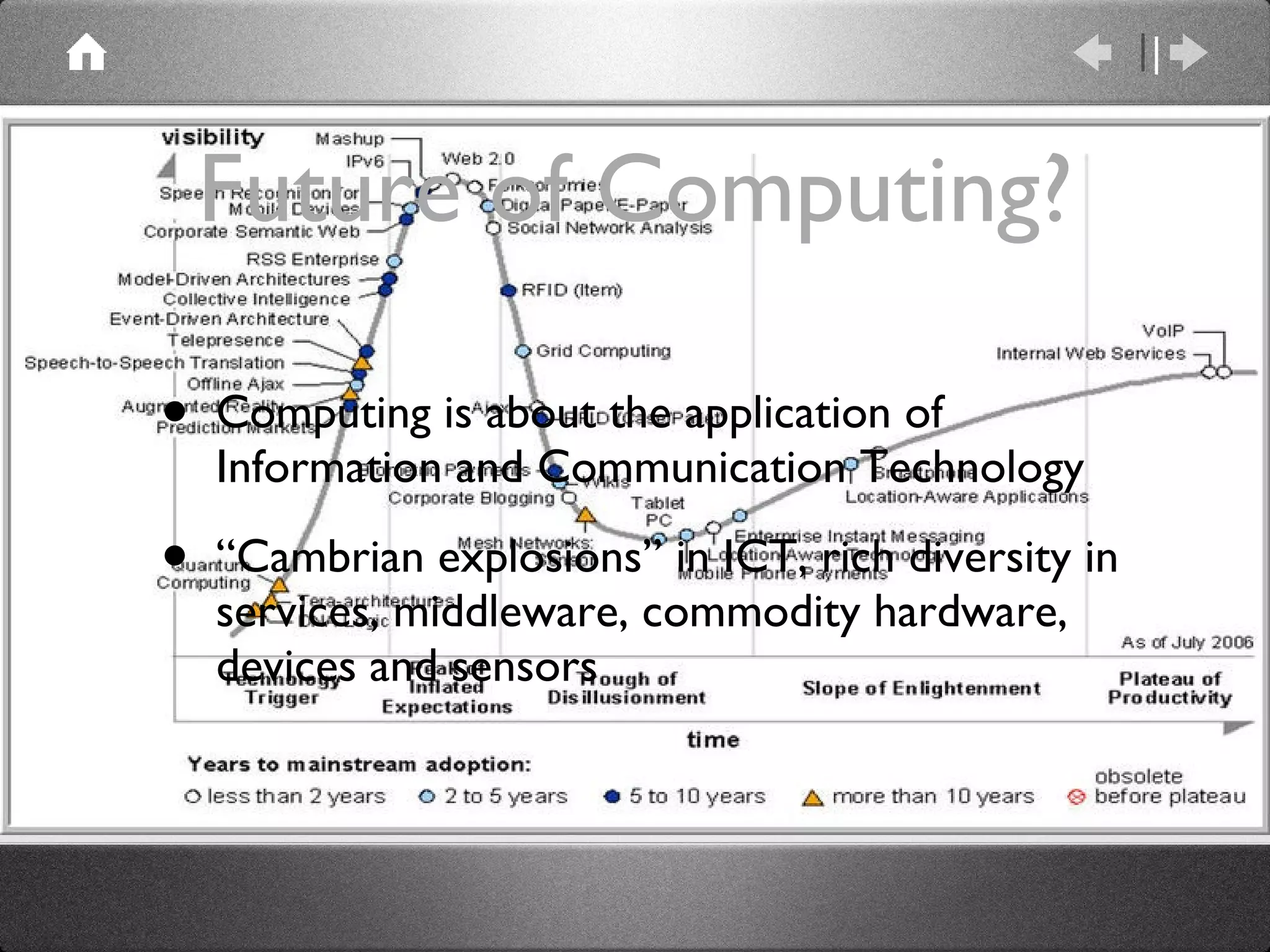

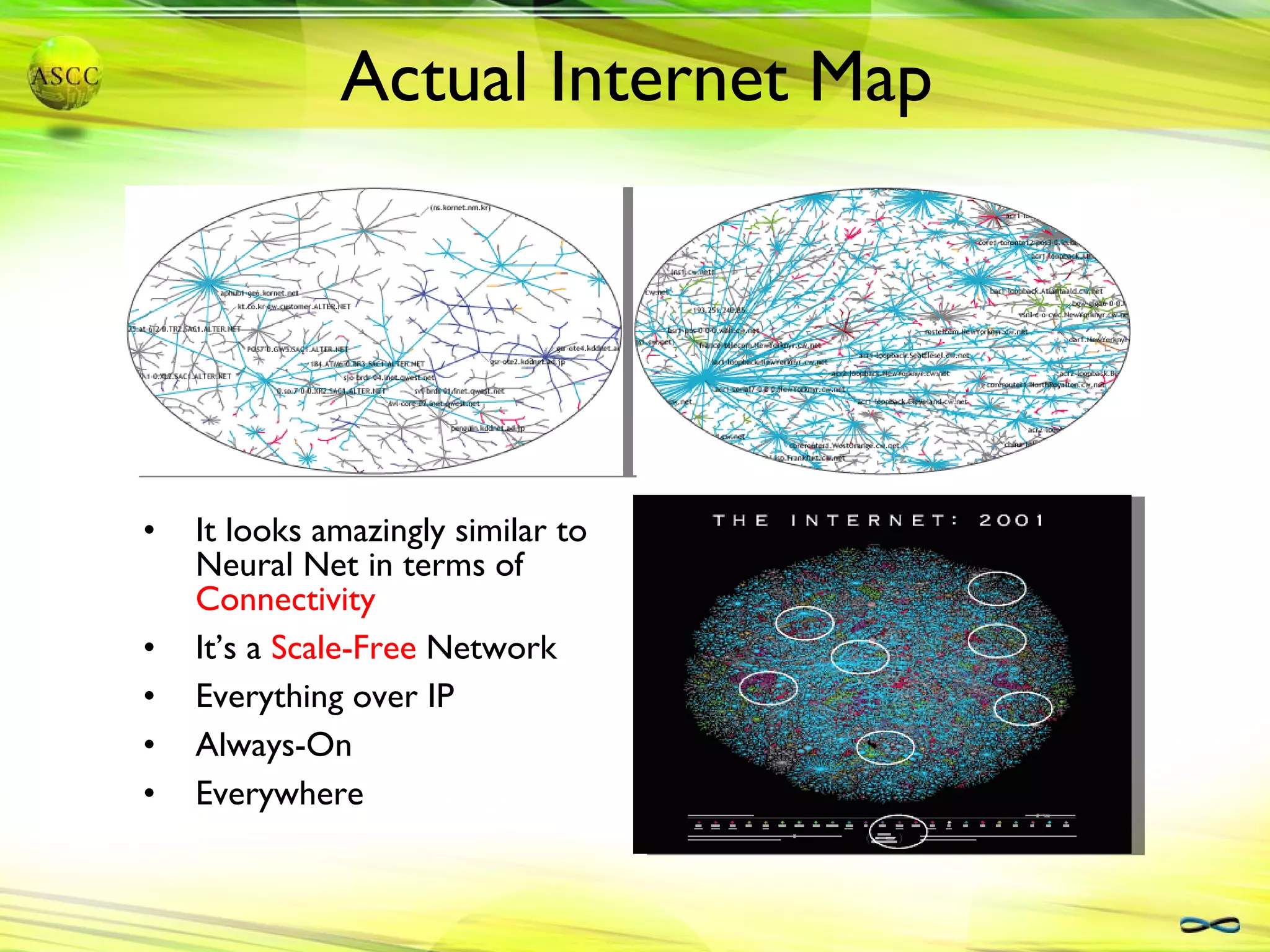

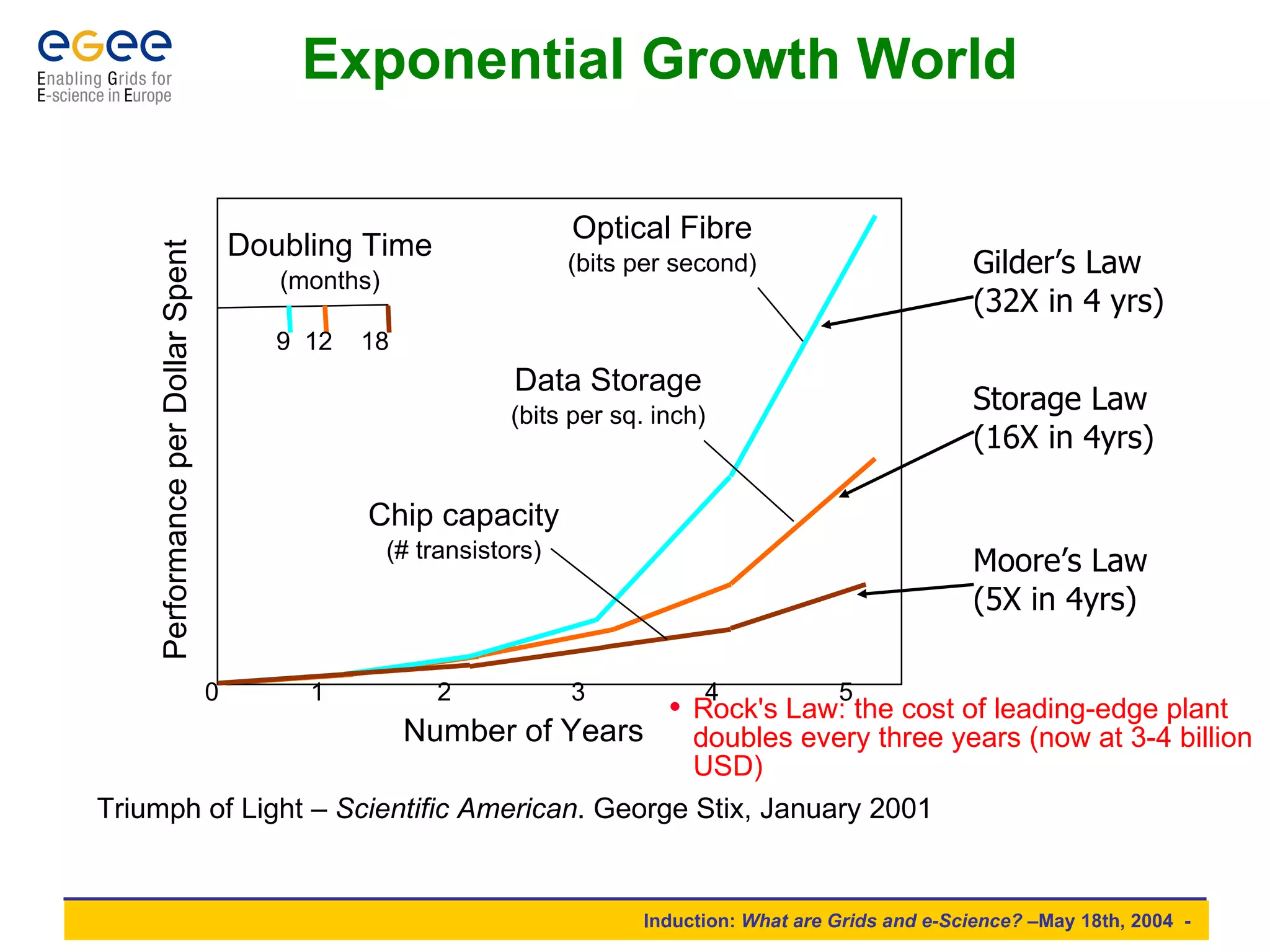

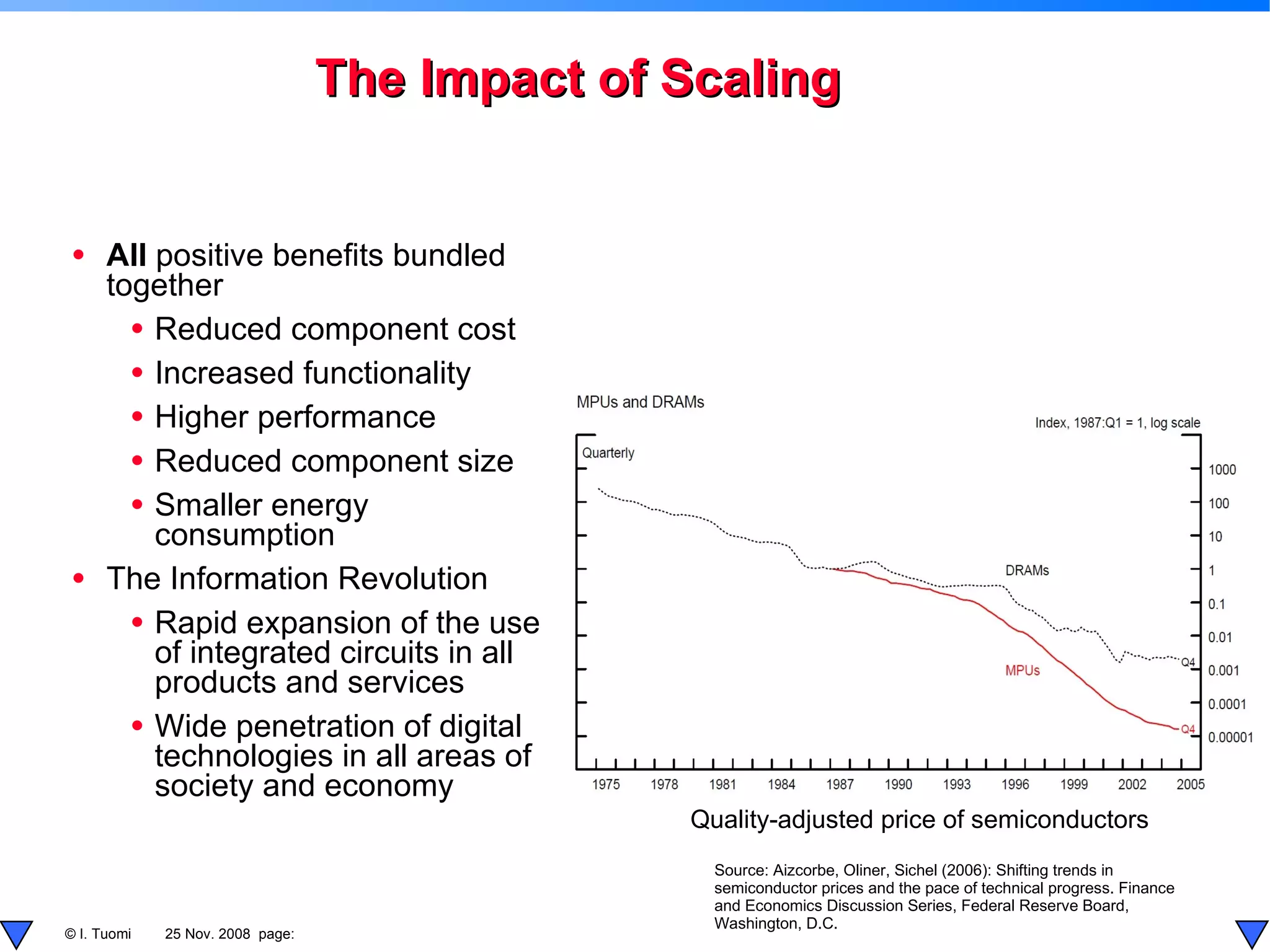

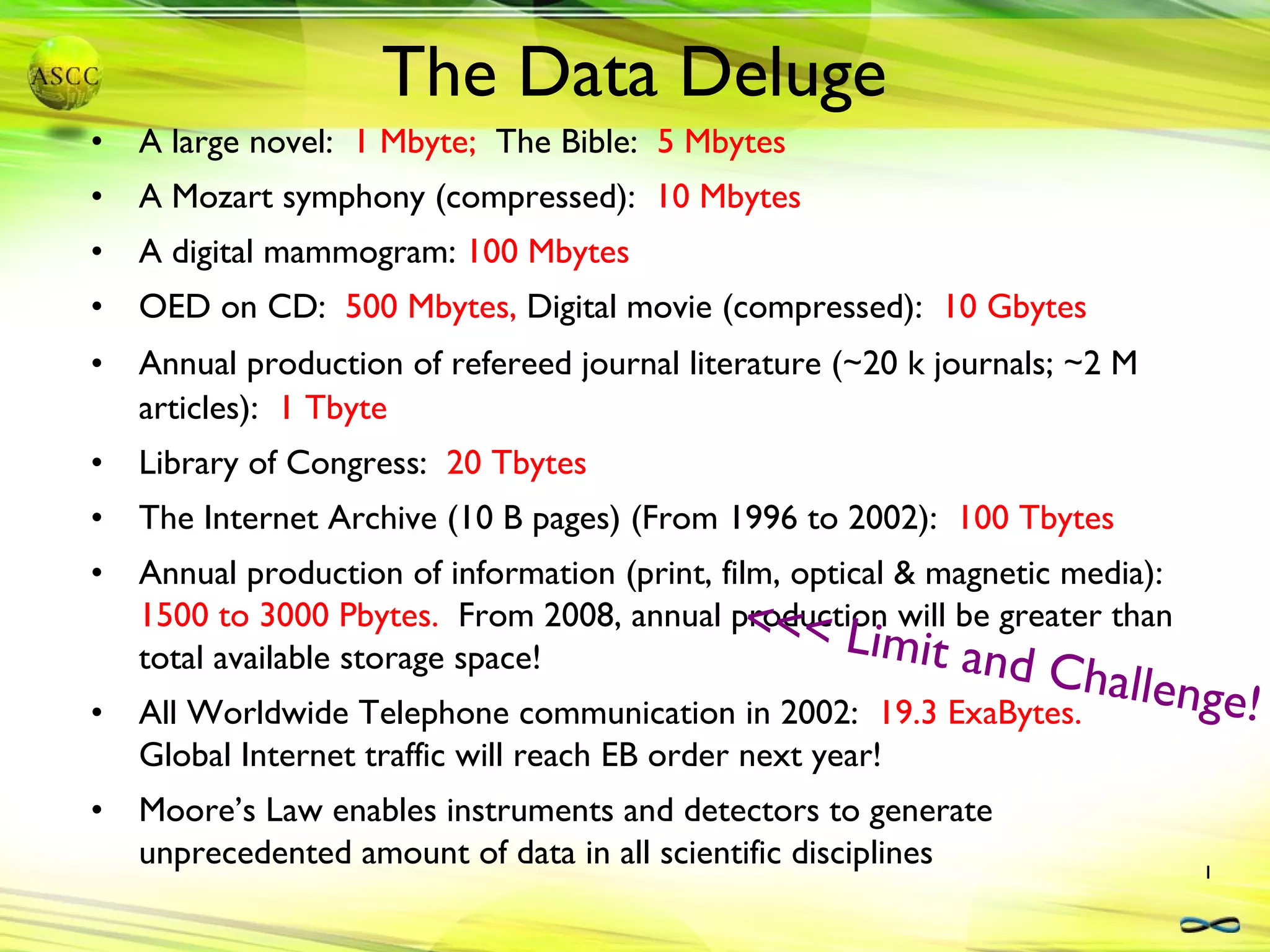

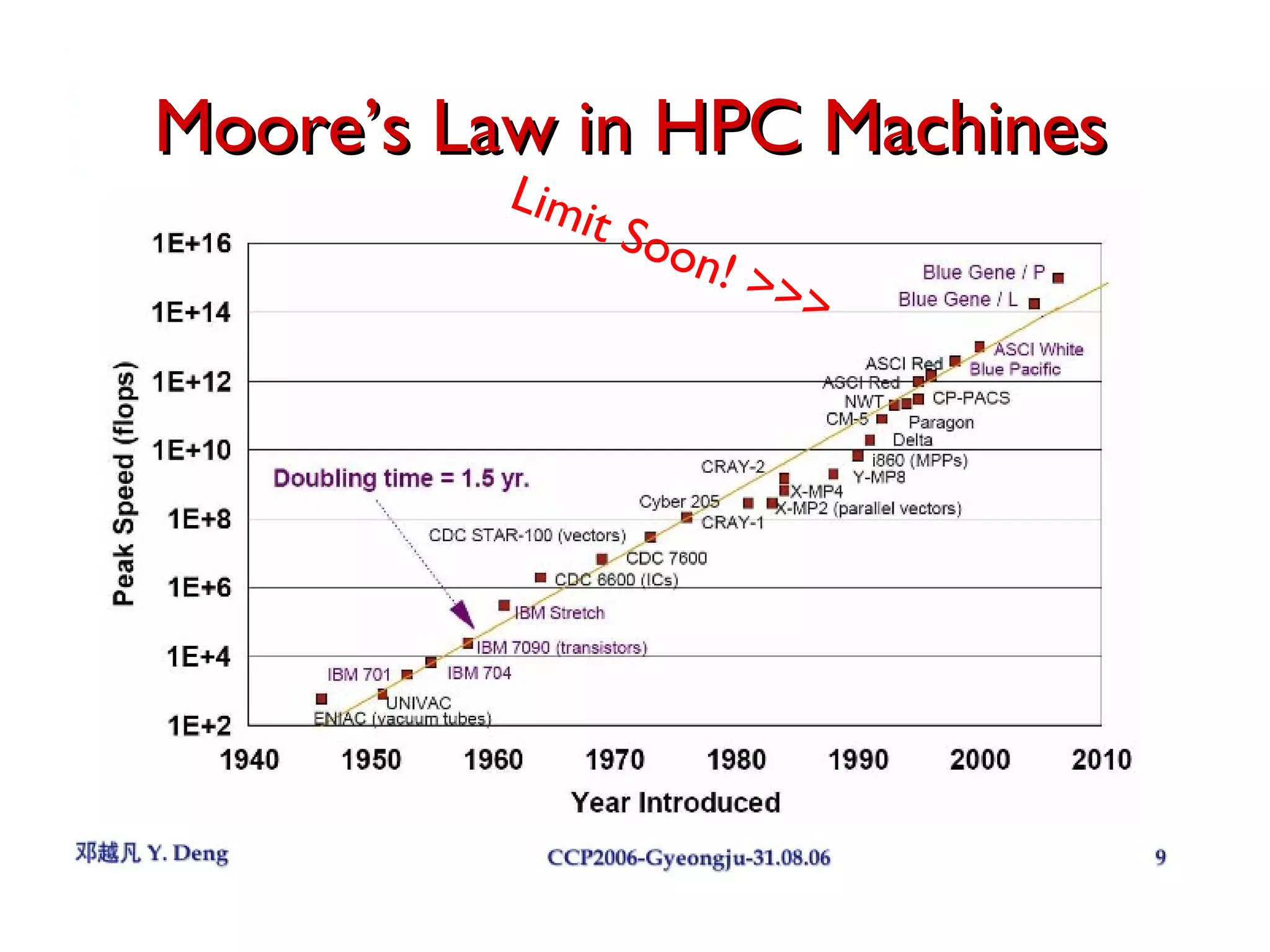

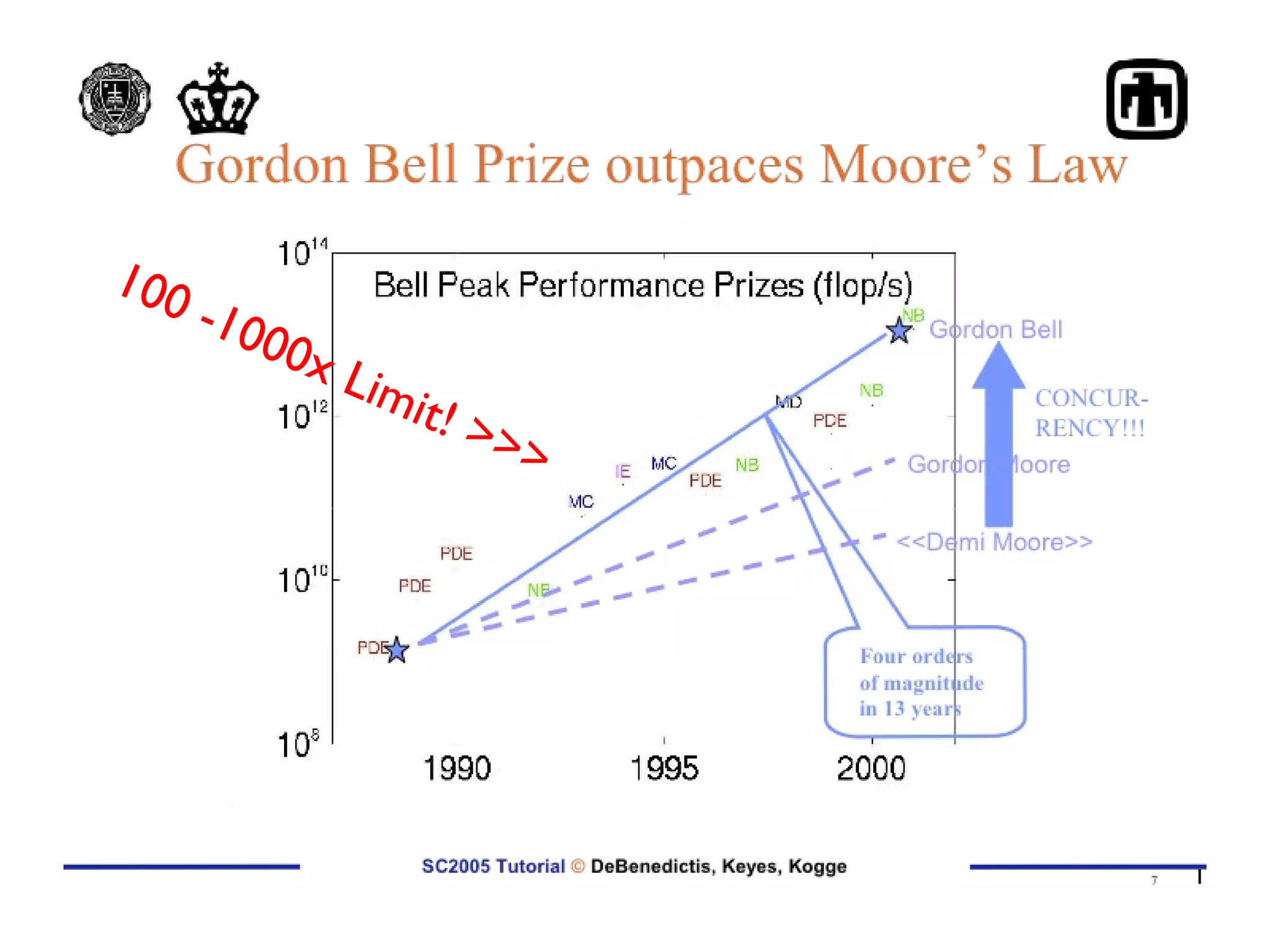

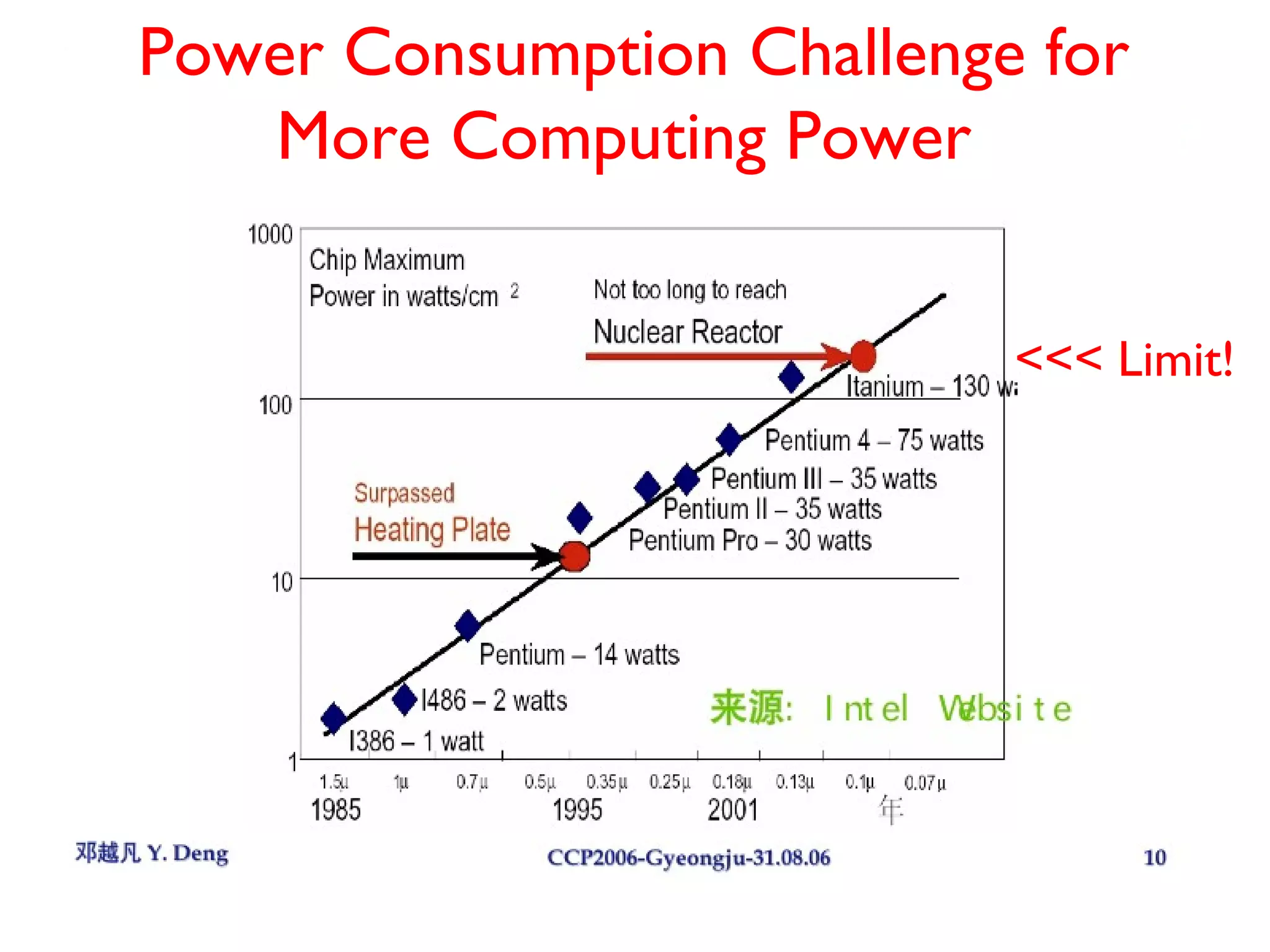

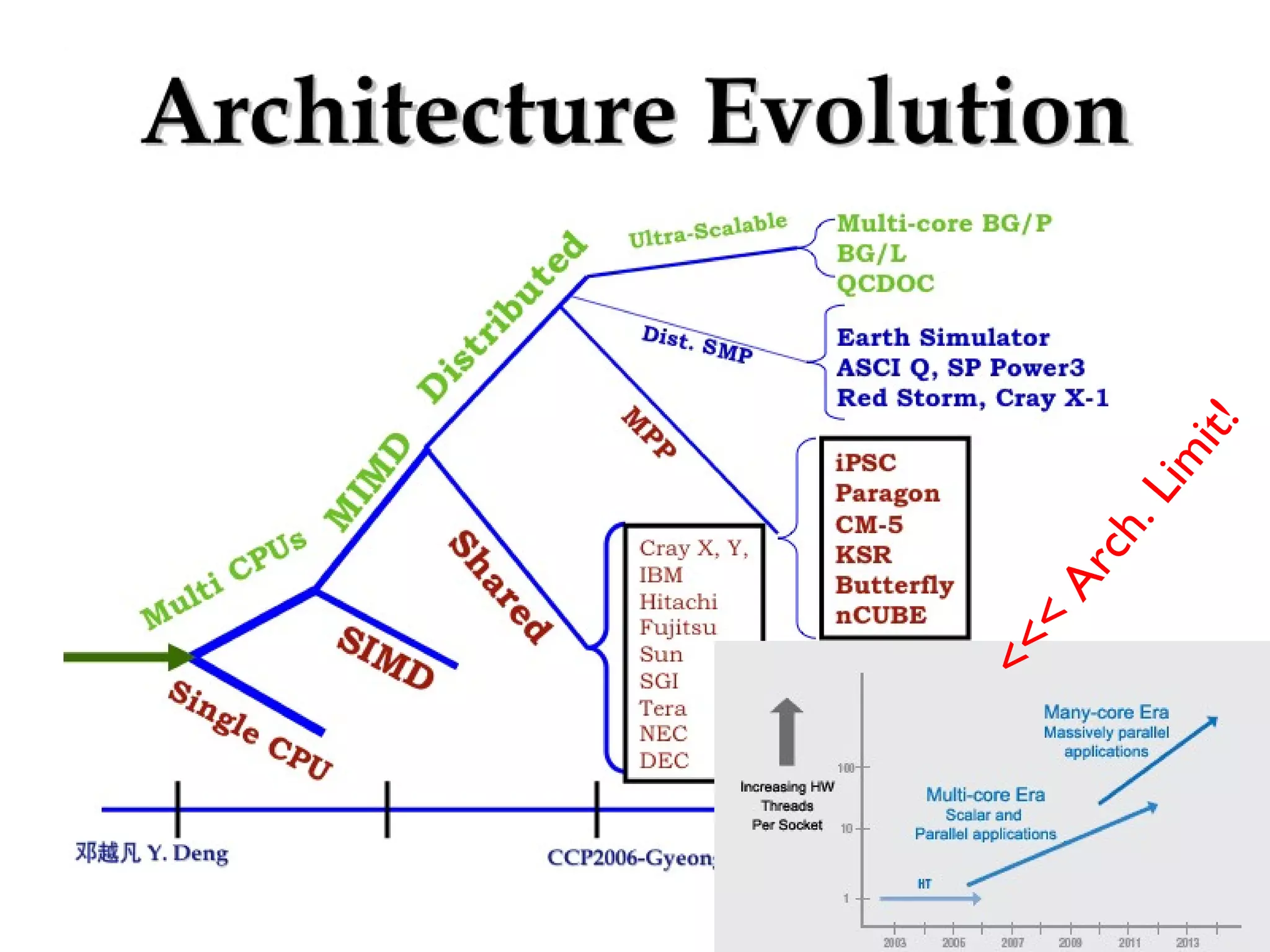

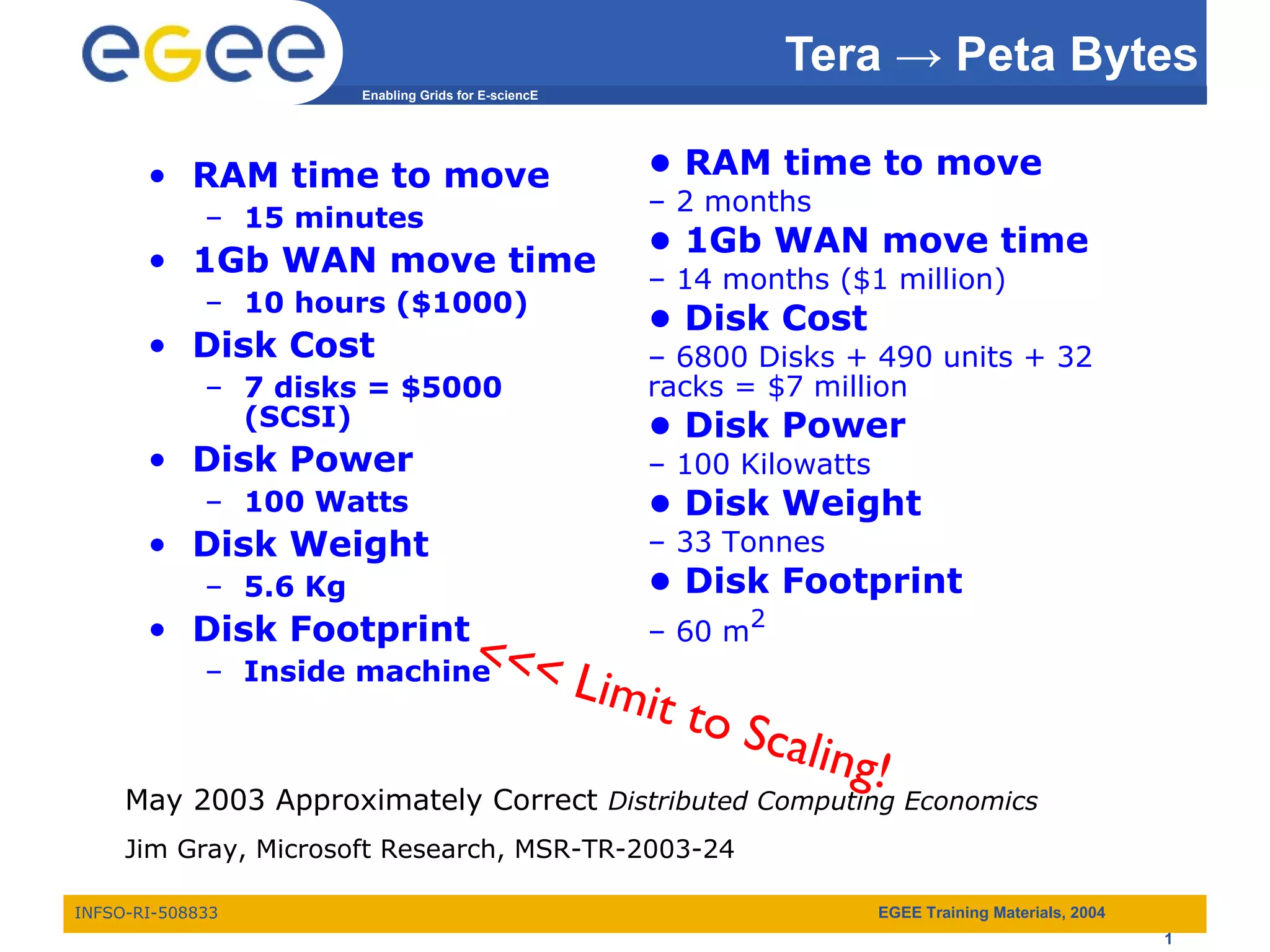

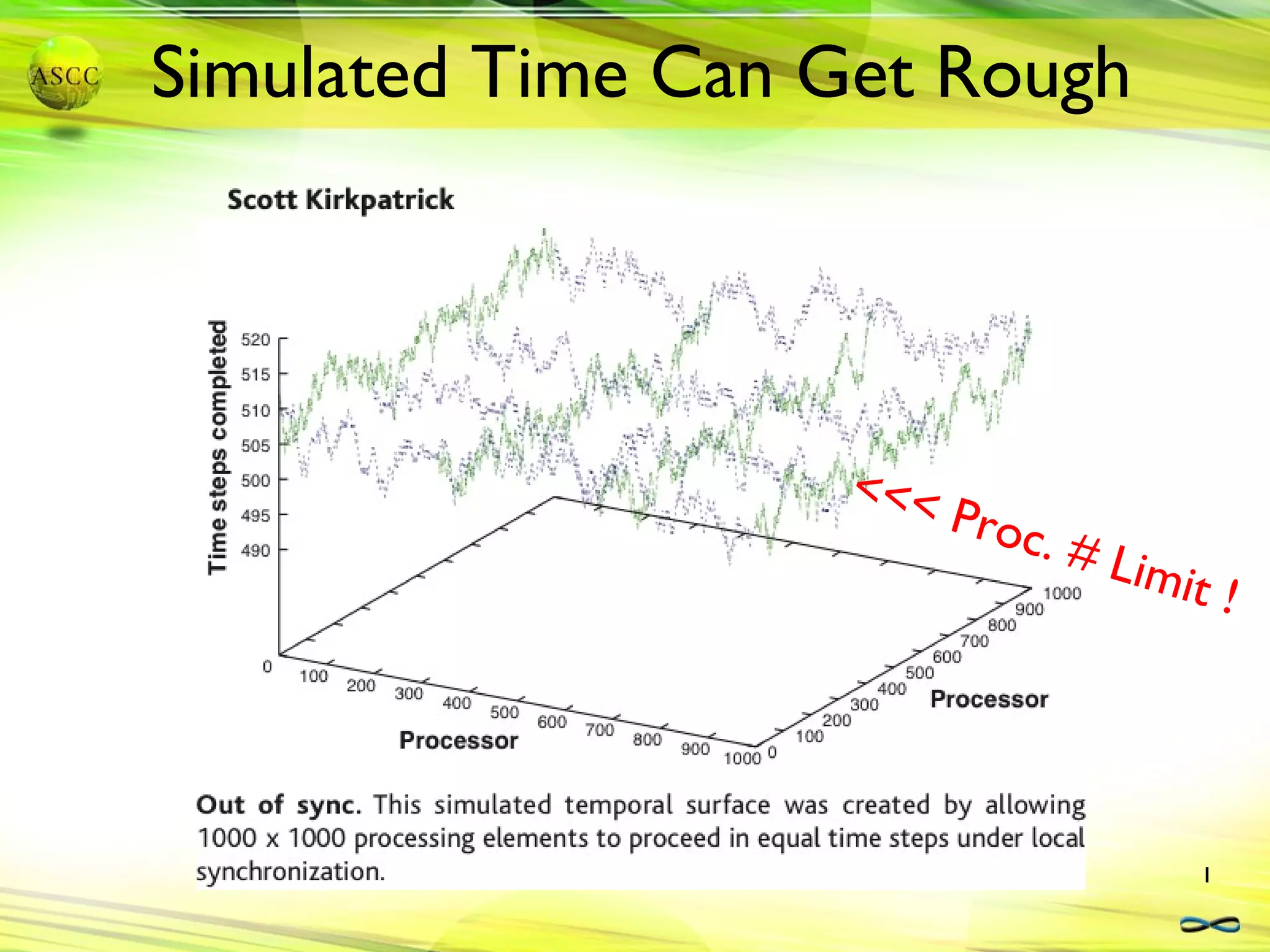

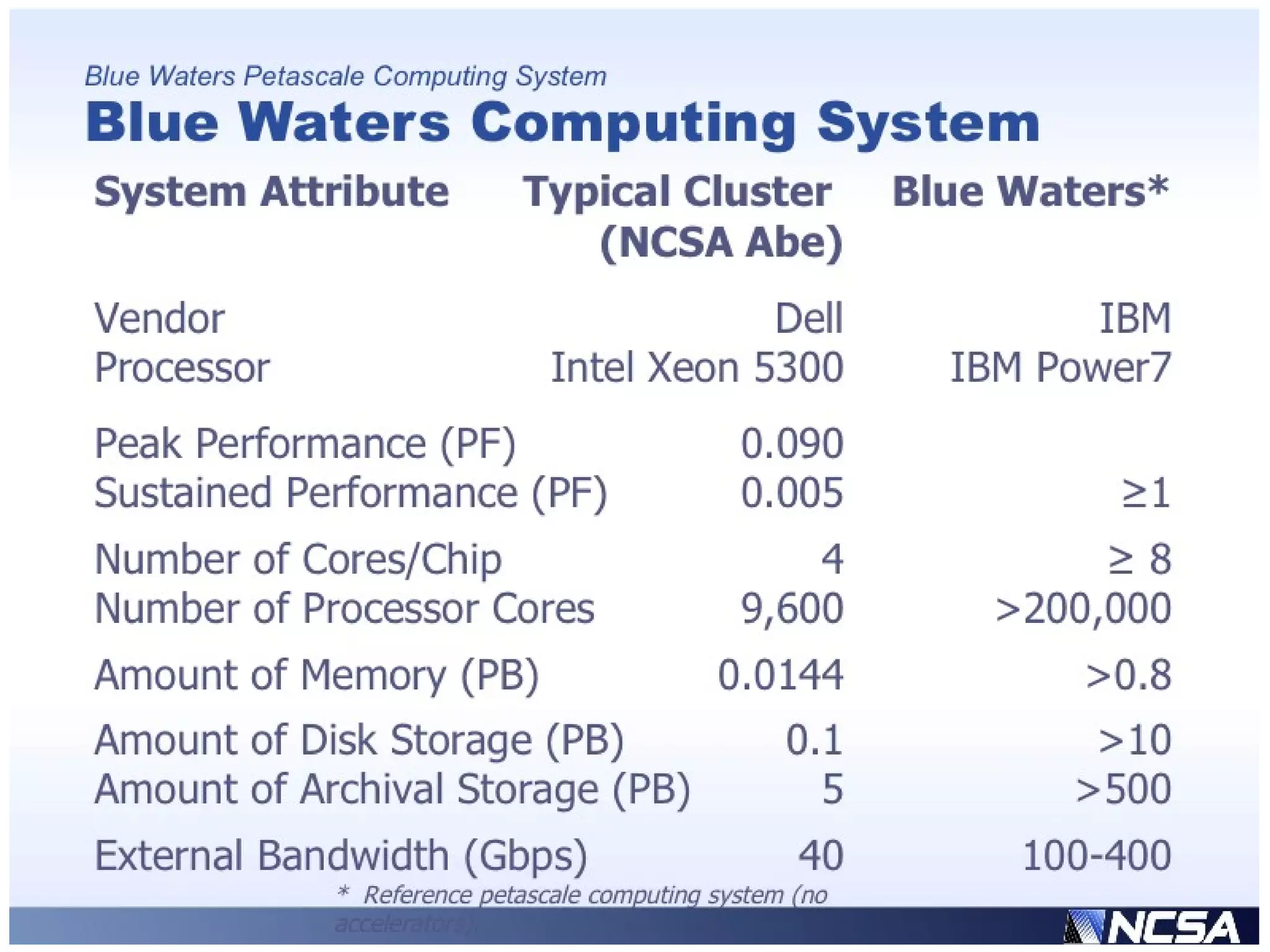

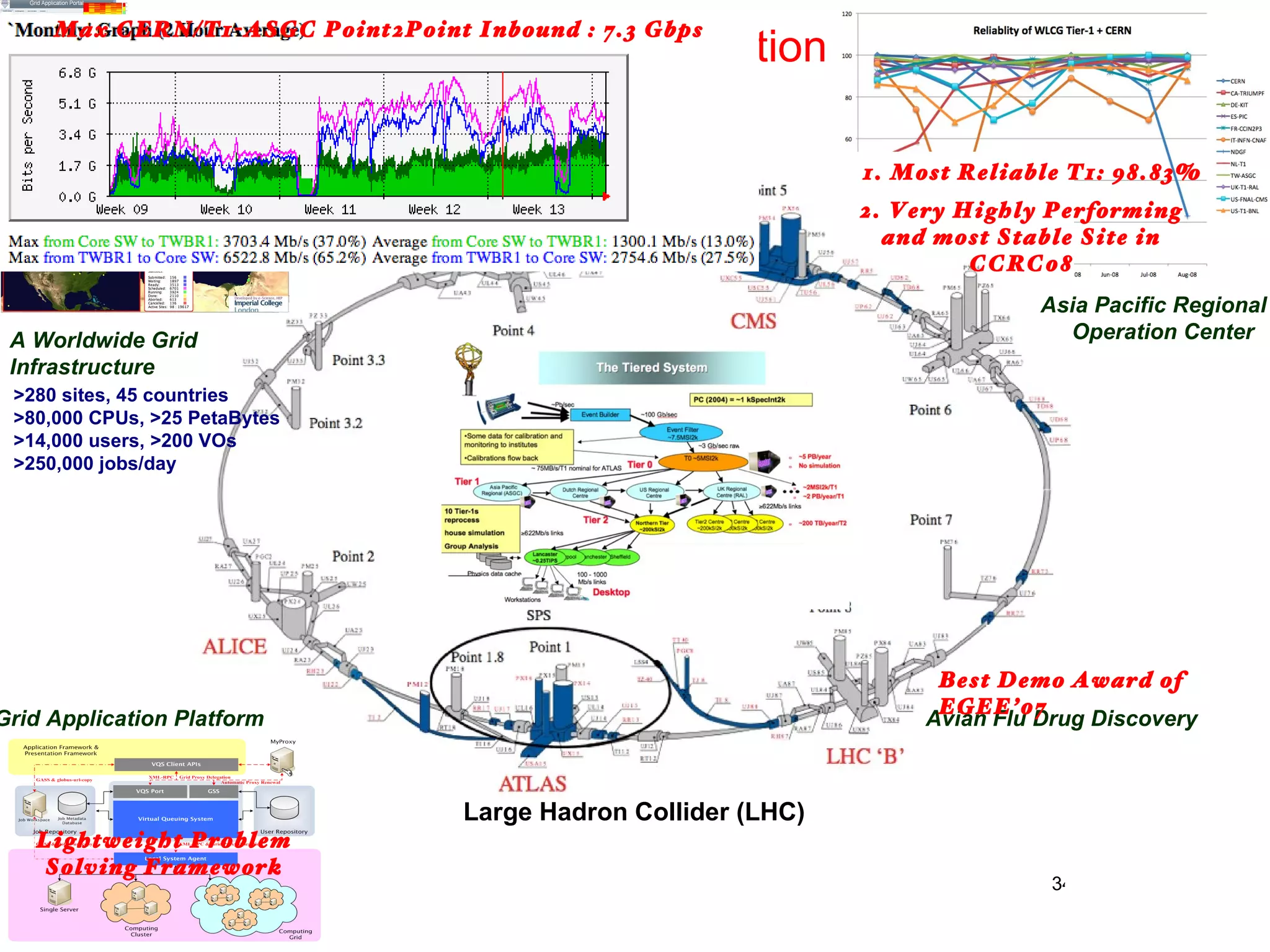

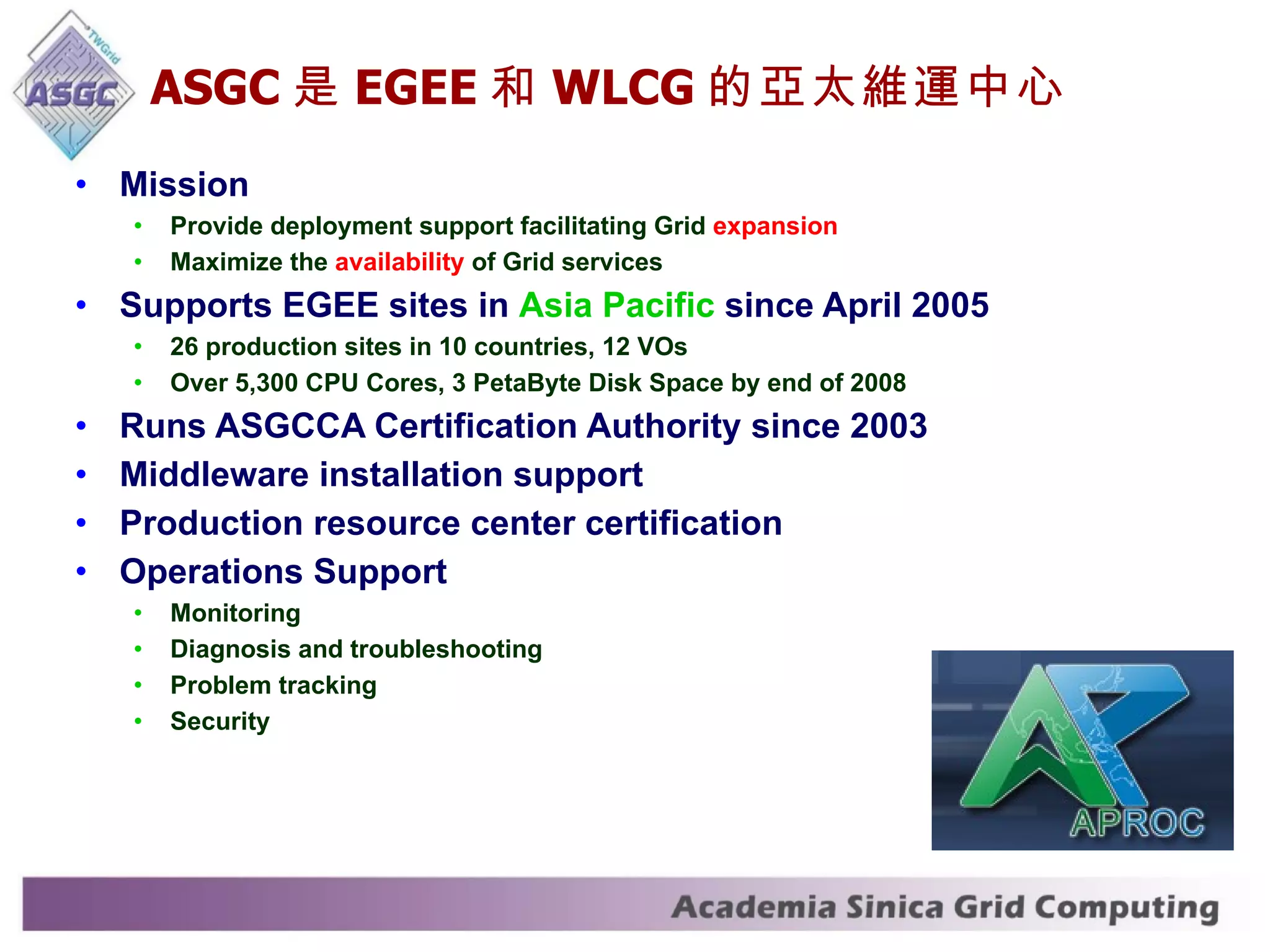

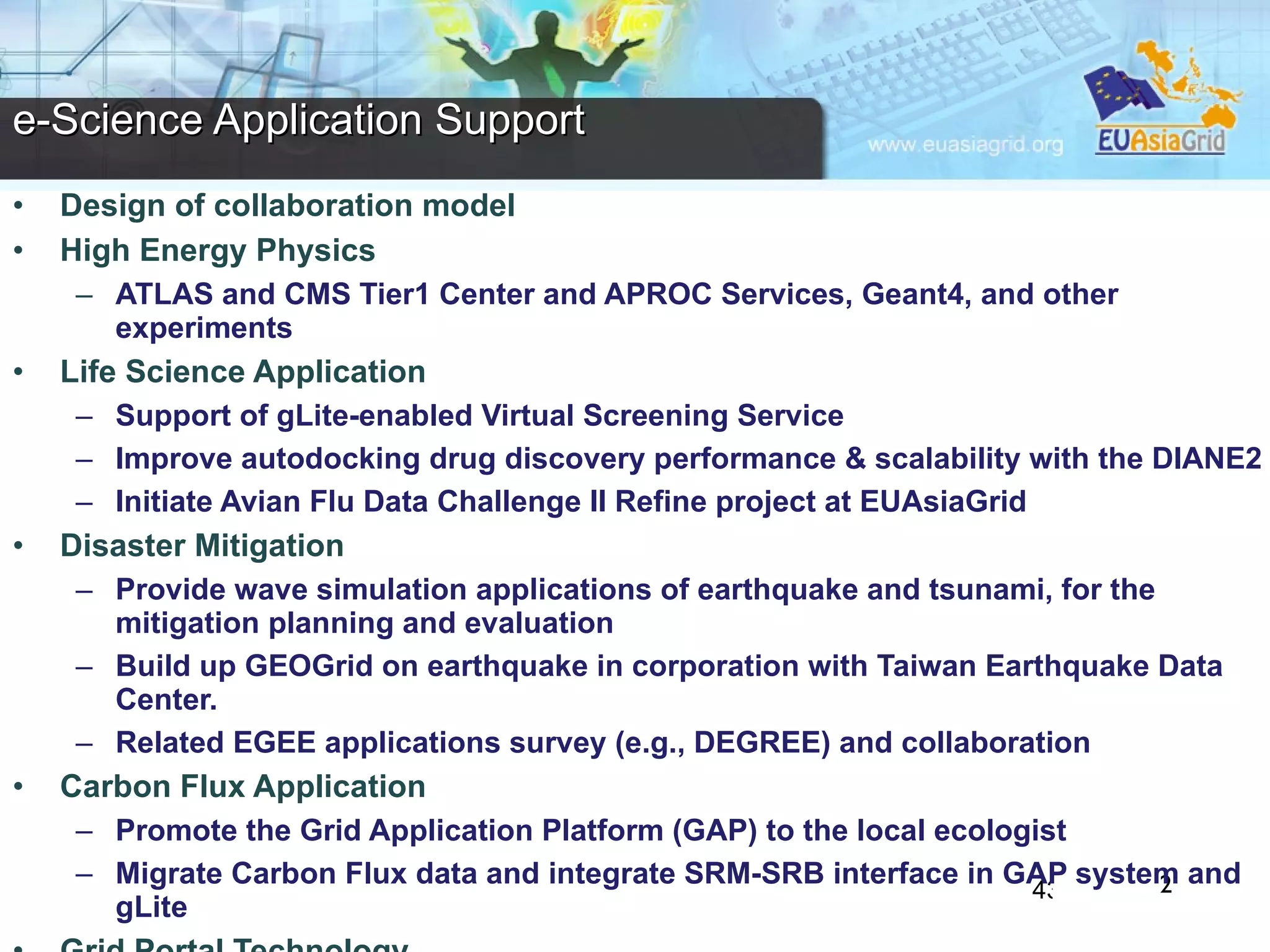

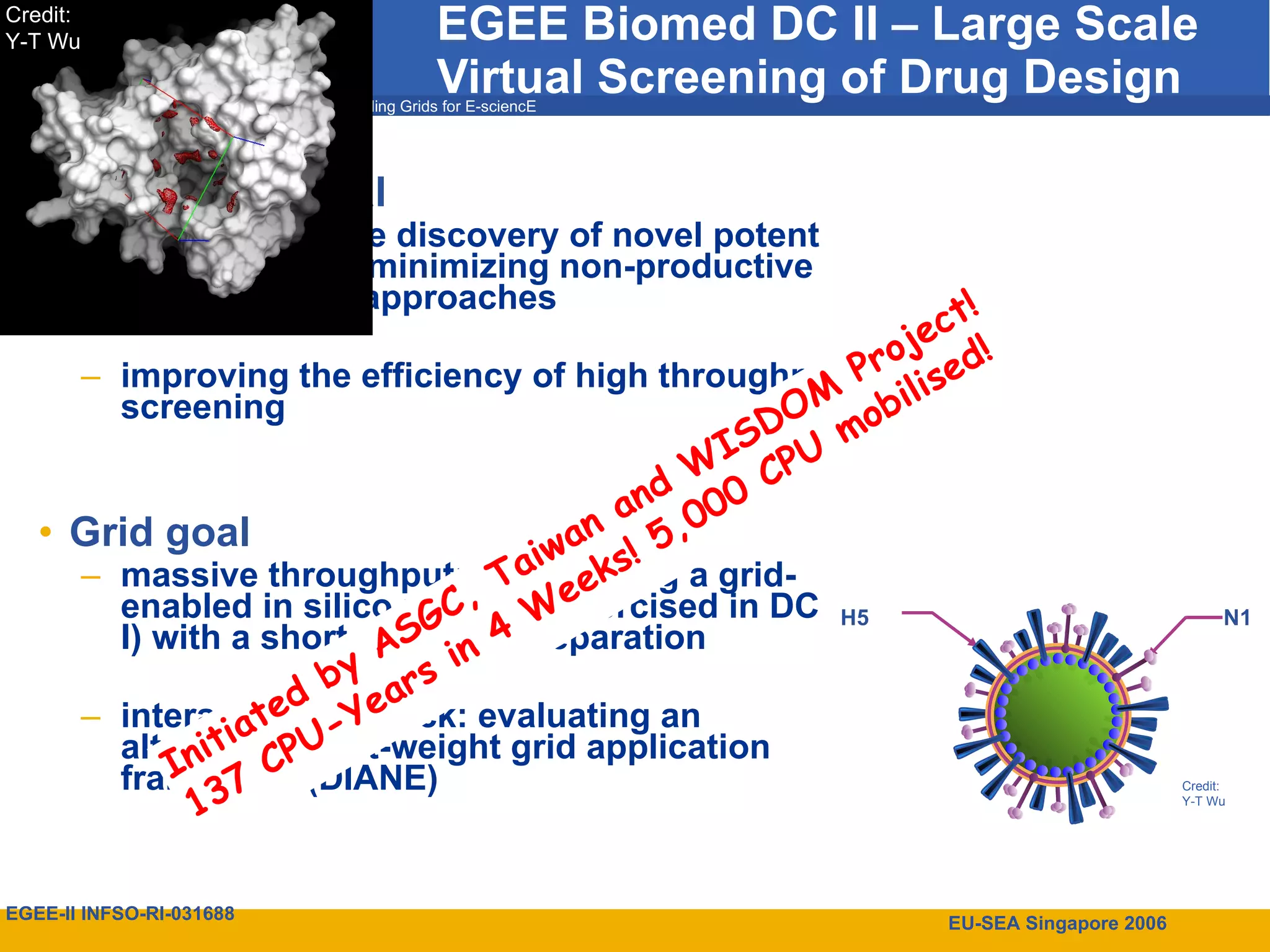

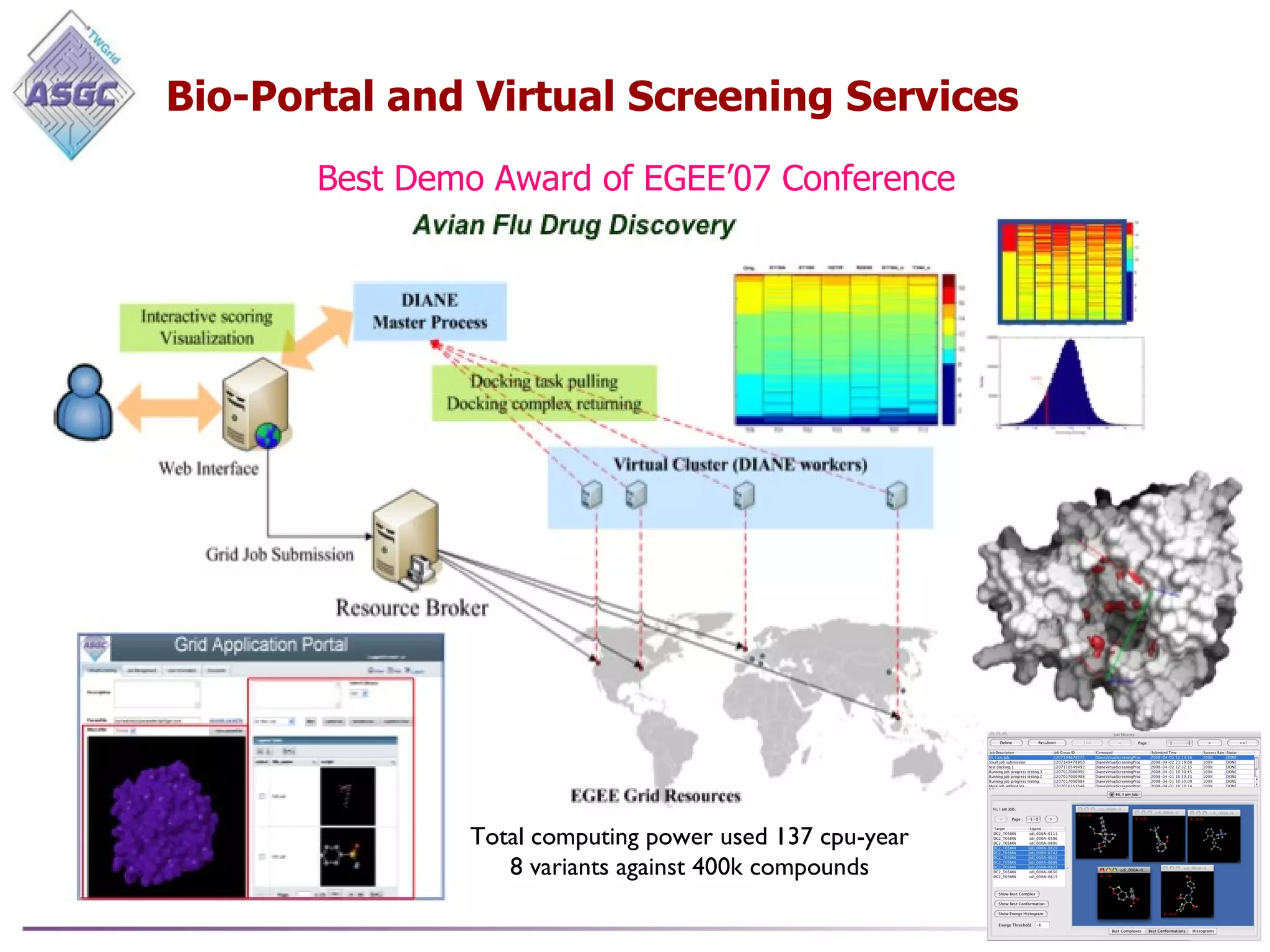

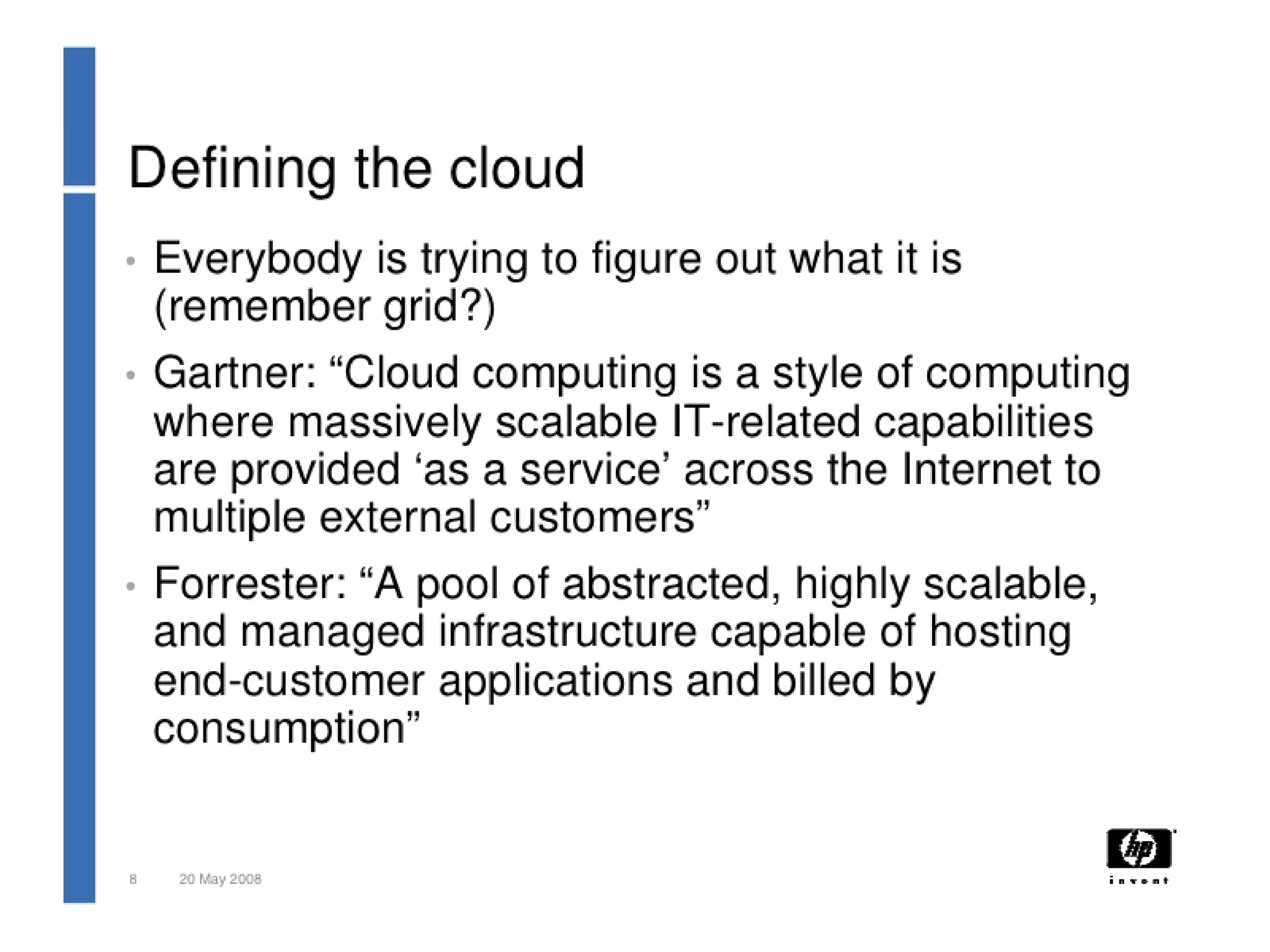

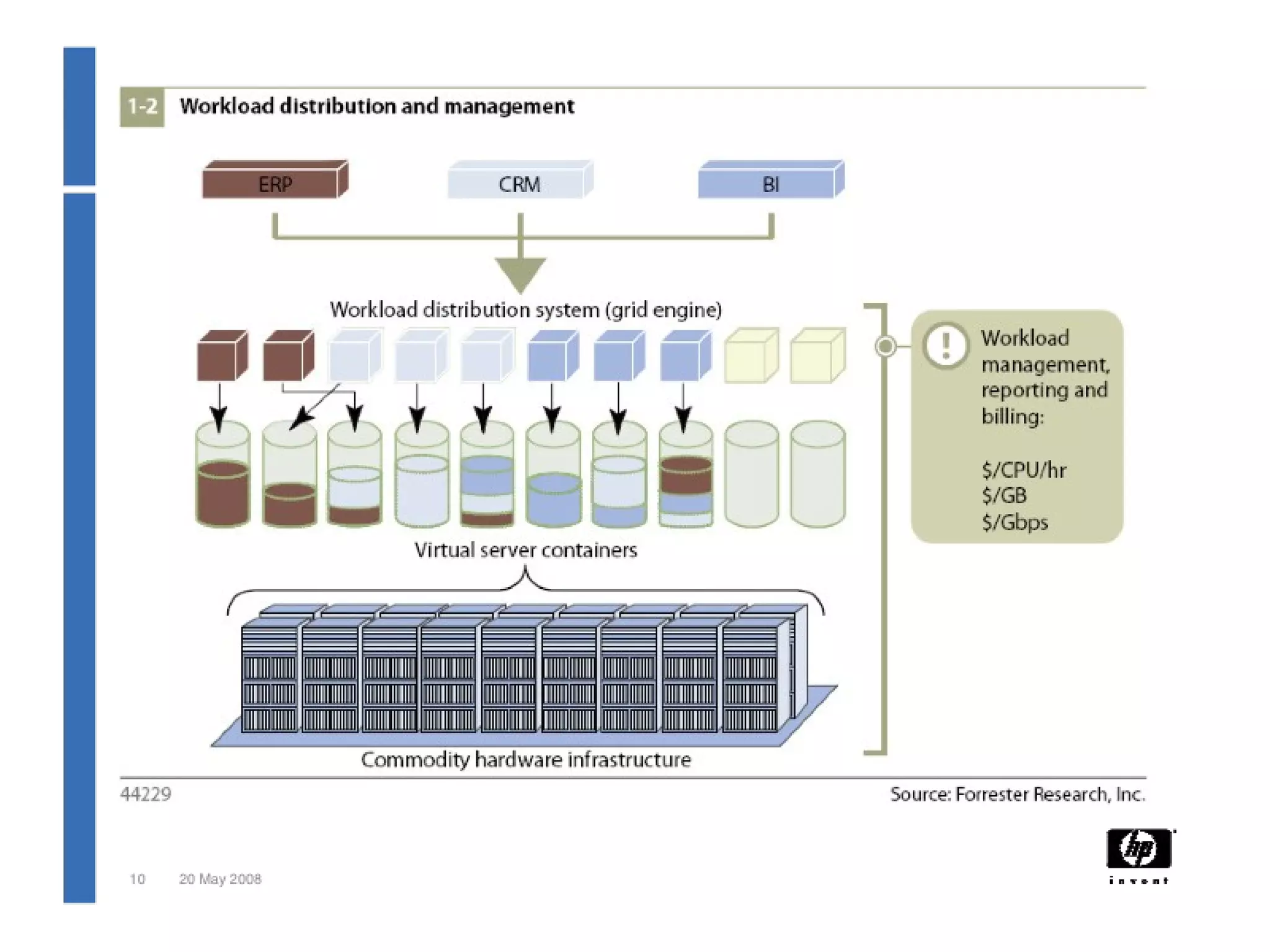

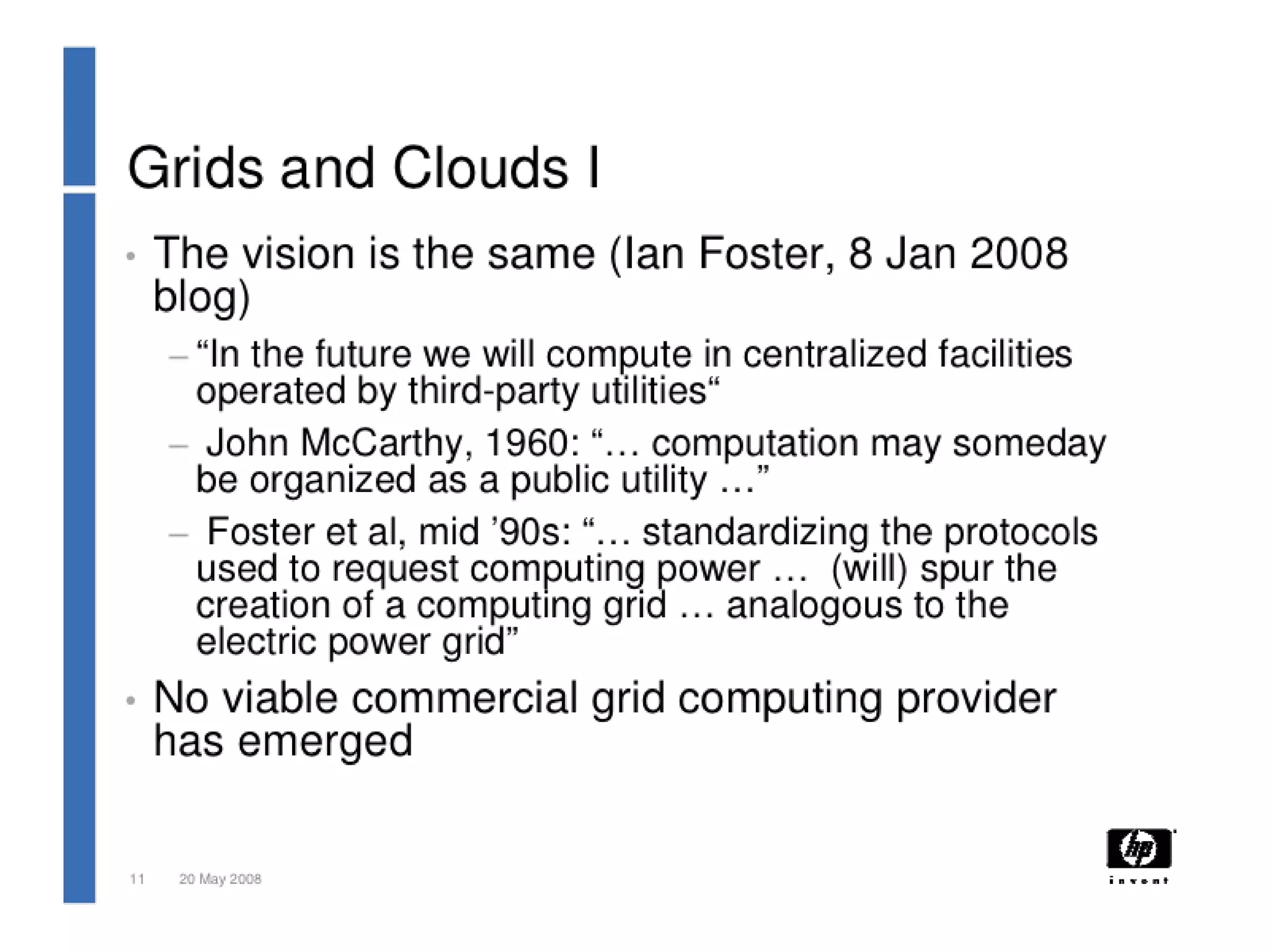

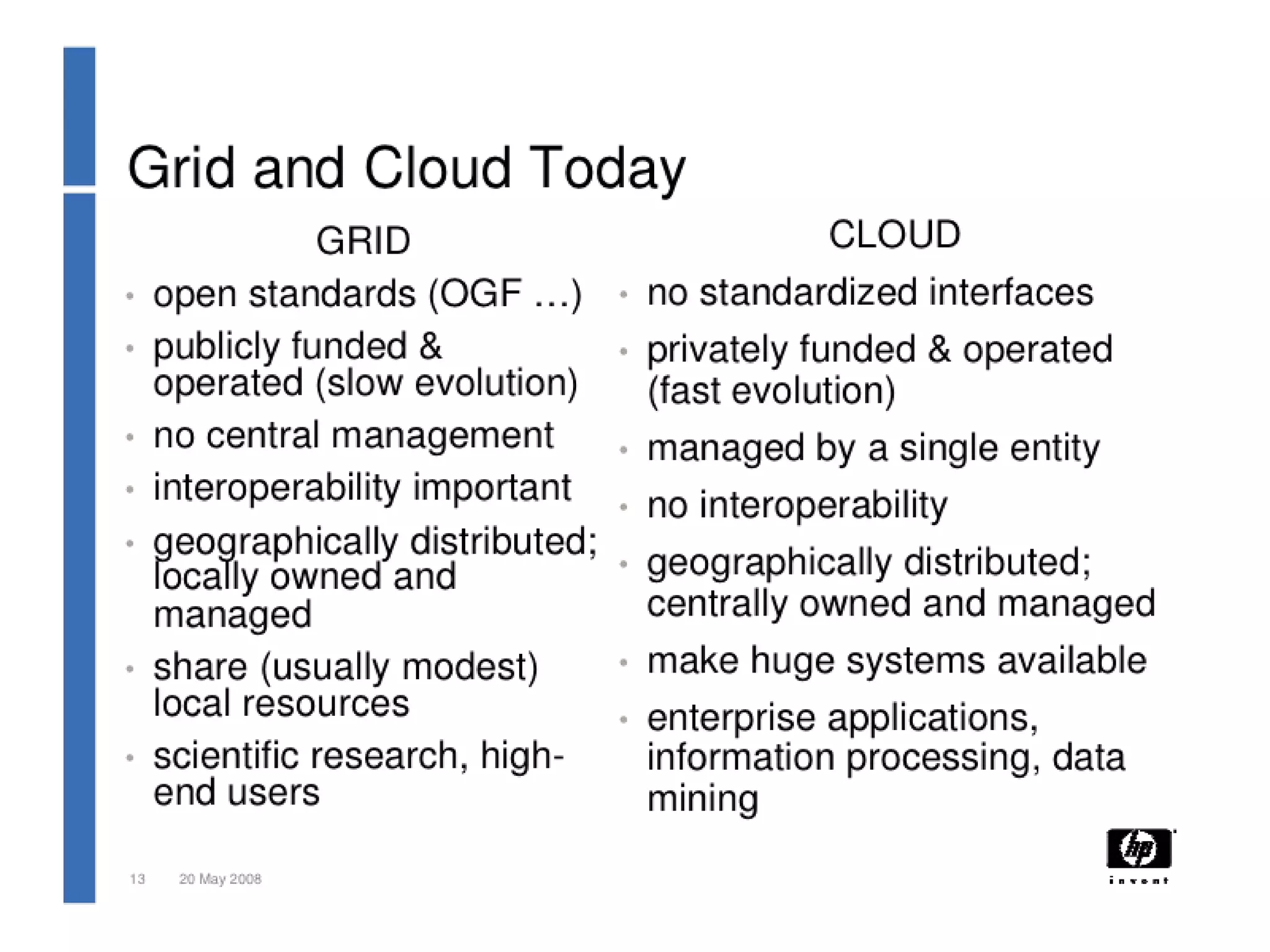

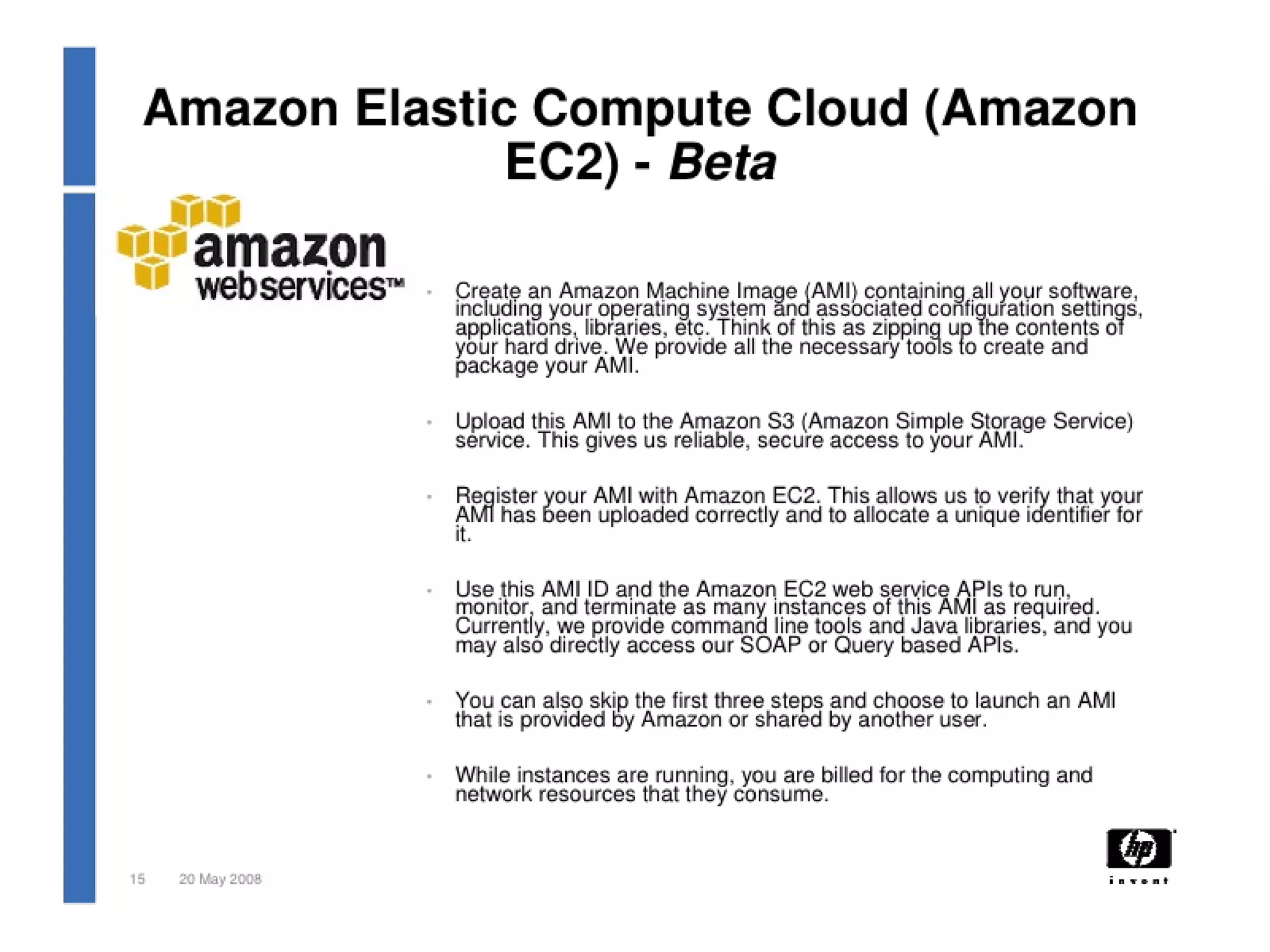

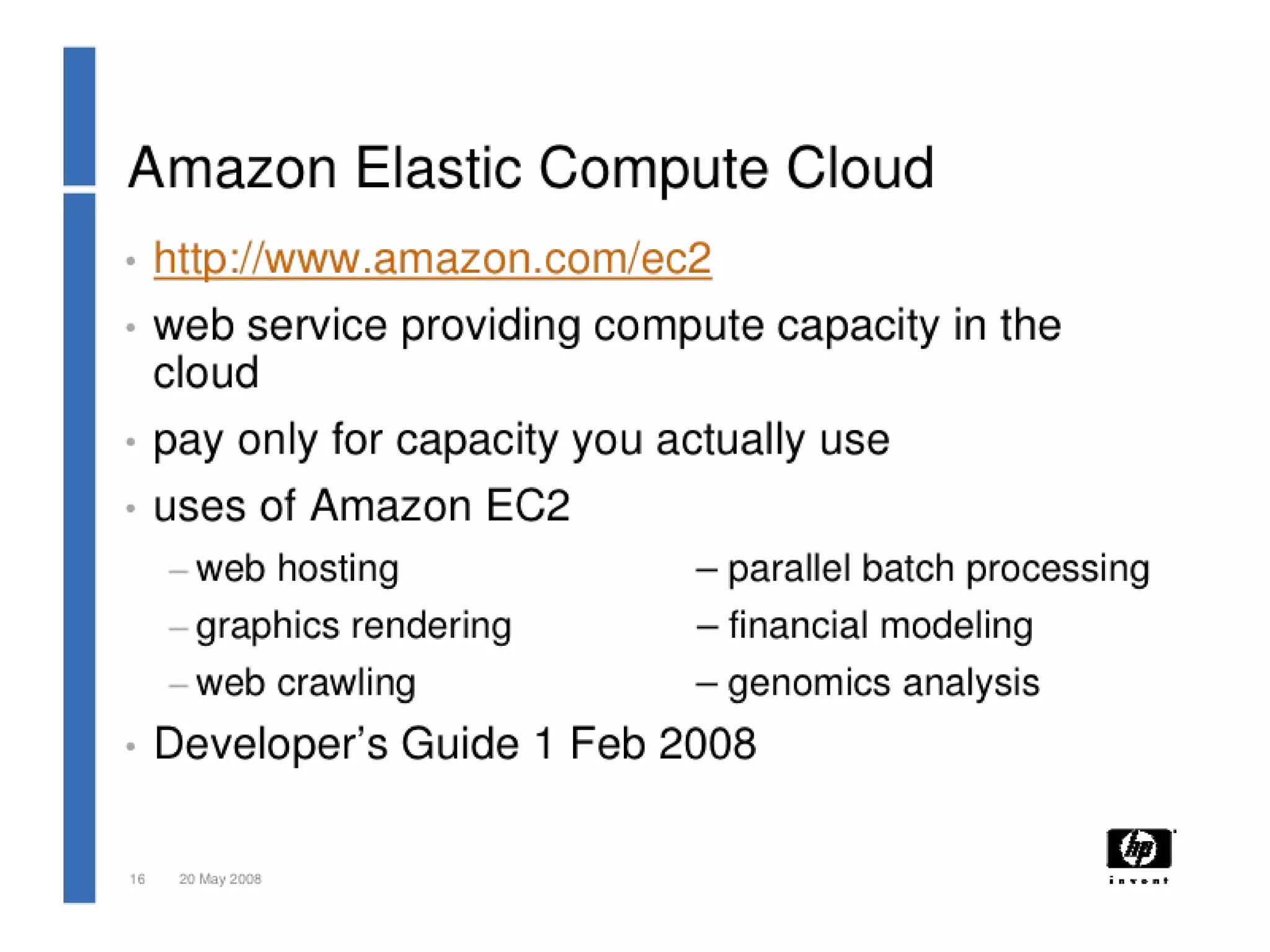

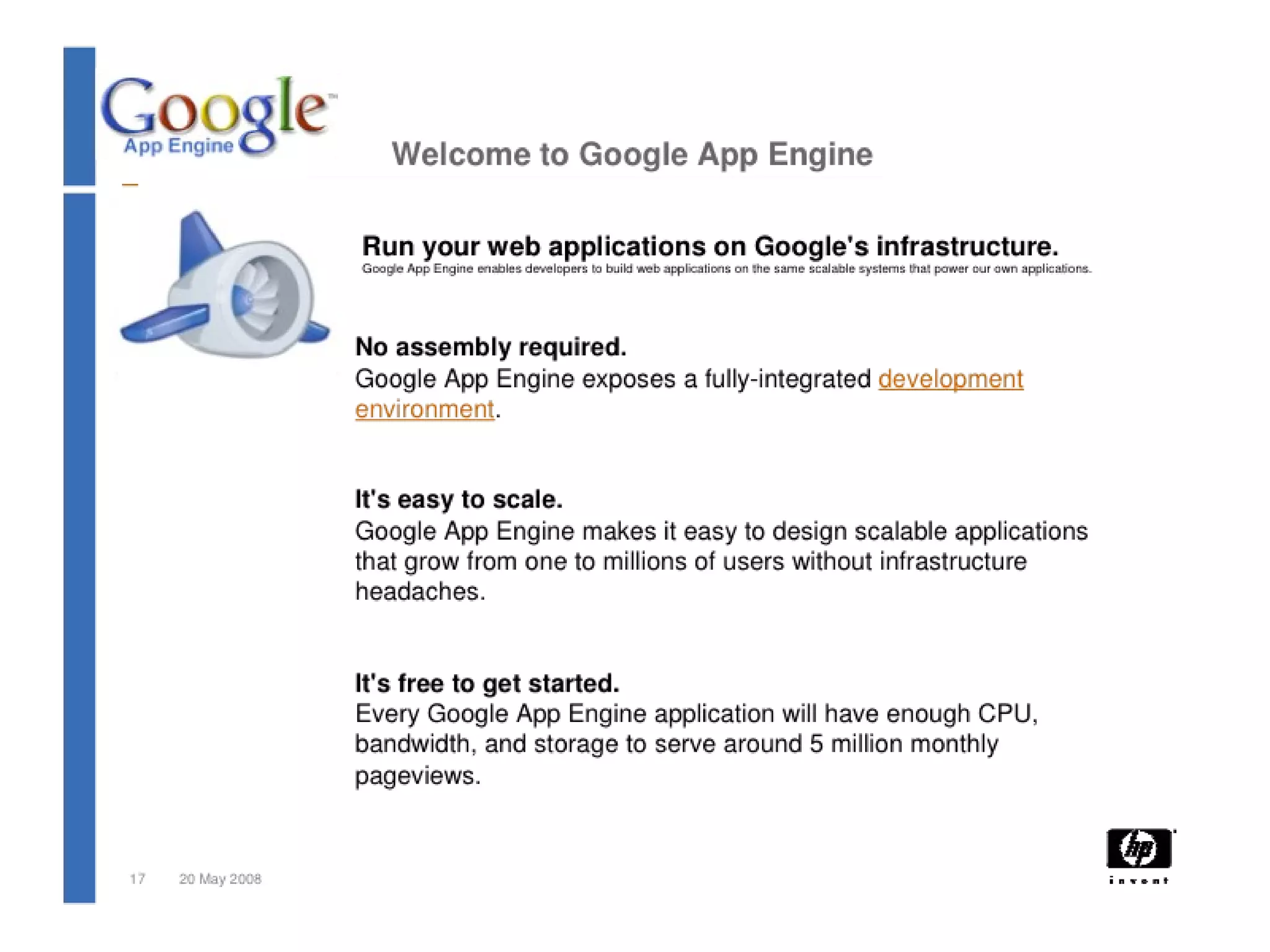

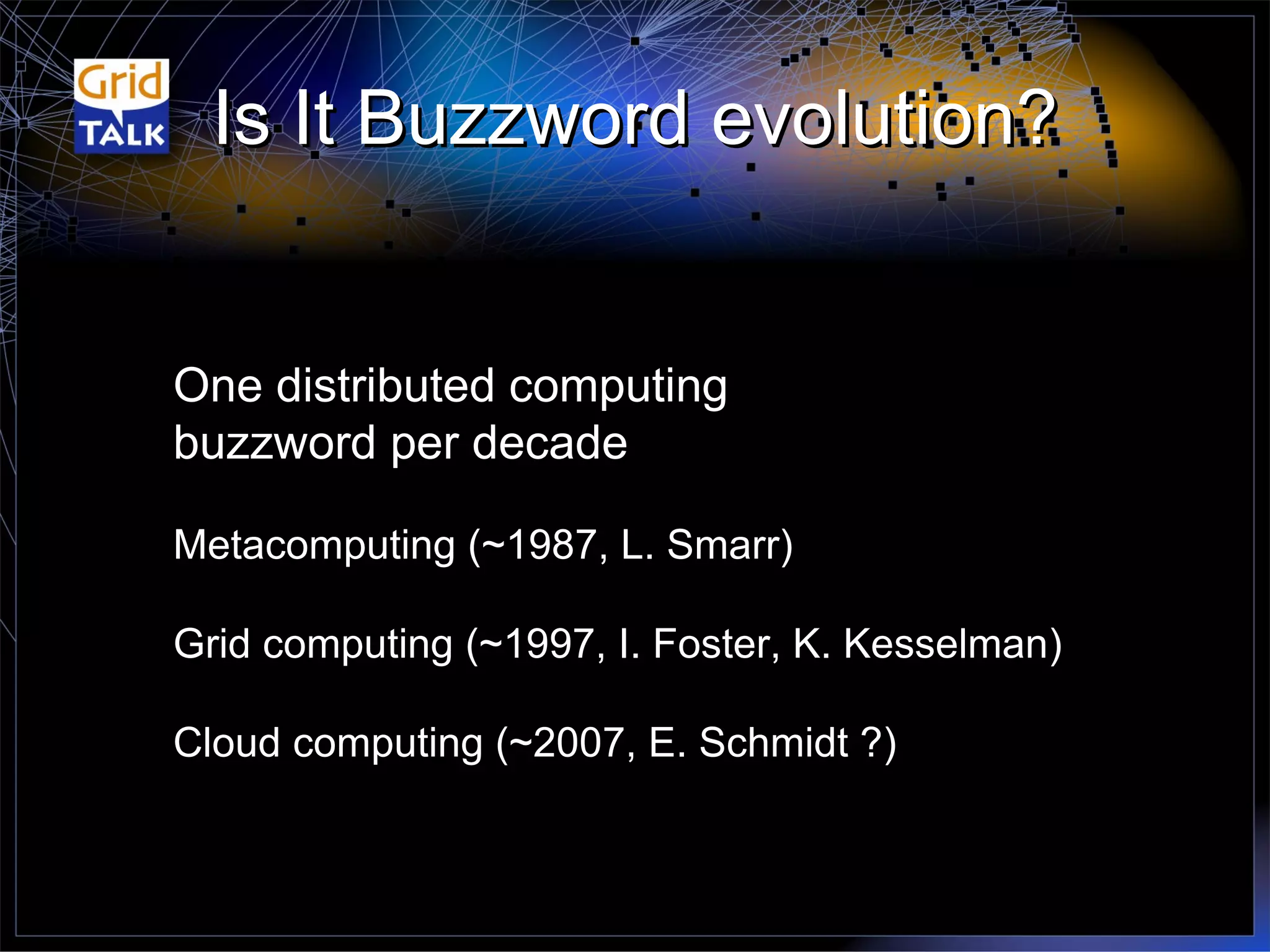

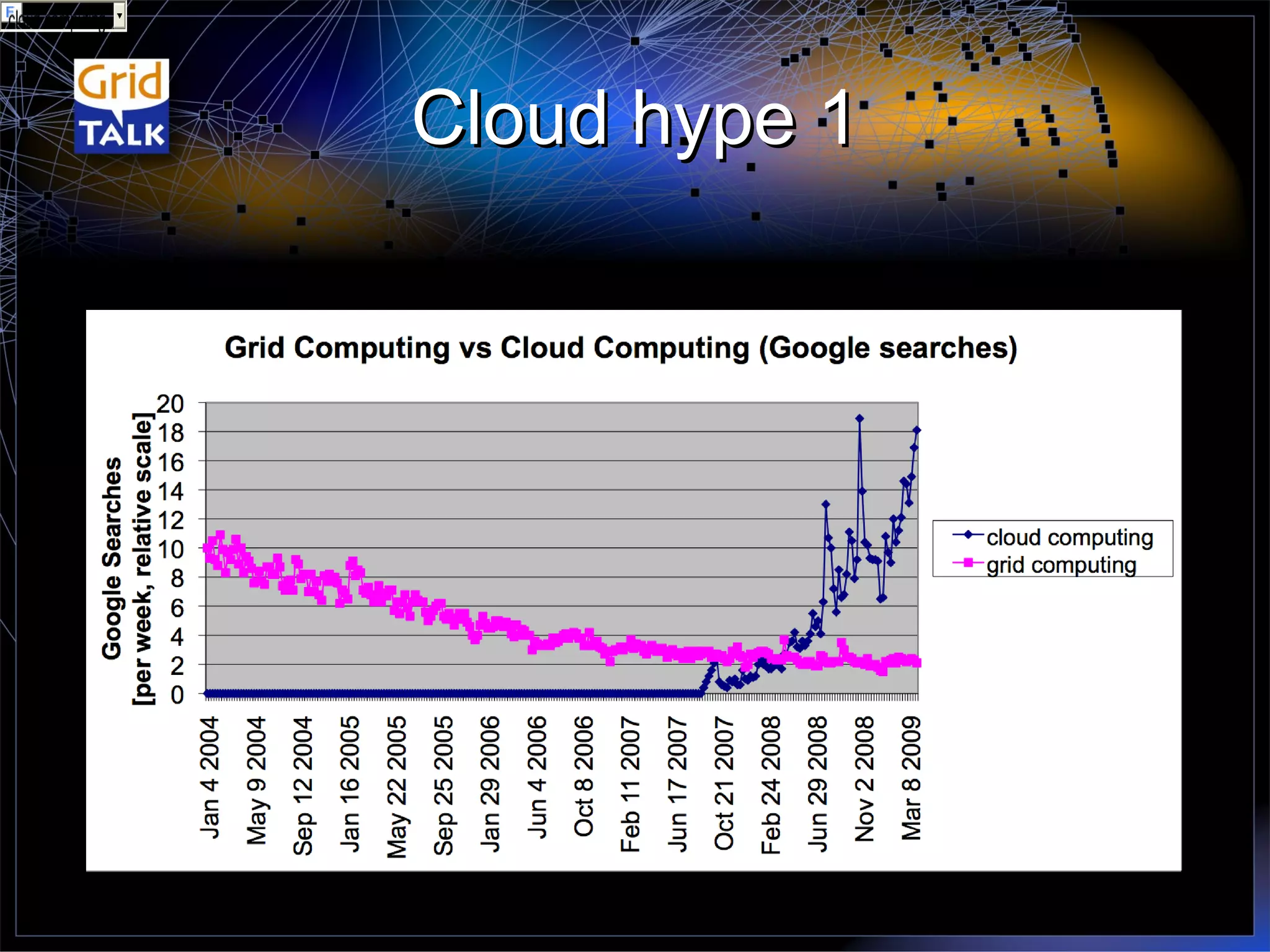

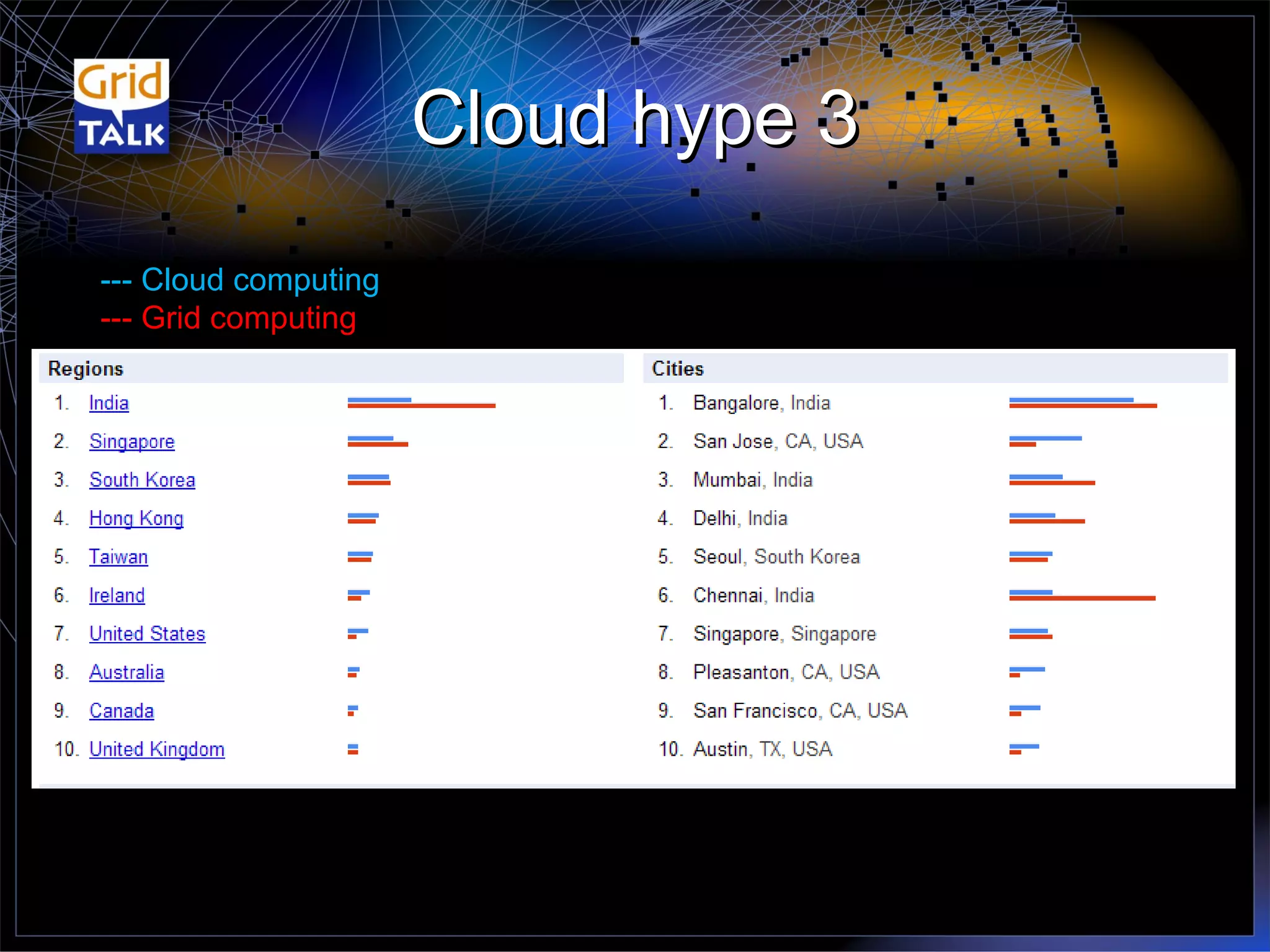

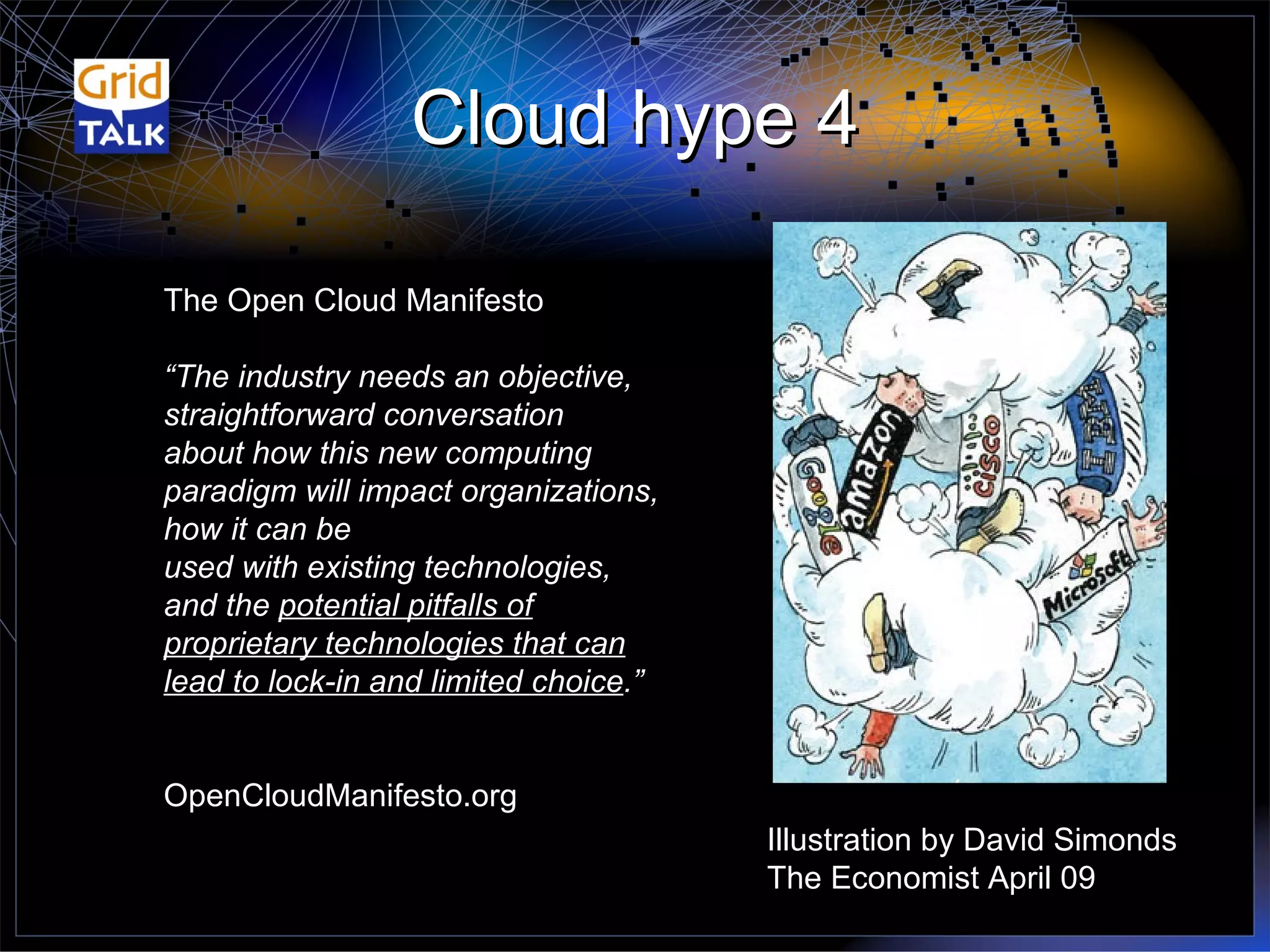

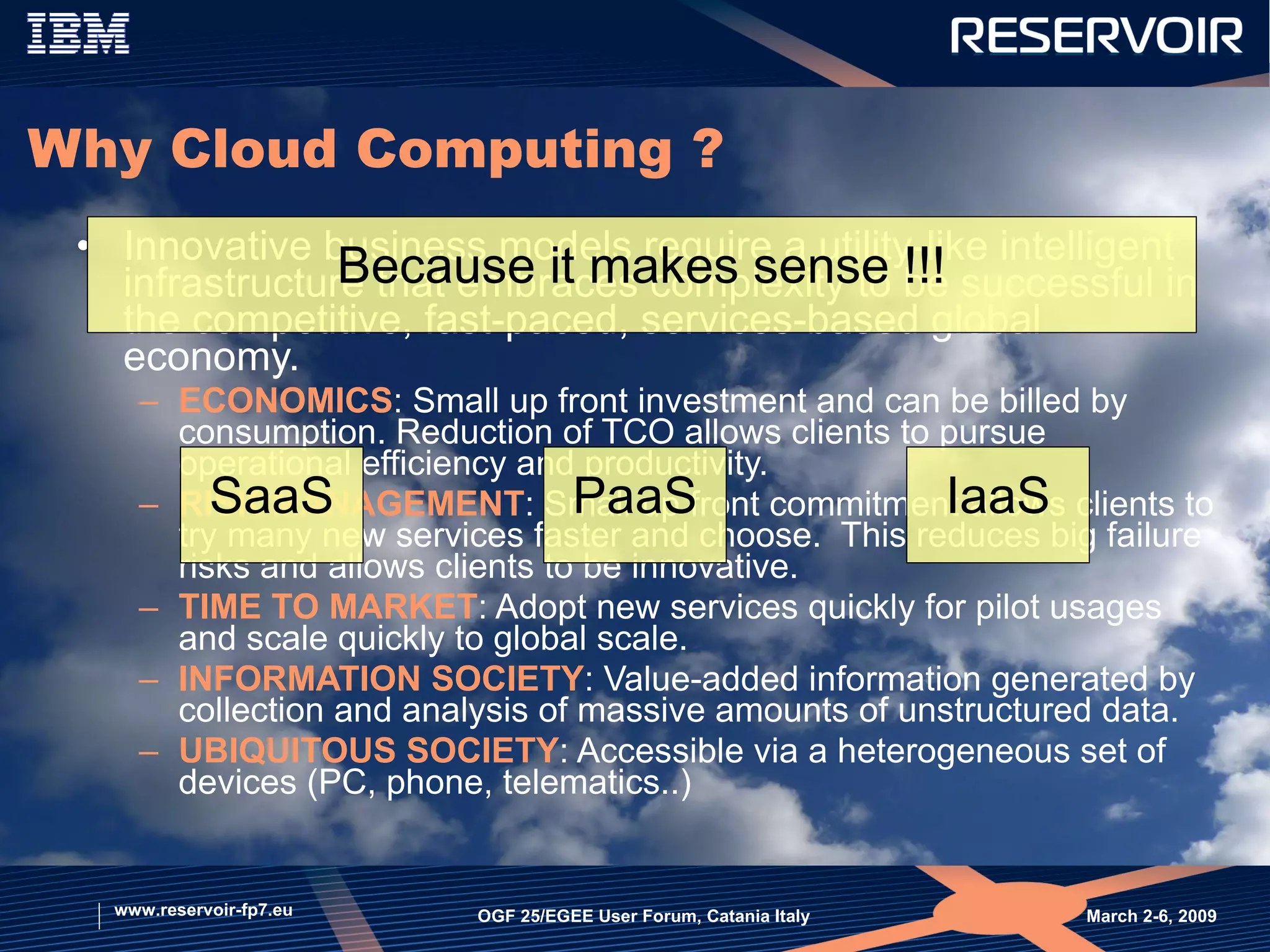

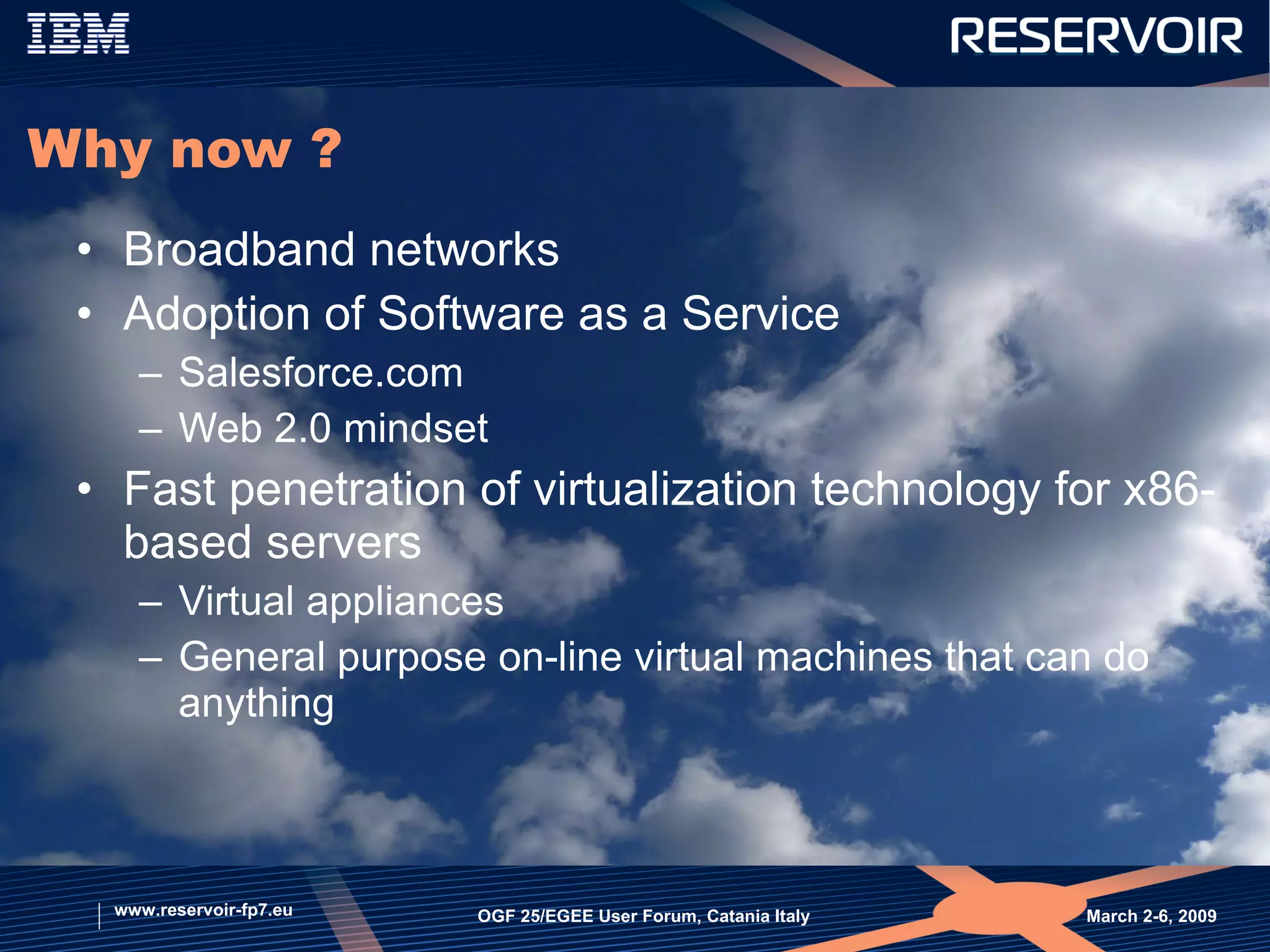

This presentation discusses the limits of information and communication technologies (ICT), such as computing power, data storage, and network bandwidth. It argues that future networks will need to scale in both size and functionality, for example through federation of multiple networks rather than a single homogeneous abstraction. Cloud computing is presented as one potential way to tackle these limits: it provides on-demand access to shared computing resources over a network in a scalable, elastic manner. However, cloud computing is still surrounded by much marketing hype, and open questions remain about its impact and how it can be integrated with existing technologies.

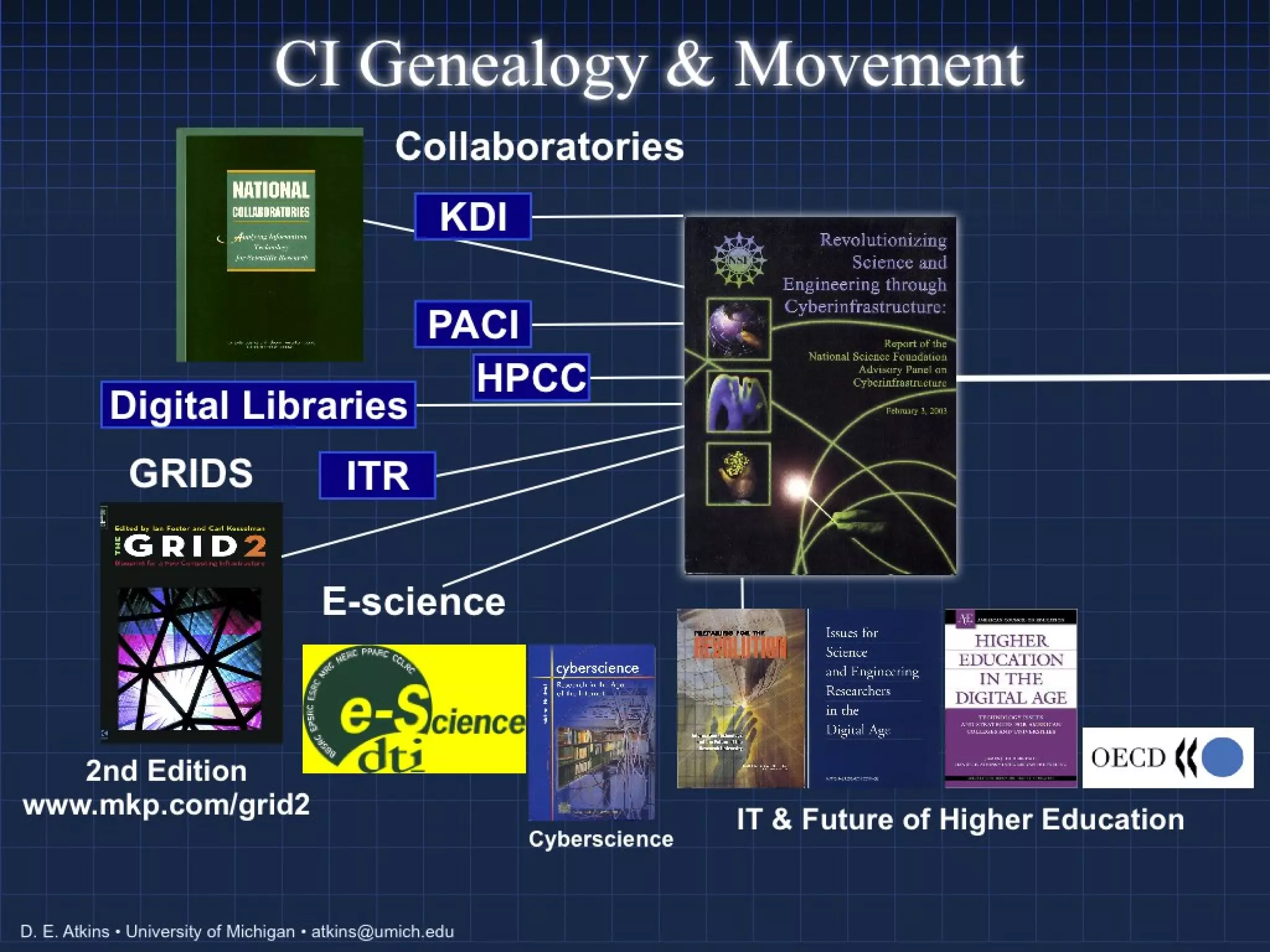

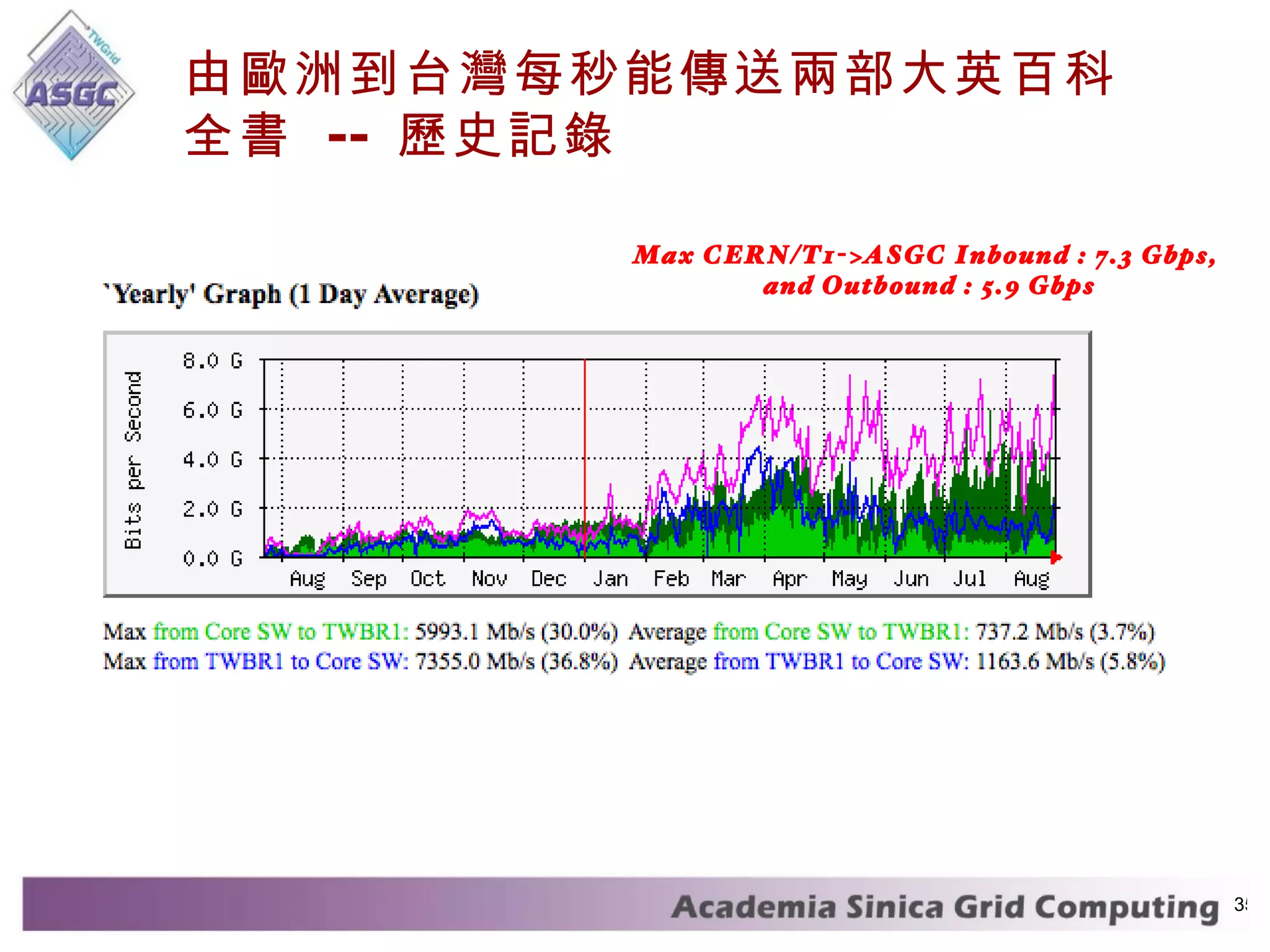

![Grids and Clouds: Tackle the Limits of ICT. Simon C. Lin (林誠謙), Academia Sinica Grid Computing, Taipei, Taiwan 11529, [email_address]. 28 April 2009, TrendMicro Clouds Seminar](https://image.slidesharecdn.com/random-090524102753-phpapp01/75/Cloud-Computing-1-2048.jpg)

![The Internet Hourglass [email_address] IP Application layer Link layer Ethernet, WIFI (802.11), ATM, SONET/SDH, FrameRelay, modem, ADSL, Cable, Bluetooth… Voice, Video, P2P, Email, youtube, …. Protocols – TCP, UDP, SCTP, ICMP,… Disruptive approaches need a disruptive architecture Changing/updating the Internet core is difficult or impossible ! (e.g. Multicast, Mobile IP, QoS, …) Everything on IP Homogeneous networking abstraction IP on Everything IPv6 IPvX](https://image.slidesharecdn.com/random-090524102753-phpapp01/75/Cloud-Computing-5-2048.jpg)

![Heterogeneity We have to extend the waist and host more paradigms Future networks have to scale in size AND functionality Enable network evolution Federation instead of homogeneous abstraction [email_address] Application layer Link layer CLEAN SLATE We need a framework that is able to host multiple networks ANA PROJECT (J02) Generic framework IP Sensor Home NW ??? Pub/Sub](https://image.slidesharecdn.com/random-090524102753-phpapp01/75/Cloud-Computing-6-2048.jpg)

![History of volunteer computing Applications Middleware 1995 2005 distributed.net, GIMPS SETI@home, Folding@home Commercial: Entropia, United Devices, ... BOINC Climateprediction.net [email_address] IBM World Community Grid [email_address] [email_address] ... 2005 2000 now Academic: Bayanihan, Javelin, ... Applications](https://image.slidesharecdn.com/random-090524102753-phpapp01/75/Cloud-Computing-33-2048.jpg)

![Is it really new? Massive scale resource sharing over the Internet. Sounds a lot like grid computing, yet … Grid: highly specialized resources that need to be shared by thousands [researchers]; large data sets; sharing is a goal; in many cases, providers are also consumers; interoperable by design. Cloud: reducing CAPEX, OPEX, time to market; millions of users that share to save, not for the sake of sharing; providers want market share and customer lock-in; need for interoperability driven by customers. Source: OGF 25/EGEE User Forum, Catania, Italy, March 2-6, 2009, www.reservoir-fp7.eu](https://image.slidesharecdn.com/random-090524102753-phpapp01/75/Cloud-Computing-68-2048.jpg)