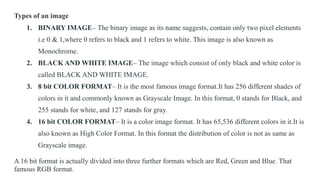

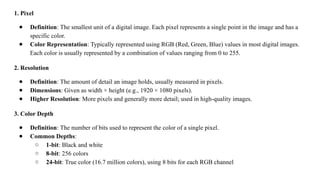

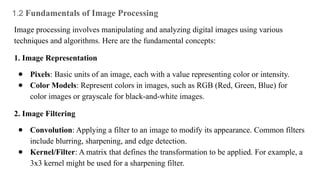

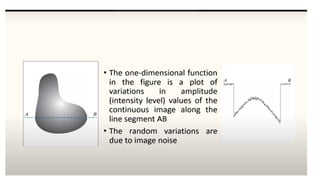

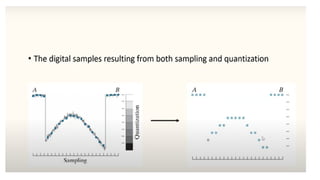

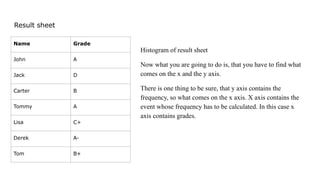

The document provides an overview of digital image processing: its fundamentals, the main types of images, and key concepts such as pixels, resolution, and color depth. It covers image acquisition and manipulation techniques, along with applications in fields including medical imaging, robotics, and pattern recognition. It also highlights histograms as a central tool for analyzing image data and for enhancing image quality through techniques such as histogram equalization.
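
As an illustration of the histogram equalization mentioned above, here is a minimal sketch in plain Python. It is not taken from the slides. It assumes a grayscale image stored as a flat list of integer intensities in the range 0 to `levels - 1`, and it uses the common CDF-based remapping. The function names `histogram` and `equalize` are hypothetical.

```python
def histogram(pixels, levels=256):
    """Count how many pixels take each intensity value."""
    counts = [0] * levels
    for value in pixels:
        counts[value] += 1
    return counts


def equalize(pixels, levels=256):
    """Remap intensities so that they spread over the full range.

    Assumption: `pixels` is a flat list of ints in [0, levels - 1].
    """
    if not pixels:
        return []

    # Build the cumulative distribution function (CDF) of the histogram.
    cdf = []
    running = 0
    for count in histogram(pixels, levels):
        running += count
        cdf.append(running)

    total = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)  # CDF at the darkest used level
    if total == cdf_min:
        # Every pixel has the same intensity, so there is nothing to stretch.
        return list(pixels)

    # Lookup table: scale the CDF to [0, levels - 1]; unused dark levels map to 0.
    scale = (levels - 1) / (total - cdf_min)
    lut = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [lut[value] for value in pixels]


if __name__ == "__main__":
    low_contrast = [100, 100, 101, 102, 102, 103]
    print(equalize(low_contrast))  # [0, 0, 64, 191, 191, 255]
```

In this small example the input intensities occupy only four adjacent gray levels, and the remapping stretches them across the whole 0 to 255 range, which is the contrast improvement that equalization aims for.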