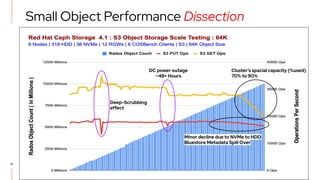

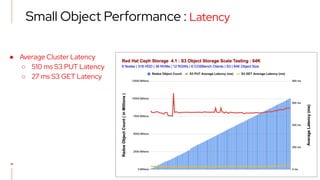

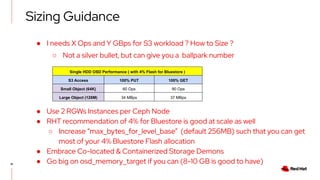

This document describes scale testing of Red Hat Ceph Storage (RHCS) with more than 10 billion objects and reports deterministic performance for both small-object and large-object workloads. Across 318 HDDs and 36 NVMe devices, the cluster averaged 17,800 S3 PUT operations per second and 28,800 S3 GET operations per second, and performance remained acceptable during failure scenarios. The testing shows that RHCS can exceed current limits, supporting its viability for high-scale object storage applications.