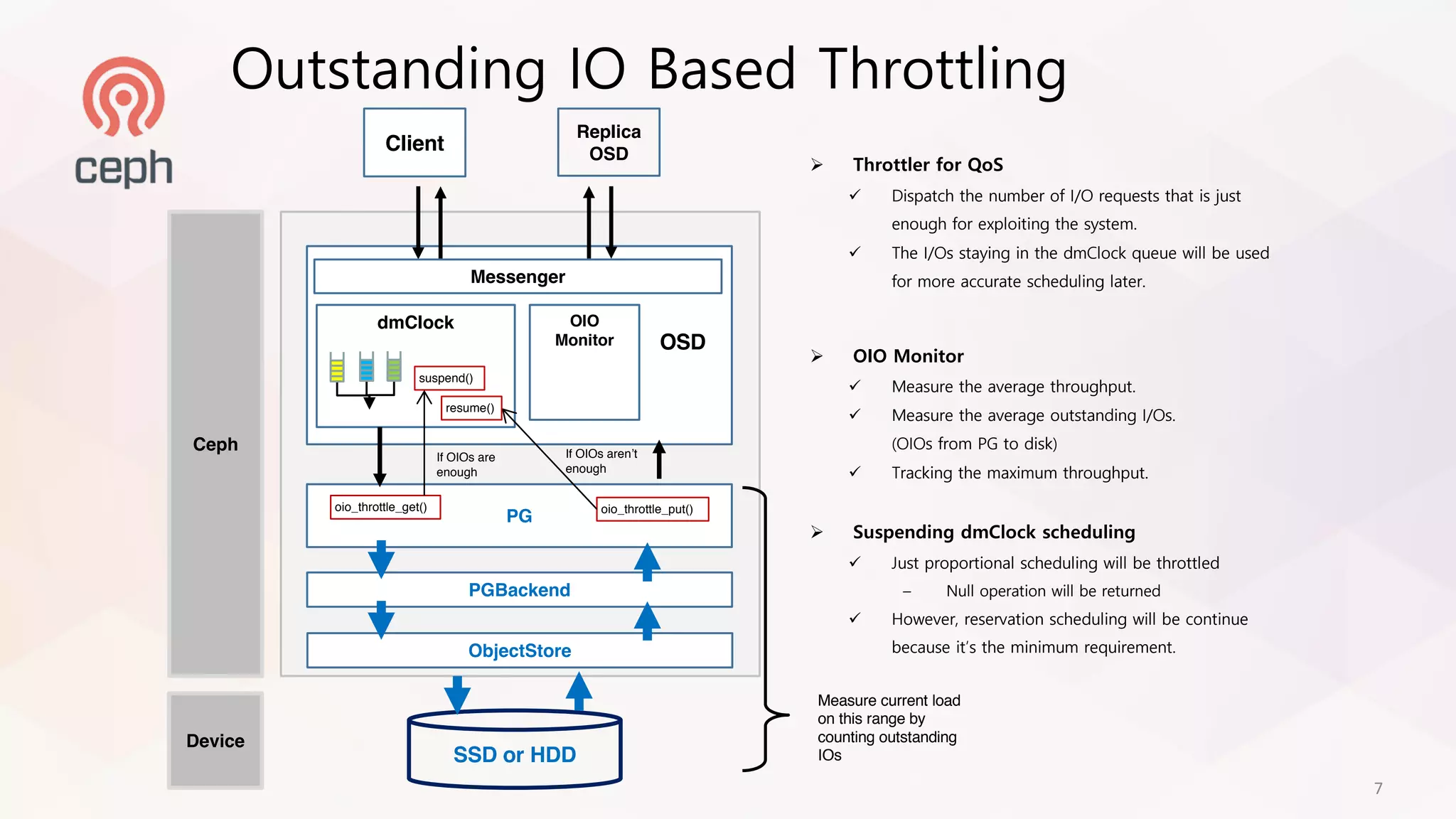

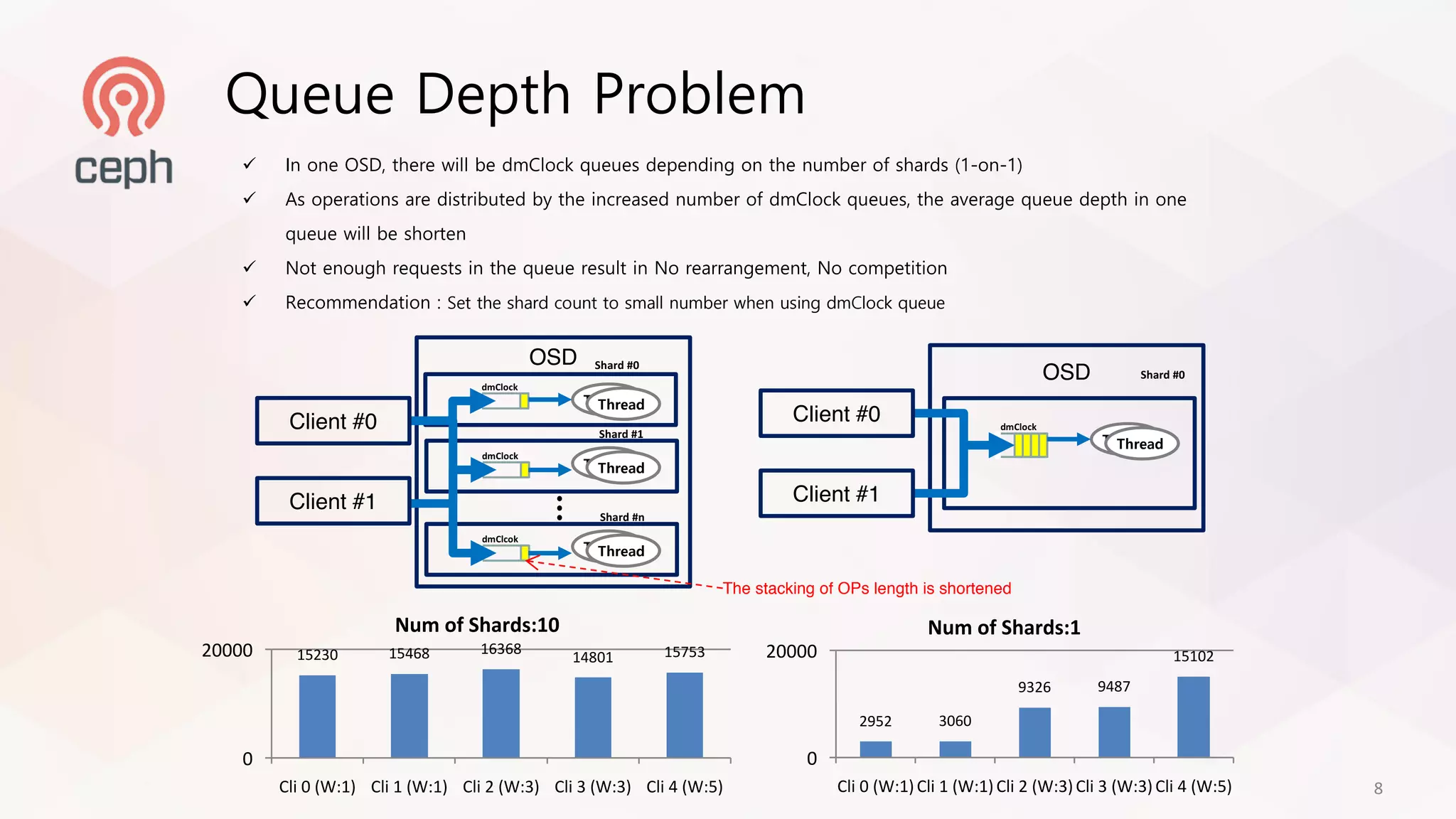

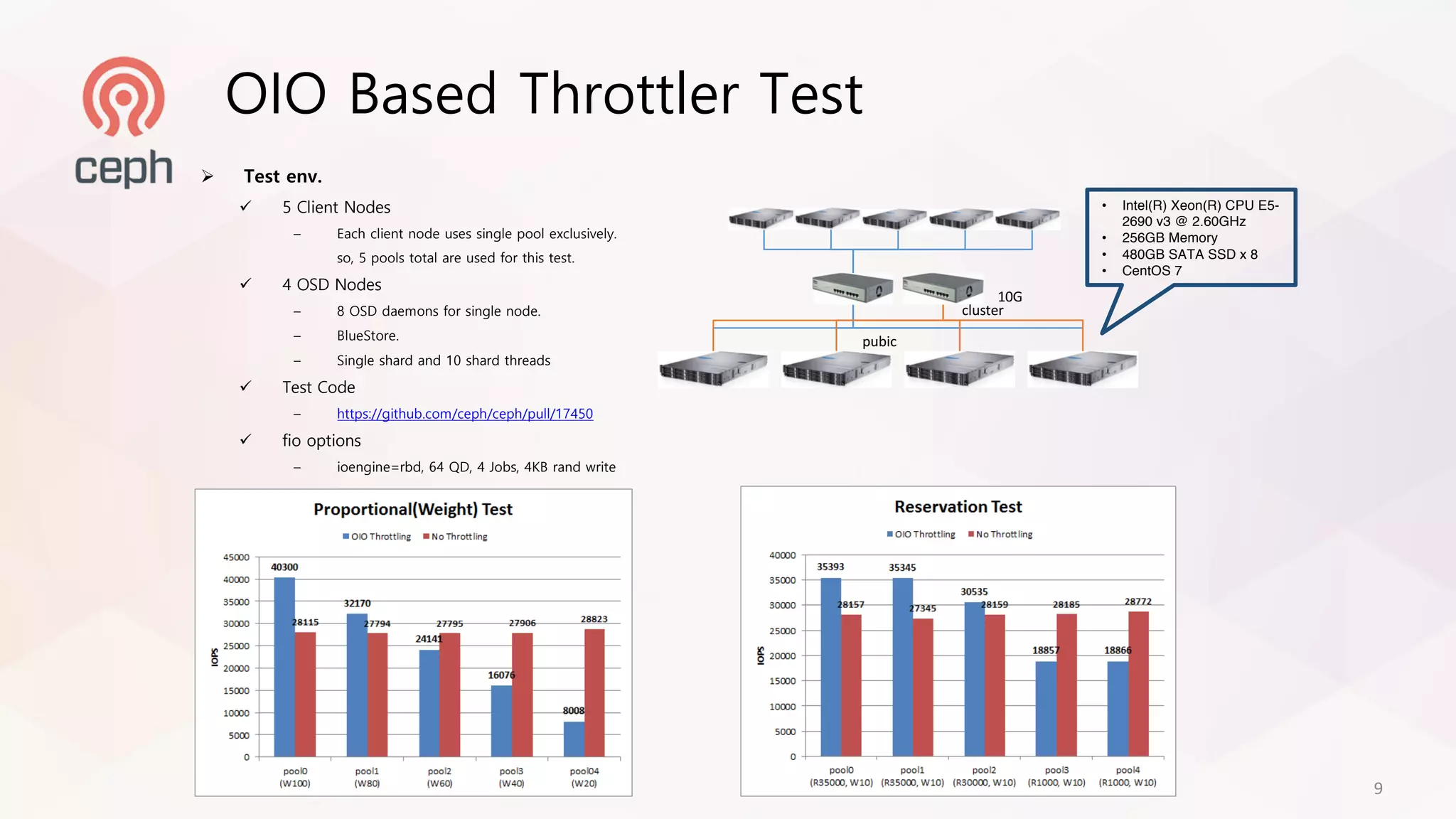

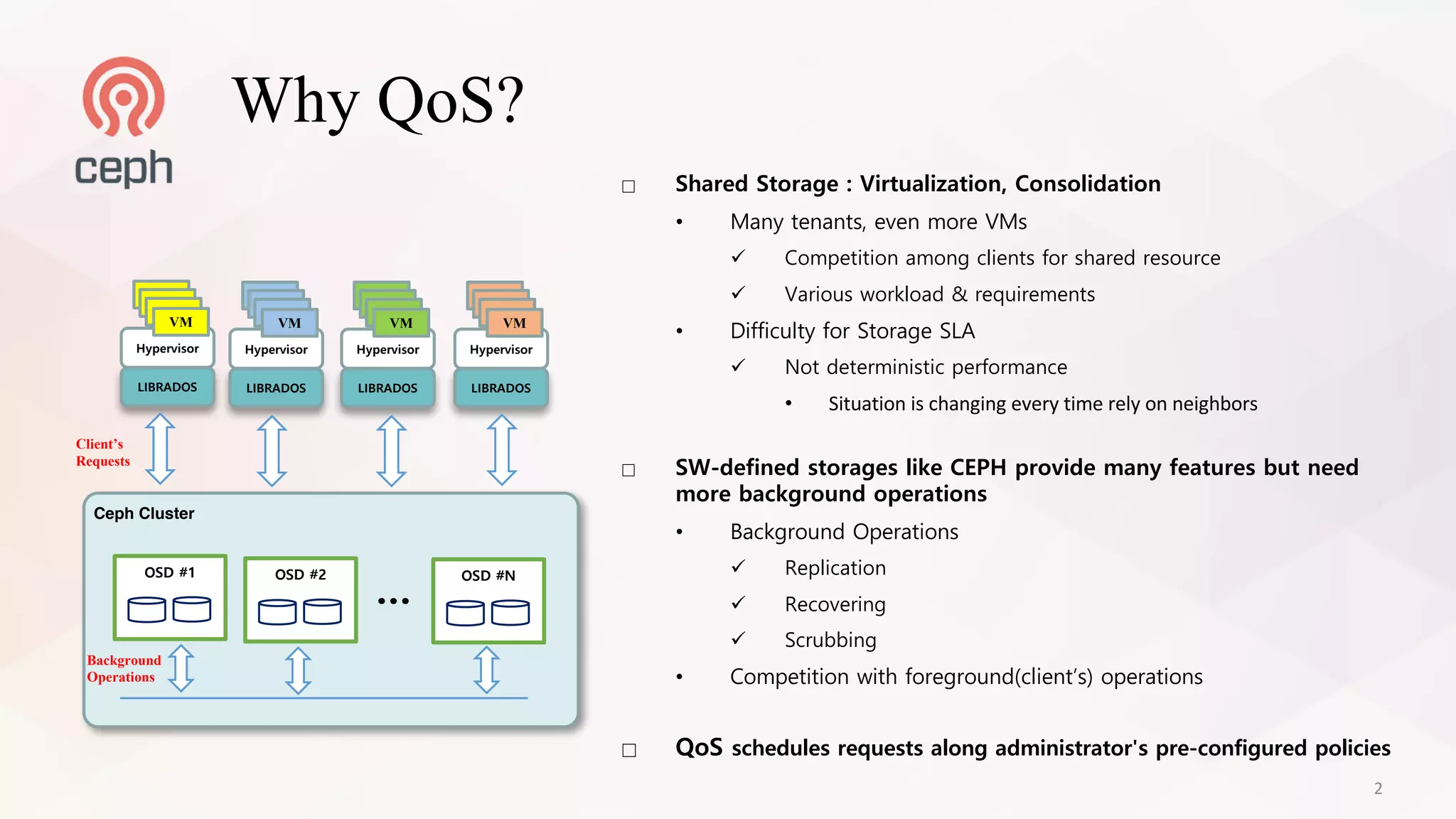

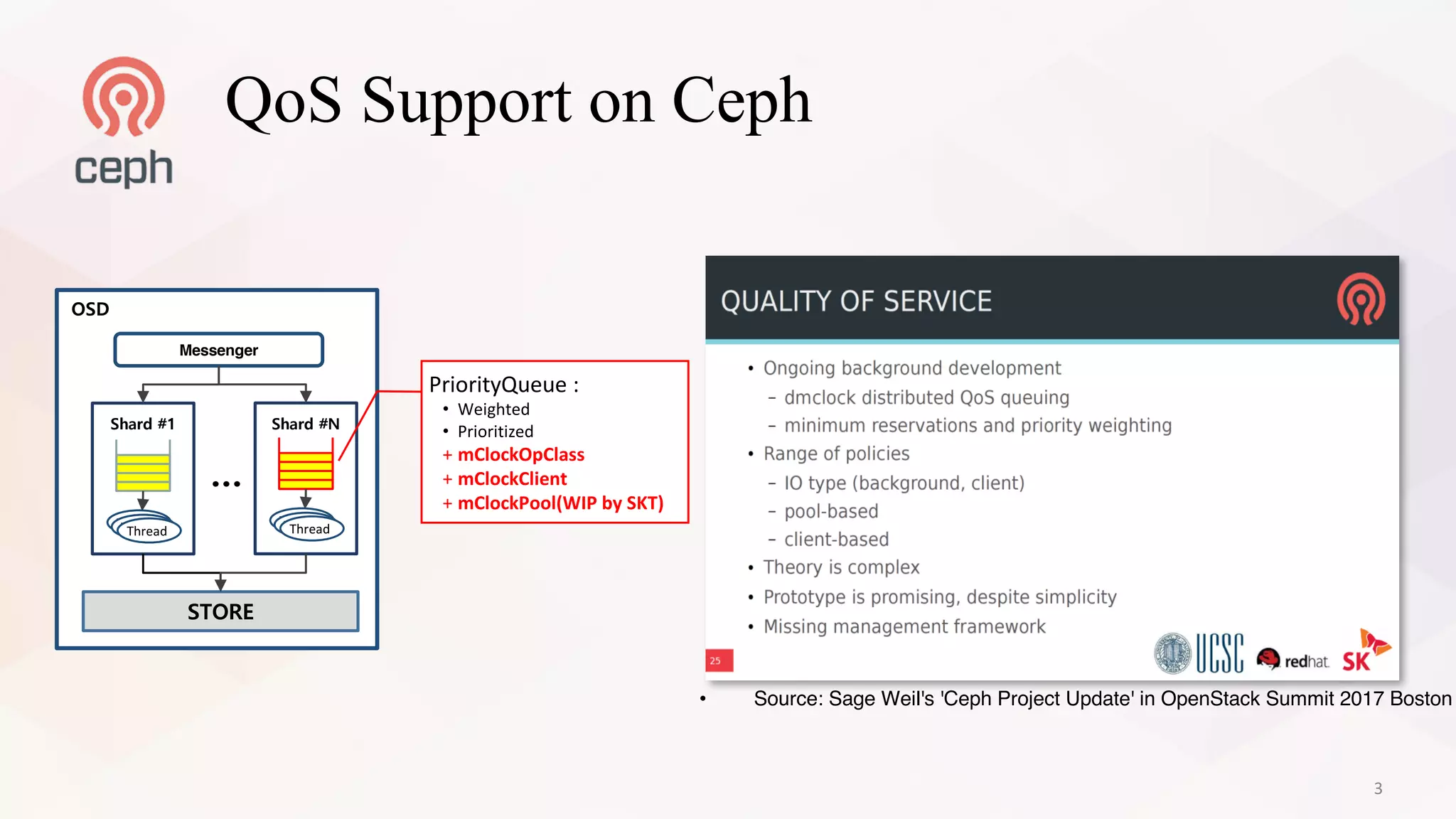

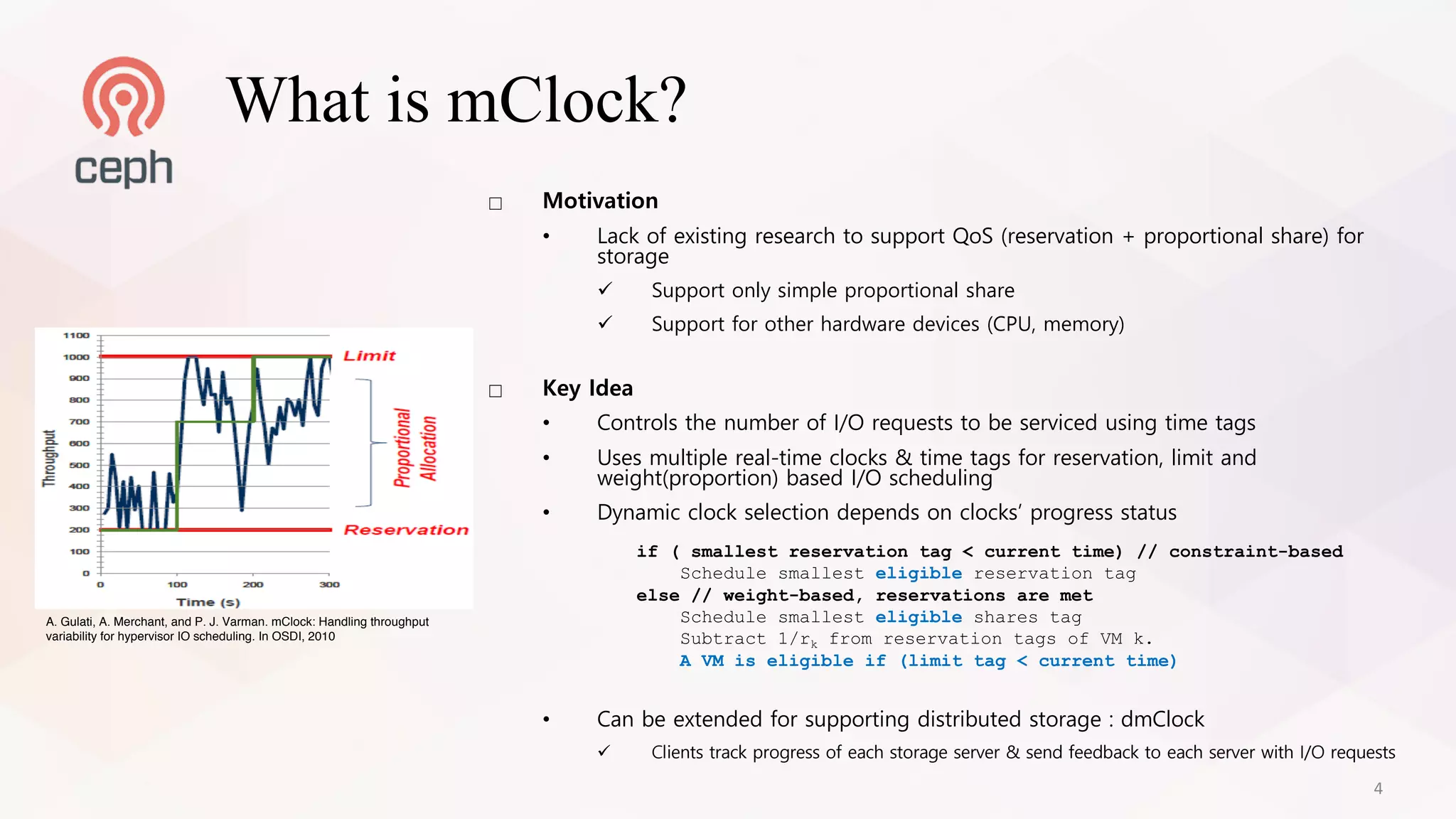

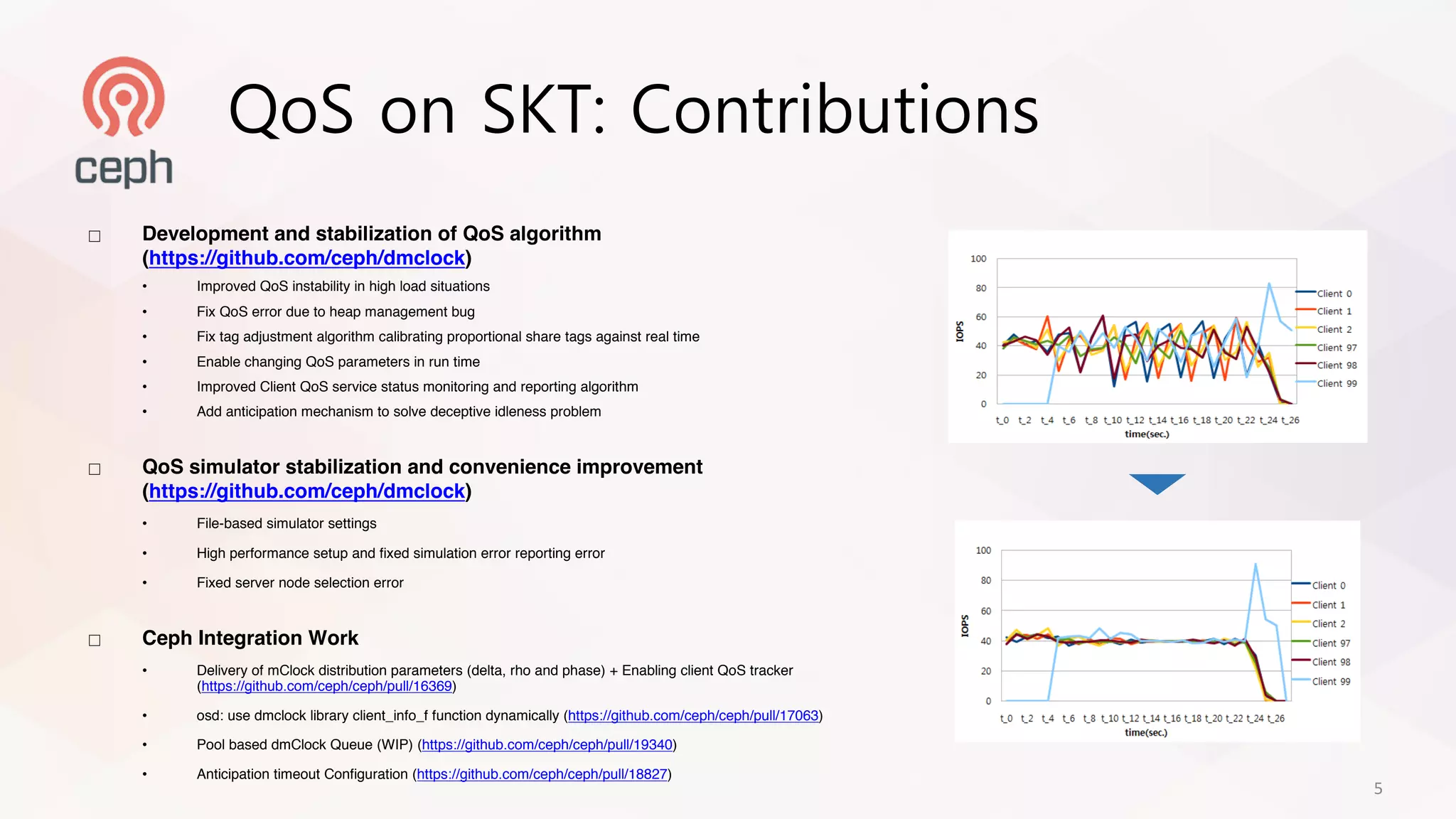

This document discusses how to support quality of service (QoS) in distributed storage systems such as Ceph. It describes SK Telecom's contributions to QoS support in Ceph, including dmClock, an algorithm that schedules I/O requests according to administrator-configured policies. It also details a throttling mechanism based on outstanding (in-flight) I/O that measures and regulates load. Finally, it discusses challenges such as queue depth, which can be addressed by increasing the number of scheduling threads, and outlines plans to improve and test Ceph's QoS features.

![Current Implemented Ceph QoS Pool Units (slide 6)](https://image.slidesharecdn.com/03-howtosupportqosindistributedstoragesystem-taewoongkim-180419044230/75/Ceph-QoS-How-to-support-QoS-in-distributed-storage-system-Taewoong-Kim-7-2048.jpg)

Current Implemented Ceph QoS Pool Units

1. Each pool's properties (MAX, MIN, Weight) are stored in the OSD Map.

   CLI example: `ceph osd pool set [pool ID] resv 4000.0` (IOPS)

2. Distribution status is monitored by the "dmClock QoS Monitor", and the status info is embedded in RADOS requests.

3. Since each OSD has the latest OSD Map, it can look up the QoS settings of the pool that a received request belongs to, and apply QoS using the pool's QoS properties and the QoS status info.

Diagram labels: on the client side, each user (User 1, User 2, …) is shown with LIBRBD, a dmClock QoS Monitor, LIBRADOS, and the CRUSH algorithm. I/O goes to the target OSD together with QoS status info (labeled "I/O δ OSD#" on the slide). Pool#0 and Pool#1 are shown. Each OSD (OSD #1, OSD #2, OSD #3, … OSD #n) contains a dmClock QoS Scheduler, a STORE, and an OSD Map holding the pool's properties {MIN, MAX, Weight}.
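To make the role of the pool properties and the per-request status info more concrete, here is a minimal sketch of mClock/dmClock-style tag scheduling as described in the published mClock paper. It is not Ceph's implementation. The names `PoolQoS`, `Tags`, `next_tags`, and `pick` are hypothetical, and the limit and weight values used in the example are made up; only the 4000.0 IOPS reservation comes from the slide's CLI example. The `delta` and `rho` arguments stand in for the QoS status info carried with each request: roughly, how many of the client's requests were served elsewhere since its last request to this OSD, in total and under reservation.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class PoolQoS:
    reservation: float  # MIN, in IOPS
    limit: float        # MAX, in IOPS
    weight: float       # proportional share


@dataclass
class Tags:
    r: float = 0.0  # reservation tag
    l: float = 0.0  # limit tag
    p: float = 0.0  # proportional (weight) tag


def next_tags(prev: Tags, qos: PoolQoS, now: float,
              delta: int = 1, rho: int = 1) -> Tags:
    """Tag a new request. delta/rho are the counts a client would
    piggyback on the request (assumed semantics, see lead-in)."""
    return Tags(
        r=max(prev.r + rho / qos.reservation, now),
        l=max(prev.l + delta / qos.limit, now),
        p=max(prev.p + delta / qos.weight, now),
    )


def pick(heads: Dict[str, Tags], now: float) -> Optional[str]:
    """Choose which pool's head-of-queue request to serve next."""
    # Phase 1: serve requests whose reservation tag is already due.
    due = [name for name, t in heads.items() if t.r <= now]
    if due:
        return min(due, key=lambda n: heads[n].r)
    # Phase 2: among pools still under their limit, serve by weight tag.
    eligible = [name for name, t in heads.items() if t.l <= now]
    if eligible:
        return min(eligible, key=lambda n: heads[n].p)
    return None  # every pool is currently capped by its limit


# Example with hypothetical limit and weight values.
qos = PoolQoS(reservation=4000.0, limit=8000.0, weight=2.0)
first = next_tags(Tags(), qos, now=10.0)
second = next_tags(first, qos, now=10.0, delta=3, rho=2)
```

In this sketch, larger `delta` and `rho` values push a pool's tags further into the future, which is how service received on other OSDs lowers the pool's priority on this one.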