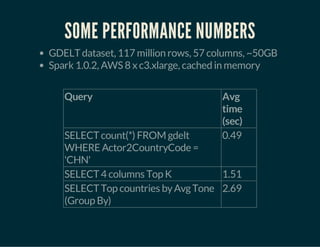

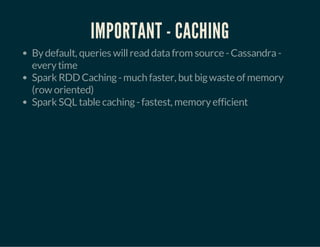

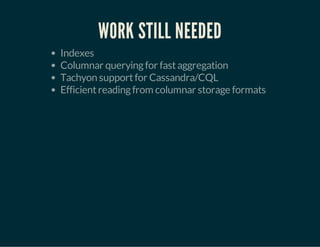

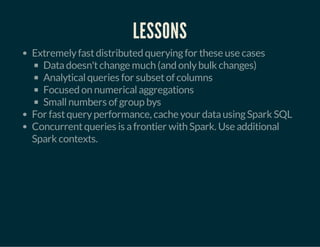

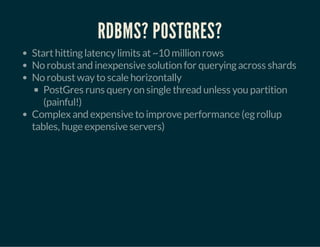

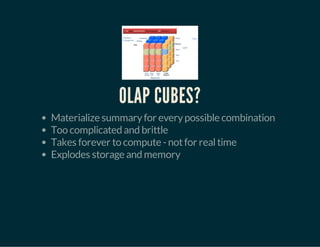

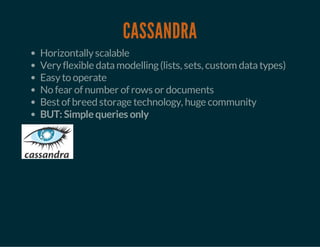

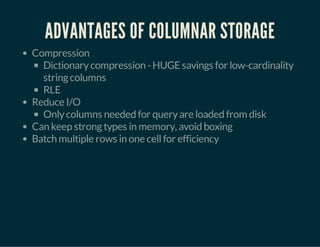

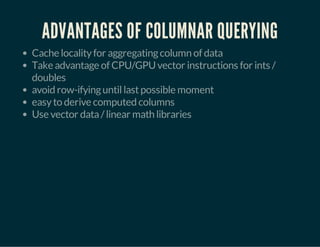

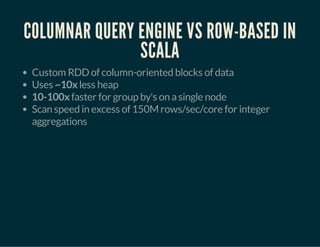

The document discusses using Apache Spark and Cassandra for online analytical processing (OLAP) of big data. It describes challenges with relational databases and OLAP cubes at large scales and how Spark can provide fast, distributed querying of data stored in Cassandra. The key points made are that Spark and Cassandra combine to provide horizontally scalable storage with Cassandra and fast, in-memory analytics with Spark; and that for optimal performance, data should be cached in Spark SQL tables for column-oriented querying and aggregation.

![INITIAL ATTEMPTS

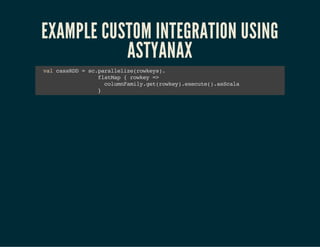

val rows = Seq(

Seq("Burglary", "19xx Hurston", 10),

Seq("Theft", "55xx Floatilla Ave", 5)

)

sc.parallelize(rows)

.map { values => (values[0], values) }

.groupByKey

.reduce(_[2] + _[2])](https://image.slidesharecdn.com/2014-09-olap-spark-cass-summit-140929192553-phpapp01/85/Cassandra-Summit-2014-Interactive-OLAP-Queries-using-Apache-Cassandra-and-Spark-23-320.jpg)

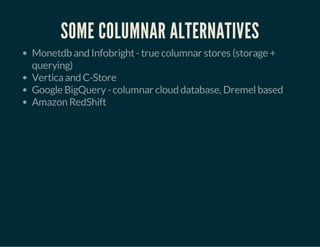

![No existing generic query engine for Spark when we started

(Shark was in infancy, had no indexes, etc.), so we built our own

For every row, need to extract out needed columns

Ability to select arbitrary columns means using Seq[Any], no

type safety

Boxing makes integer aggregation very expensive and memory

inefficient](https://image.slidesharecdn.com/2014-09-olap-spark-cass-summit-140929192553-phpapp01/85/Cassandra-Summit-2014-Interactive-OLAP-Queries-using-Apache-Cassandra-and-Spark-24-320.jpg)

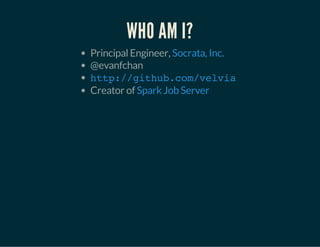

.registerAsTable("gdelt")

sqlContext.cacheTable("gdelt")

sqlContext.sql("SELECT Actor2Code, Actor2Name, Actor2CountryCode, AvgTone from gdelt ORDER Remember Spark is lazy, nothing is executed until the

collect()

In Spark 1.1+: registerTempTable](https://image.slidesharecdn.com/2014-09-olap-spark-cass-summit-140929192553-phpapp01/85/Cassandra-Summit-2014-Interactive-OLAP-Queries-using-Apache-Cassandra-and-Spark-37-320.jpg)