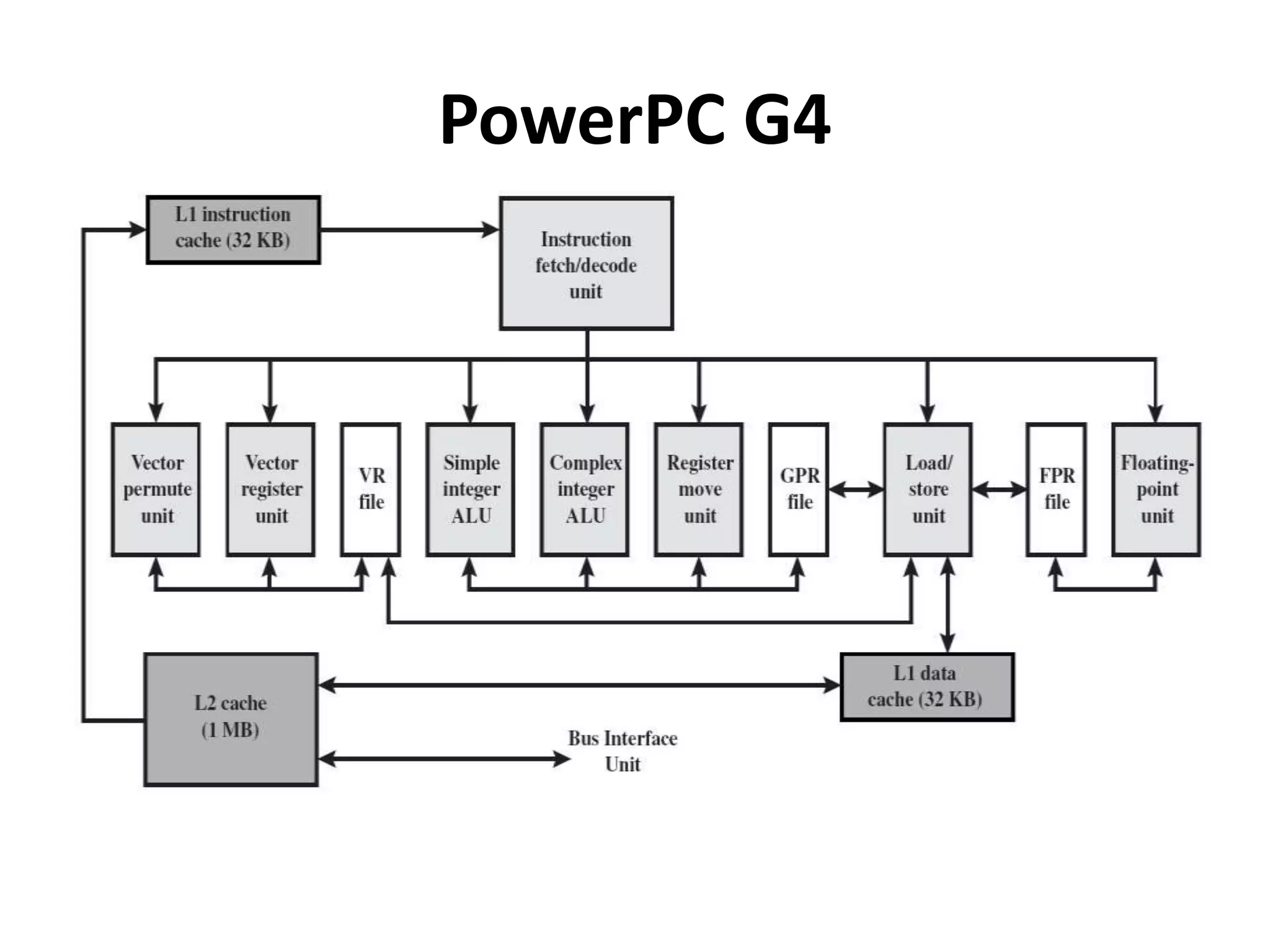

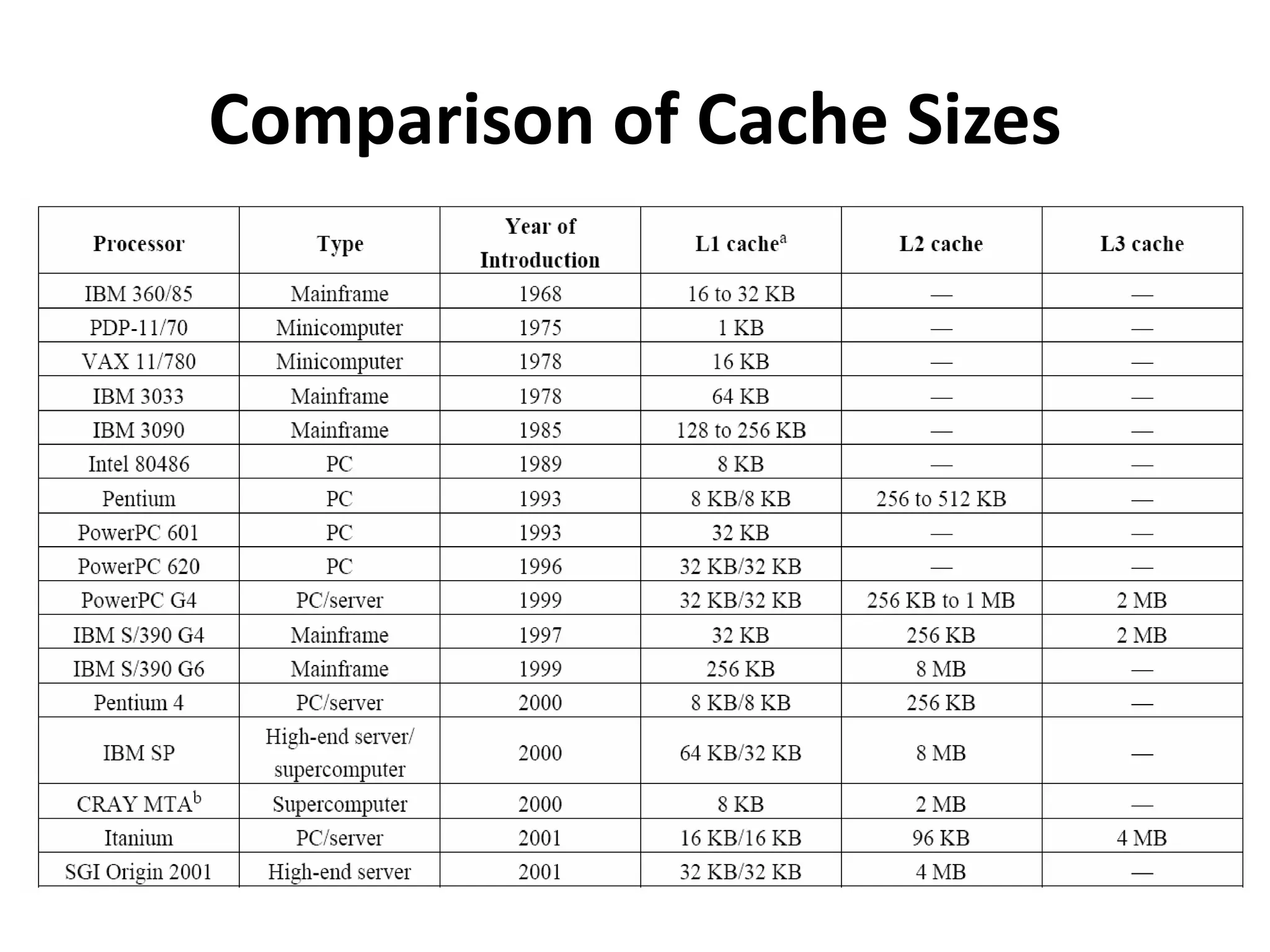

This document discusses cache memory principles and provides details about cache operation, structure, organization, and design considerations. The key points covered are:

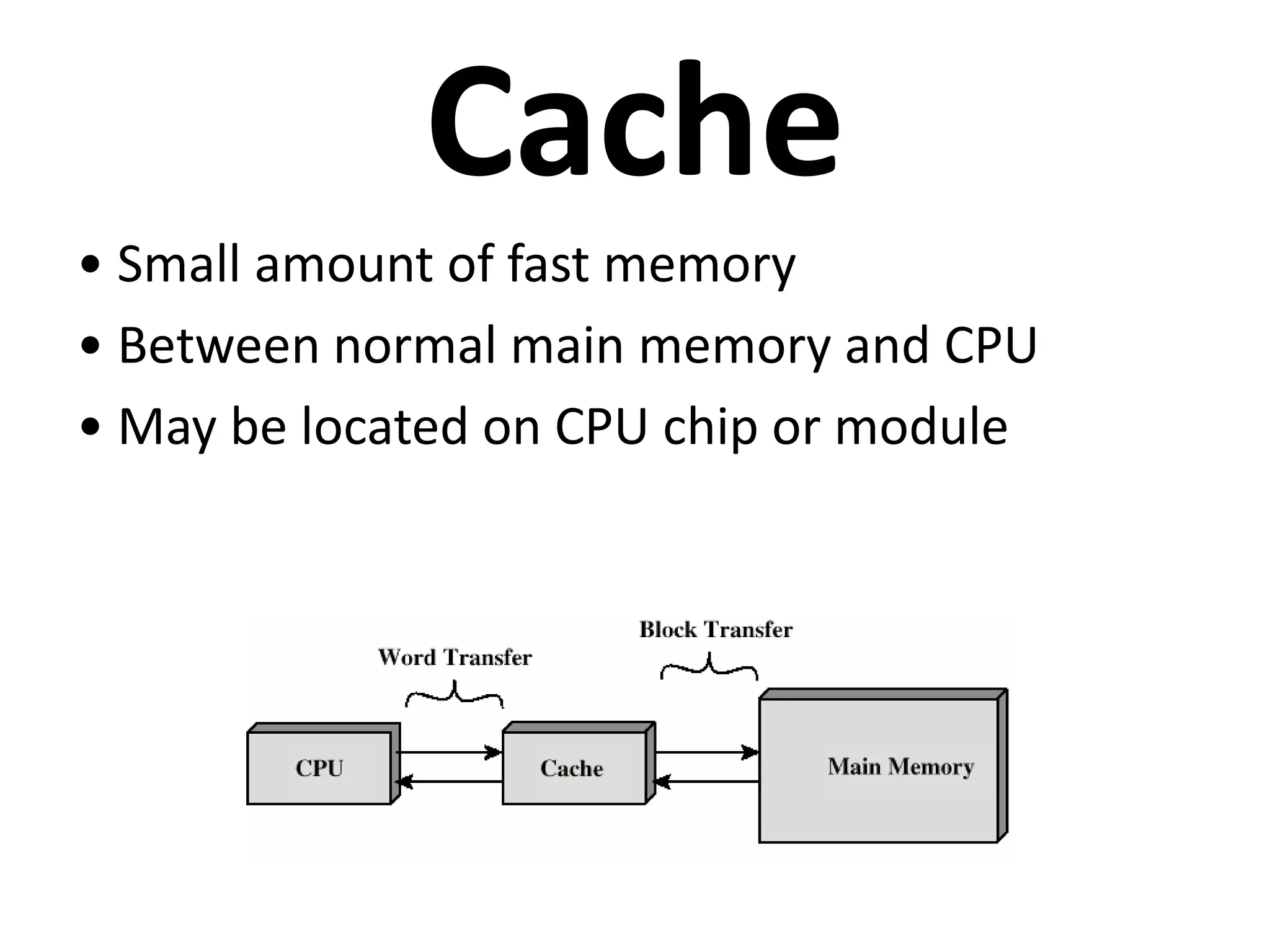

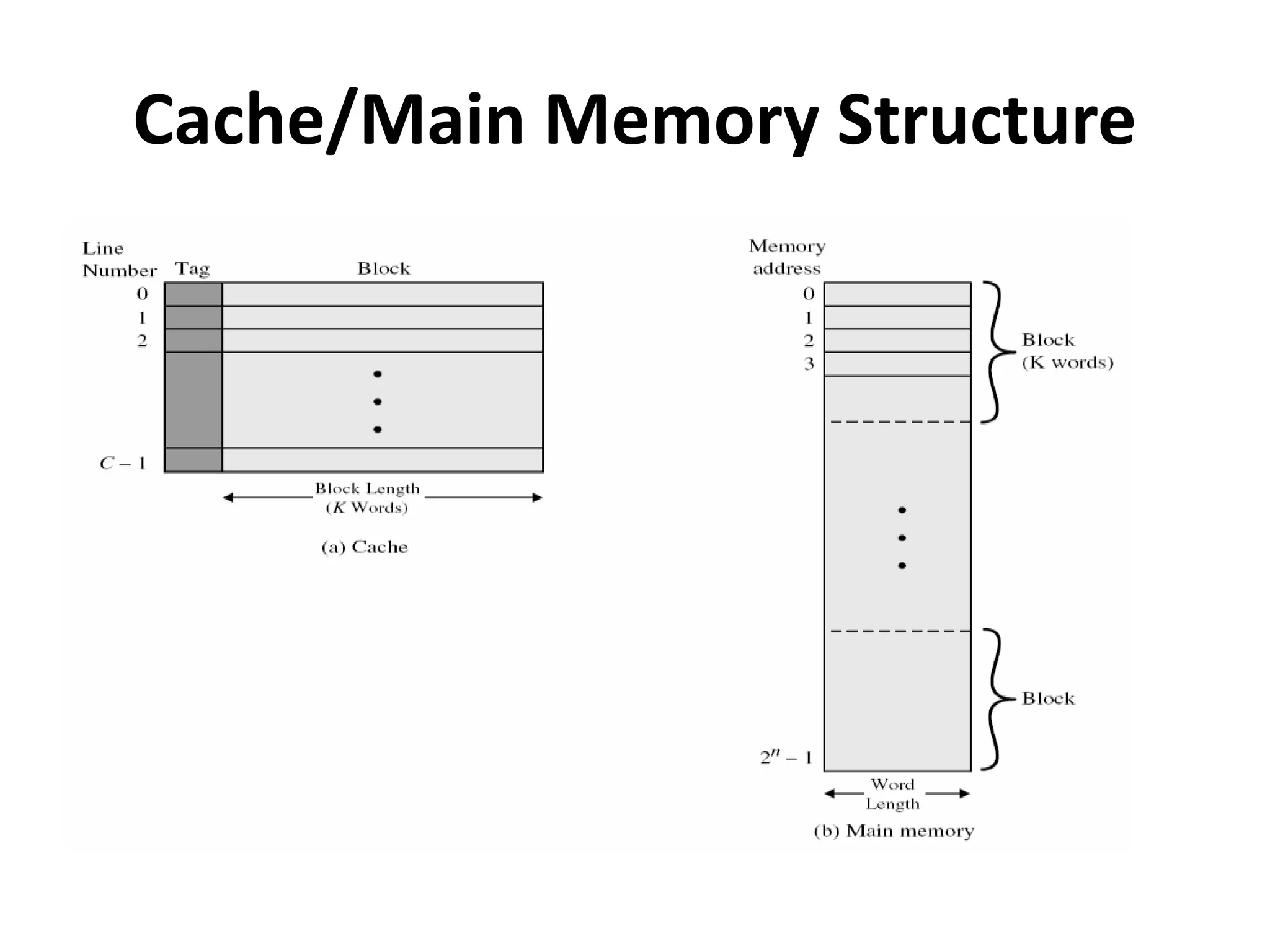

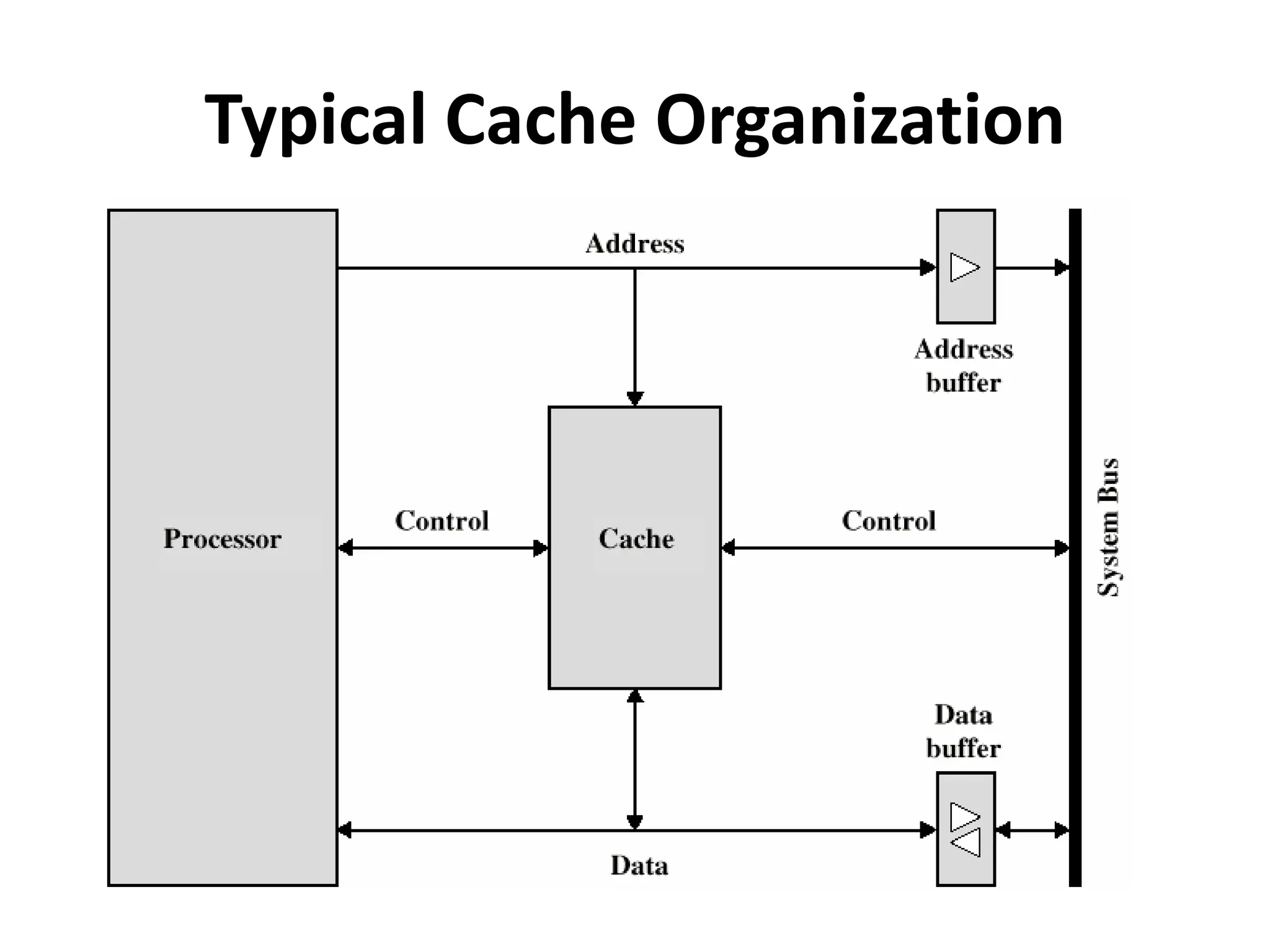

- A cache is a small, fast memory located between the CPU and main memory that holds copies of recently or frequently used main-memory data.

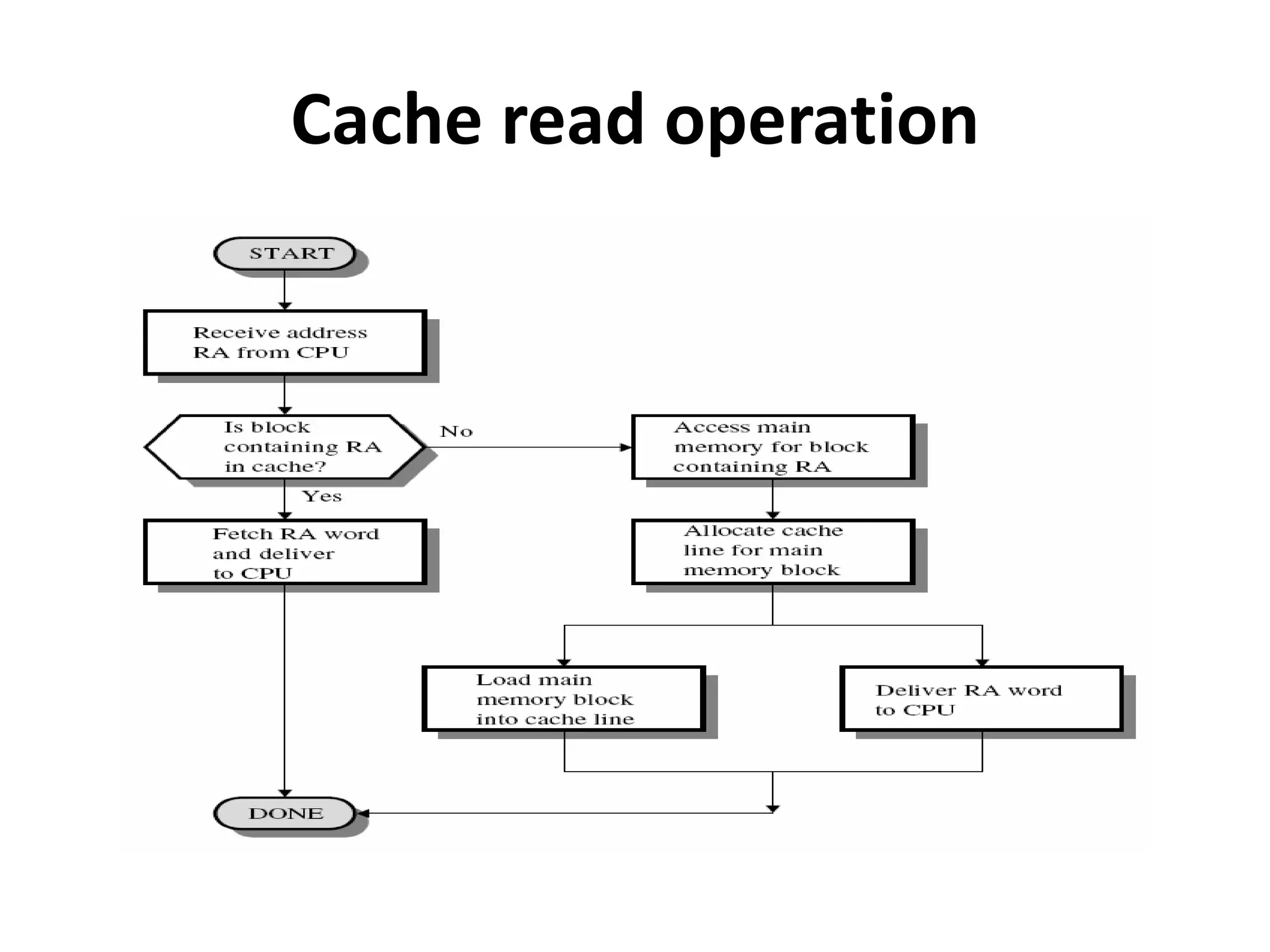

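- During a cache read operation, the CPU first checks the cache for the requested data. If the data is present (a hit), it is delivered directly from the fast cache. If not (a miss), the block containing it is read from main memory into a cache line, and the requested data is then delivered to the CPU.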
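
The read operation described above can be sketched as a small simulation. This is a minimal illustration, not taken from the slides: it assumes a direct-mapped cache, and the class name `DirectMappedCache`, the parameters `num_lines` and `line_size`, and the memory contents are all hypothetical choices made for the example.

```python
class DirectMappedCache:
    """Toy direct-mapped cache (assumed organization; sizes are illustrative)."""

    def __init__(self, memory, num_lines=4, line_size=4):
        self.memory = memory              # backing "main memory": a list of words
        self.num_lines = num_lines        # number of cache lines
        self.line_size = line_size        # words per line (block size)
        self.tags = [None] * num_lines    # tag stored with each line
        self.lines = [None] * num_lines   # data held by each line
        self.hits = 0
        self.misses = 0

    def read(self, address):
        block = address // self.line_size   # which memory block holds the word
        offset = address % self.line_size   # position of the word inside the block
        index = block % self.num_lines      # the one line this block may occupy
        tag = block // self.num_lines       # identifies which block is in that line

        if self.tags[index] == tag:
            self.hits += 1                  # hit: serve the word from the cache
        else:
            self.misses += 1                # miss: copy the whole block into the line
            start = block * self.line_size
            self.lines[index] = self.memory[start:start + self.line_size]
            self.tags[index] = tag
        return self.lines[index][offset]


memory = list(range(100, 164))              # 64 words of made-up data
cache = DirectMappedCache(memory)
values = [cache.read(a) for a in (0, 1, 2, 16, 0)]
print(values, cache.hits, cache.misses)
```

Addresses 1 and 2 hit because the miss on address 0 brought in their whole block. Address 16 maps to the same line and evicts that block, so the final read of address 0 misses again.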

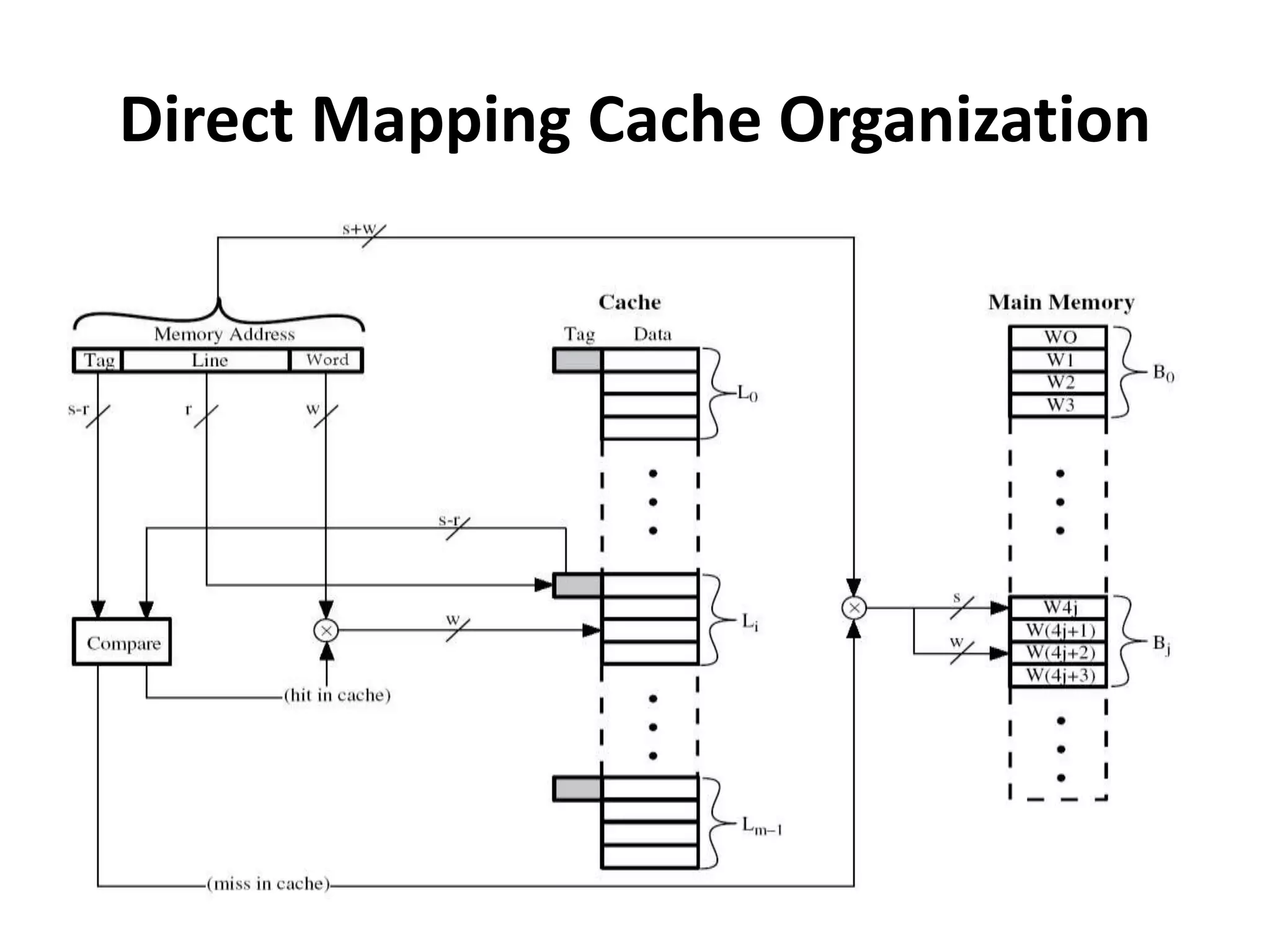

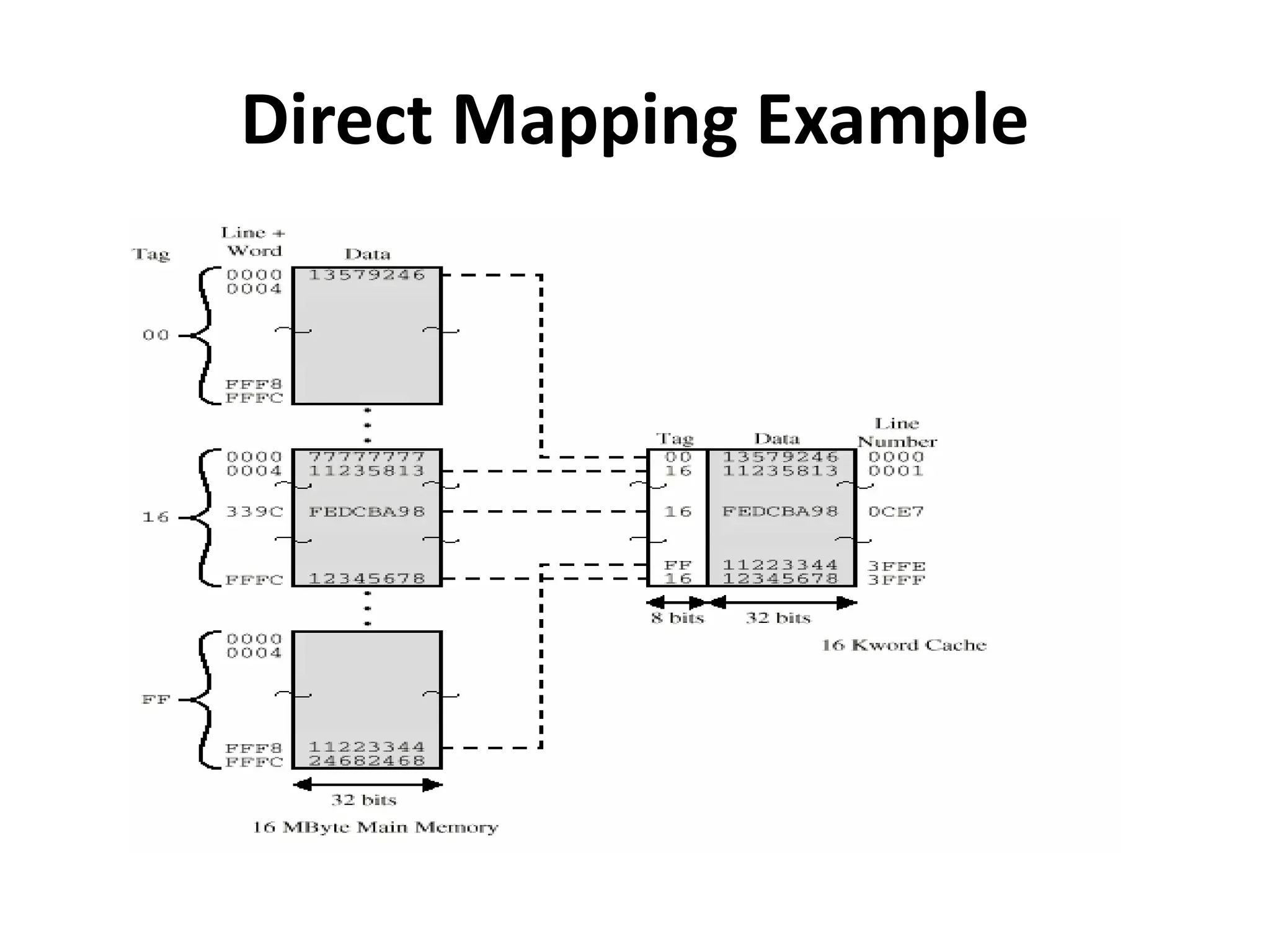

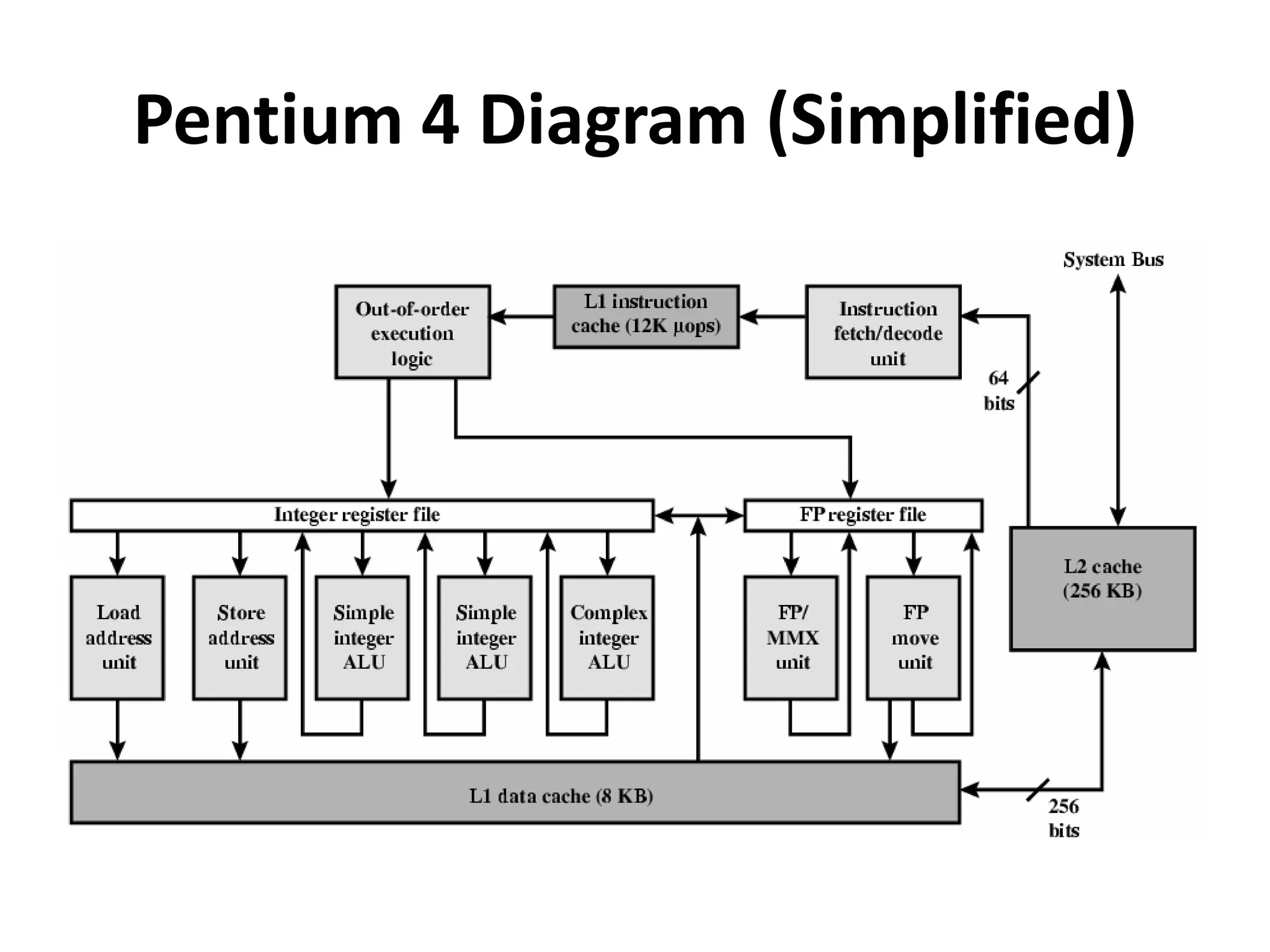

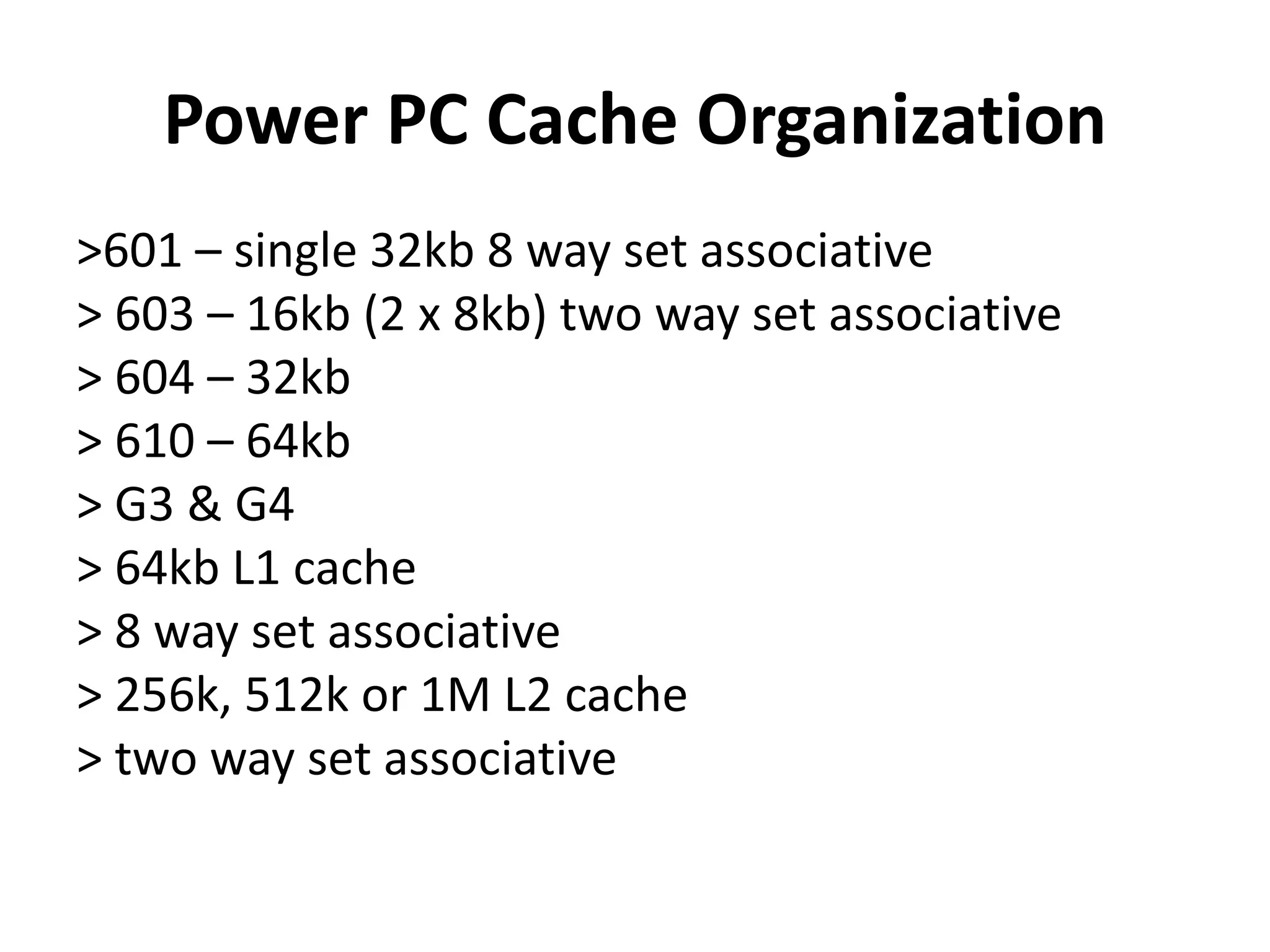

- Cache design considerations include size, mapping function, replacement algorithm, write policy, line size, and number of cache levels.

- Modern CPUs use hierarchical cache designs with multiple levels (L1, L2, etc.), in which small, fast caches close to the processor are backed by larger, slower ones, to improve performance.
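
To show how some of the design parameters listed above interact, the sketch below measures the hit ratio of a set-associative cache on an address trace. It is an illustration under stated assumptions rather than anything from the slides: the function name `hit_ratio`, its parameters, the choice of LRU as the replacement algorithm, and the trace itself are hypothetical. It does not model write policy or multiple cache levels.

```python
from collections import OrderedDict


def hit_ratio(addresses, num_sets, ways, line_size):
    """Fraction of reads that hit in a set-associative cache with LRU replacement.

    Total capacity is num_sets * ways * line_size; ways=1 gives direct mapping.
    """
    # One ordered dict per set: keys are tags, ordered least to most recently used.
    sets = [OrderedDict() for _ in range(num_sets)]
    hits = 0
    for address in addresses:
        block = address // line_size
        index = block % num_sets          # mapping function: block -> set
        tag = block // num_sets
        lines = sets[index]
        if tag in lines:
            hits += 1
            lines.move_to_end(tag)        # mark as most recently used
        else:
            if len(lines) == ways:
                lines.popitem(last=False) # evict the least recently used line
            lines[tag] = True
    return hits / len(addresses)


# Two addresses that collide in a direct-mapped cache of this size.
trace = [0, 64, 0, 64, 0, 64, 0, 64]
direct = hit_ratio(trace, num_sets=4, ways=1, line_size=16)   # 64 bytes total
two_way = hit_ratio(trace, num_sets=2, ways=2, line_size=16)  # 64 bytes total
print(direct, two_way)
```

Both configurations have the same capacity and line size. With direct mapping the two blocks keep evicting each other, so no read hits. With two ways per set, both blocks stay resident after the first two misses.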