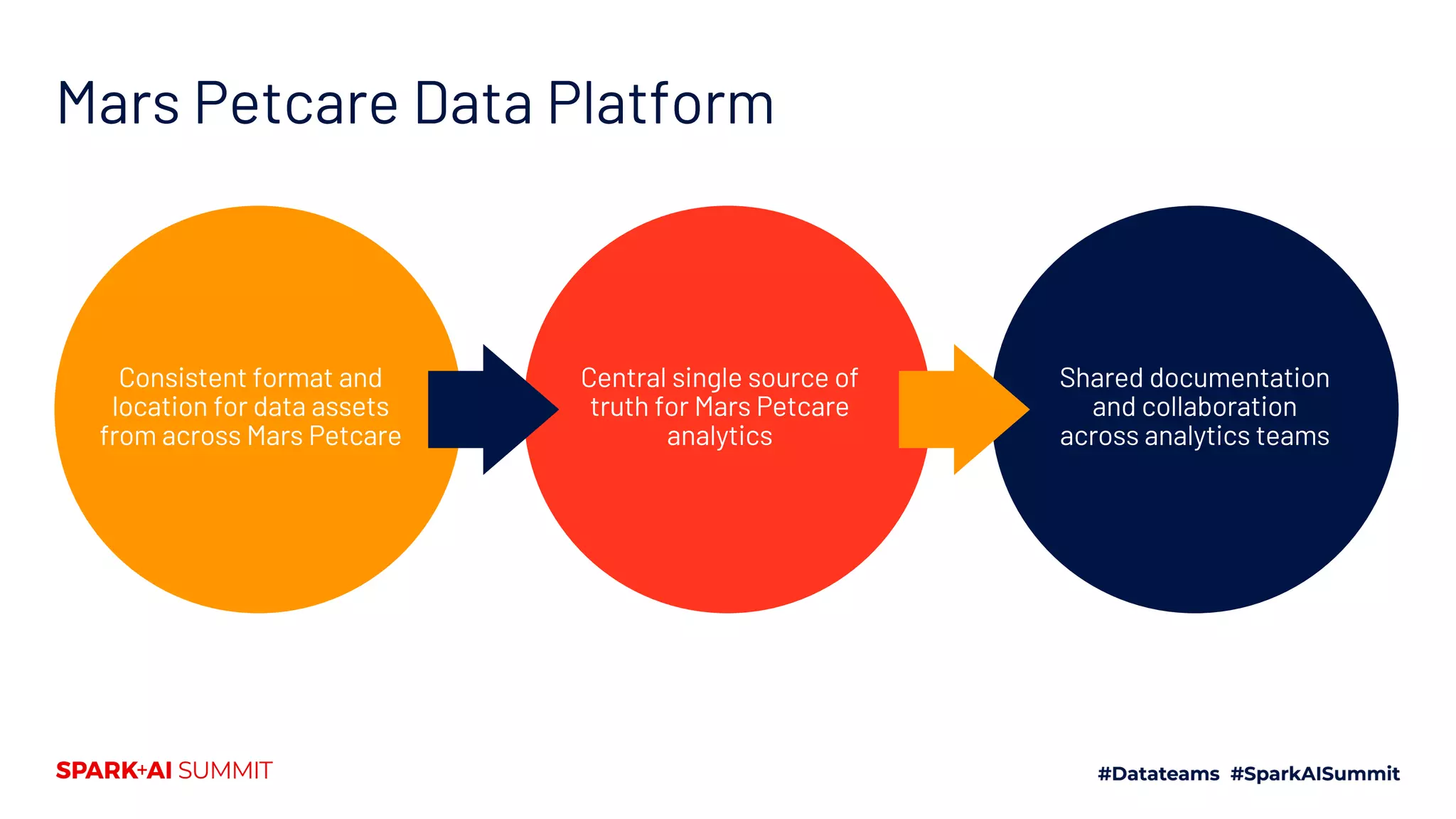

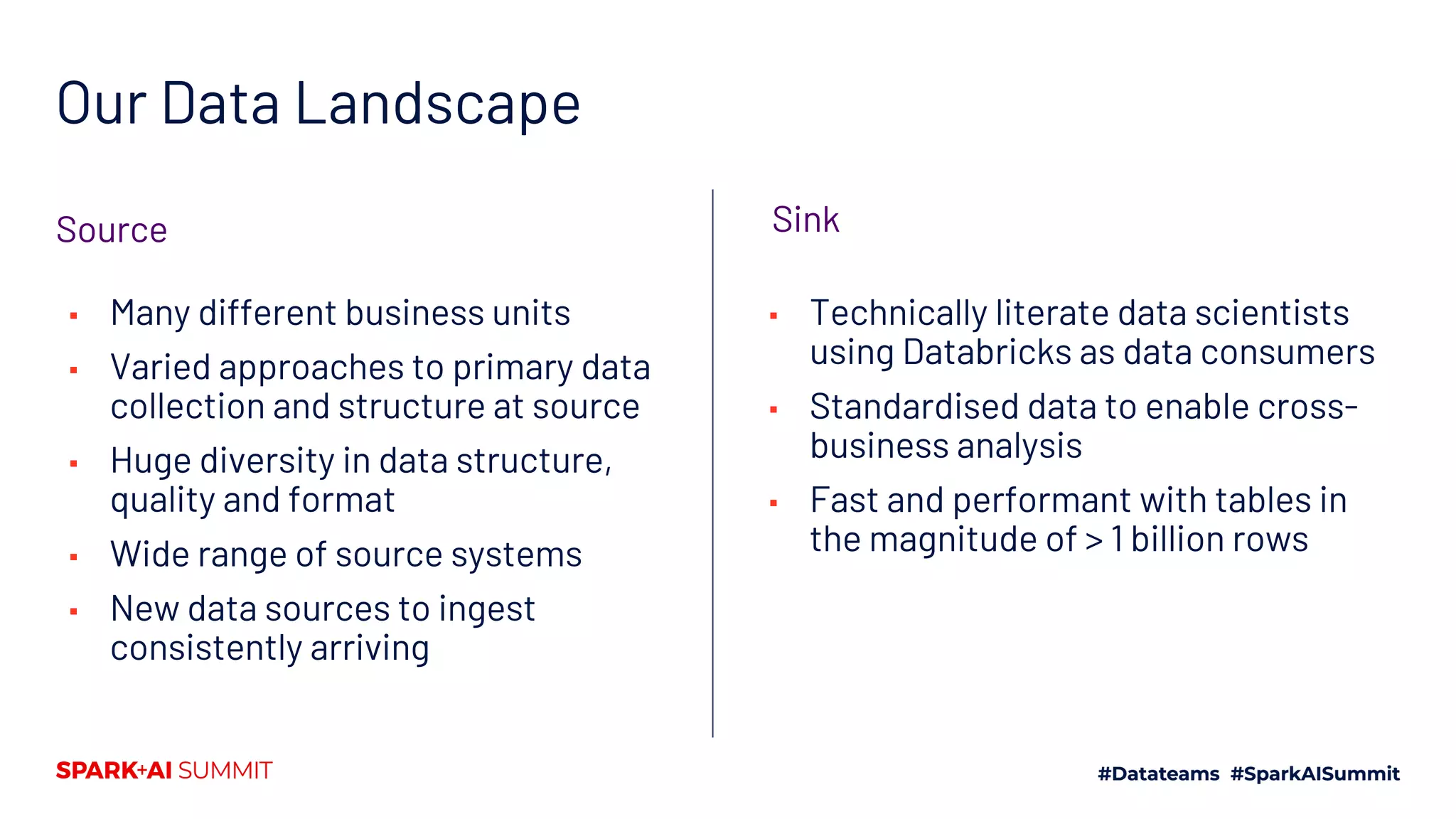

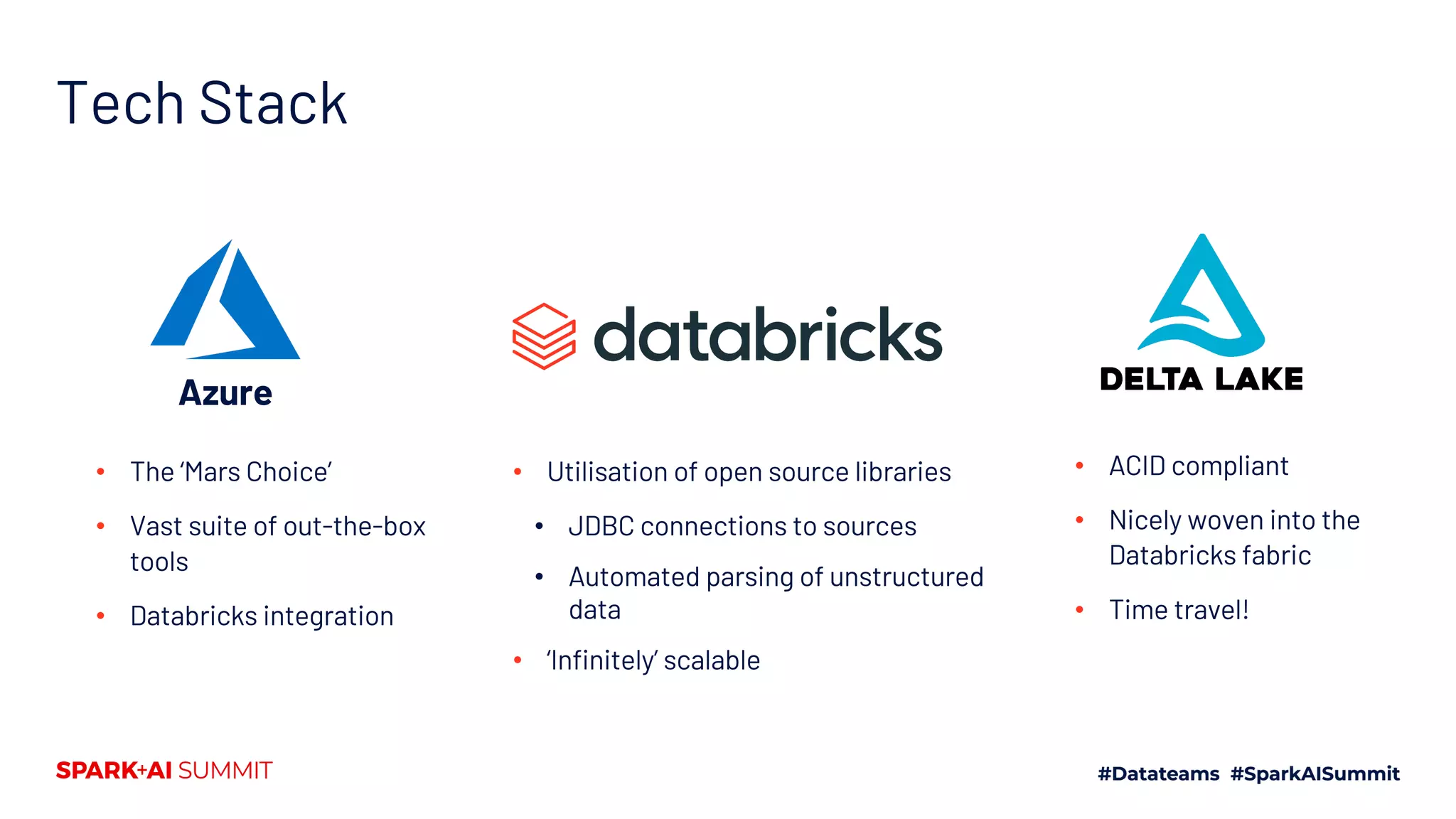

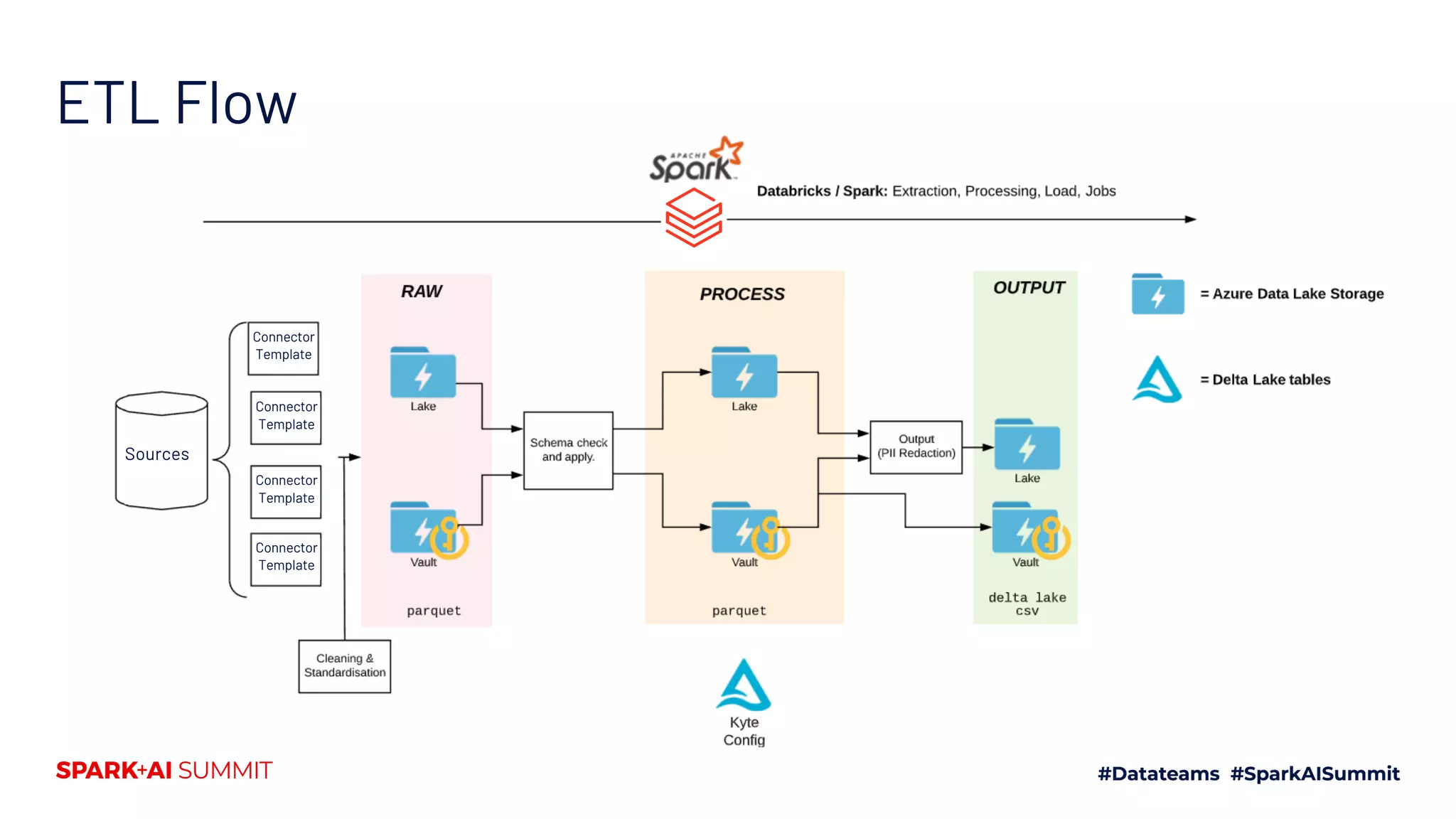

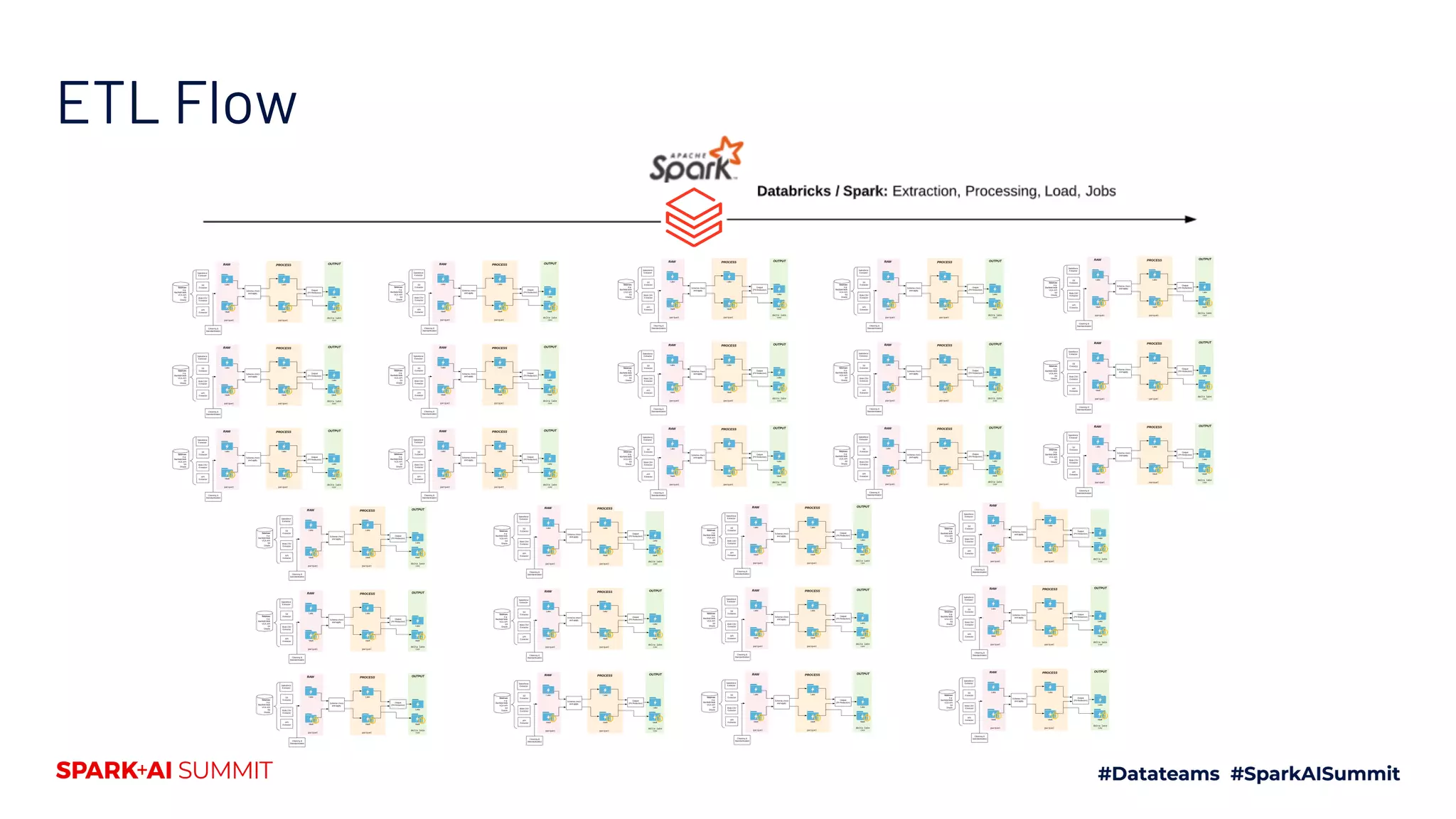

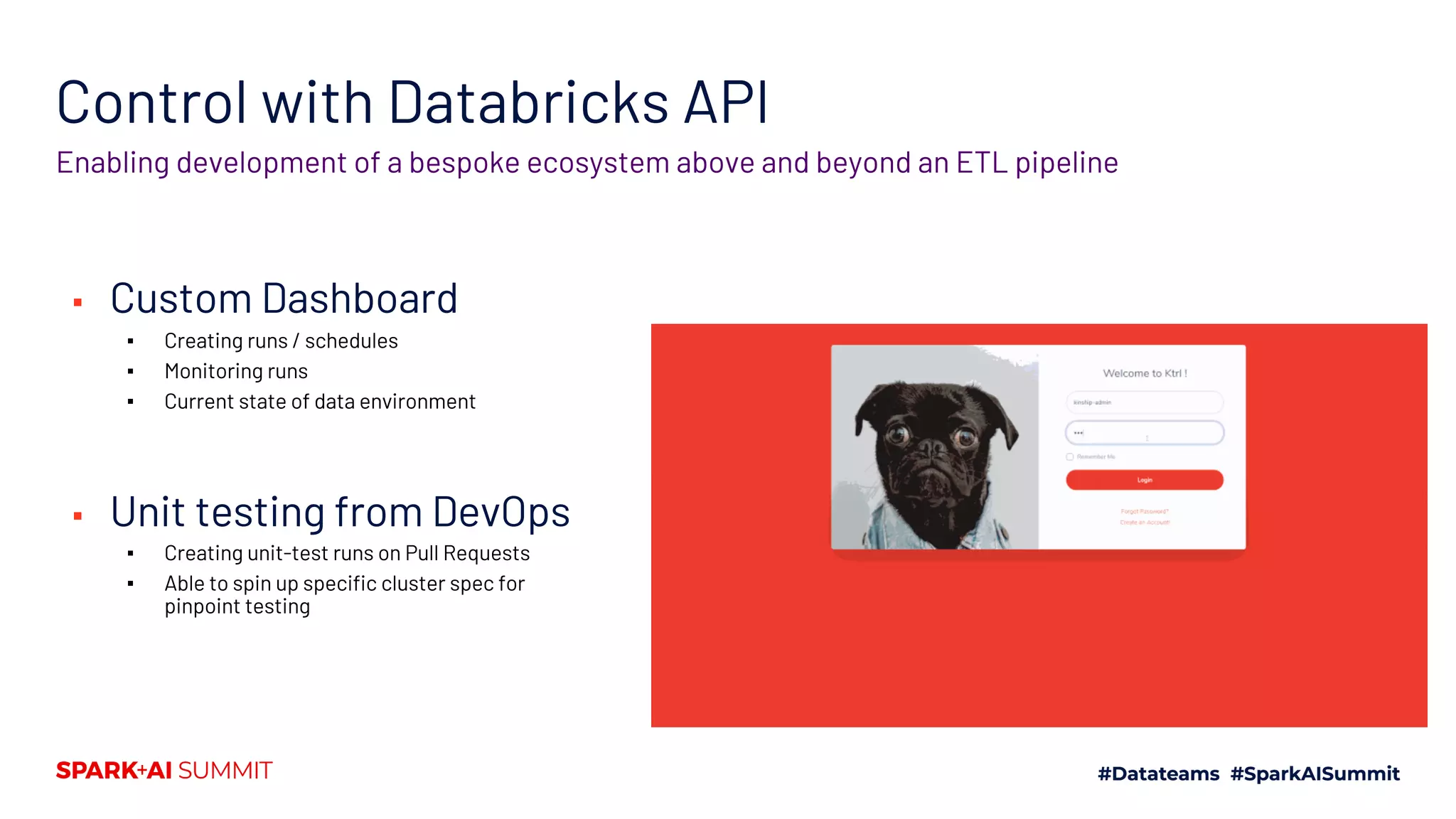

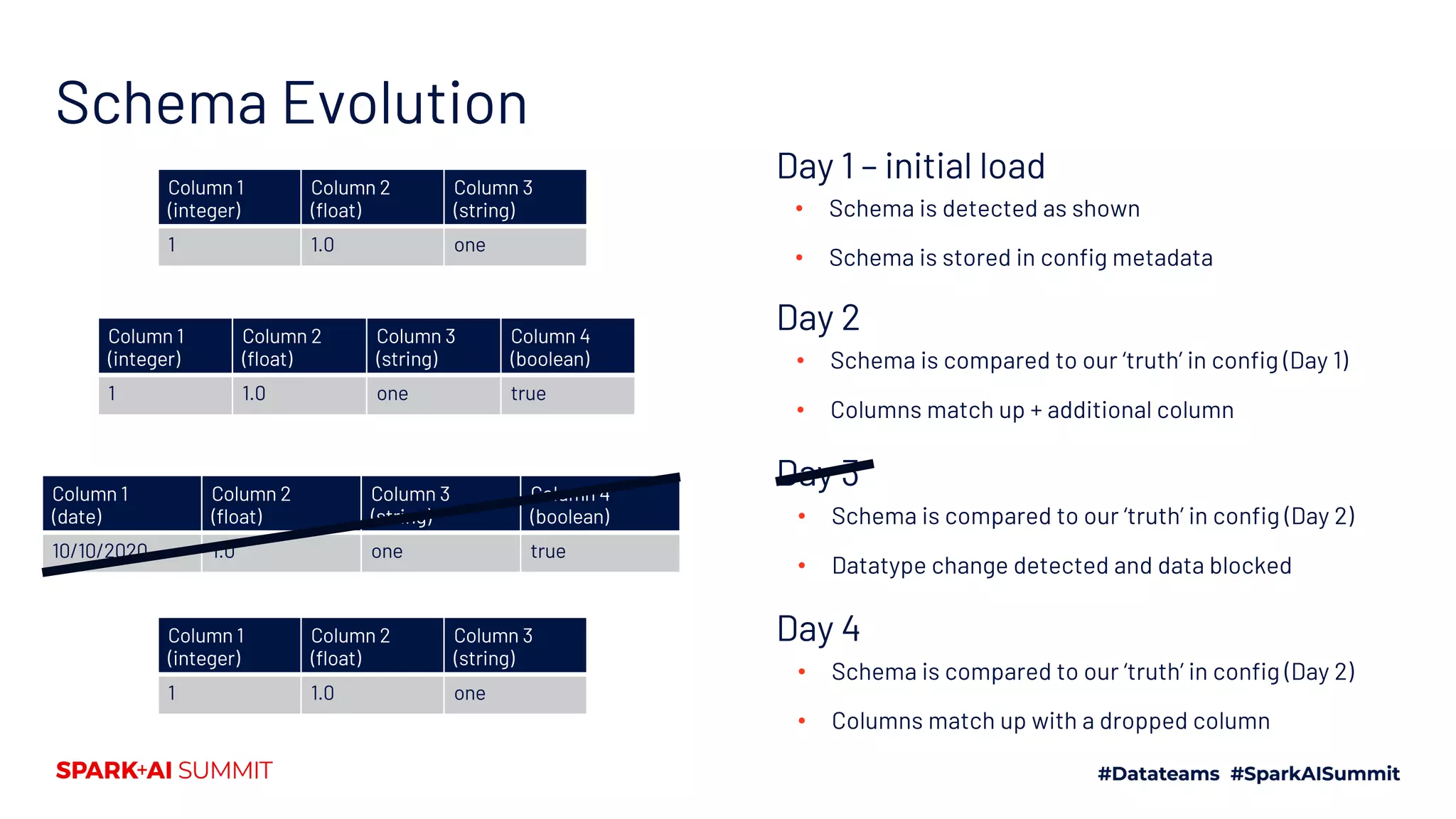

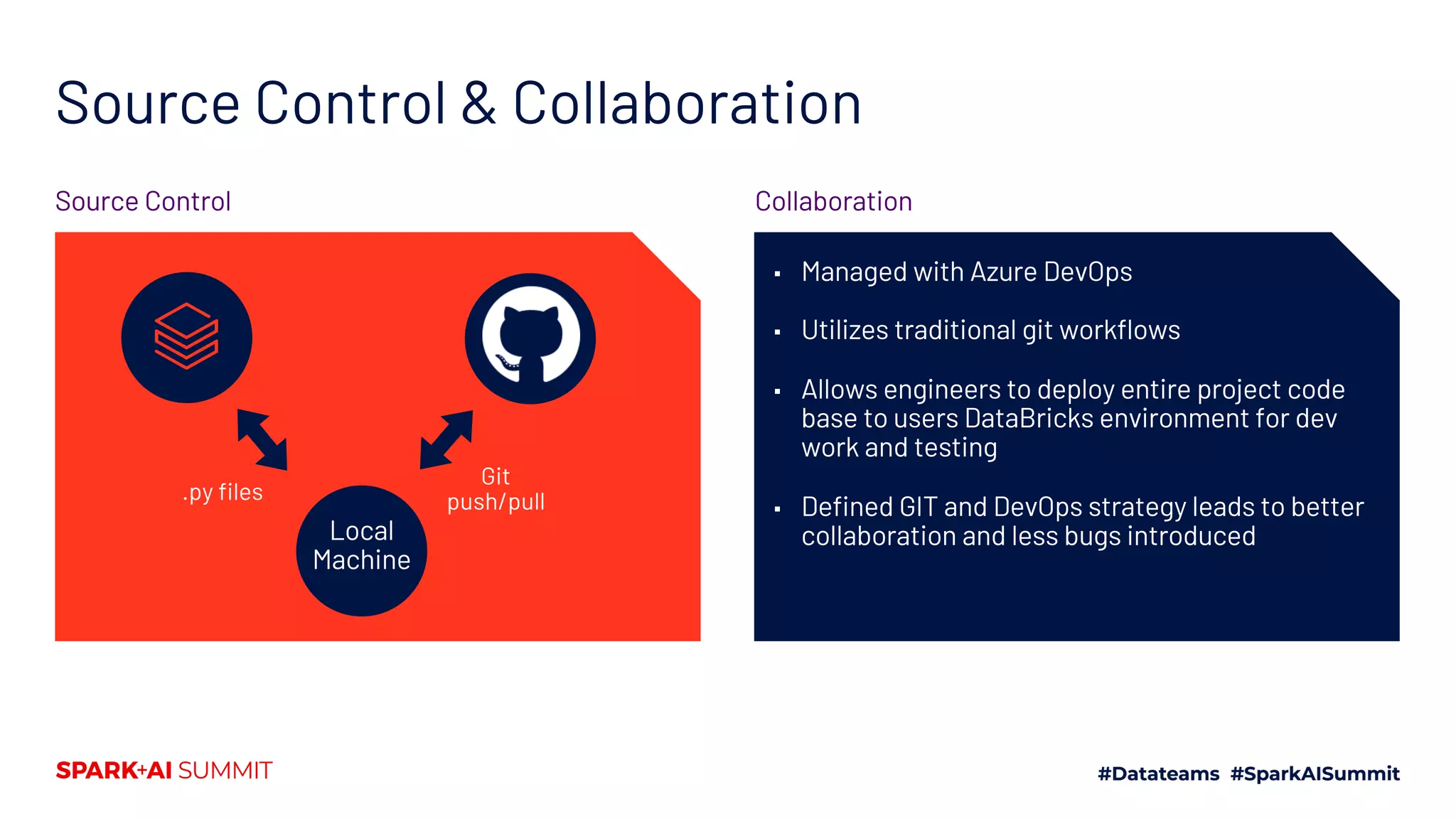

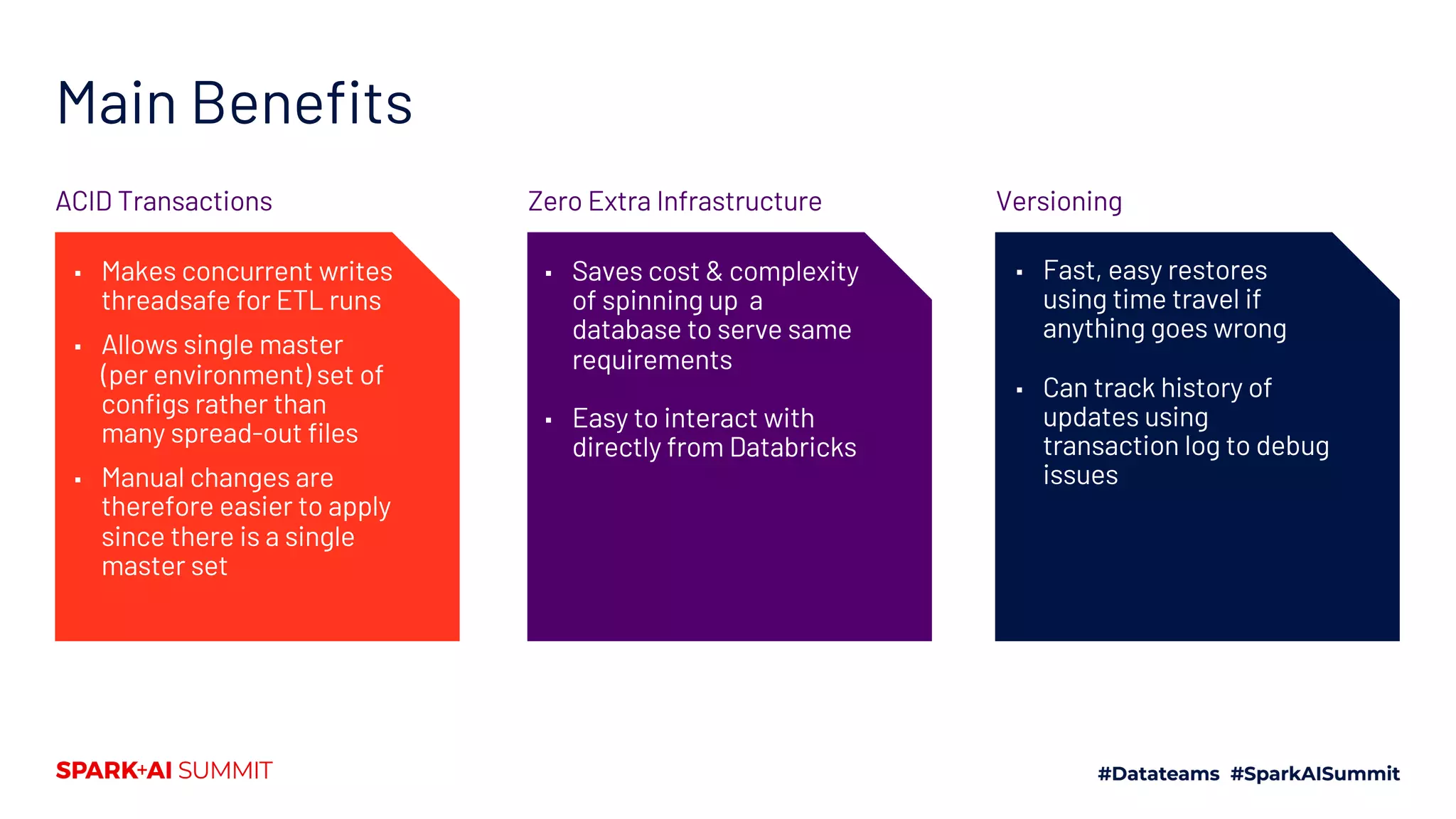

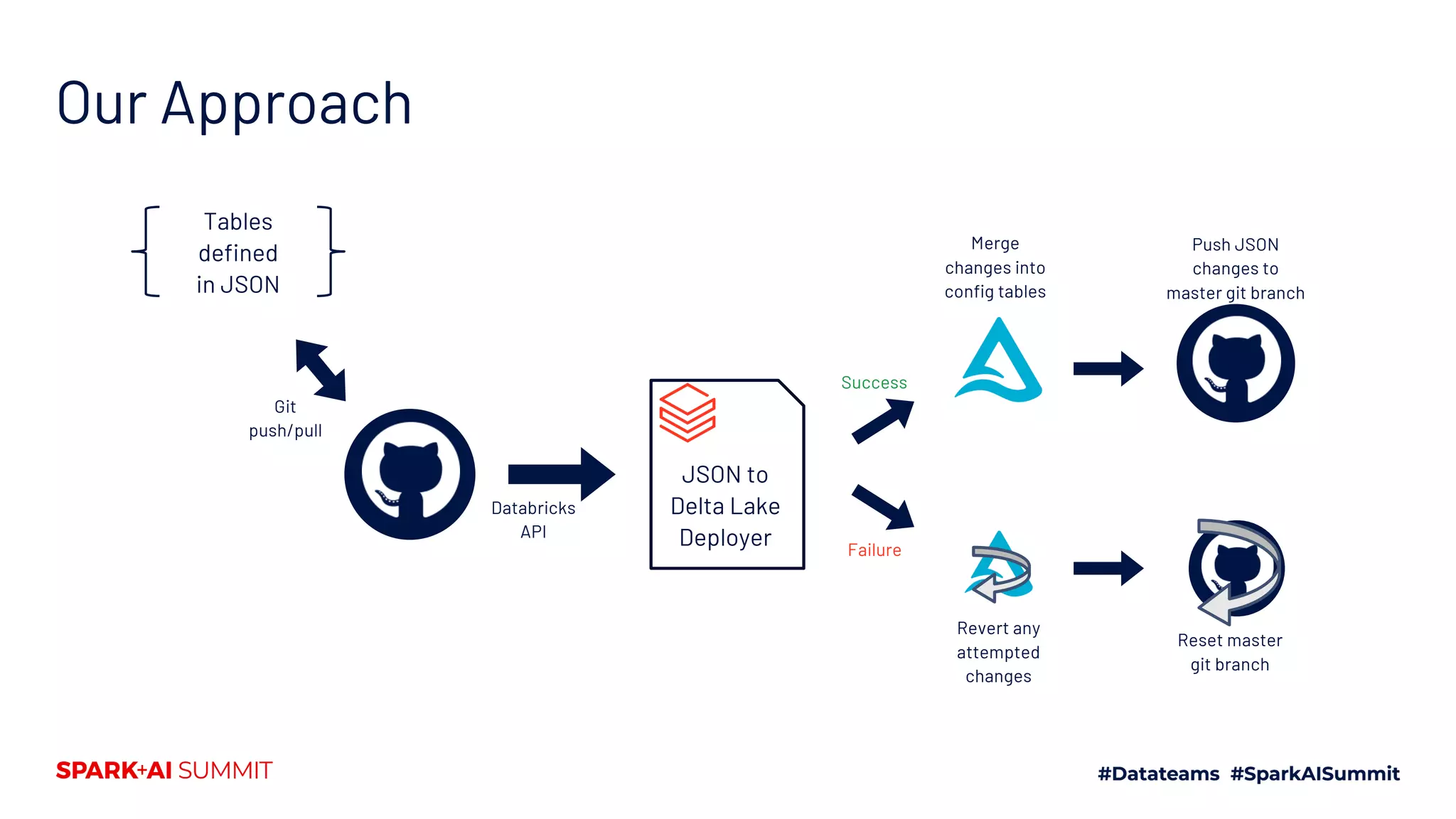

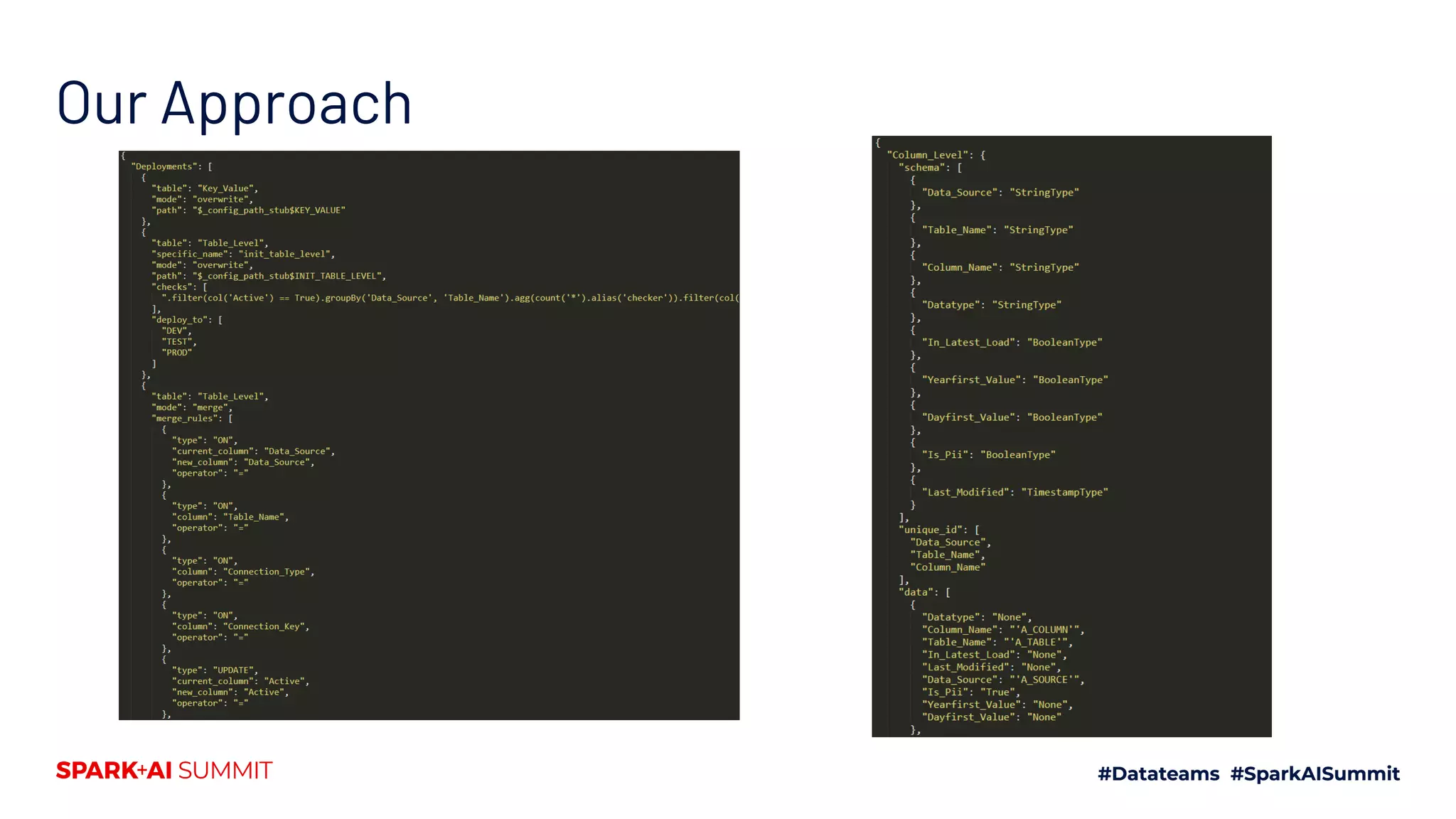

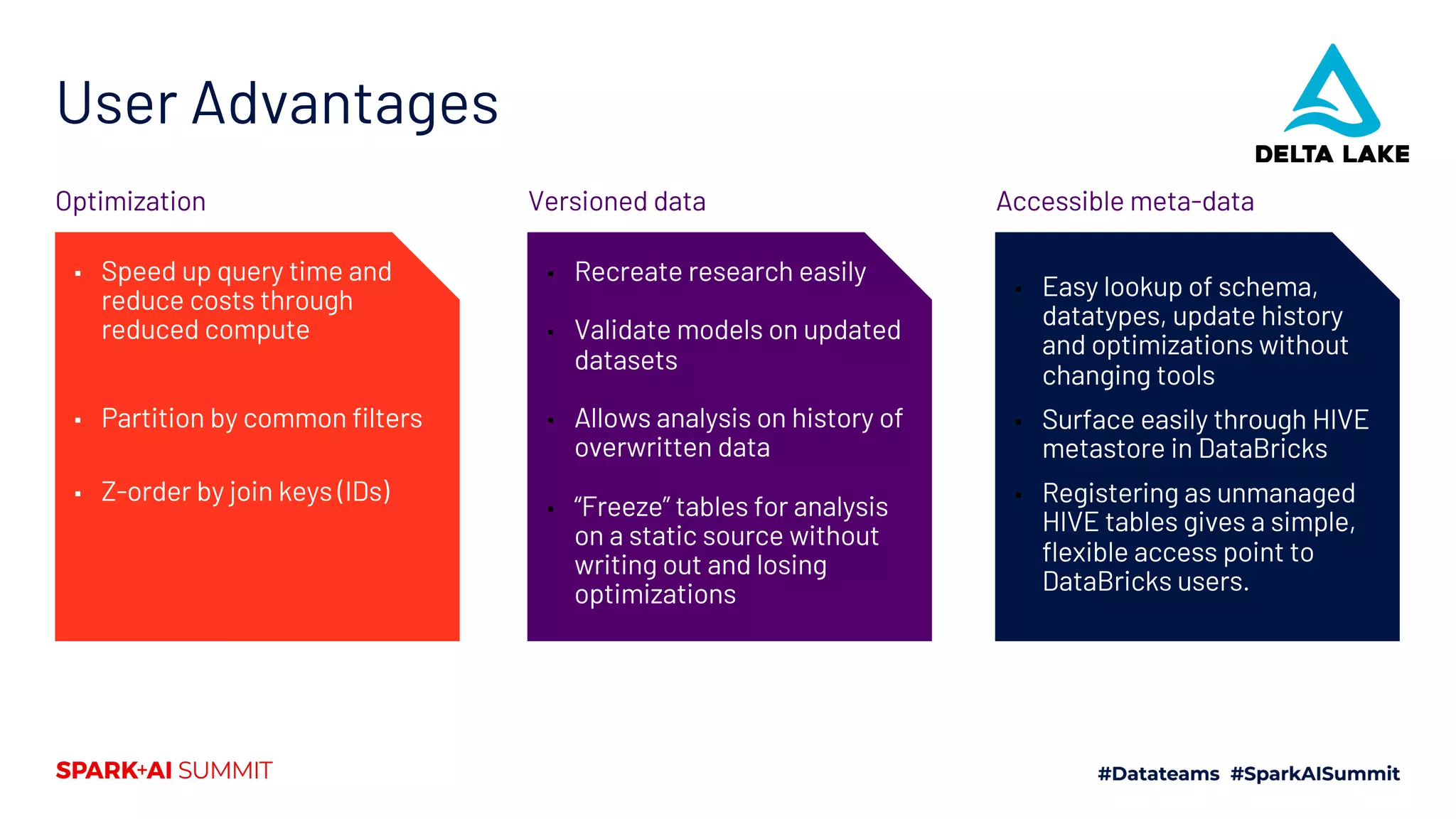

The document discusses how Mars Petcare built its data platform on Delta Lake, using Databricks for ETL, and emphasizes the benefits of a standardized approach to data management and analytics. Key features include handling diverse data sources, ensuring data integrity through ACID transactions, and improving collaboration among analytics teams through Azure DevOps. It also highlights advantages for data scientists, such as faster query performance and improved data access through effective schema management.