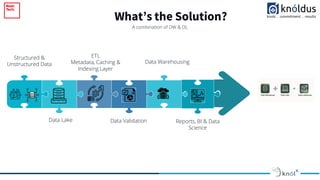

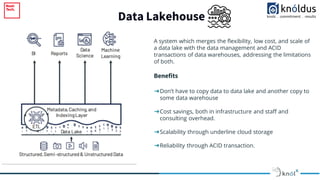

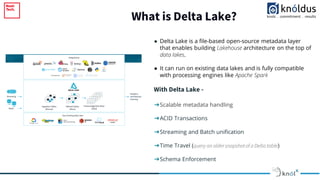

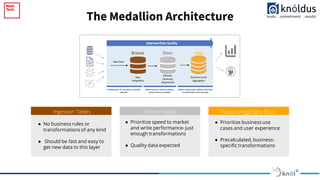

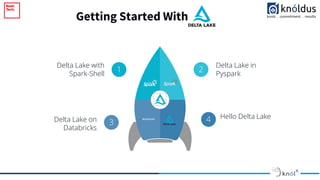

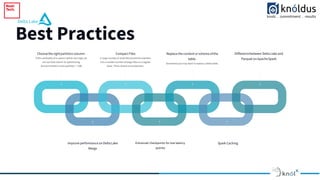

The document introduces Delta Lake and explains its place in modern data architecture. Delta Lake combines the flexibility of a data lake with the reliability of a data warehouse. The document covers its key features, including ACID transactions, schema enforcement, and scalability, along with best practices for implementation. It also covers session etiquette, such as arriving on time and giving constructive feedback.