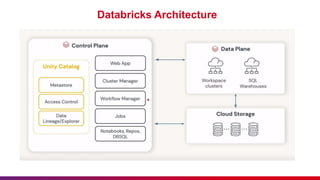

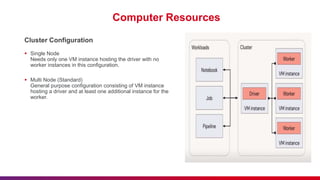

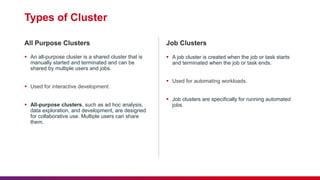

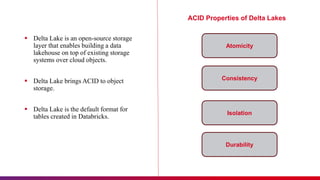

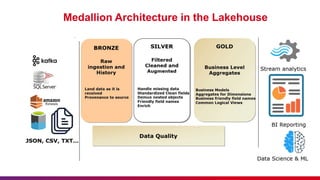

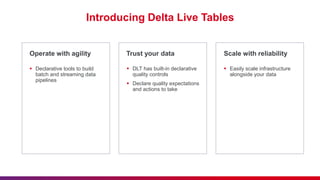

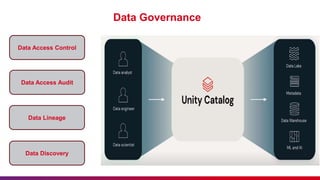

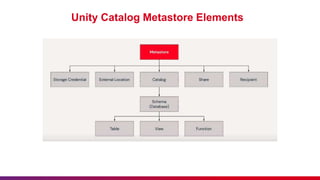

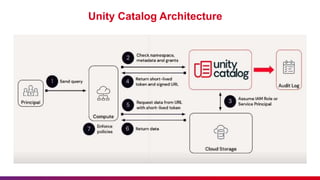

The document outlines etiquette guidelines for Databricks sessions, emphasizing punctuality, giving feedback, and minimizing disruptions. It also gives an overview of Databricks features, including multi-node and all-purpose clusters, Delta Lake for managing tables stored in object storage, and Delta Live Tables for building data pipelines. Finally, it covers governance topics such as data access control and data lineage in Unity Catalog.