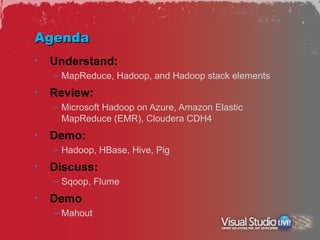

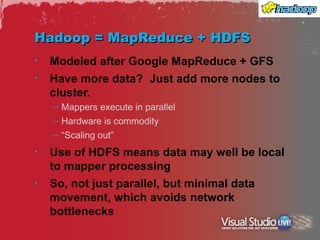

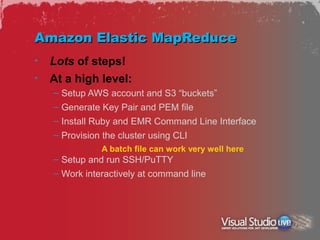

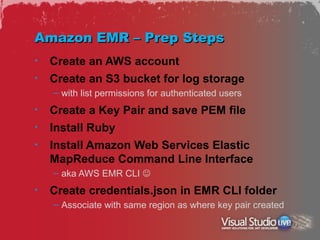

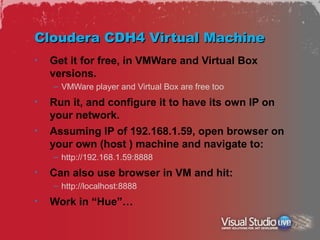

This document provides a summary of Hadoop and its ecosystem components. It begins with introducing the speaker, Andrew Brust, and his background. It then provides an overview of the key Hadoop components like MapReduce, HDFS, HBase, Hive, Pig, Sqoop and Flume. The document demonstrates several of these components and discusses how to use Microsoft Hadoop on Azure, Amazon EMR and Cloudera CDH virtual machines. It also summarizes the Mahout machine learning library and recommends a commercial product for visualizing and applying Mahout outputs.

![Amazon – Security and Startup

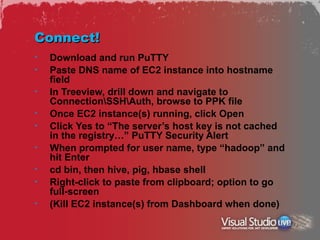

• Security

– Download PuTTYgen and run it

– Click Load and browse to PEM file

– Save it in PPK format

– Exit PuTTYgen

• In a command window, navigate to EMR CLI

folder and enter command:

– ruby elastic-mapreduce --create --alive [--num-instance xx]

[--pig-interactive] [--hive-interactive] [--hbase --instance-type

m1.large]

• In AWS Console, go to EC2 Dashboard and

click Instances on left nav bar

• Wait until instance is running and get its

Public DNS name

– Use Compatibility View in IE or copy may not work](https://image.slidesharecdn.com/brusthadoopecosystem-120816105016-phpapp02/85/Brust-hadoopecosystem-16-320.jpg)

![Hive, Continued

• Load data from flat HDFS files

– LOAD DATA [LOCAL] INPATH 'myfile'

INTO TABLE mytable;

• SQL Queries

– CREATE, ALTER, DROP

– INSERT OVERWRITE (creates whole tables)

– SELECT, JOIN, WHERE, GROUP BY

– SORT BY, but ordering data is tricky!

– MAP/REDUCE/TRANSFORM…USING allows for custom

map, reduce steps utilizing Java or streaming code](https://image.slidesharecdn.com/brusthadoopecosystem-120816105016-phpapp02/85/Brust-hadoopecosystem-28-320.jpg)