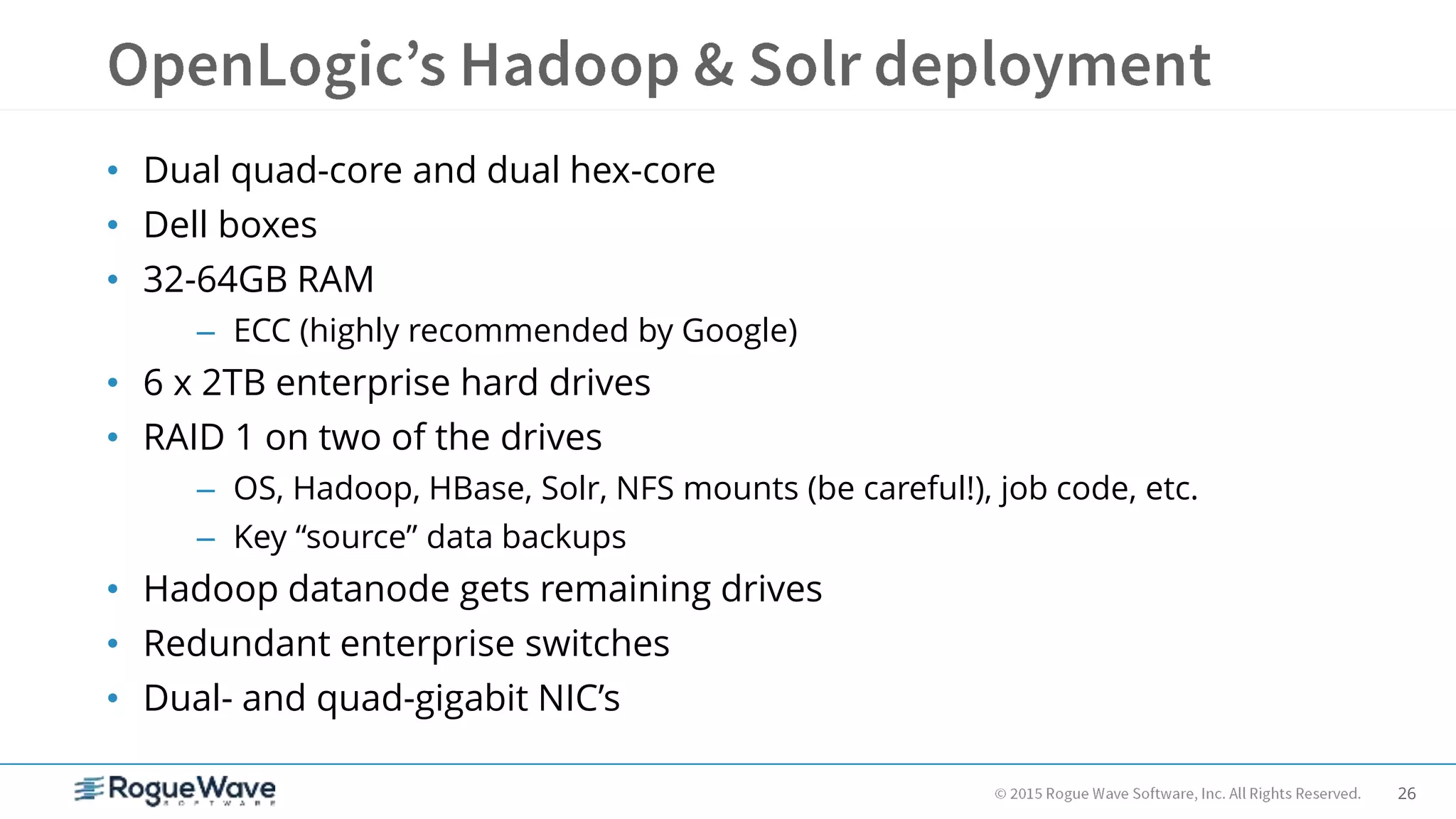

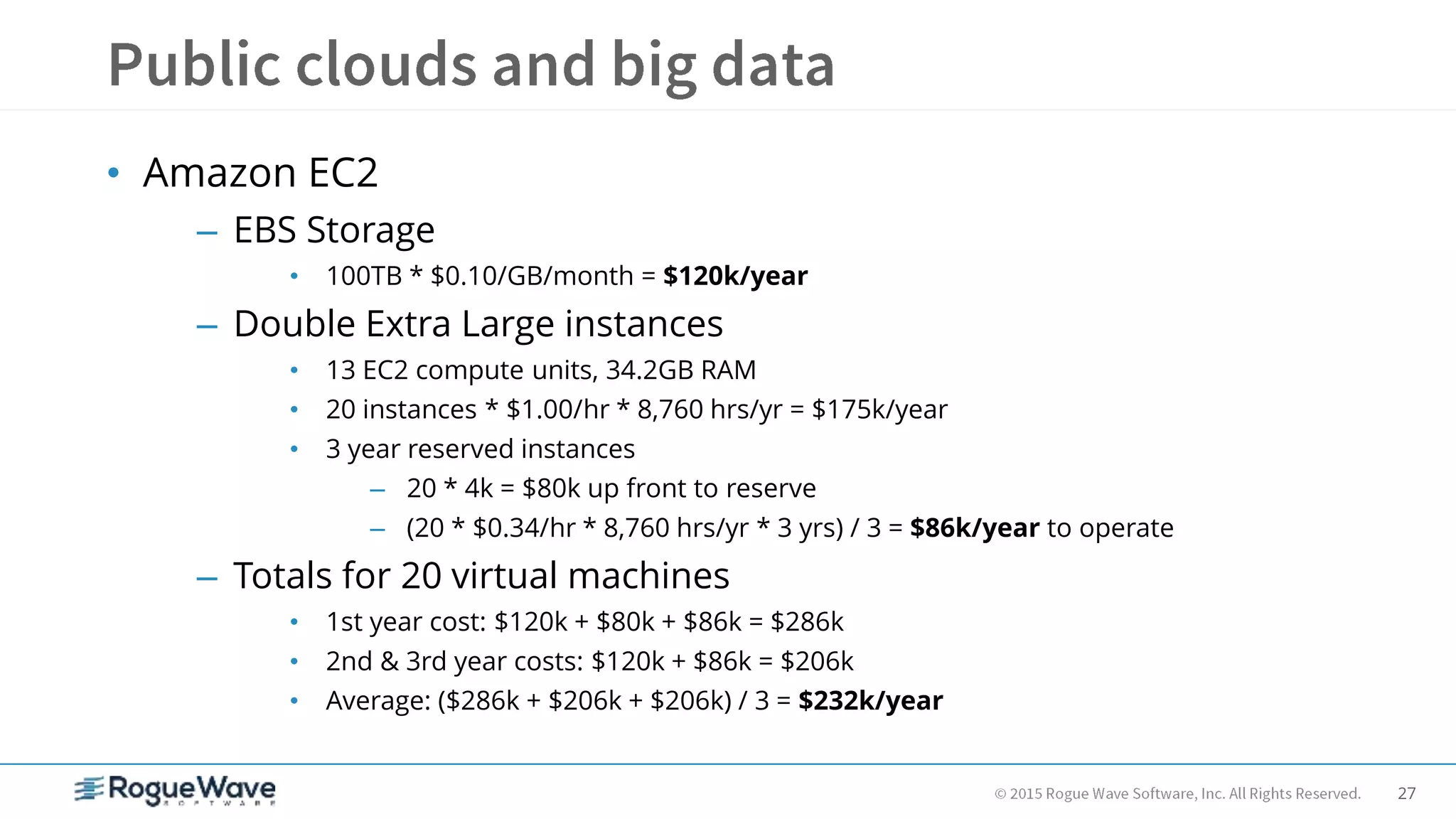

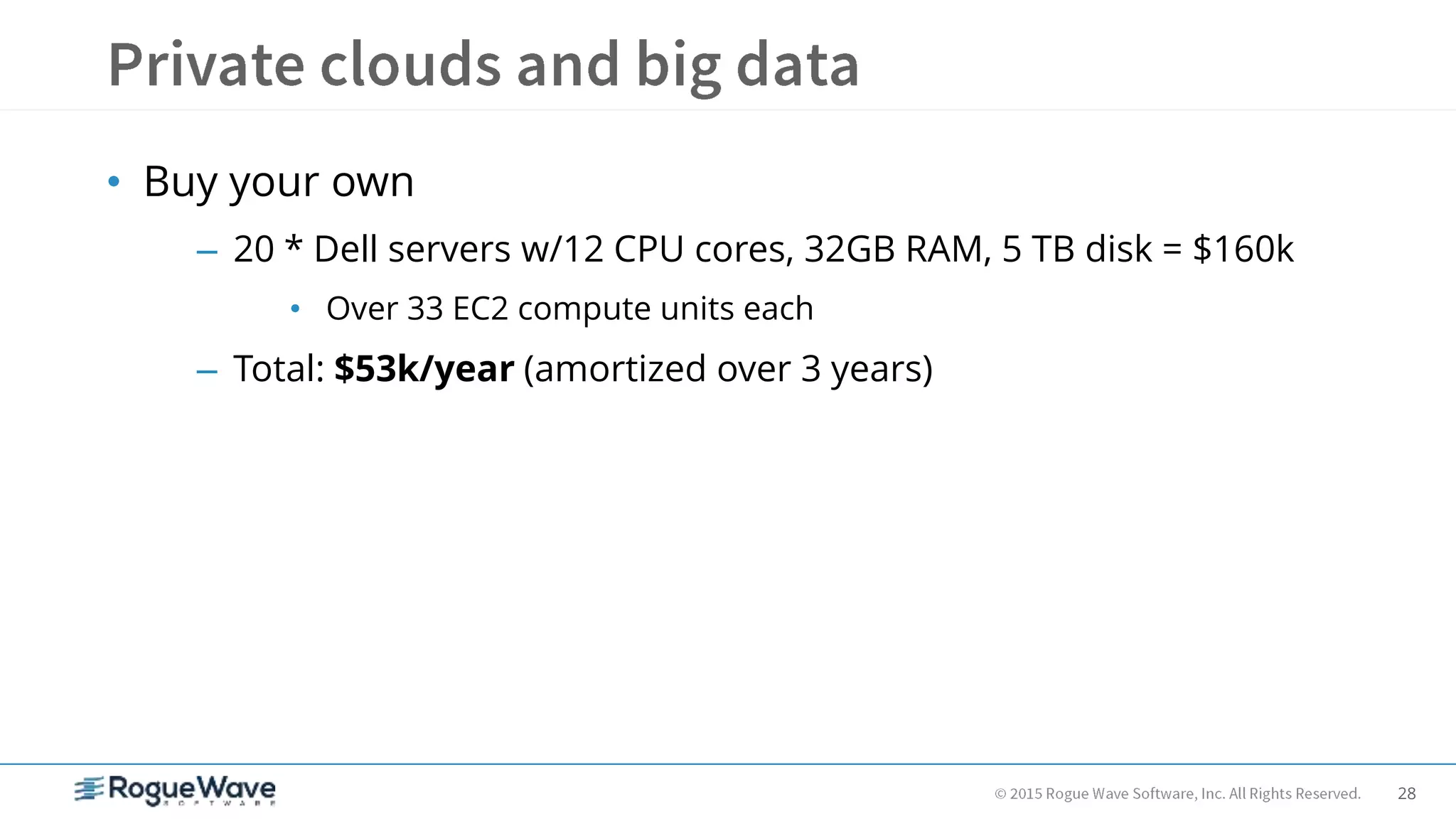

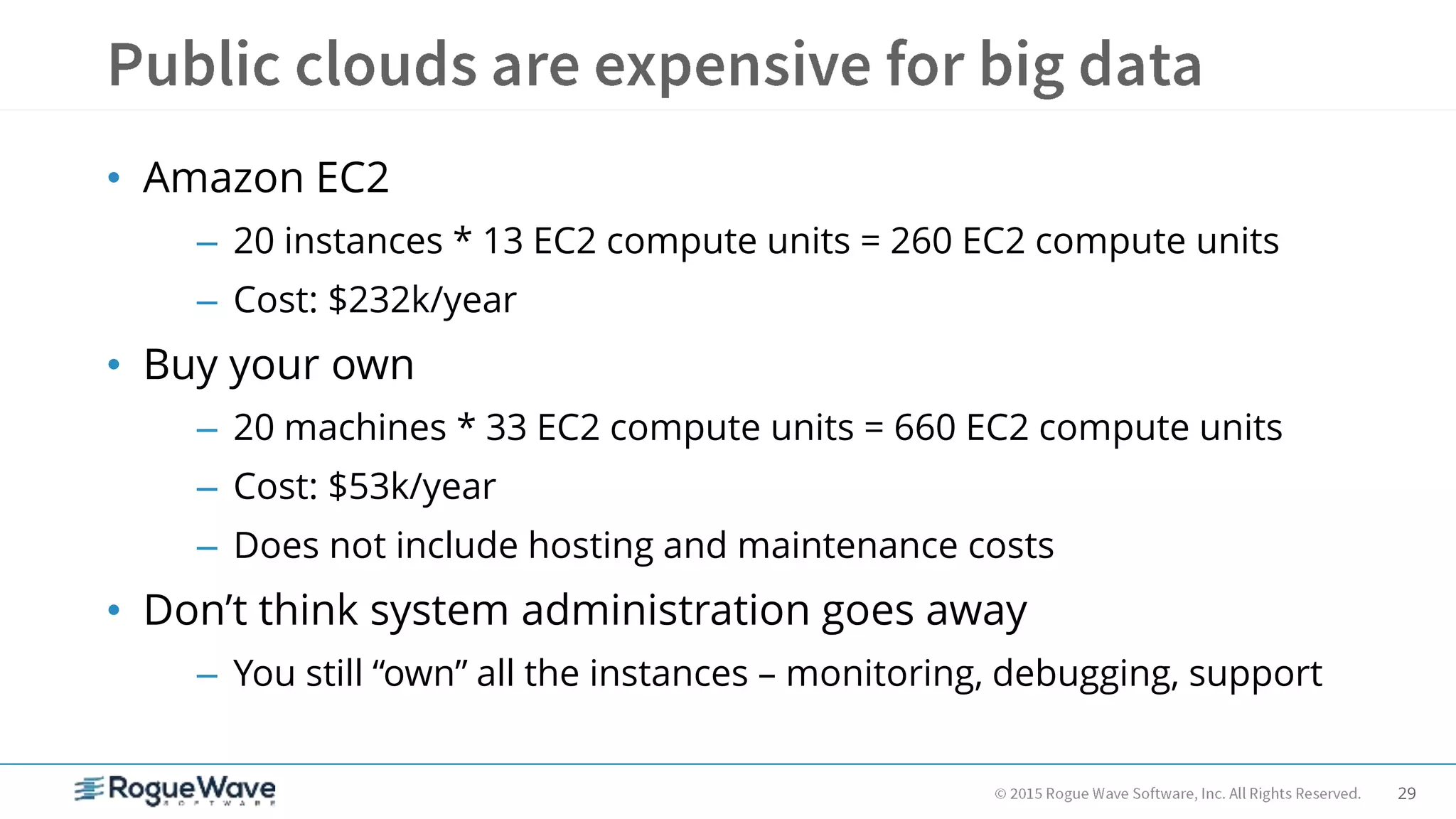

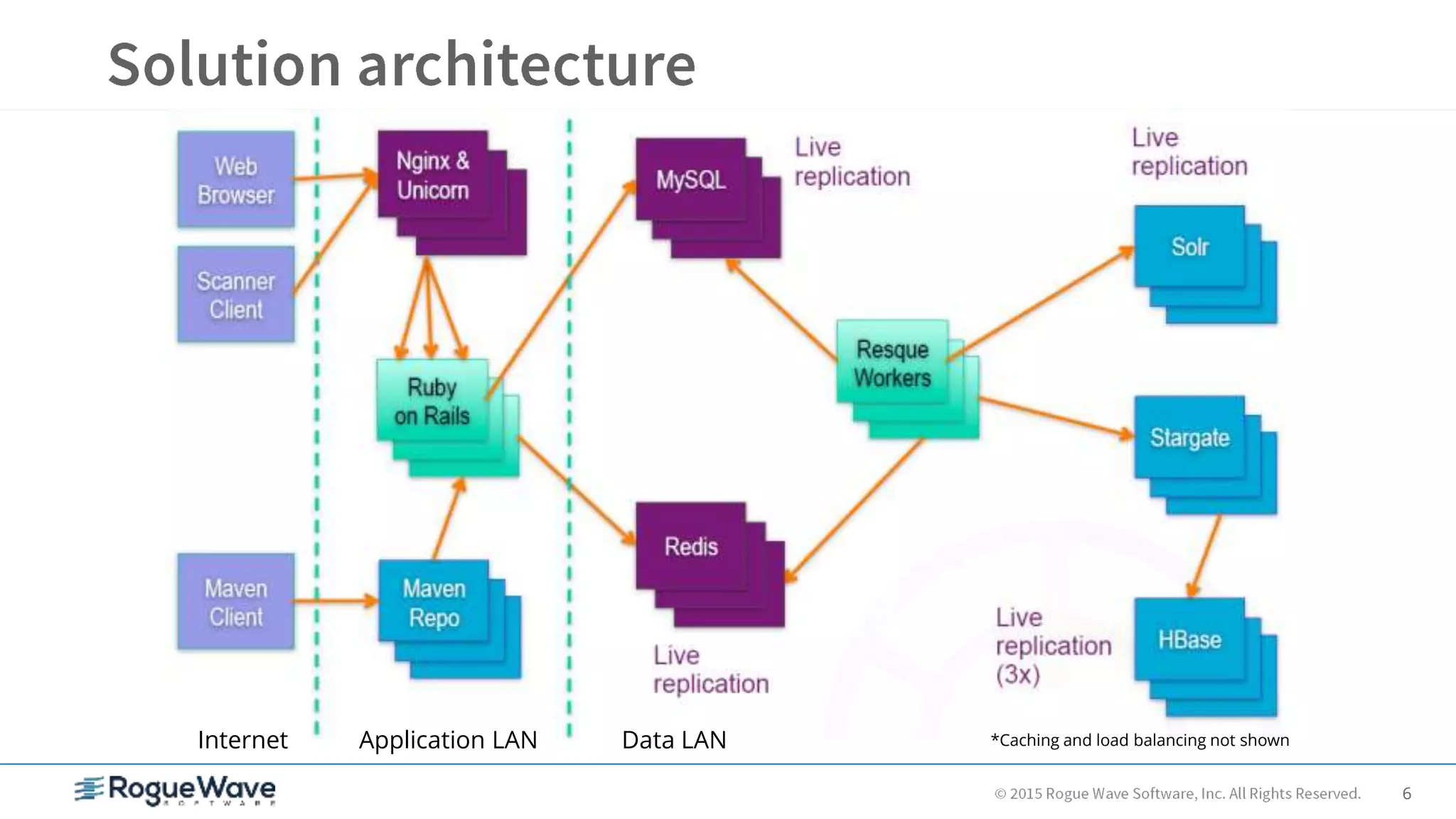

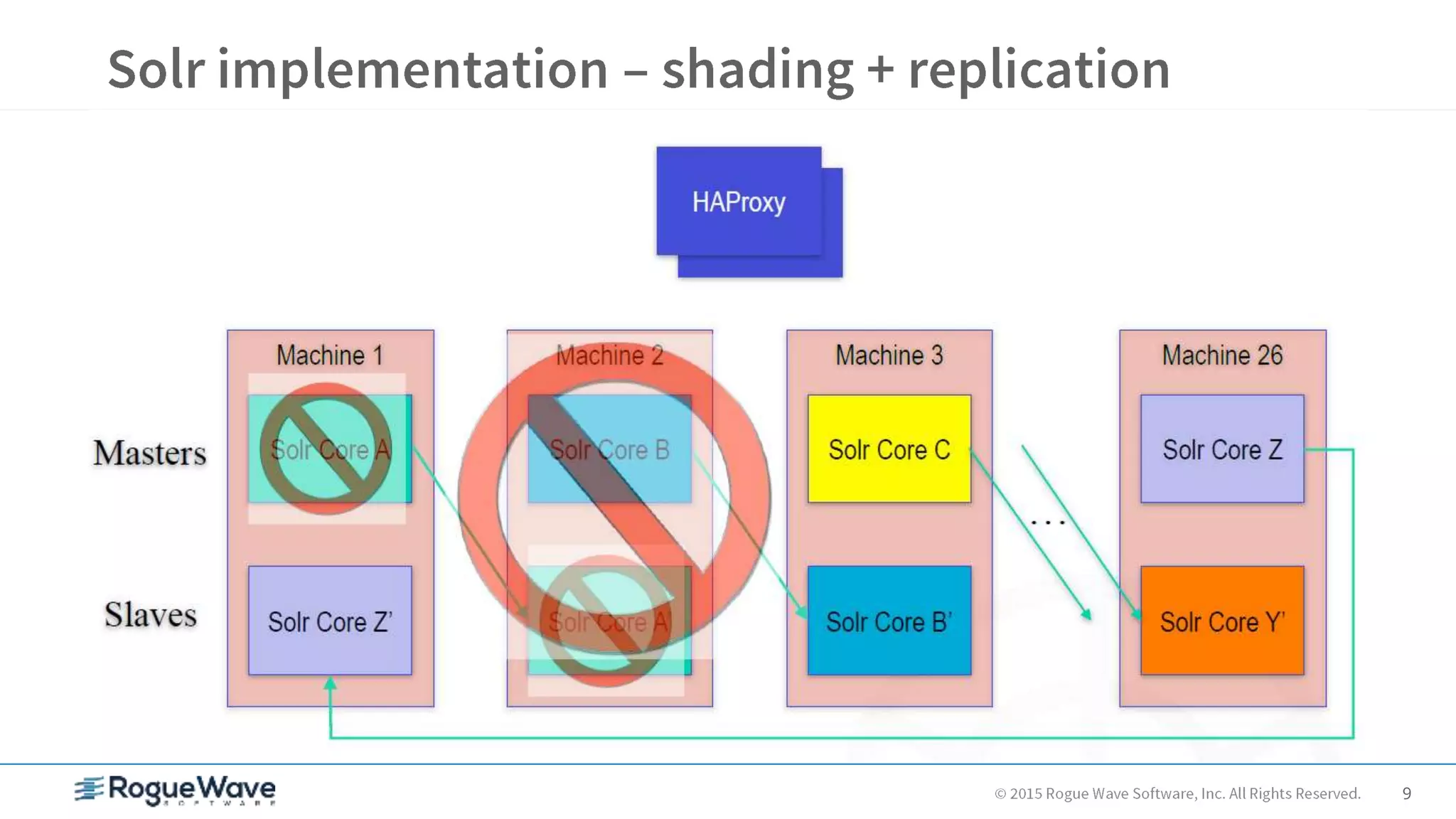

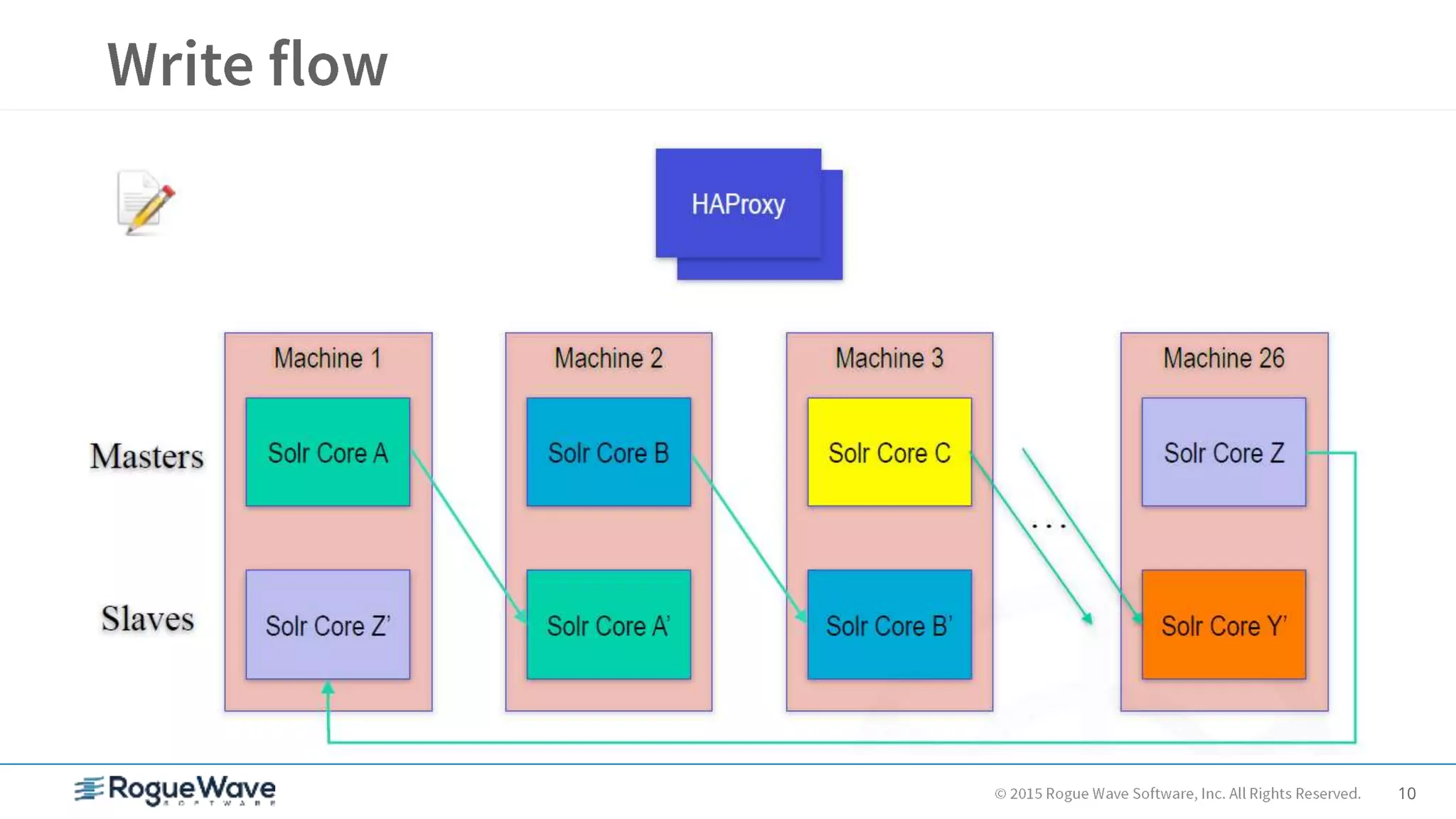

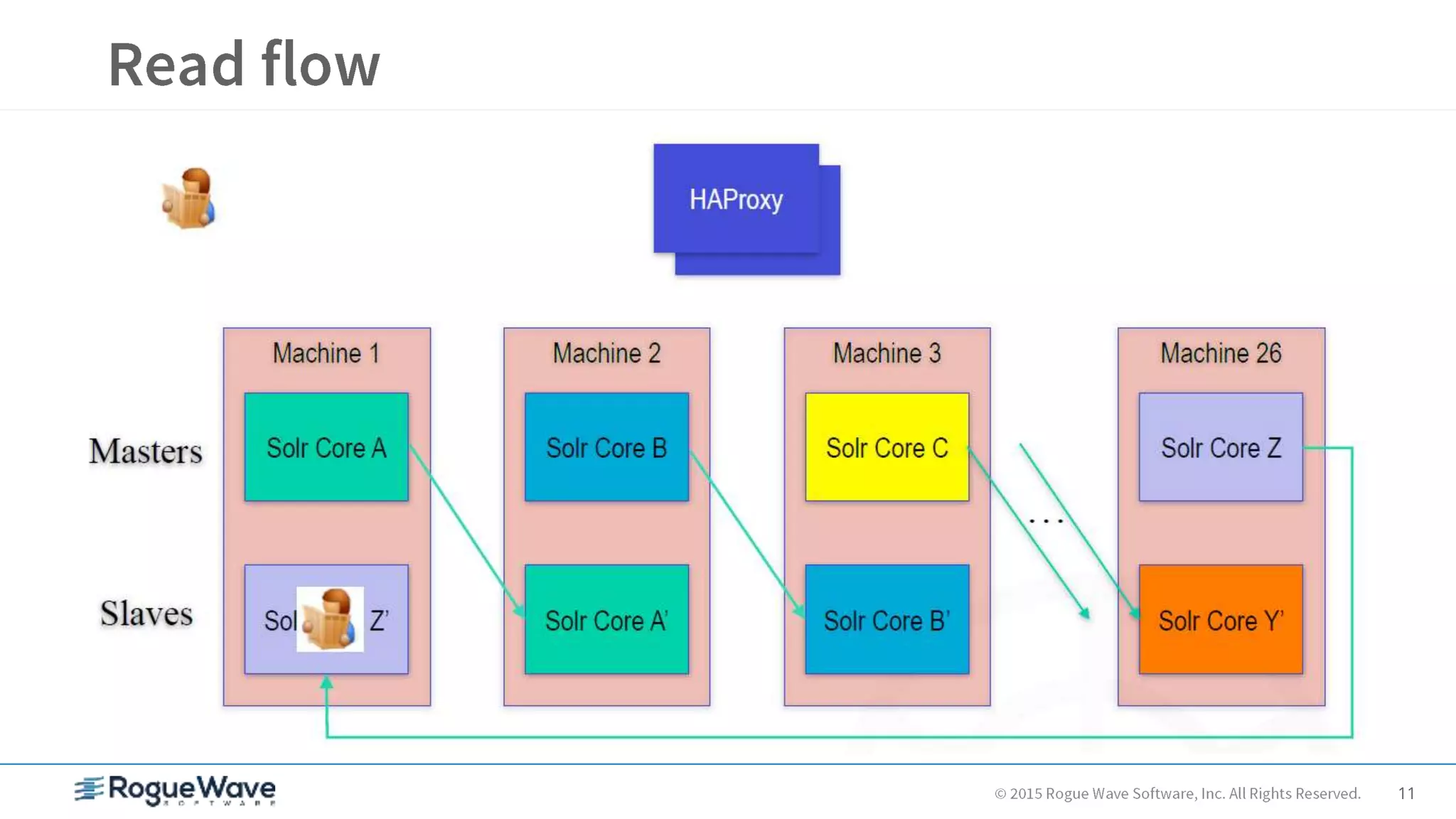

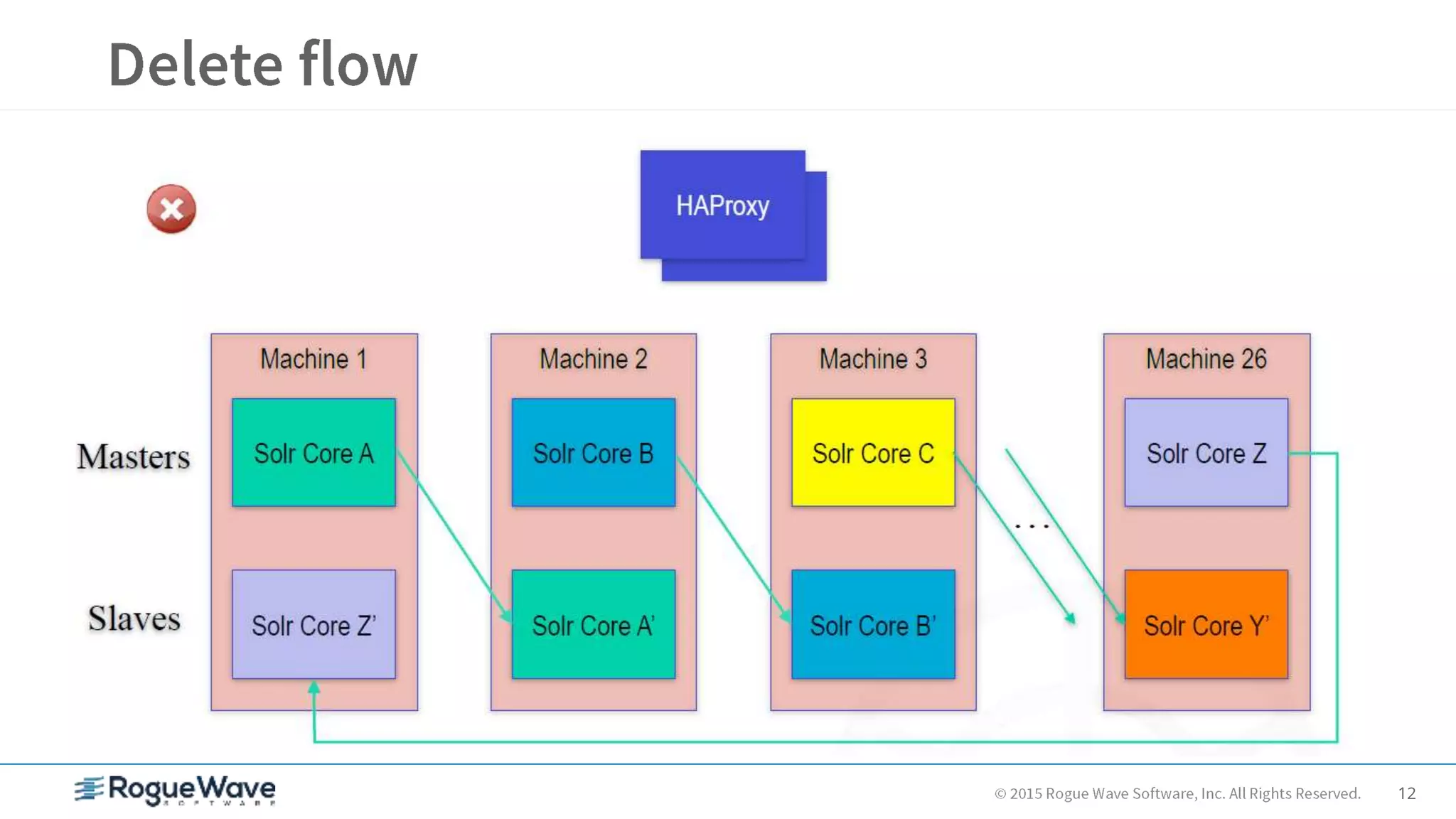

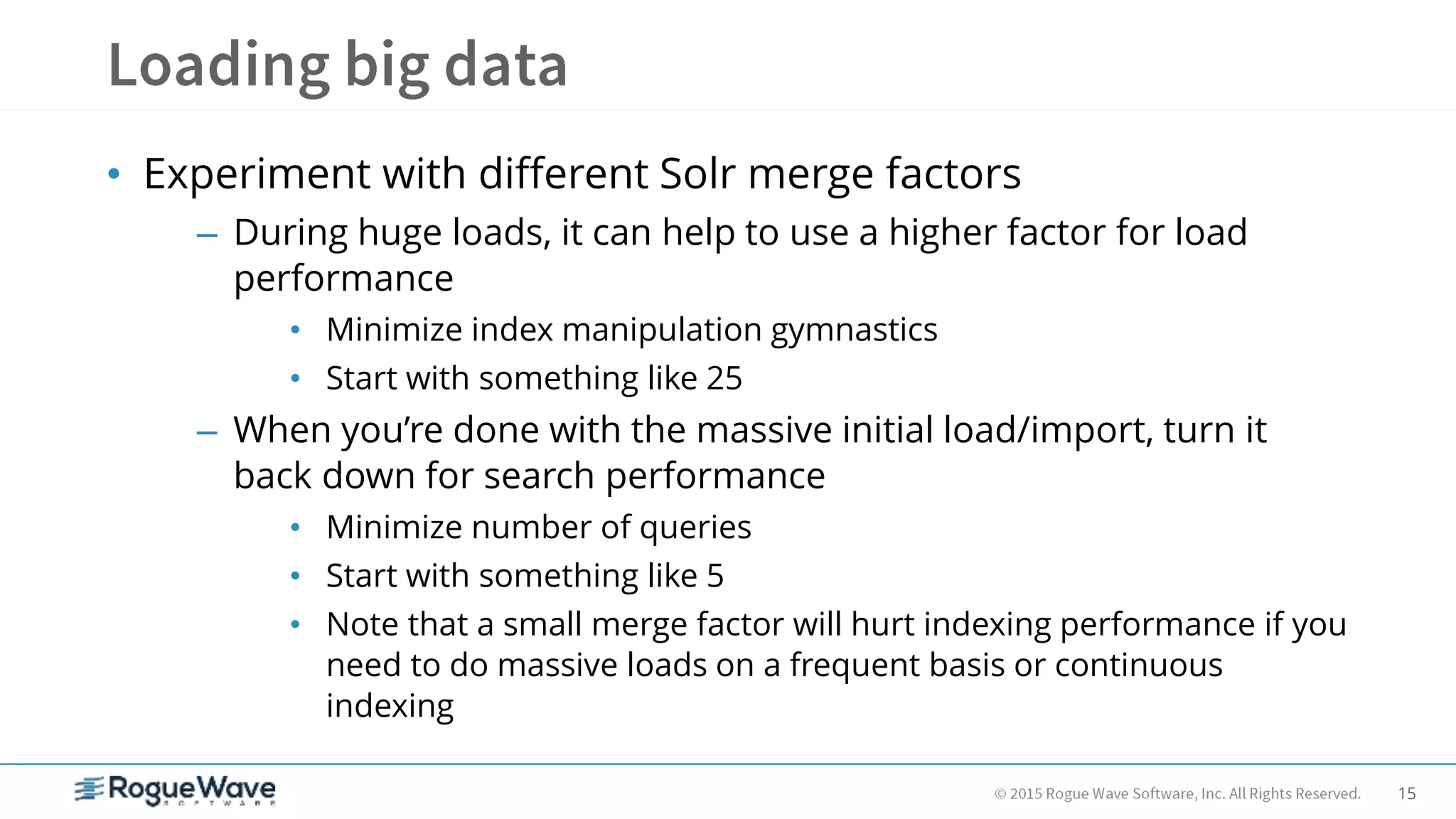

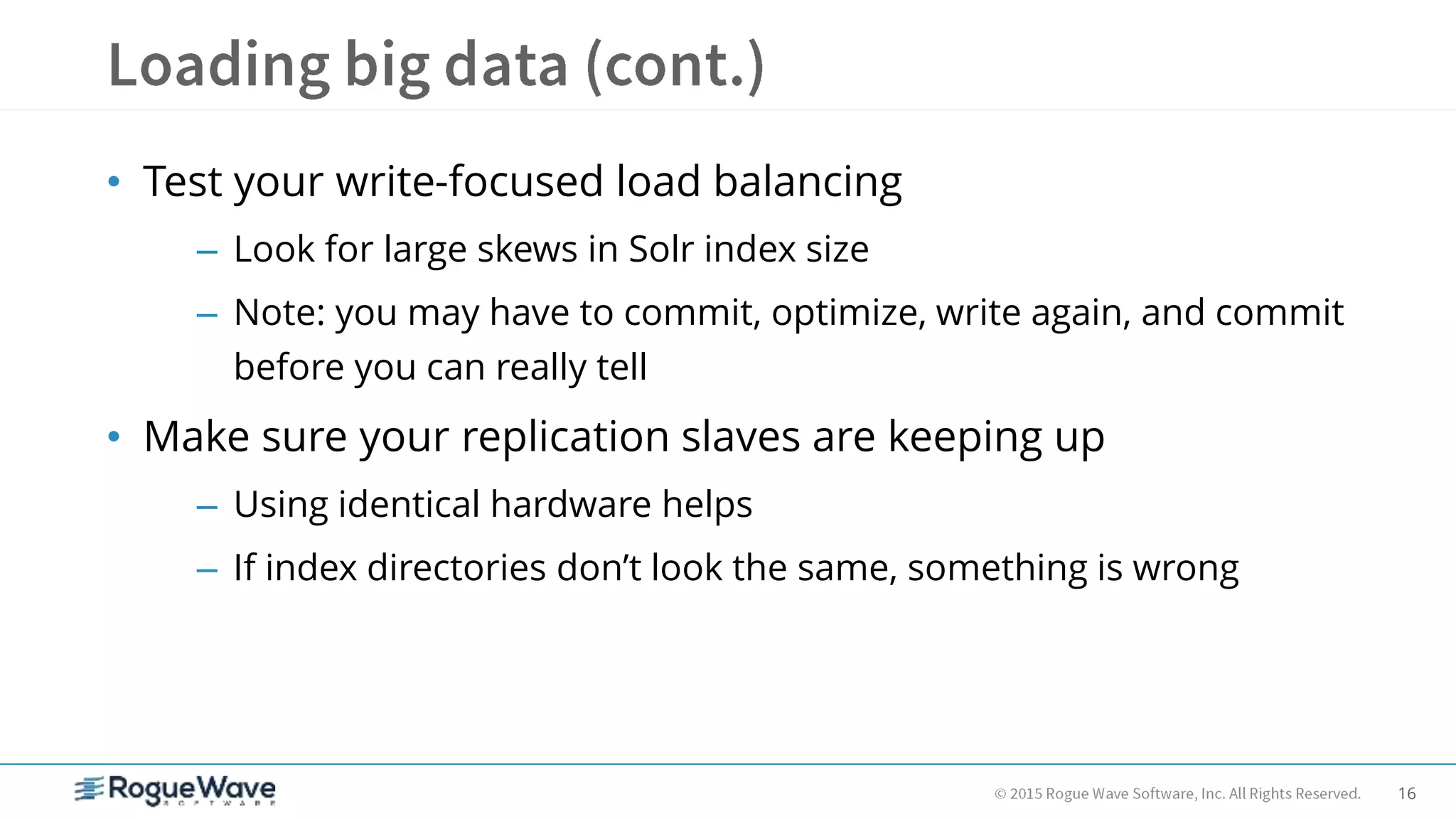

The document discusses the integration of Solr and Hadoop for real-time searching of big data, highlighting the limitations of traditional relational databases and emphasizing the need for scalable solutions. It details components like HBase, Solr, and their operational intricacies, including sharding, replication, and performance optimization techniques. The author also contrasts on-premise hardware versus cloud solutions, showcasing cost considerations and system administration challenges in managing big data environments.

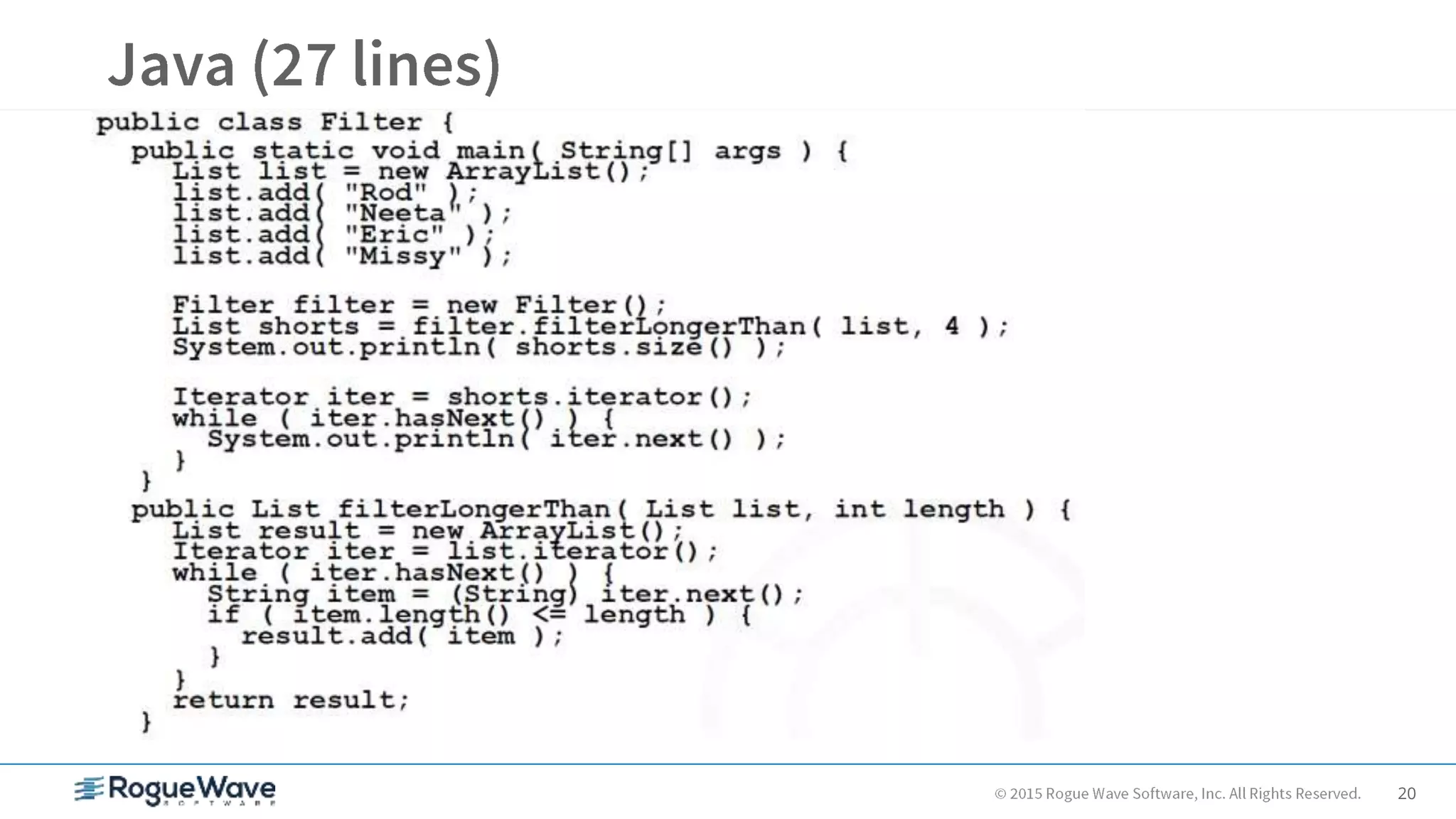

![21

JRuby

list = ["Rod", "Neeta", "Eric", "Missy"]

shorts = list.find_all { |name| name.size <= 4 }

puts shorts.size

shorts.each { |name| puts name }

-> 2

-> Rod

Eric

Groovy

list = ["Rod", "Neeta", "Eric", "Missy"]

shorts = list.findAll { name -> name.size() <= 4 }

println shorts.size

shorts.each { name -> println name }

-> 2

-> Rod

Eric](https://image.slidesharecdn.com/real-timesearchingofbigdatawithsolrandhadoop-151030144524-lva1-app6892/75/Real-time-searching-of-big-data-with-Solr-and-Hadoop-21-2048.jpg)