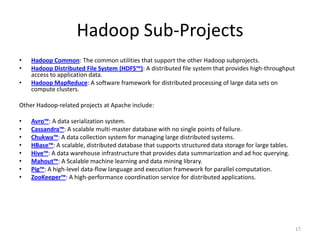

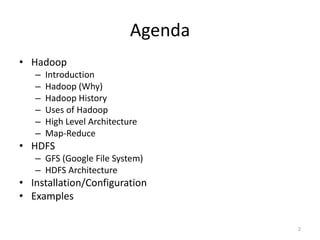

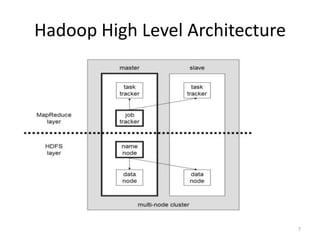

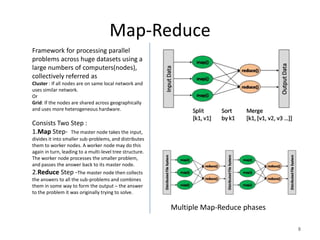

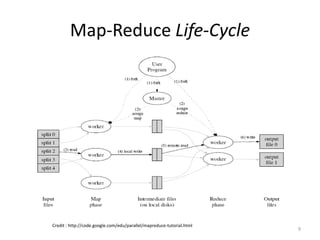

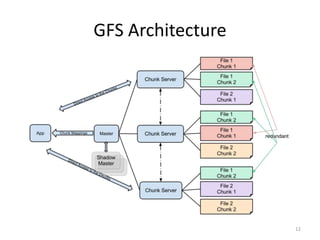

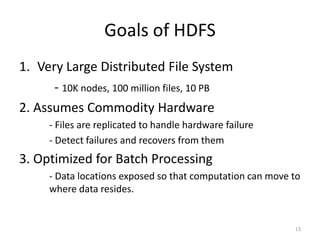

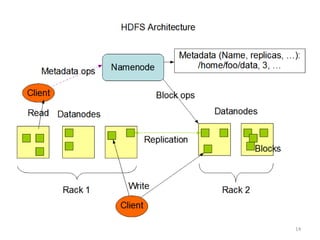

The document provides an overview of Hadoop, an open-source framework designed for processing large datasets across distributed environments. It covers various aspects such as the history, architecture, and components like the Hadoop Distributed File System (HDFS) and MapReduce framework. The document also includes installation/configuration instructions and highlights the significant applications and sub-projects related to Hadoop.

![Installation/ Configuration

[rjain@10.1.110.12 hadoop-1.0.3]$ vi conf/hdfs-site.xml [rjain@10.1.110.12 hadoop-1.0.3]$ pwd

<configuration> /home/rjain/hadoop-1.0.3

<property>

<name>dfs.replication</name>

[rjain@10.1.110.12 hadoop-1.0.3]$ bin/start-all.sh

<value>1</value>

</property> [rjain@10.1.110.12 hadoop-1.0.3]$ bin/start-mapred.sh

<property>

<name>dfs.permissions</name>

[rjain@10.1.110.12 hadoop-1.0.3]$ bin/start-dfs.sh

<value>true</value>

</property> [rjain@10.1.110.12 hadoop-1.0.3]$ bin/hadoop fs

<property> Usage: java FsShell

<name>dfs.data.dir</name> [-ls <path>]

<value>/home/rjain/rahul/hdfs/data</value> [-lsr <path>] : Recursive version of ls. Similar to Unix ls -R.

</property> [-du <path>] : Displays aggregate length of files contained in the directory or the length of a file.

<property> [-dus <path>] : Displays a summary of file lengths.

<name>dfs.name.dir</name> [-count[-q] <path>]

<value>/home/rjain/rahul/hdfs/name</value> [-mv <src> <dst>]

</property> [-cp <src> <dst>]

</configuration> [-rm [-skipTrash] <path>]

[-rmr [-skipTrash] <path>] : Recursive version of delete(rm).

[rjain@10.1.110.12 hadoop-1.0.3]$ vi conf/mapred-site.xml [-expunge] : Empty the Trash

<configuration> [-put <localsrc> ... <dst>] : Copy single src, or multiple srcs from local file system to the

<property> destination filesystem

<name>mapred.job.tracker</name> [-copyFromLocal <localsrc> ... <dst>]

<value>localhost:9001</value> [-moveFromLocal <localsrc> ... <dst>]

</property> [-get [-ignoreCrc] [-crc] <src> <localdst>]

</configuration> [-getmerge <src> <localdst> [addnl]]

[-cat <src>]

[rjain@10.1.110.12 hadoop-1.0.3]$ vi conf/core-site.xml [-text <src>] : Takes a source file and outputs the file in text format. The allowed formats are zip

<configuration> and TextRecordInputStream.

<property> [-copyToLocal [-ignoreCrc] [-crc] <src> <localdst>]

<name>fs.default.name</name> [-moveToLocal [-crc] <src> <localdst>]

<value>hdfs://localhost:9000</value> [-mkdir <path>]

</property> [-setrep [-R] [-w] <rep> <path/file>] : Changes the replication factor of a file

</configuration> [-touchz <path>] : Create a file of zero length.

[-test -[ezd] <path>] : -e check to see if the file exists. Return 0 if true. -z check to see if the file is

[rjain@10.1.110.12 hadoop-1.0.3]$ jps zero length. Return 0 if true. -d check to see if the path is directory. Return 0 if true.

29756 SecondaryNameNode [-stat [format] <path>] : Returns the stat information on the path like created time of dir

19847 TaskTracker [-tail [-f] <file>] : Displays last kilobyte of the file to stdout

18756 Jps [-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

29483 NameNode [-chown [-R] [OWNER][:[GROUP]] PATH...]

29619 DataNode [-chgrp [-R] GROUP PATH...] 15

19711 JobTracker [-help [cmd]]](https://image.slidesharecdn.com/hadoop-for-beginners-130112031616-phpapp02/85/Hadoop-HDFS-for-Beginners-15-320.jpg)

![HDFS- Read/Write Example

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(conf);

Given an input/output file name as string, we construct inFile/outFile Path objects.

Most of the FileSystem APIs accepts Path objects.

Path inFile = new Path(argv[0]);

Path outFile = new Path(argv[1]);

Validate the input/output paths before reading/writing.

if (!fs.exists(inFile))

printAndExit("Input file not found");

if (!fs.isFile(inFile))

printAndExit("Input should be a file");

if (fs.exists(outFile))

printAndExit("Output already exists");

Open inFile for reading.

FSDataInputStream in = fs.open(inFile);

Open outFile for writing.

FSDataOutputStream out = fs.create(outFile);

Read from input stream and write to output stream until EOF.

while ((bytesRead = in.read(buffer)) > 0) {

out.write(buffer, 0, bytesRead);

}

Close the streams when done.

in.close();

out.close();

16](https://image.slidesharecdn.com/hadoop-for-beginners-130112031616-phpapp02/85/Hadoop-HDFS-for-Beginners-16-320.jpg)