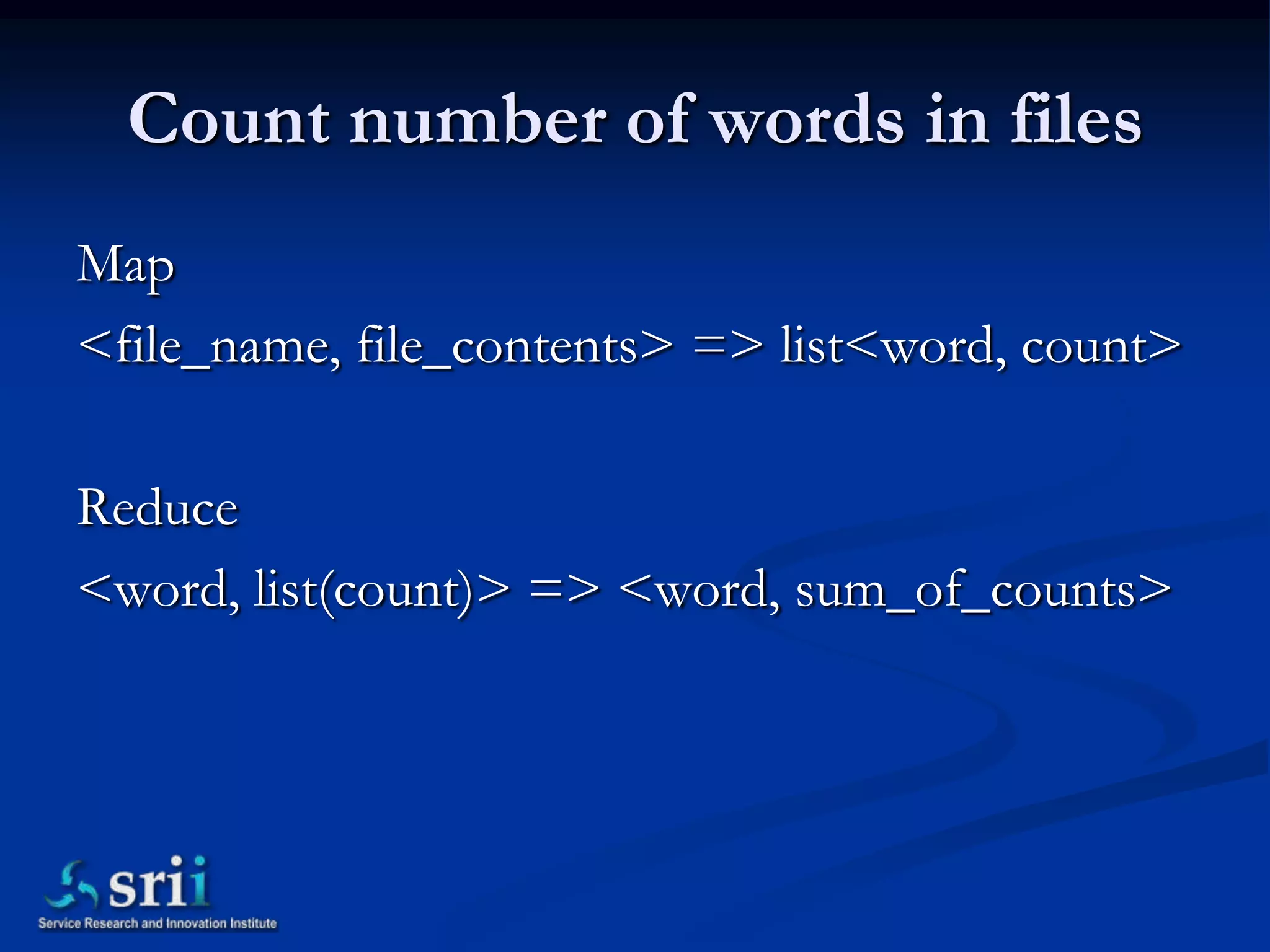

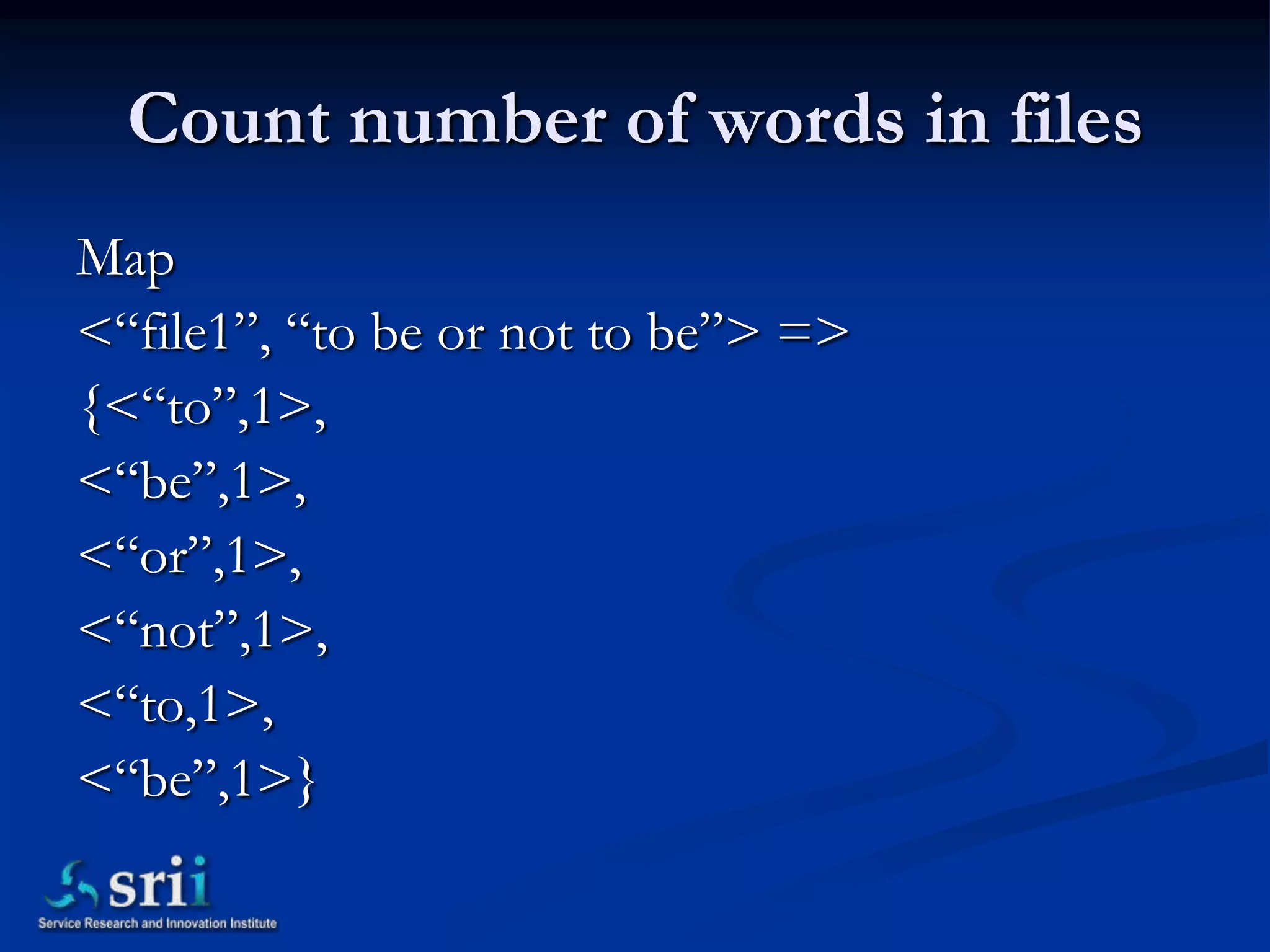

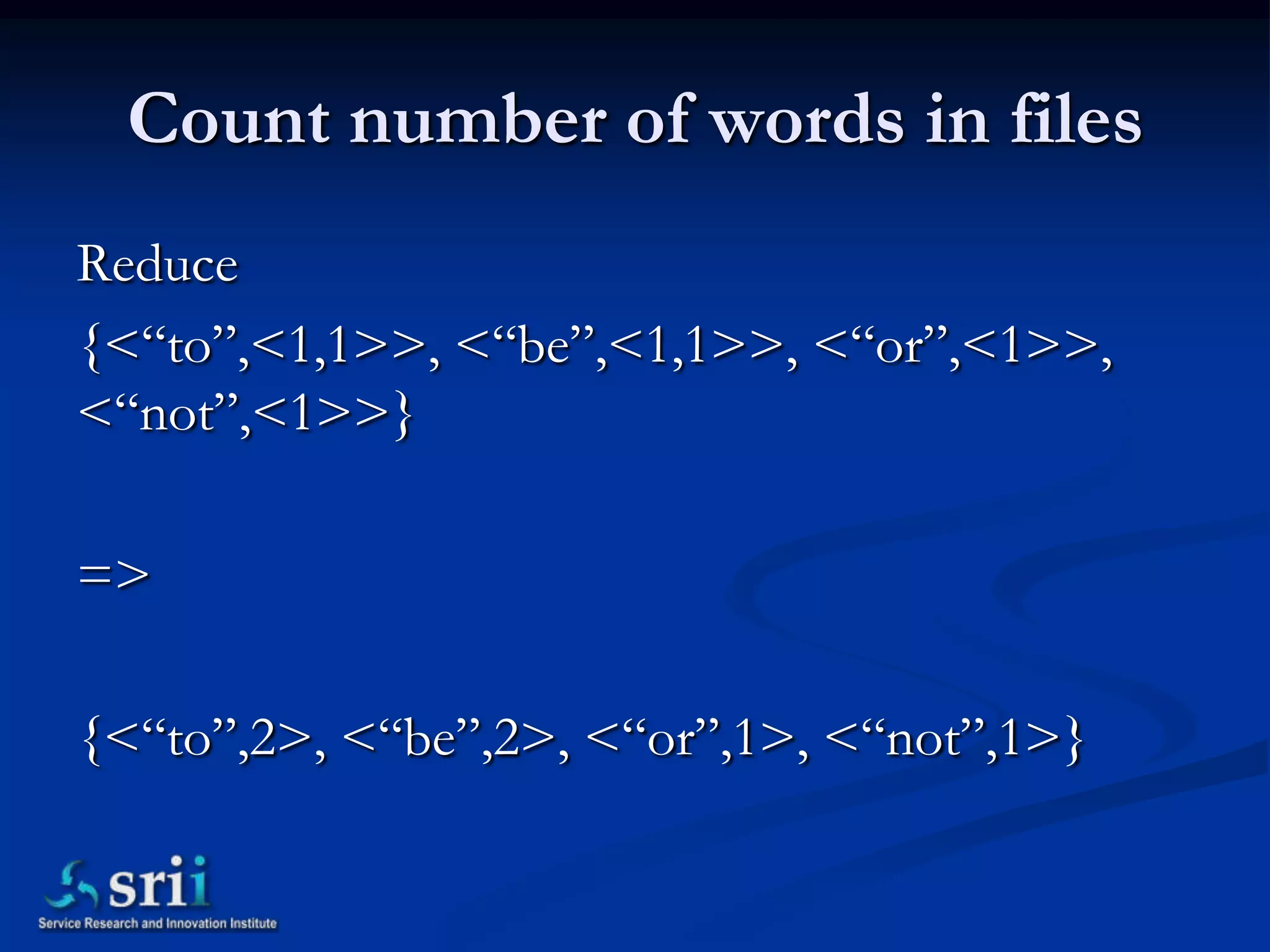

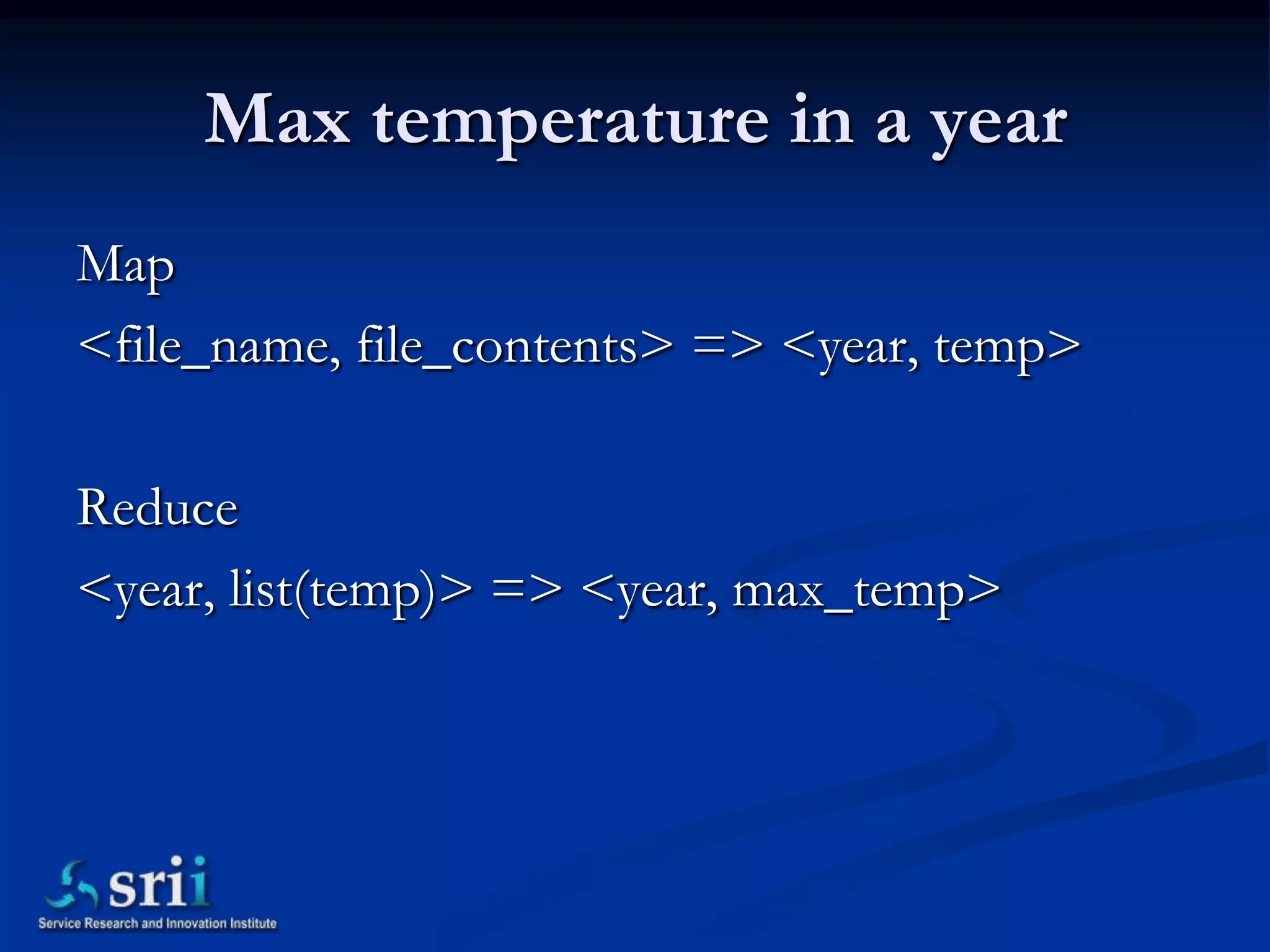

This document provides an overview and introduction to BigData using Hadoop and Pig. It begins with introducing the speaker and their background working with large datasets. It then outlines what will be covered, including an introduction to BigData, Hadoop, Pig, HBase and Hive. Definitions and examples are provided for each. The remainder of the document demonstrates Hadoop and Pig concepts and commands through code examples and explanations.

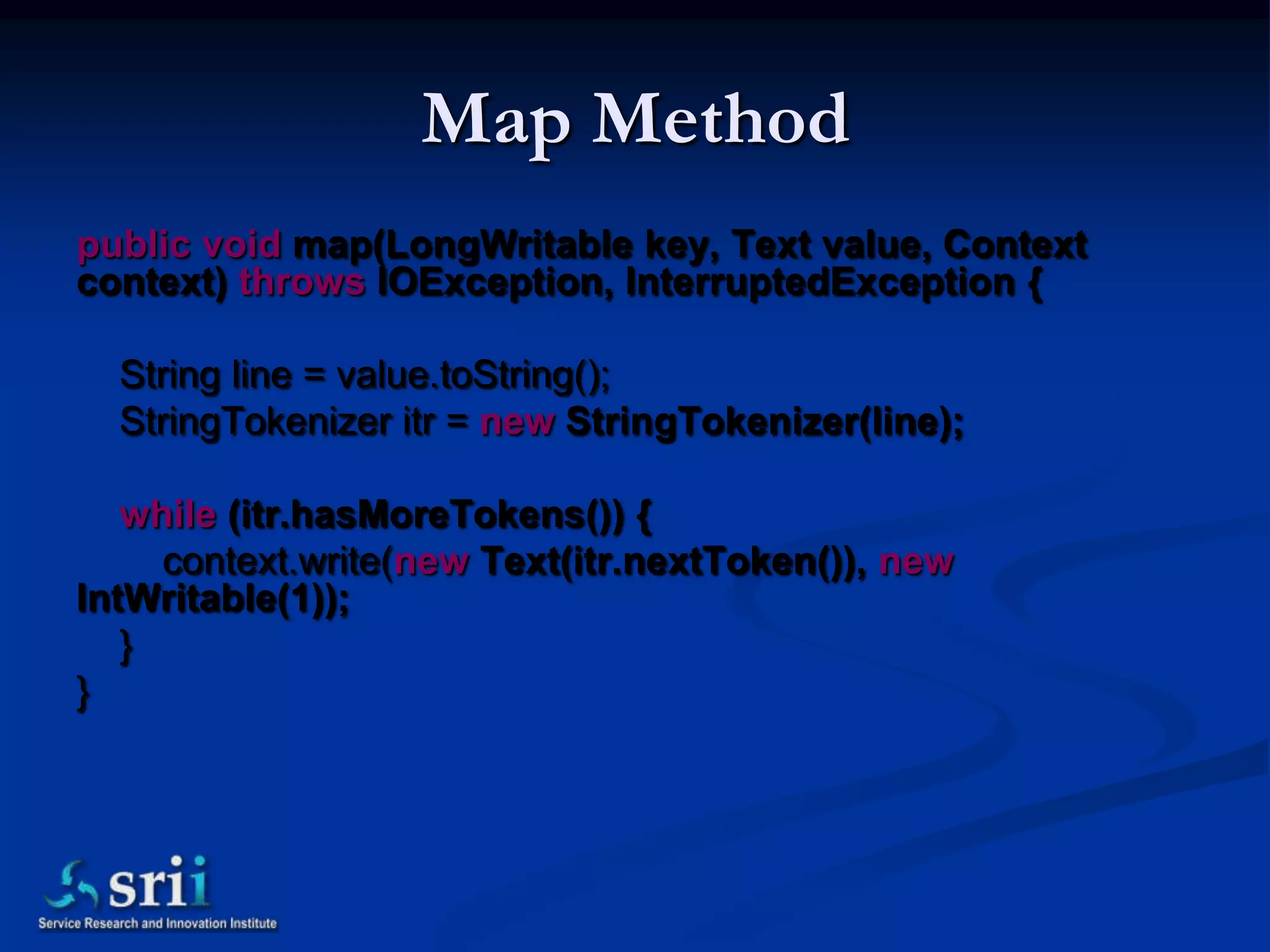

![Main Method

Job job = new Job();

job.setJarByClass(CountWords.class);

job.setJobName("Count Words");

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

job.setMapperClass(CountWordsMapper.class);

job.setReducerClass(CountWordsReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);](https://image.slidesharecdn.com/hadoop-and-pig-srii-121211215405-phpapp01/75/Hands-on-Hadoop-and-pig-44-2048.jpg)