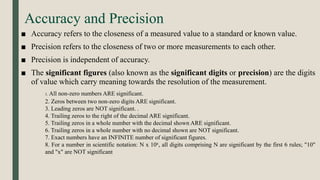

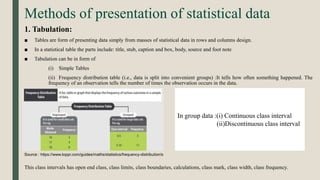

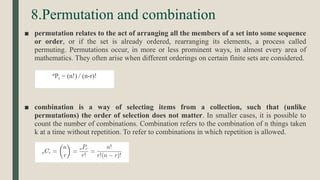

This document introduces biostatistics. It covers the collection and presentation of quantitative and qualitative data through tables, charts, and diagrams, followed by descriptive statistics: measures of central tendency (mean, median, mode) and of dispersion (range, standard deviation). It then introduces inferential statistics, including probability distributions, hypothesis testing, and tests of significance. Examples include the normal, binomial, and Poisson distributions, and the chi-square test, t-test, z-test, and ANOVA as tests of significance.
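As a minimal illustration of the descriptive measures named above, the sketch below computes them with Python's standard `statistics` module. The `readings` list is a hypothetical sample invented for this example and does not come from the document; the standard deviation shown is the sample (n - 1) form, which is an assumption about which definition is intended.

```python
import statistics

# Hypothetical sample (invented for illustration): eight systolic
# blood pressure readings in mmHg.
readings = [118, 122, 122, 130, 135, 141, 122, 128]

# Measures of central tendency
mean = statistics.mean(readings)      # arithmetic average
median = statistics.median(readings)  # middle value of the sorted data
mode = statistics.mode(readings)      # most frequent value

# Measures of dispersion
value_range = max(readings) - min(readings)  # largest minus smallest
sample_sd = statistics.stdev(readings)       # sample standard deviation (n - 1)

print(f"mean={mean}, median={median}, mode={mode}")
print(f"range={value_range}, sample SD={sample_sd:.2f}")
```

If the data represent an entire population rather than a sample, `statistics.pstdev` gives the population form of the standard deviation instead.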