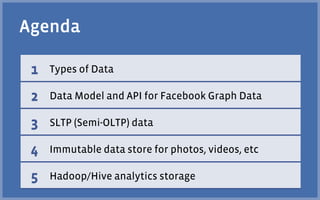

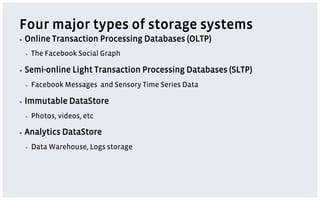

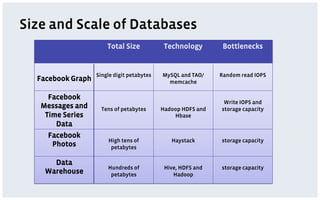

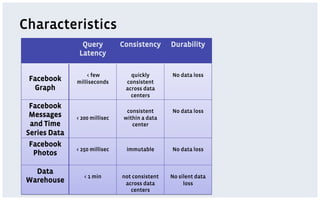

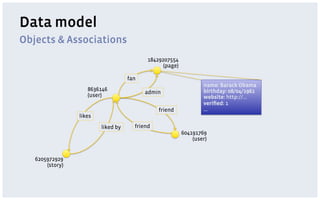

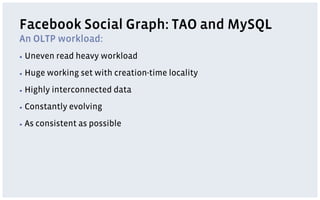

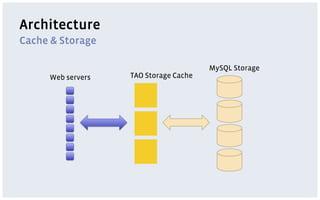

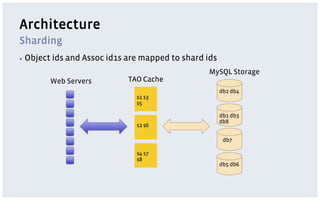

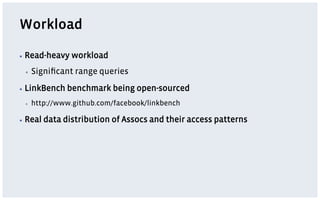

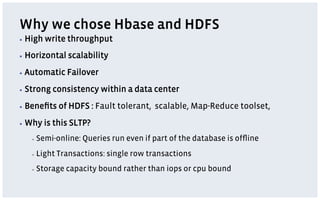

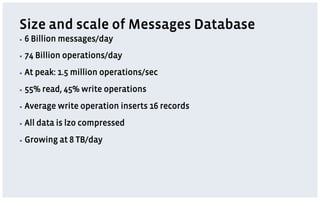

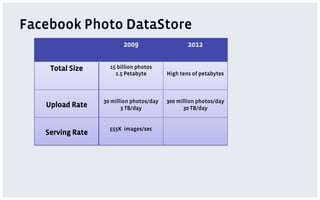

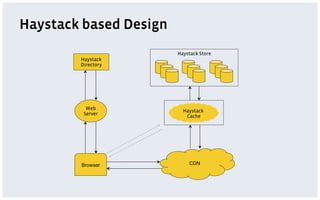

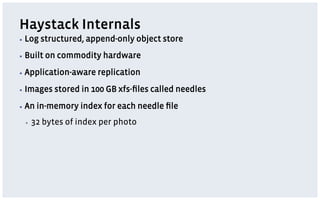

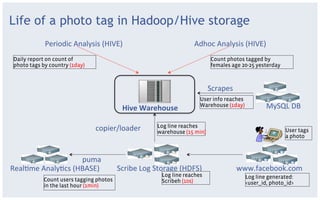

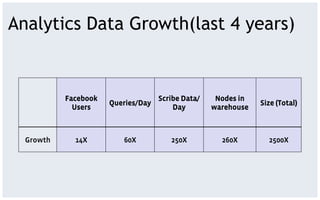

This document surveys the storage systems and data models Facebook uses to manage data at petabyte scale. It covers OLTP and SLTP workloads, the use of Hadoop and HDFS for analytics, and the architecture of Facebook's social graph and photo storage systems. Highlights include the performance characteristics of the different databases, data growth metrics, and the advantages of Hadoop for data experiments and crowd-sourced analytics.