This document provides an introduction to big data, including:

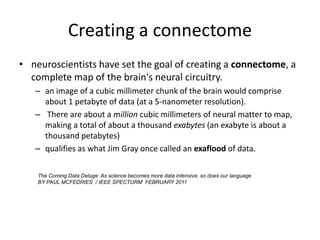

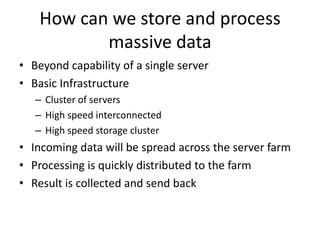

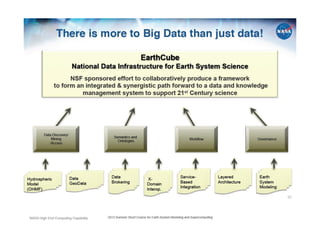

- Big data is characterized by its volume, velocity, and variety (often called the "three Vs"). Together these properties make it difficult to process with traditional databases, so new technologies are required.

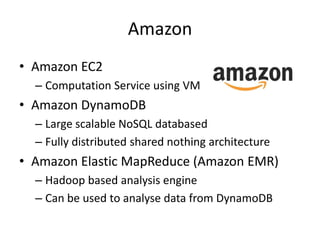

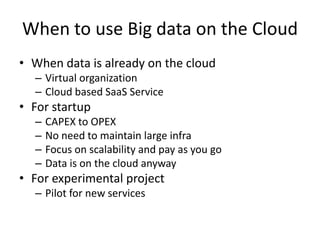

- Technologies such as Hadoop, MongoDB, and the cloud platforms offered by Google and Amazon provide scalable storage and processing for big data.

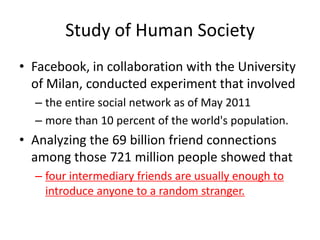

- Common uses of big data include analyzing social media activity and search data to gain insights, which in turn enable personalized experiences and targeted advertising.

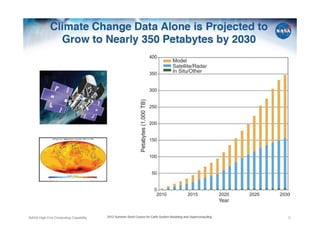

- As data volumes continue growing exponentially from sources like sensors, simulations, and digital media, new tools and approaches are needed to effectively analyze and make sense of "big data".
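Hadoop, listed above, processes data with the MapReduce model. A *map* step emits key/value pairs from each input record, a *shuffle* groups the pairs by key, and a *reduce* step combines each group. The sketch below illustrates that flow with a word count over a few short posts, similar in spirit to counting terms in social media data. It is a minimal single-process illustration in plain Python, not Hadoop's API. The function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical.

```python
from collections import defaultdict
from itertools import chain
from typing import Iterable, Iterator

# Illustrative only: a sequential, in-memory imitation of MapReduce.
# This is NOT Hadoop's API; Hadoop runs these phases in parallel on a cluster.


def map_phase(document: str) -> Iterator[tuple[str, int]]:
    """Emit a (word, 1) pair for every whitespace-separated word in one document."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group intermediate values by key, as the framework does between map and reduce."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Combine all values for one key; for word count, sum the 1s."""
    return key, sum(values)


def word_count(documents: Iterable[str]) -> dict[str, int]:
    """Run map, shuffle, and reduce one after another in a single process."""
    intermediate = chain.from_iterable(map_phase(doc) for doc in documents)
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    posts = [
        "big data needs new tools",
        "new tools for big data",
        "data data everywhere",
    ]
    counts = word_count(posts)
    # Print the most frequent words first, breaking ties alphabetically.
    for word, n in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        print(word, n)
```

Because each `map_phase` call depends on only one document and each `reduce_phase` call depends on only one key, both steps can be spread across many machines. That independence is what lets the MapReduce model scale to large data volumes.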