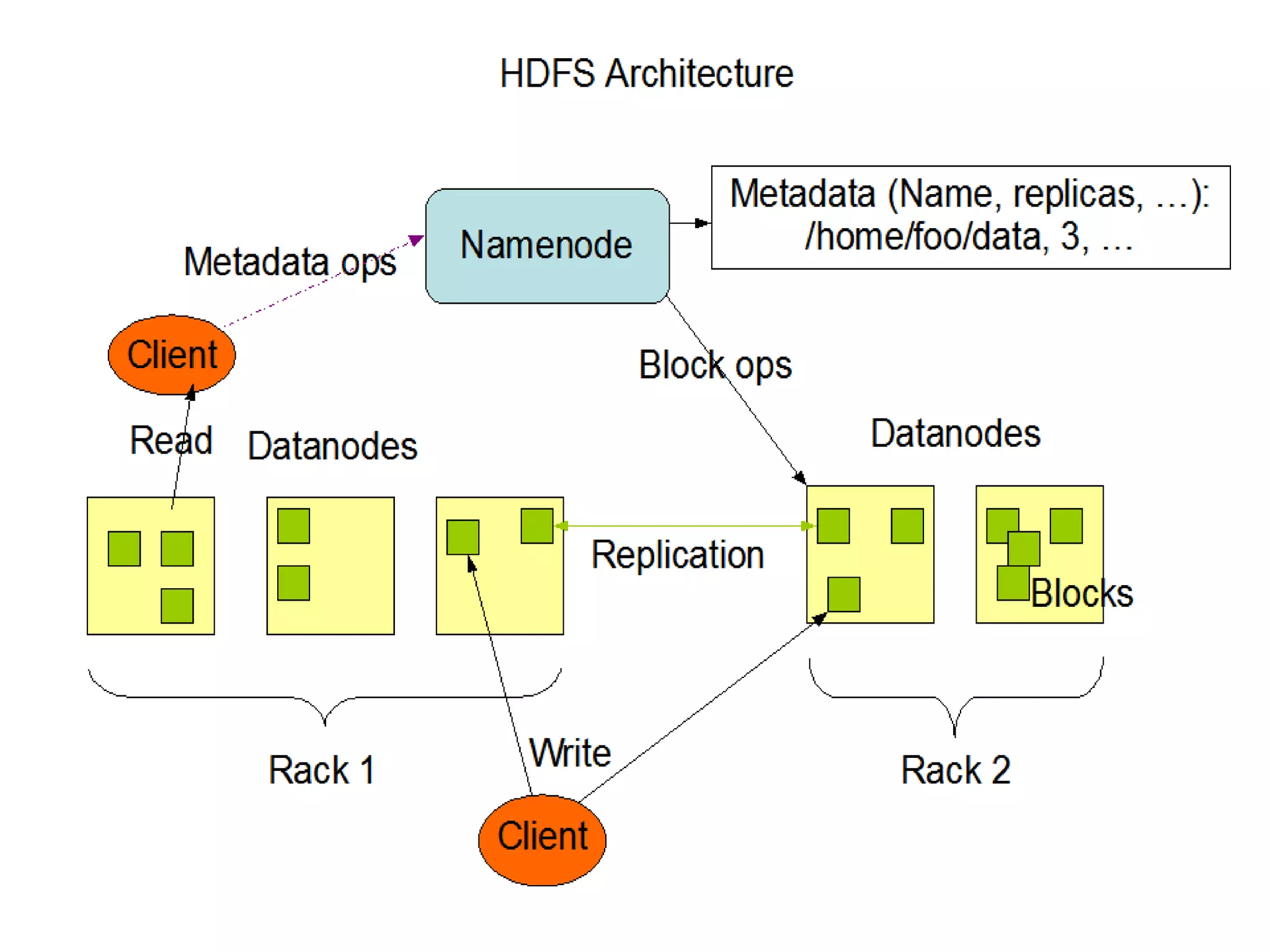

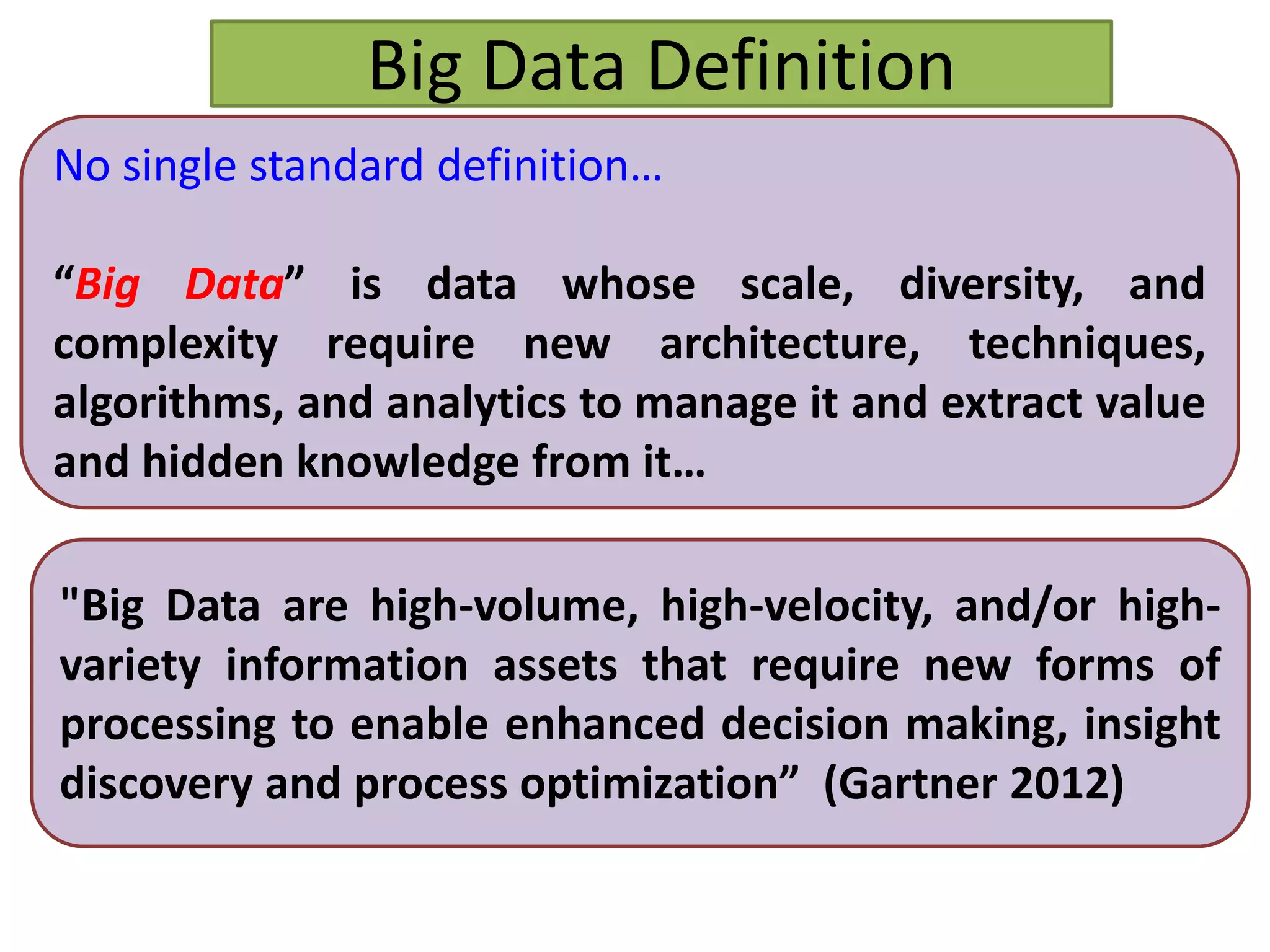

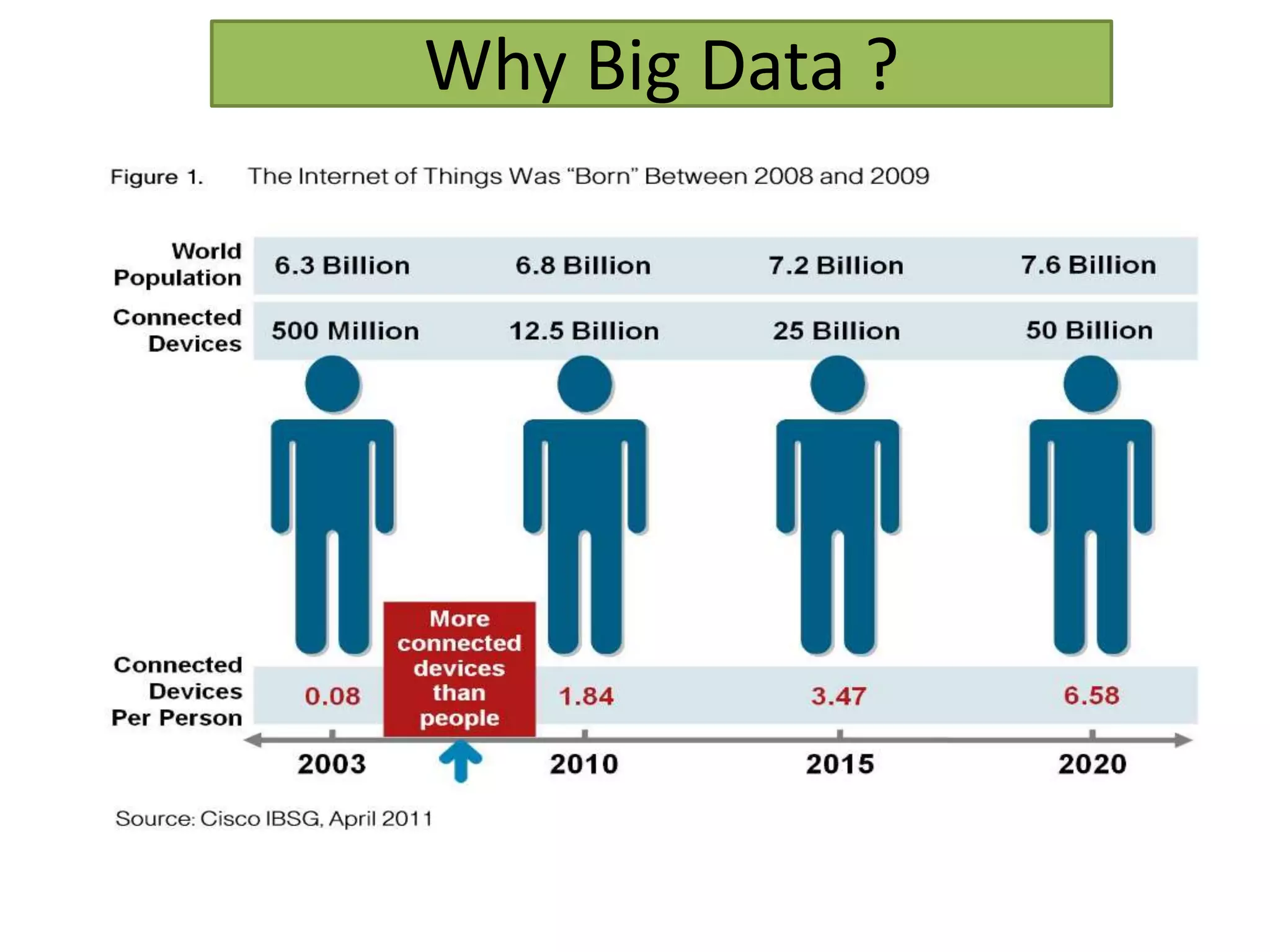

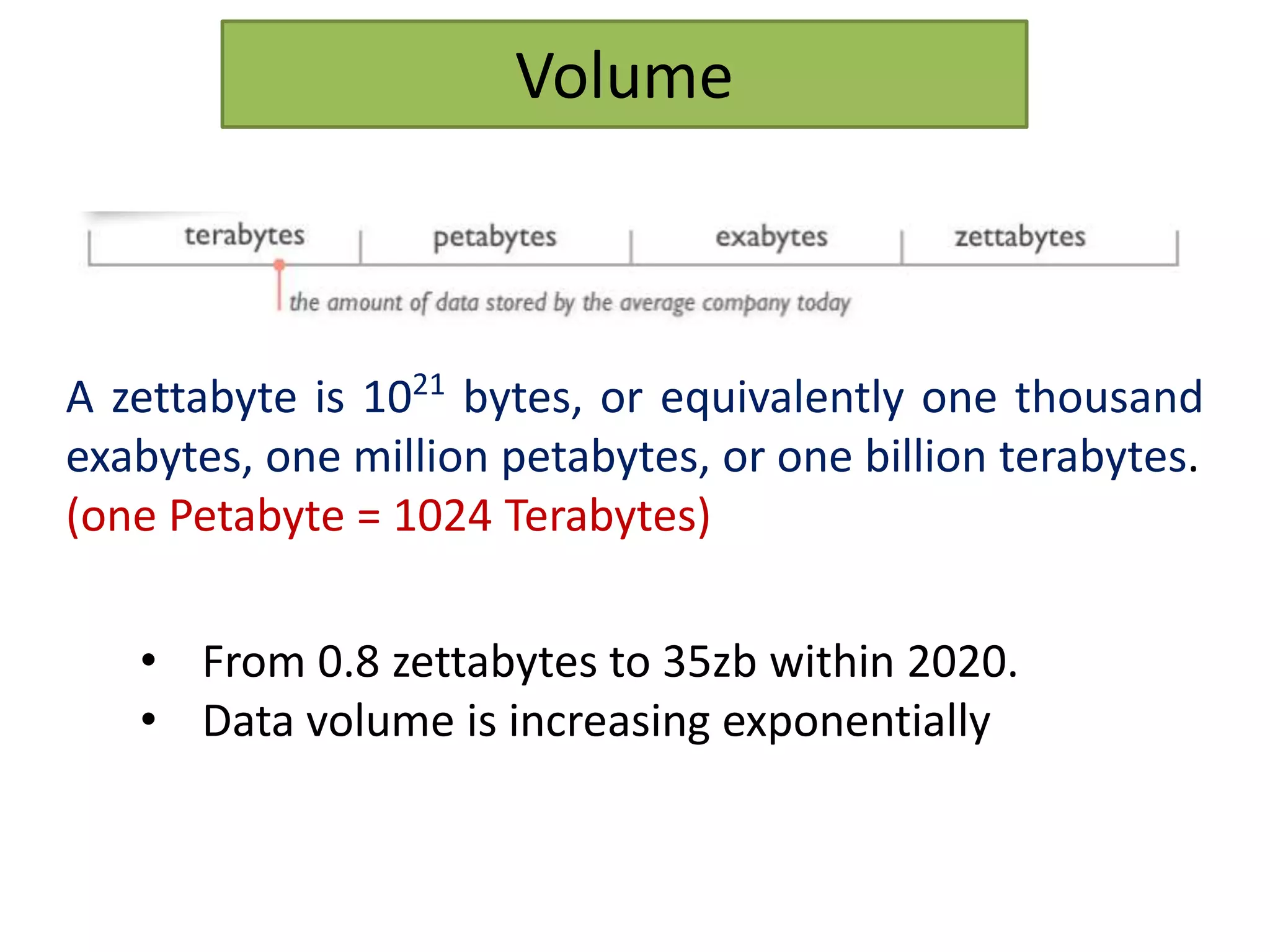

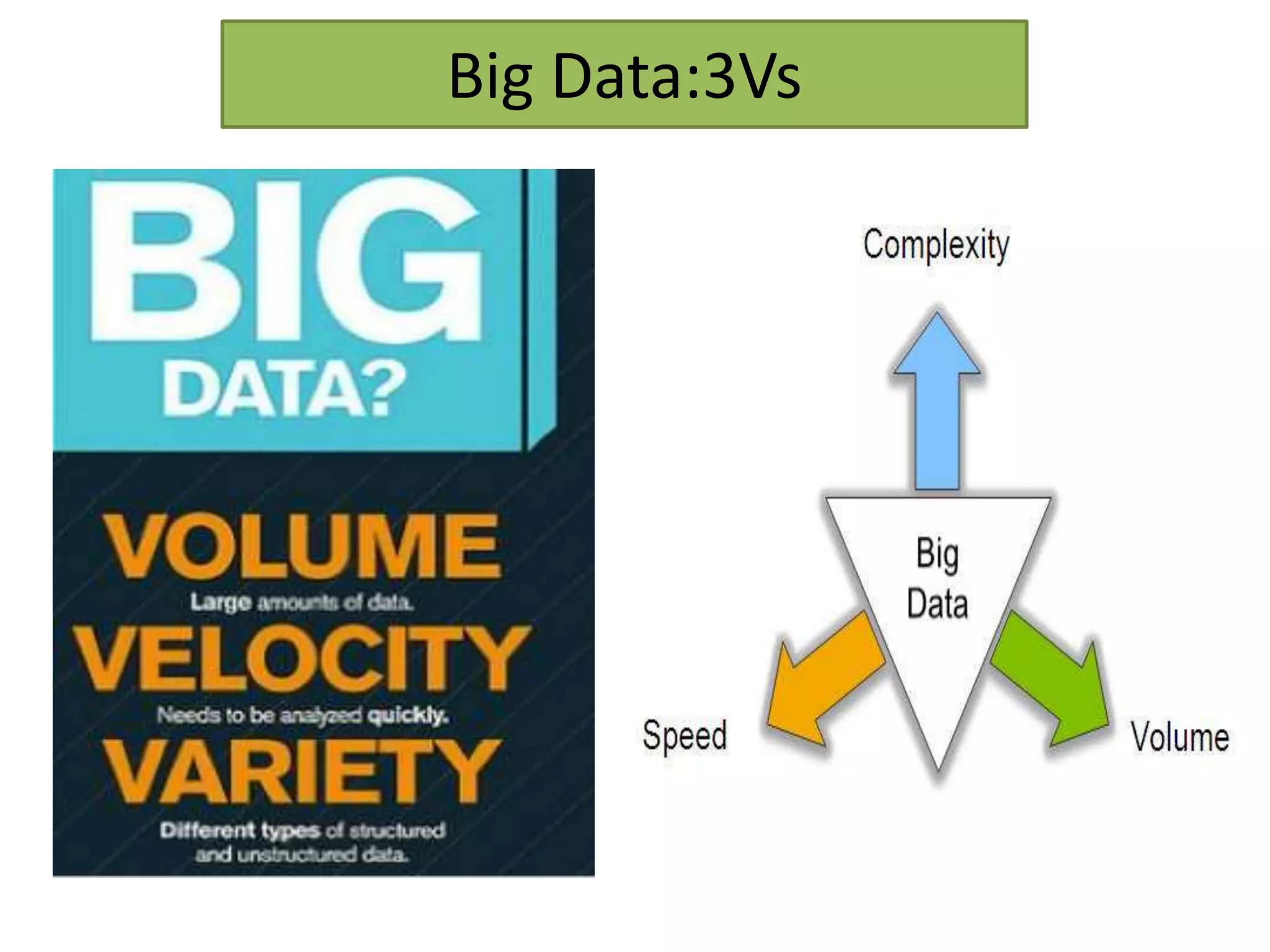

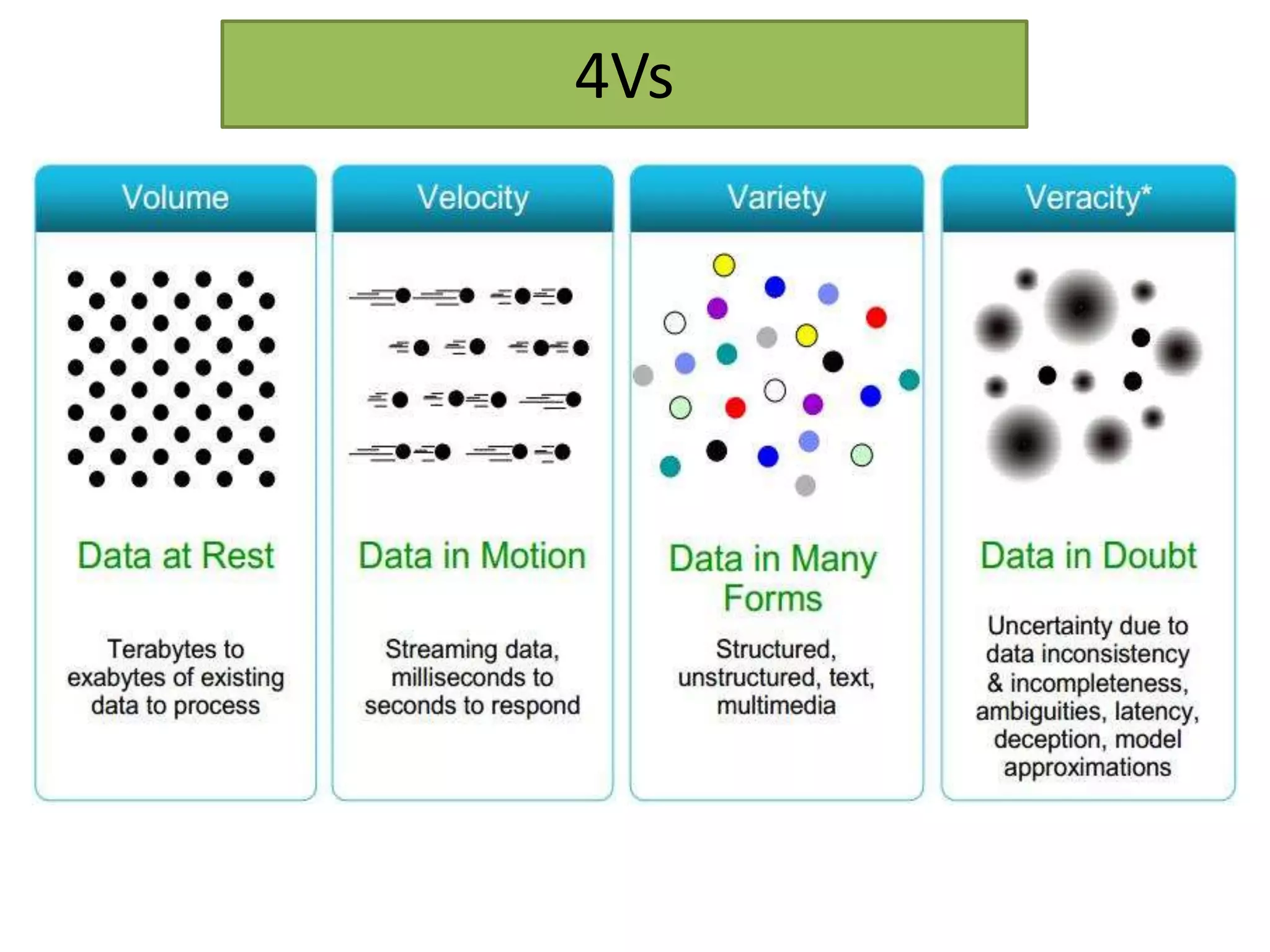

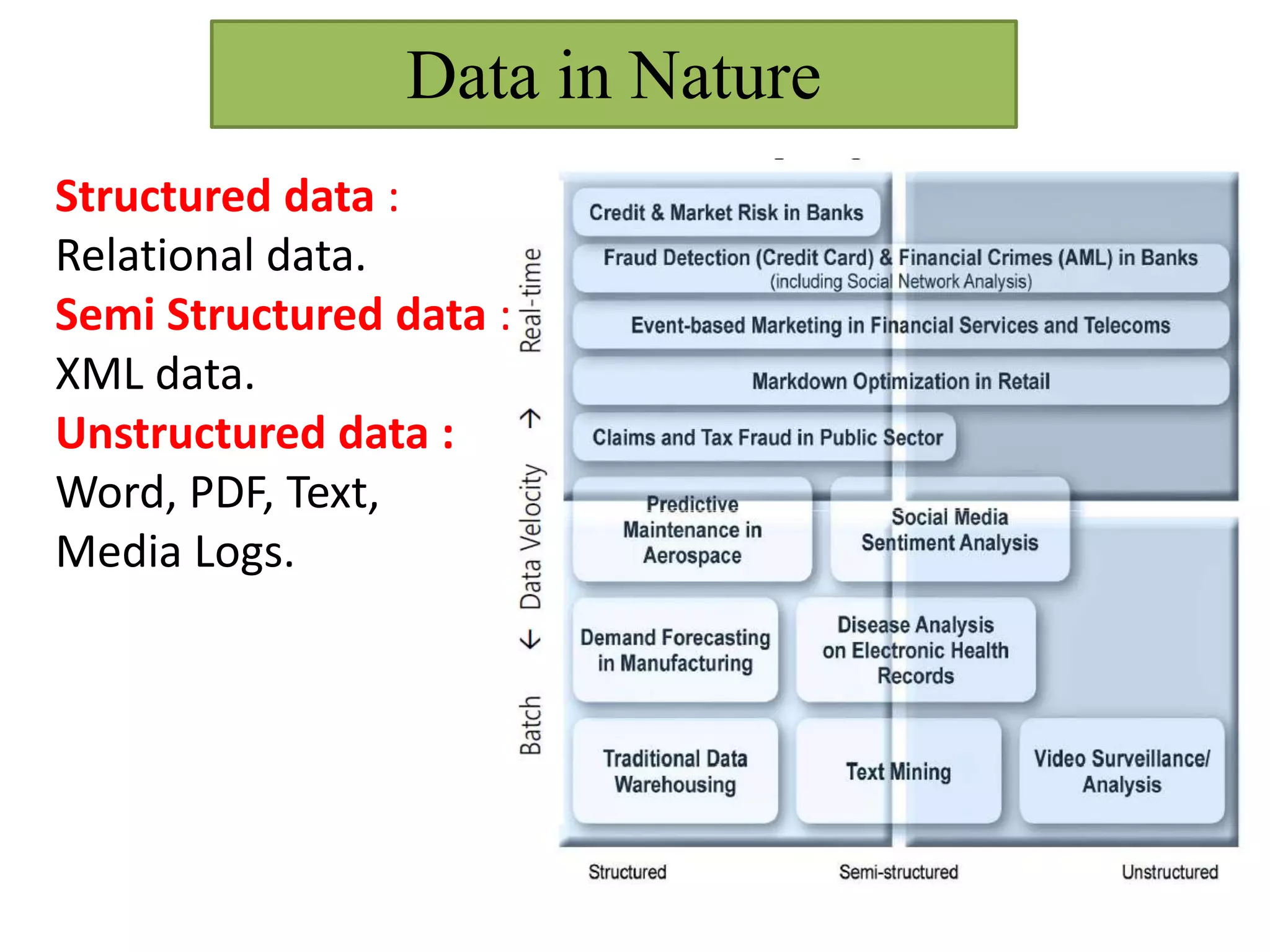

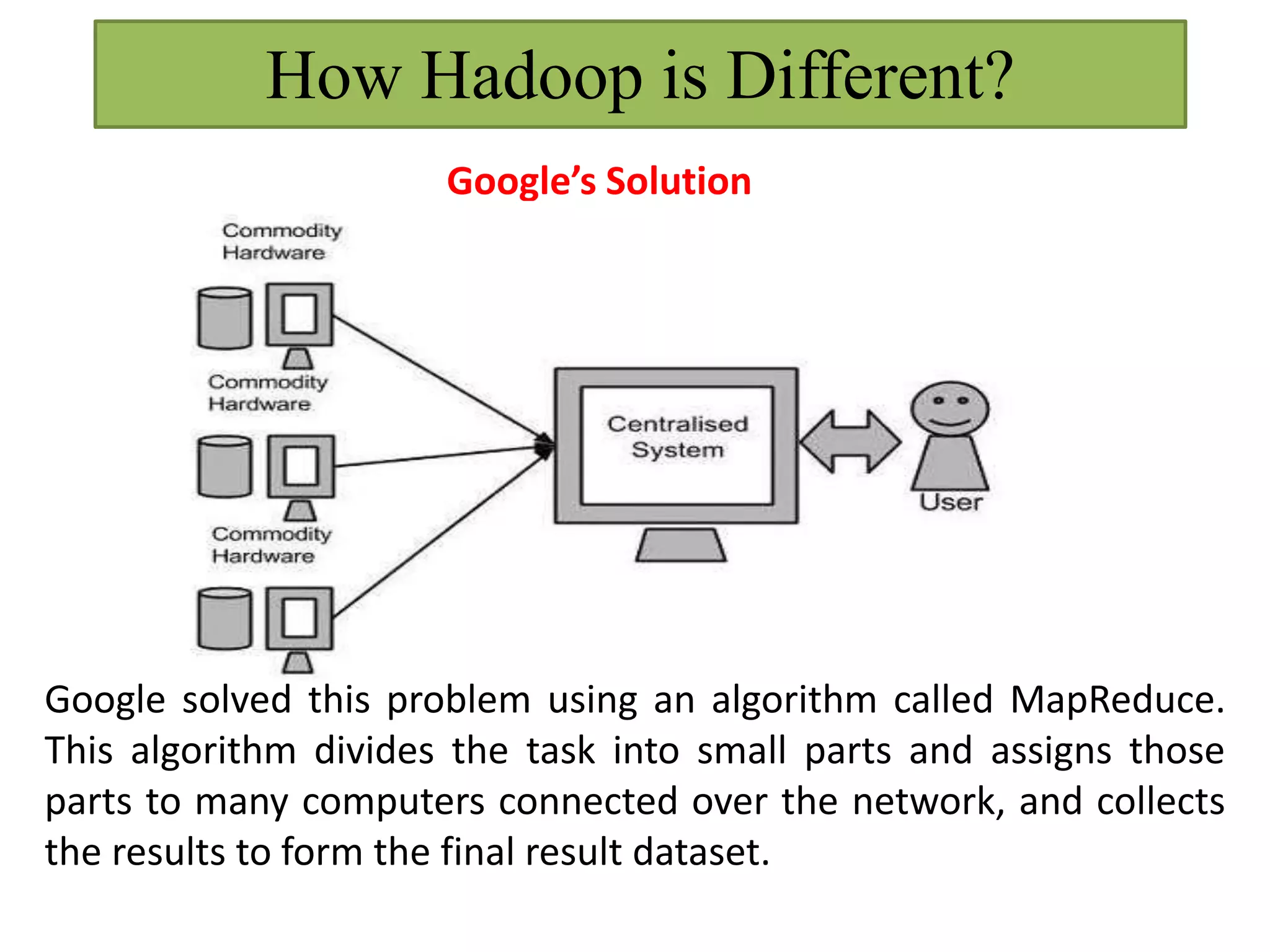

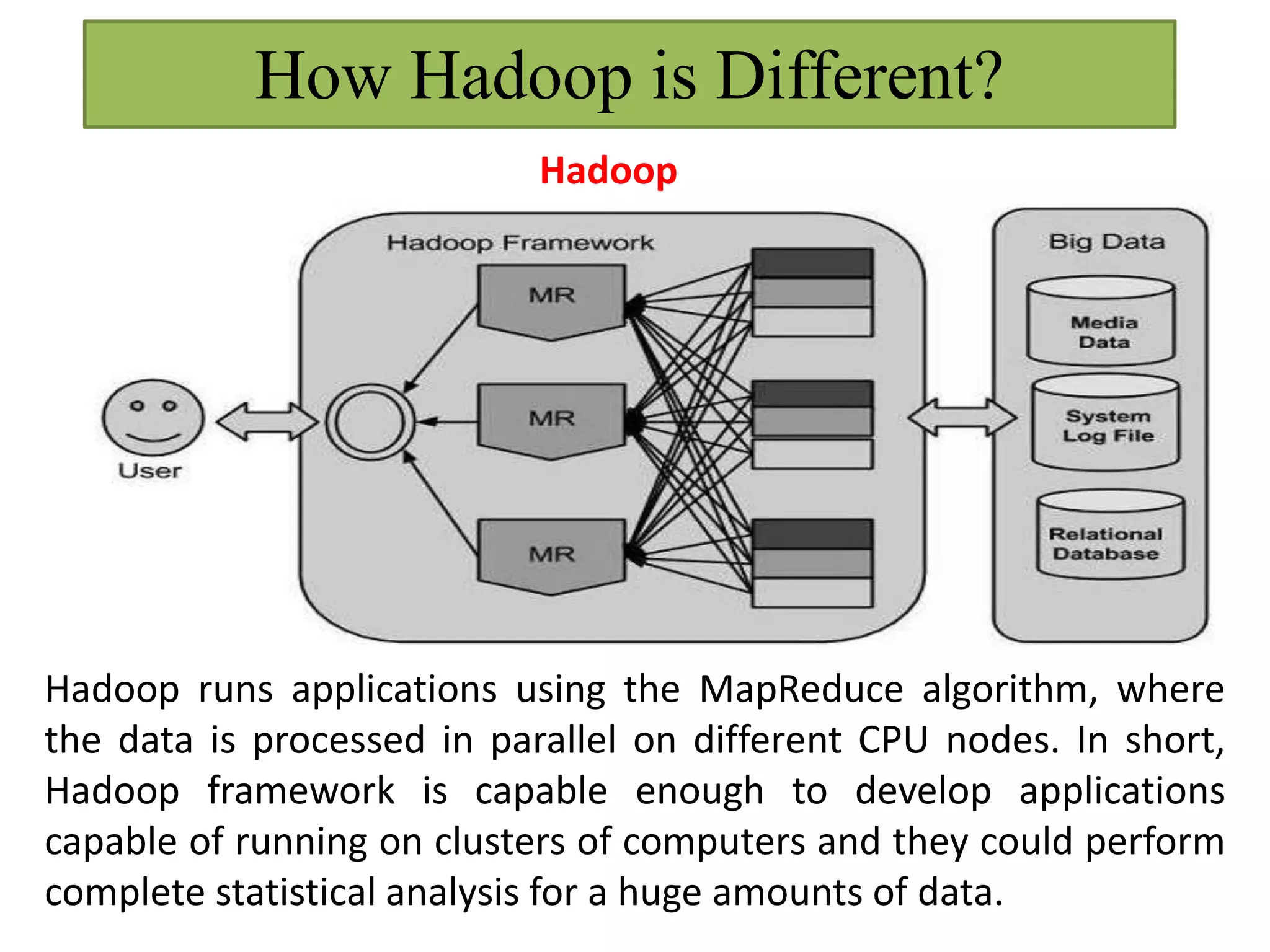

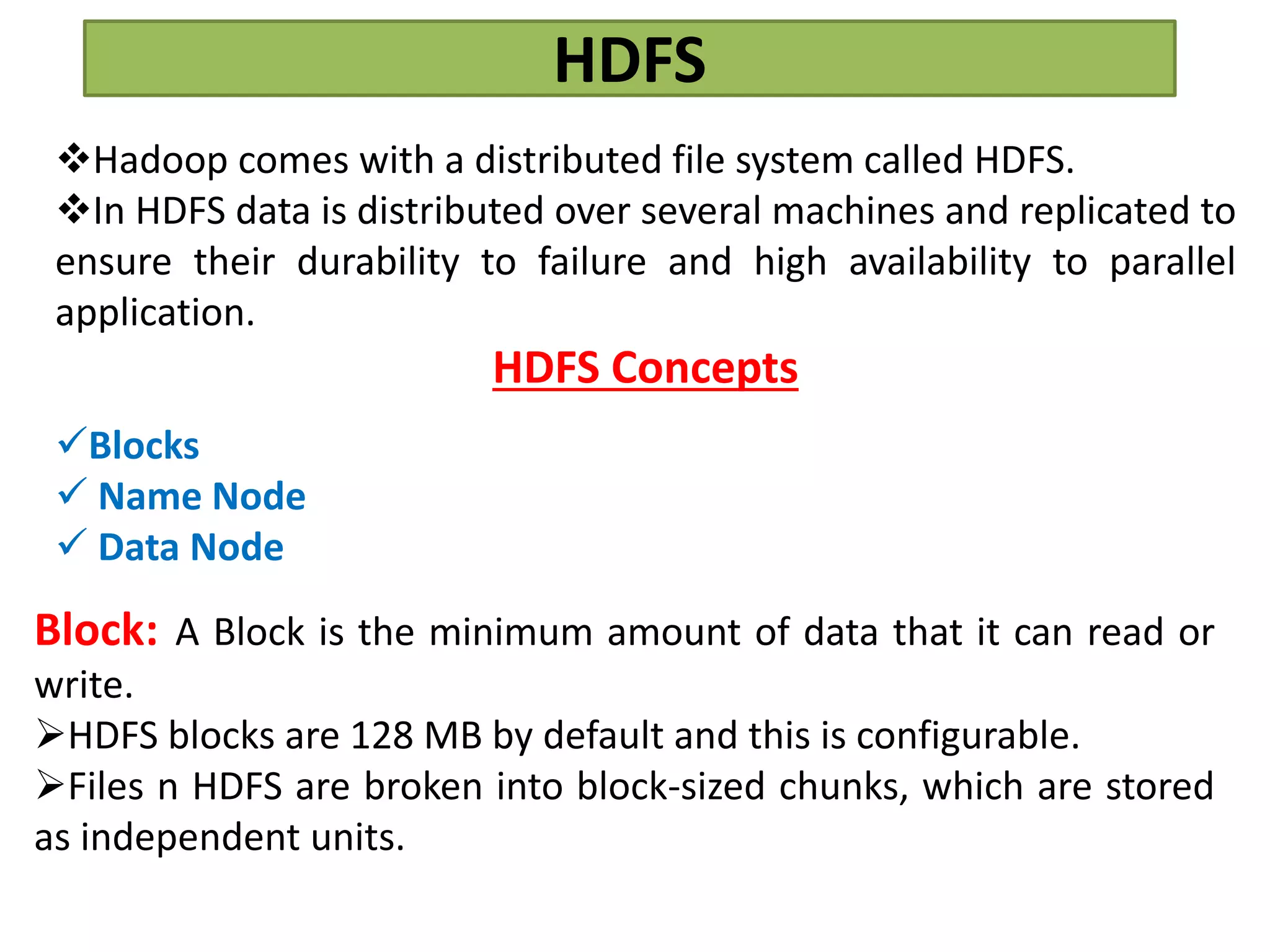

This document provides an overview of big data and Hadoop. It defines big data as high-volume, high-velocity, and high-variety data that requires new techniques to capture value. Hadoop is introduced as an open-source framework for distributed storage and processing of large datasets across clusters of computers. Its key components include HDFS for storage and MapReduce for parallel processing. Hadoop's main benefit is its ability to handle large amounts of structured and unstructured data quickly and cost-effectively at scale.
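To make the MapReduce idea concrete, here is a minimal single-process sketch of the map, shuffle, and reduce steps applied to a word count. It is plain Python rather than Hadoop code, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, chosen only for illustration. On a real cluster the framework would run the map and reduce steps in parallel across machines.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every whitespace-separated word in a line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group all values by key, mimicking the step between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all counts emitted for one word into a single total."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce sequentially in a single process."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())
```

Calling `word_count(["big data", "Big clusters"])` groups the two occurrences of "big" under one key before summing them, which is the same grouping a MapReduce job relies on.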

![Hadoop Installation Modes](https://image.slidesharecdn.com/hadoophdfs-221226134503-021185b2/75/Hadoop-HDFS-ppt-50-2048.jpg)

Standalone (local) mode: After you download Hadoop, it is configured in standalone mode by default and runs as a single Java process.

Pseudo-distributed mode [Labwork]: A distributed simulation on a single machine. Each Hadoop daemon (for HDFS, YARN, MapReduce, and so on) runs as a separate Java process. This mode is useful for development.

Fully distributed mode [Realtime]: A truly distributed setup that runs on a cluster of two or more machines.
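For the pseudo-distributed mode, the switch from the standalone default is usually made by editing two configuration files. The sketch below generates the XML for both, assuming the property values used in the Apache Hadoop single-node setup guide: `fs.defaultFS` pointing at `hdfs://localhost:9000` and `dfs.replication` set to `1`. The host, the port, and the helper name `build_site_xml` are assumptions for illustration, not something the slides specify.

```python
import xml.etree.ElementTree as ET


def build_site_xml(properties):
    """Render a dict of Hadoop properties as a <configuration> XML string."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# Assumed value: the NameNode listens on localhost, port 9000.
CORE_SITE = {"fs.defaultFS": "hdfs://localhost:9000"}

# One machine means one DataNode, so each block is stored only once.
HDFS_SITE = {"dfs.replication": "1"}

core_site_xml = build_site_xml(CORE_SITE)  # contents for core-site.xml
hdfs_site_xml = build_site_xml(HDFS_SITE)  # contents for hdfs-site.xml
```

A fully distributed cluster would typically replace `localhost` with the NameNode's hostname and raise the replication factor, since blocks can then be copied to several machines.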