Embed presentation

Downloaded 10 times

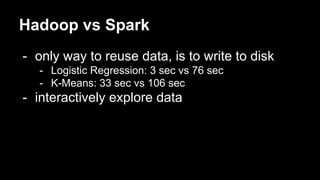

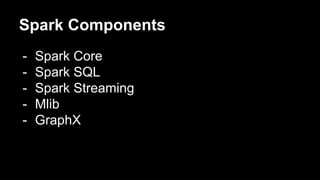

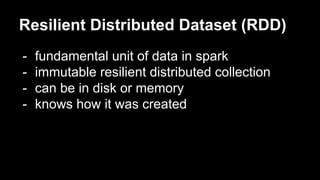

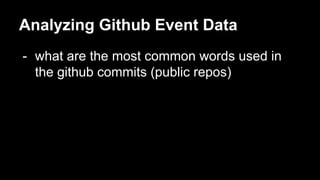

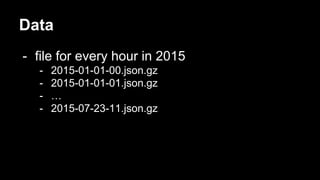

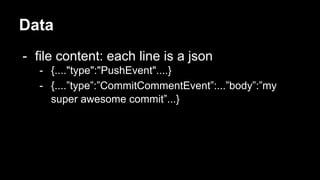

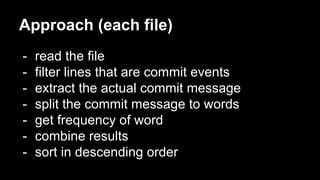

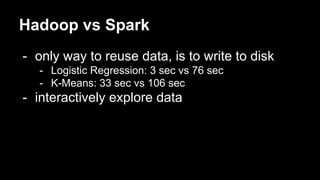

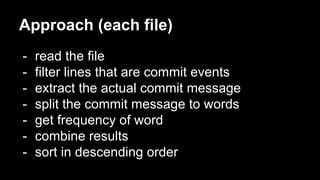

The document discusses the Apache Spark framework, its development history from Hadoop and its role in handling rapidly growing data through parallel processing. It highlights key components of Spark such as Spark Core and Spark SQL, and provides insights into a specific analysis of GitHub event data to identify common words used in commits. Additionally, it offers resources for further learning about Spark and its applications in big data and machine learning.