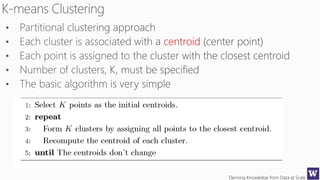

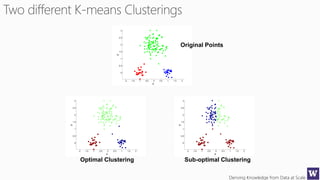

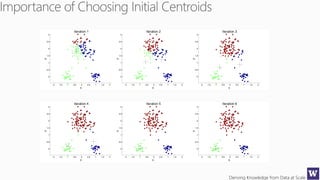

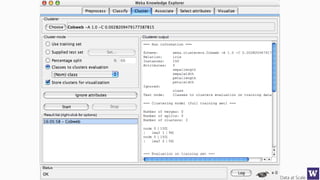

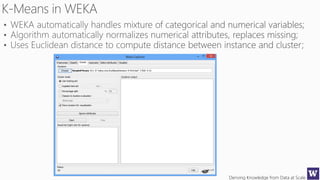

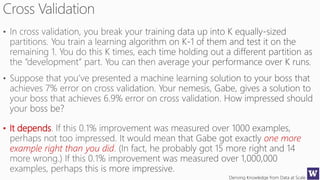

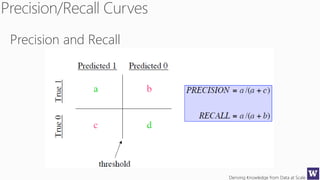

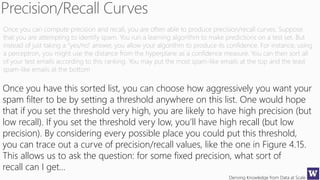

This document covers clustering and nearest-neighbor algorithms for deriving knowledge from data at scale. It gives an overview of clustering techniques such as k-means and describes how they are used in applications such as recommendation systems. It also examines class imbalance, a challenge that can arise when these techniques are applied to large, real-world datasets, and evaluates different methods for addressing it. Finally, it covers performance metrics such as precision, recall, and lift, which can be used to evaluate models on large datasets.
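
To make the evaluation metrics named above concrete, here is a minimal sketch of how precision, recall, and lift might be computed for a binary classifier. The function name `precision_recall_lift` and the toy labels are illustrative assumptions, not taken from the slides. Lift is taken here as precision divided by the overall positive rate, which is one common definition among several.

```python
def precision_recall_lift(y_true, y_pred):
    """Return (precision, recall, lift) for binary labels, where 1 = positive.

    Hypothetical helper for illustration. Lift is computed as
    precision / base rate of positives (an assumed definition).
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0

    # Base rate: fraction of all examples that are actually positive.
    base_rate = sum(y_true) / len(y_true) if y_true else 0.0
    lift = precision / base_rate if base_rate else 0.0
    return precision, recall, lift


# Toy imbalanced data (made up): 2 positives out of 10 examples.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]

precision, recall, lift = precision_recall_lift(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f} lift={lift:.2f}")
```

On imbalanced data such as this, lift shows how much better the model's positive predictions are than selecting examples at random, which accuracy alone would hide.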