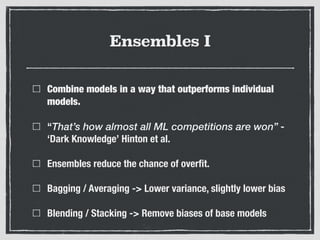

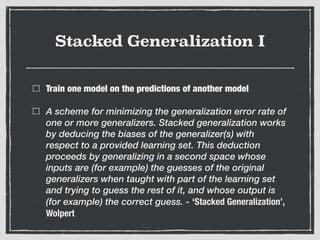

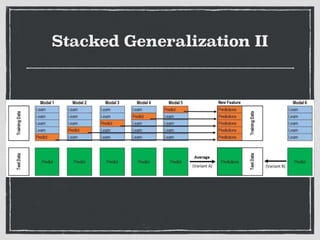

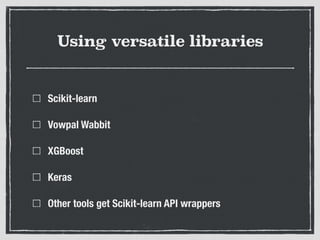

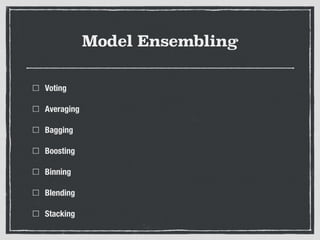

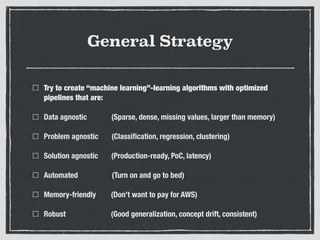

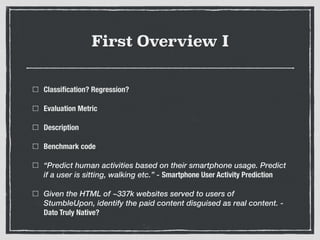

Winning Kaggle competitions involves getting a good score as fast as possible, using versatile machine learning libraries such as scikit-learn, XGBoost, and Keras. It also involves model ensembling techniques such as voting, averaging, bagging, and boosting to improve scores. The document provides tips on feature engineering, algorithm selection, and stacked generalization (stacking) for building strong ensemble models for competitions.
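As a minimal sketch of the two simplest ensembling techniques named above, the snippet below combines class labels by majority vote and combines predicted probabilities by (optionally weighted) averaging. The function names and the toy predictions are illustrative assumptions, not taken from the slides.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine class labels from several models by majority vote, per sample."""
    combined = []
    # zip(*...) regroups the lists so each tuple holds all models' labels for one sample.
    for sample_preds in zip(*predictions_per_model):
        combined.append(Counter(sample_preds).most_common(1)[0][0])
    return combined


def average_probabilities(probabilities_per_model, weights=None):
    """Combine predicted probabilities by a (weighted) arithmetic mean, per sample."""
    if weights is None:
        weights = [1.0] * len(probabilities_per_model)
    total_weight = sum(weights)
    return [
        sum(w * p for w, p in zip(weights, sample_probs)) / total_weight
        for sample_probs in zip(*probabilities_per_model)
    ]


# Hypothetical predictions from three models on five samples.
labels_a = [1, 0, 1, 1, 0]
labels_b = [1, 1, 0, 1, 0]
labels_c = [0, 0, 1, 1, 0]
voted = majority_vote([labels_a, labels_b, labels_c])

# Hypothetical positive-class probabilities from the same three models.
probs = [[0.9, 0.4, 0.6], [0.7, 0.2, 0.3], [0.8, 0.6, 0.9]]
averaged = average_probabilities(probs)
print(voted, averaged)
```

Voting needs only hard labels, while averaging uses the models' confidence and lets stronger models carry more weight through `weights`.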

![Random Forests I: A Random Forest is an ensemble of decision trees. "Random forests are a combination of tree predictors such that each tree depends on the values of a random vector sampled independently and with the same distribution for all trees in the forest. […] More robust to noise" - "Random Forest", Breiman](https://image.slidesharecdn.com/kaggle-presentation-160918221740/85/Kaggle-presentation-19-320.jpg)
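The slide above can be illustrated with scikit-learn's `RandomForestClassifier`. This is a sketch, assuming the Iris dataset and these hyperparameters purely for demonstration. In this implementation, the "random vector" per tree corresponds to a bootstrap sample of the rows plus a random subset of features considered at each split.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Small built-in dataset, chosen only to keep the example self-contained.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

forest = RandomForestClassifier(
    n_estimators=100,      # number of decision trees in the ensemble
    max_features="sqrt",   # random subset of features tried at each split
    bootstrap=True,        # each tree is fit on a bootstrap sample of the rows
    random_state=0,
)
forest.fit(X_train, y_train)

accuracy = forest.score(X_test, y_test)
print(f"{len(forest.estimators_)} trees, test accuracy {accuracy:.2f}")
```

The fitted trees are available in `forest.estimators_`, and the forest's prediction aggregates their individual outputs.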

![GBM I: A GBM trains weak models on samples that previous models got wrong. "A method is described for converting a weak learning algorithm [the learner can produce an hypothesis that performs only slightly better than random guessing] into one that achieves arbitrarily high accuracy." - "The Strength of Weak Learnability", Schapire](https://image.slidesharecdn.com/kaggle-presentation-160918221740/85/Kaggle-presentation-21-320.jpg)
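One way to make the idea on the slide above concrete is gradient boosting for squared-error regression, where "what previous models got wrong" is the residual. The sketch below assumes a single numeric feature and uses depth-one trees (stumps) as the weak learner. All names and the toy data are illustrative, and it is not how XGBoost or any particular library implements boosting.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split that minimizes squared error on the residuals."""
    best = None
    # Skip the largest value so the right-hand side of the split is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Fit stumps sequentially, each one to the residuals left by the ensemble so far."""
    base = sum(ys) / len(ys)
    predictions = [base] * len(ys)
    stumps = []
    losses = [sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)]
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump_predict(stump, x) for x, p in zip(xs, predictions)]
        losses.append(sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys))
    return base, stumps, losses


# Toy data: y = x^2 on ten points.
xs = list(range(10))
ys = [x * x for x in xs]
base, stumps, losses = gradient_boost(xs, ys)
print(f"MSE before boosting: {losses[0]:.1f}, after: {losses[-1]:.3f}")
```

Each stump alone is a weak model, but the training error shrinks as more of them are added, each correcting the remaining residual.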

![Perceptron I: Update weights when wrong prediction, else do nothing. "The embryo of an electronic computer that [the Navy] expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence." - ‘New York Times’, Rosenblatt](https://image.slidesharecdn.com/kaggle-presentation-160918221740/85/Kaggle-presentation-27-320.jpg)
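The update rule on the slide above (change the weights only on a wrong prediction) can be sketched in a few lines. The example assumes binary 0/1 labels, a step activation, and the logical AND function as a linearly separable toy problem. The function names and the learning rate are illustrative choices.

```python
def predict(weights, bias, x):
    """Step activation: fire (1) if the weighted sum plus bias is positive."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, max_epochs=50):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)
            if error != 0:
                # Wrong prediction: move the decision boundary toward the sample.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
                mistakes += 1
            # Correct prediction: do nothing.
        if mistakes == 0:
            break  # a full pass with no errors means training has converged
    return weights, bias


# Logical AND is linearly separable, so the perceptron can learn it.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])
```

On data that is not linearly separable (XOR, for example) this loop never reaches a mistake-free pass and simply stops at `max_epochs`.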

![Neural Networks I: Inspired by biological systems (connected neurons firing when threshold is reached). "Because of the 'all-or-none' character of nervous activity, neural events and the relations among them can be treated by means of propositional logic. […] for any logical expression satisfying certain conditions, one can find a net behaving in the fashion it describes." - ‘A Logical Calculus of the Ideas Immanent in Nervous Activity’, McCulloch & Pitts](https://image.slidesharecdn.com/kaggle-presentation-160918221740/85/Kaggle-presentation-29-320.jpg)
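To illustrate the claim in the quotation above, that logical expressions can be realized by nets of all-or-none units, here is a sketch of threshold neurons wired as logic gates. It is a simplification: inhibition is modeled with a negative weight rather than the absolute inhibition of the original paper, and the weights and thresholds are hand-picked assumptions.

```python
def mp_neuron(inputs, weights, threshold):
    """All-or-none unit: outputs 1 when the weighted input sum reaches the threshold."""
    activation = sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation >= threshold else 0


def AND(a, b):
    return mp_neuron([a, b], [1, 1], threshold=2)  # both inputs must fire


def OR(a, b):
    return mp_neuron([a, b], [1, 1], threshold=1)  # one firing input is enough


def NOT(a):
    return mp_neuron([a], [-1], threshold=0)  # an active input suppresses the output


def XOR(a, b):
    # A small two-layer net: (a OR b) AND NOT (a AND b).
    return AND(OR(a, b), NOT(AND(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", AND(a, b), "OR:", OR(a, b), "XOR:", XOR(a, b))
```

XOR needs the composition of several units, which is the sense in which a net, not a single neuron, realizes an arbitrary logical expression.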