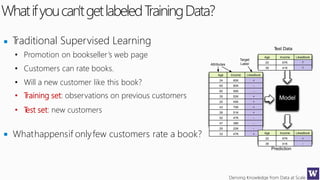

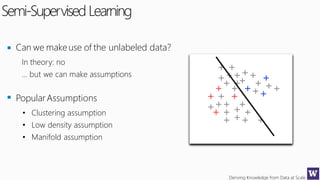

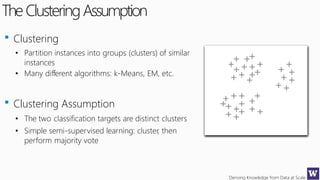

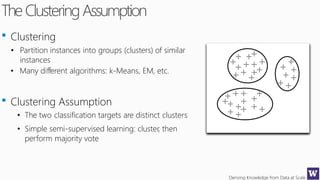

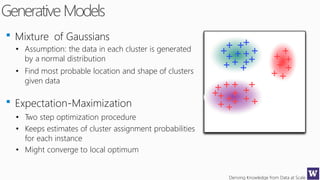

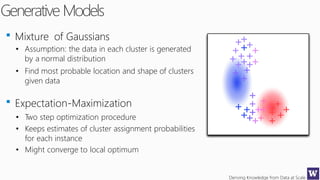

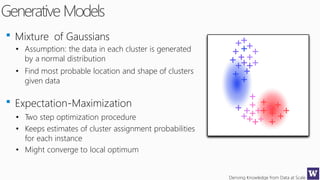

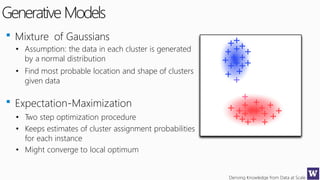

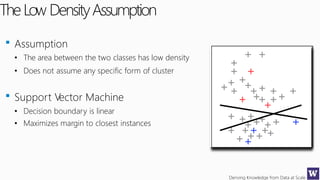

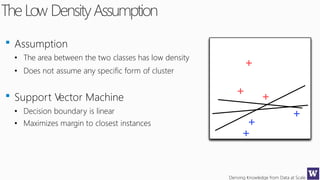

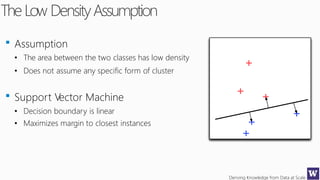

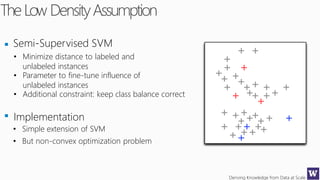

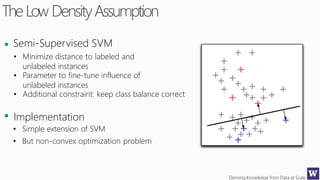

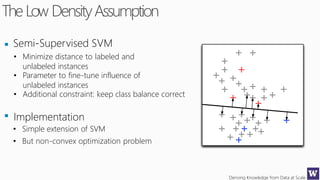

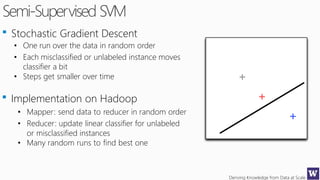

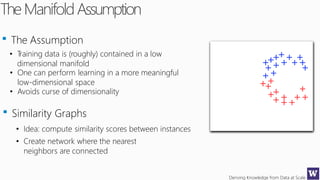

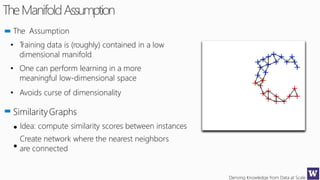

This document discusses techniques for machine learning when labeled training data is limited, including semi-supervised learning approaches that make use of unlabeled data. It describes the assumptions under which algorithms can learn from unlabeled data: the cluster assumption, the low-density assumption, and the manifold assumption. The specific techniques covered are clustering algorithms, mixture models, self-training, and semi-supervised support vector machines.
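To make the idea of self-training concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the method from the slides: the base learner is a nearest-centroid classifier, confidence is measured as the gap between the two closest centroid distances, and the names `self_train`, `min_margin`, and `max_rounds` are hypothetical. The loop fits on the labeled points, pseudo-labels the unlabeled points it is confident about, adds them to the training set, and repeats.

```python
import math

def centroids(points, labels):
    """Compute the mean point of each class (2-D points)."""
    sums, counts = {}, {}
    for (x, y), c in zip(points, labels):
        sx, sy = sums.get(c, (0.0, 0.0))
        sums[c] = (sx + x, sy + y)
        counts[c] = counts.get(c, 0) + 1
    return {c: (sums[c][0] / counts[c], sums[c][1] / counts[c]) for c in sums}

def predict(cents, p):
    """Return (label, margin): the nearest centroid's class and the
    distance gap to the runner-up centroid, used here as confidence."""
    dists = sorted((math.dist(p, m), c) for c, m in cents.items())
    margin = dists[1][0] - dists[0][0] if len(dists) > 1 else float("inf")
    return dists[0][1], margin

def self_train(labeled, labels, unlabeled, min_margin=1.0, max_rounds=10):
    """Self-training loop (assumed design): pseudo-label confident
    unlabeled points, add them to the training set, and refit."""
    points, labs = list(labeled), list(labels)
    pool = list(unlabeled)
    for _ in range(max_rounds):
        cents = centroids(points, labs)
        confident, rest = [], []
        for p in pool:
            c, margin = predict(cents, p)
            (confident if margin >= min_margin else rest).append((p, c))
        if not confident:
            break  # nothing left that the model is sure about
        for p, c in confident:
            points.append(p)
            labs.append(c)
        pool = [p for p, _ in rest]
    return centroids(points, labs), pool

# Toy data: one labeled point per class, five unlabeled points.
labeled = [(0.0, 0.0), (10.0, 0.0)]
labels = ["a", "b"]
unlabeled = [(1.0, 0.0), (2.0, 1.0), (9.0, 0.0), (8.0, 1.0), (5.0, 0.0)]

model, leftover = self_train(labeled, labels, unlabeled)
```

In this toy run the point `(5.0, 0.0)` sits exactly between the two classes, so it never clears the confidence threshold and stays in `leftover`. That reflects the low-density intuition: the decision boundary ends up in the sparse region between the two clusters. With a real classifier the confidence measure would more likely be a predicted probability, and mistaken pseudo-labels can reinforce themselves, so the threshold matters.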