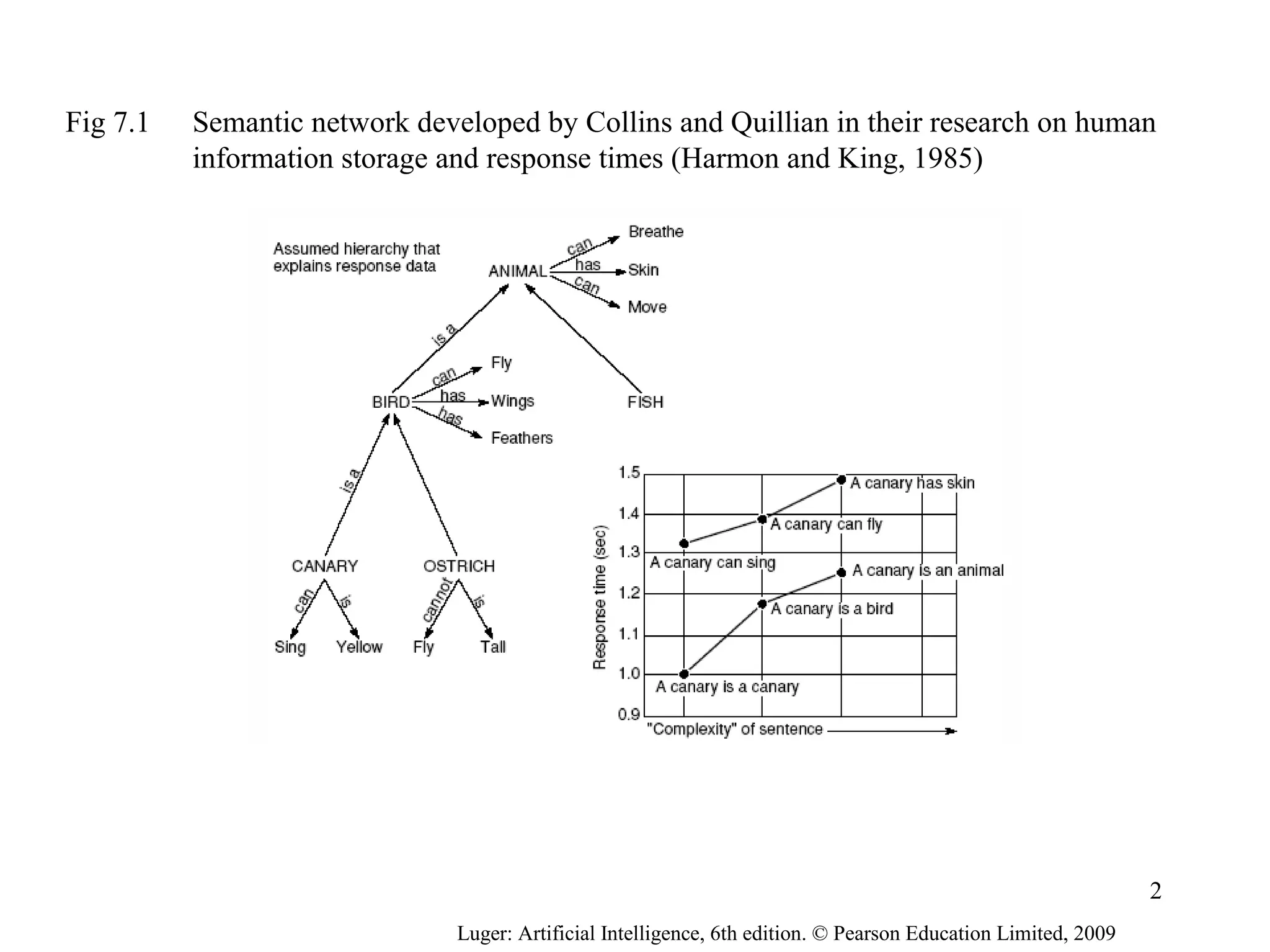

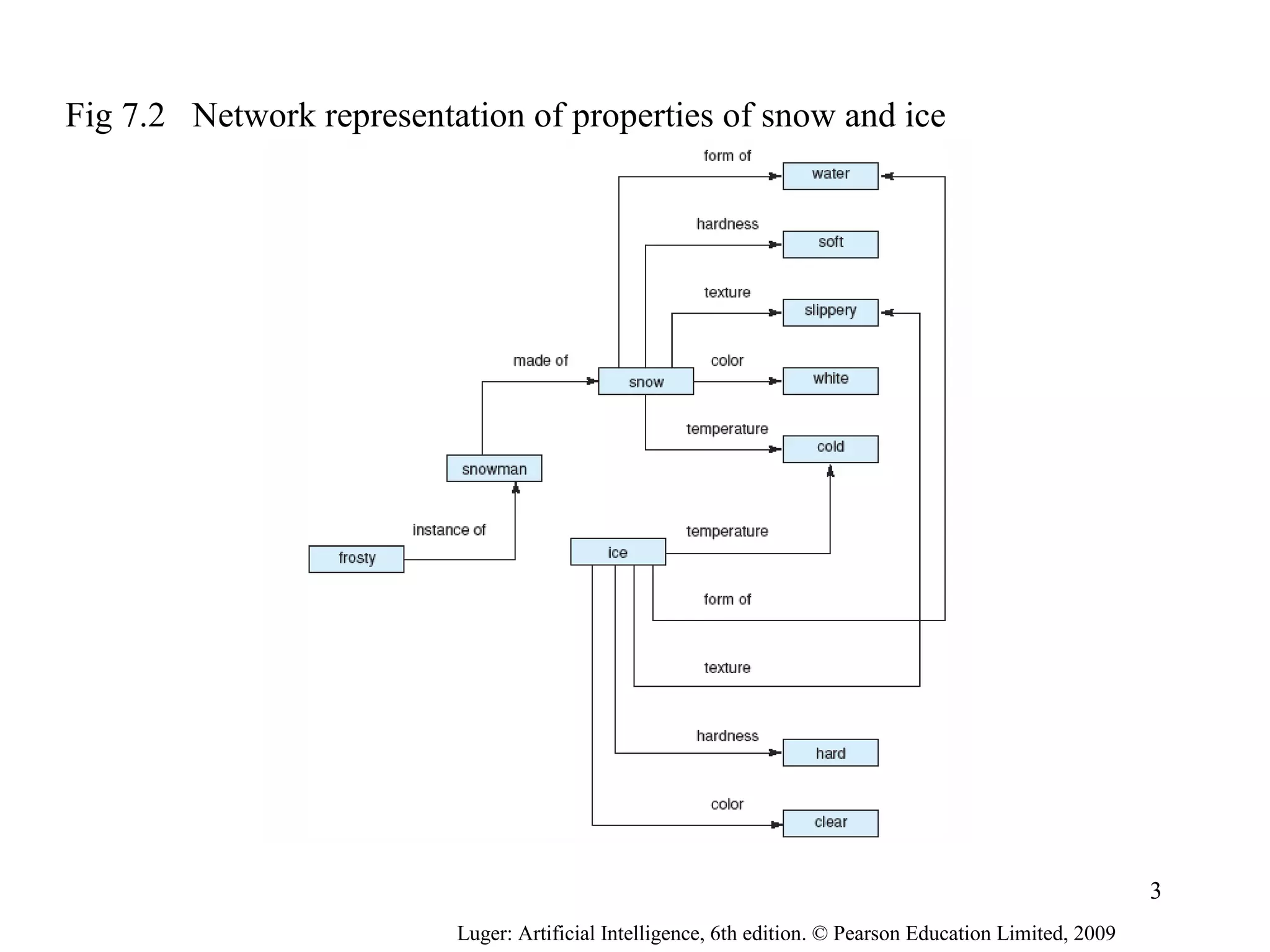

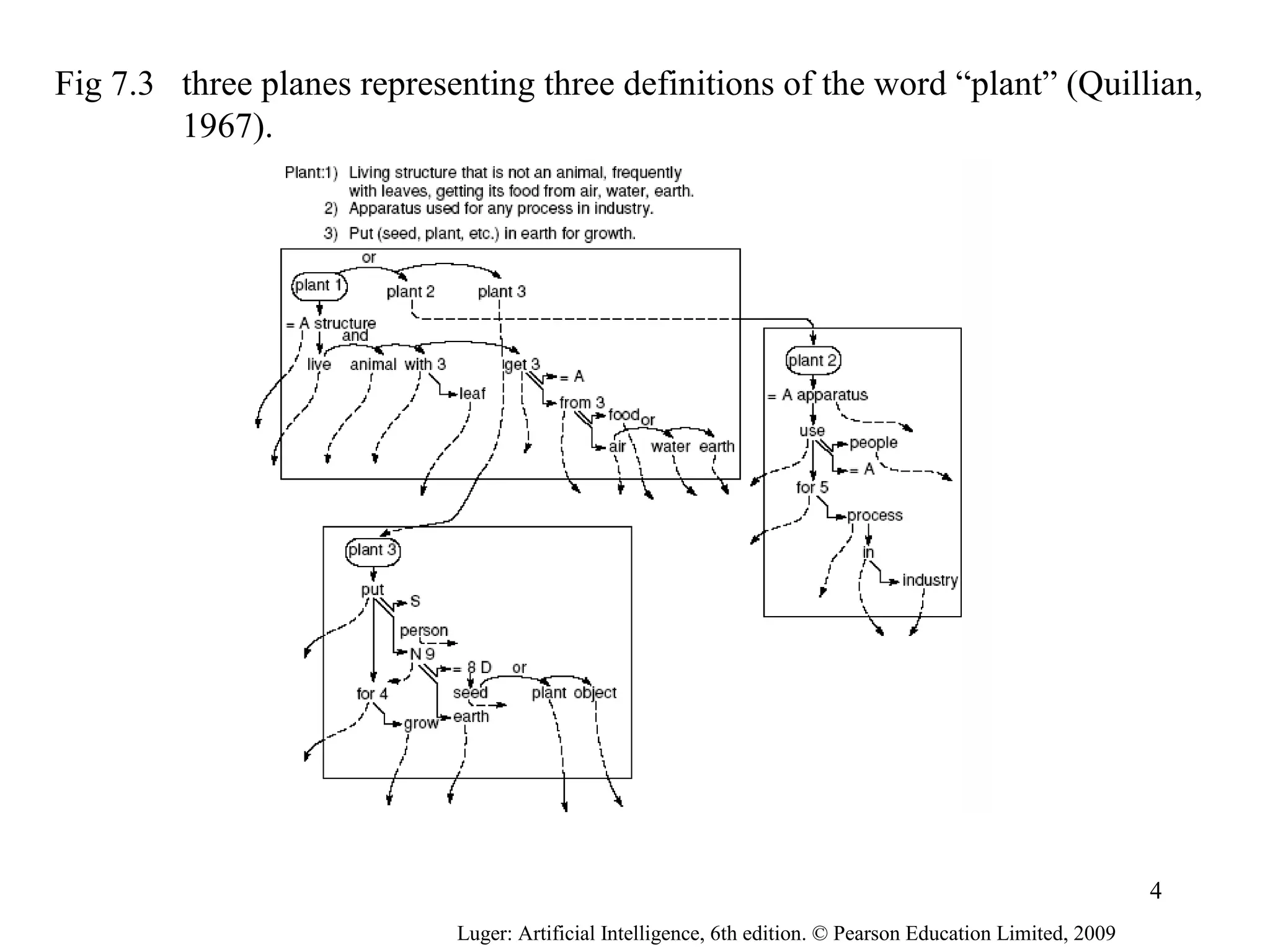

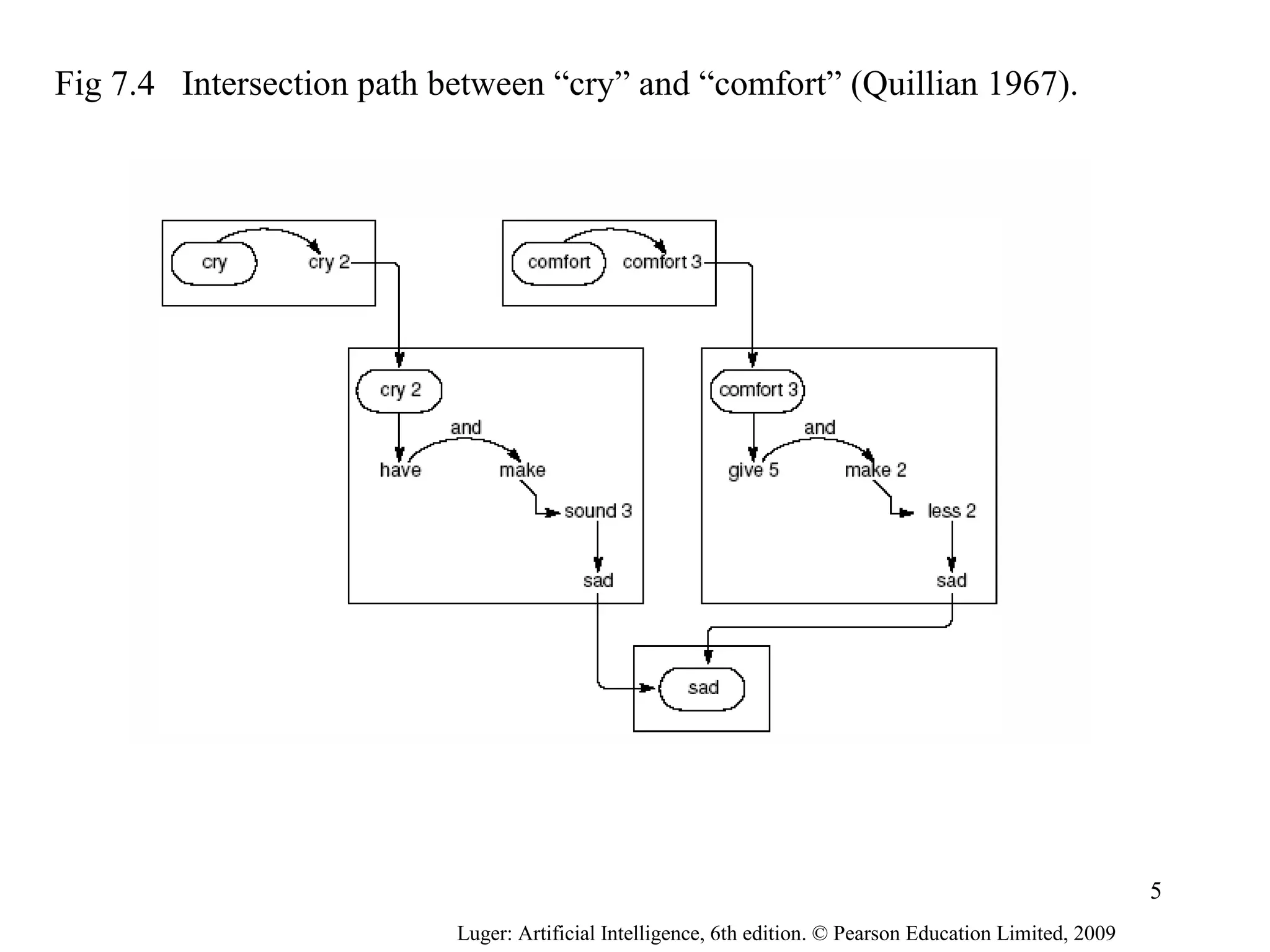

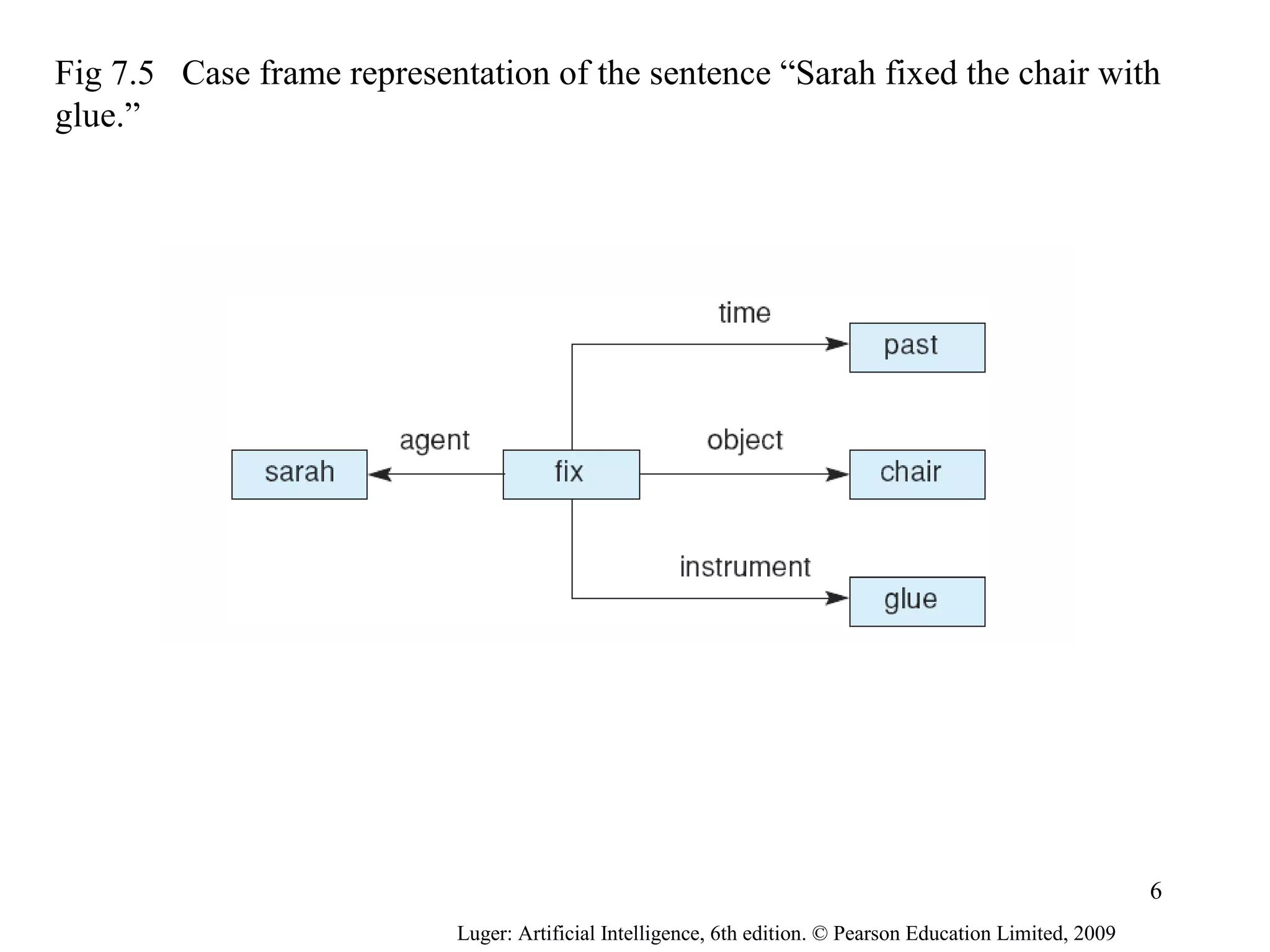

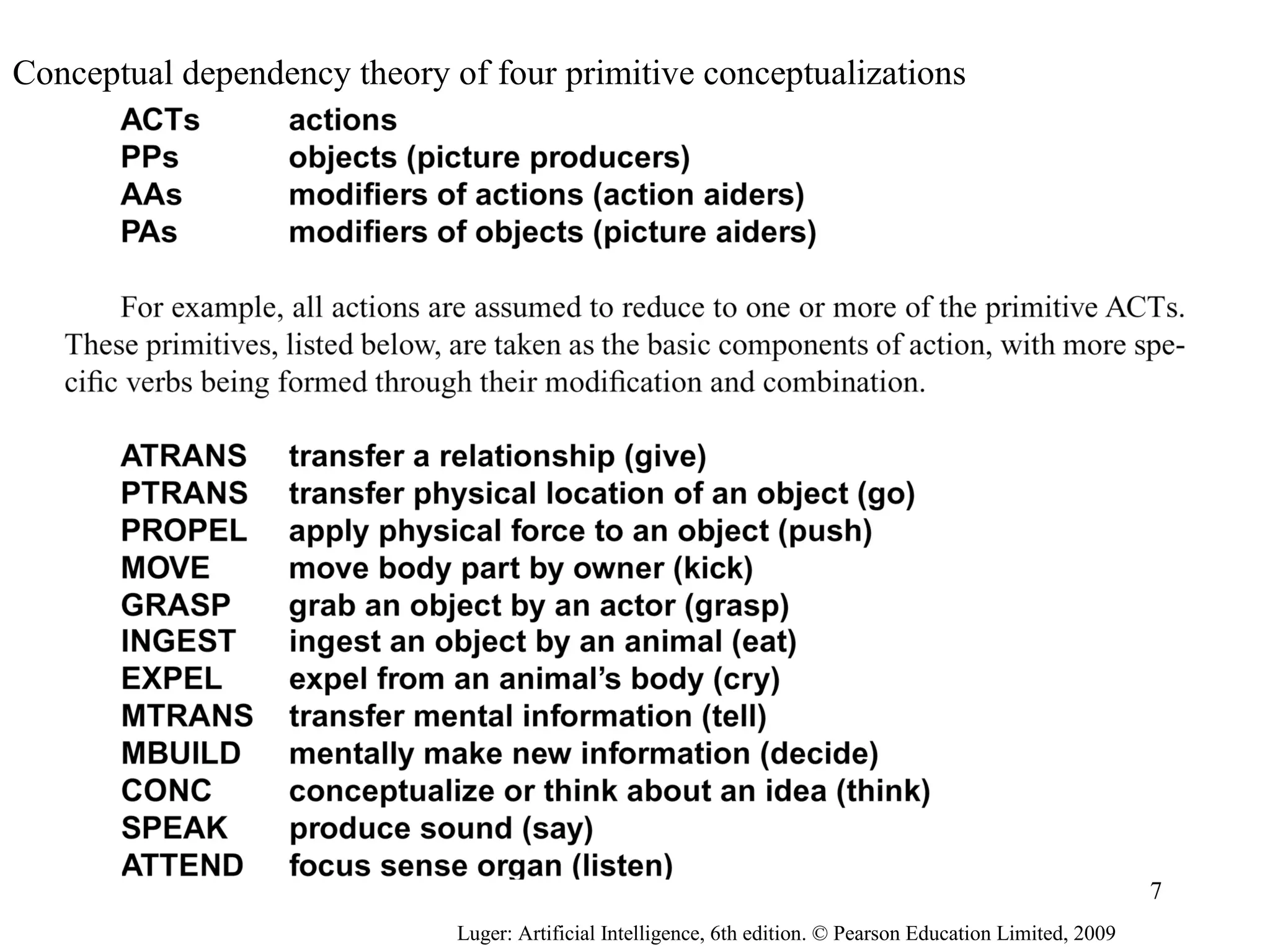

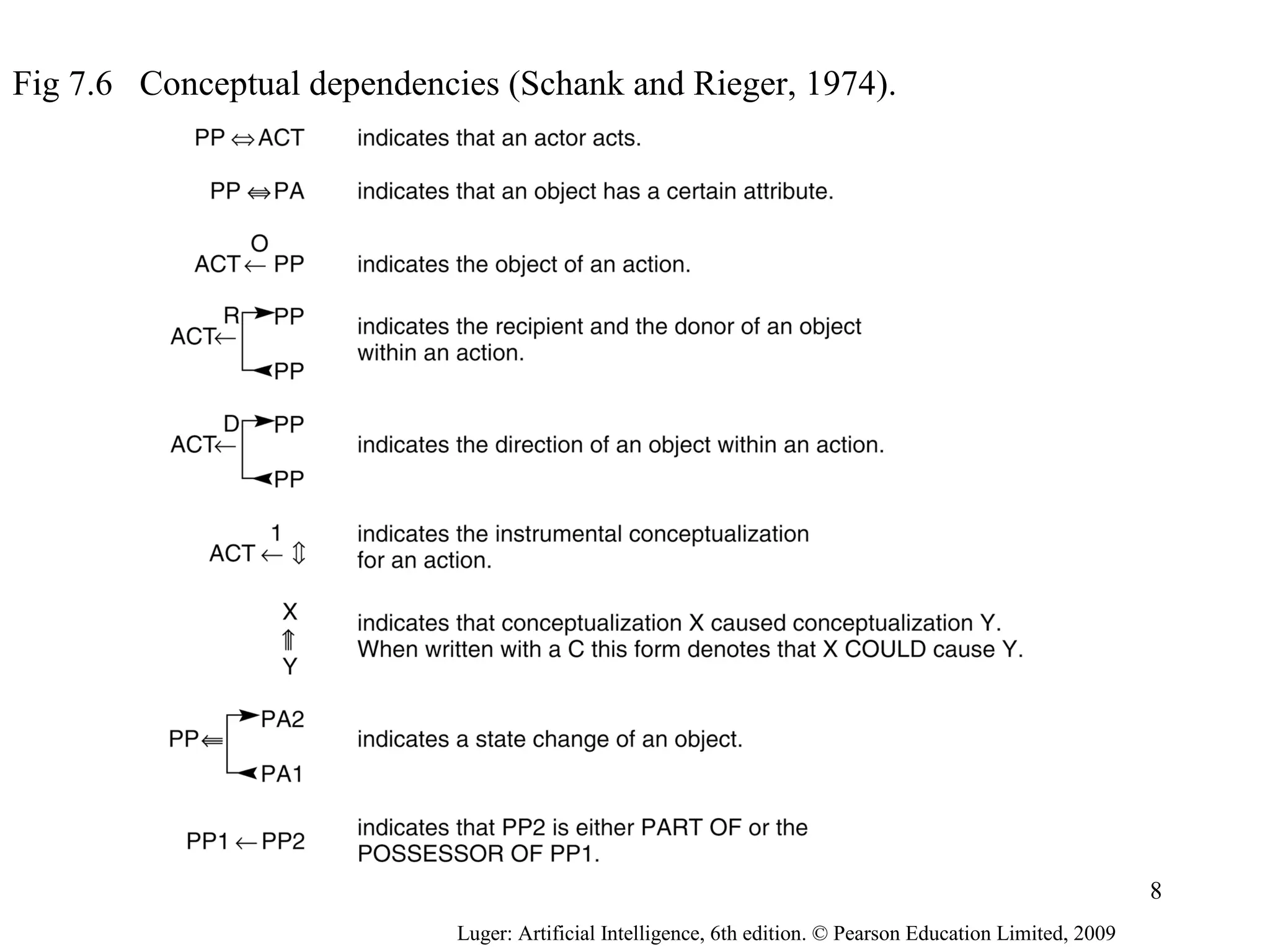

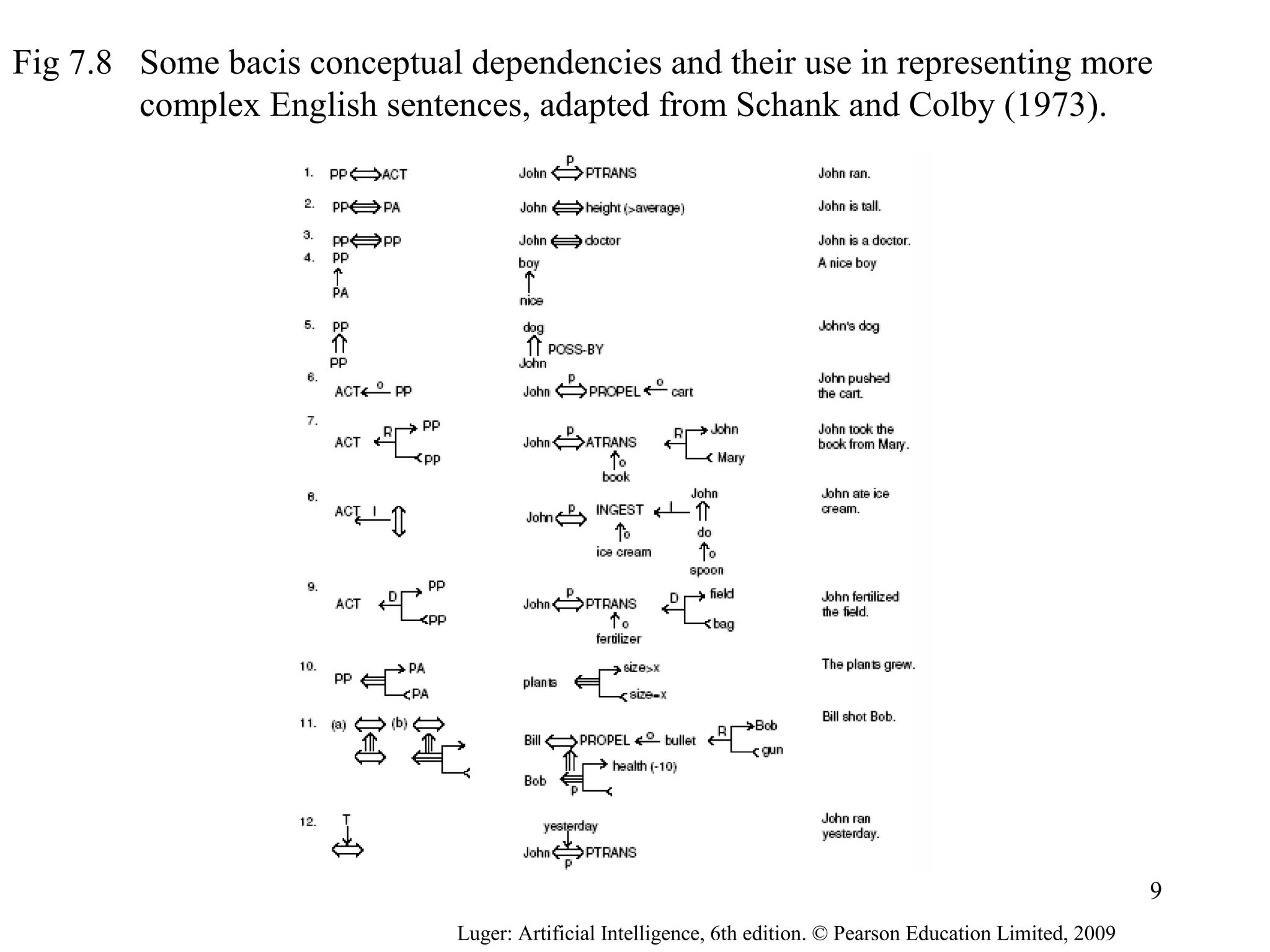

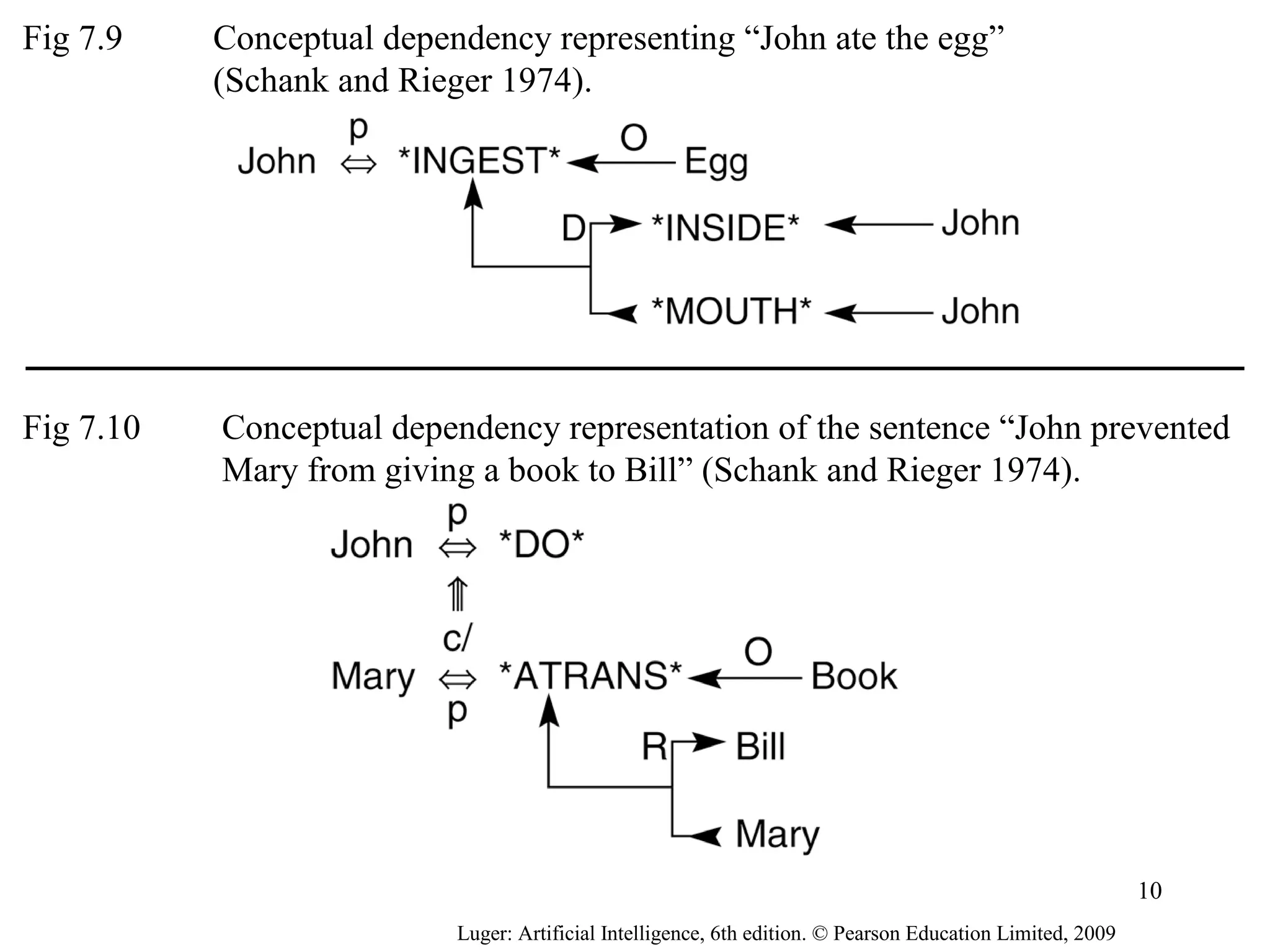

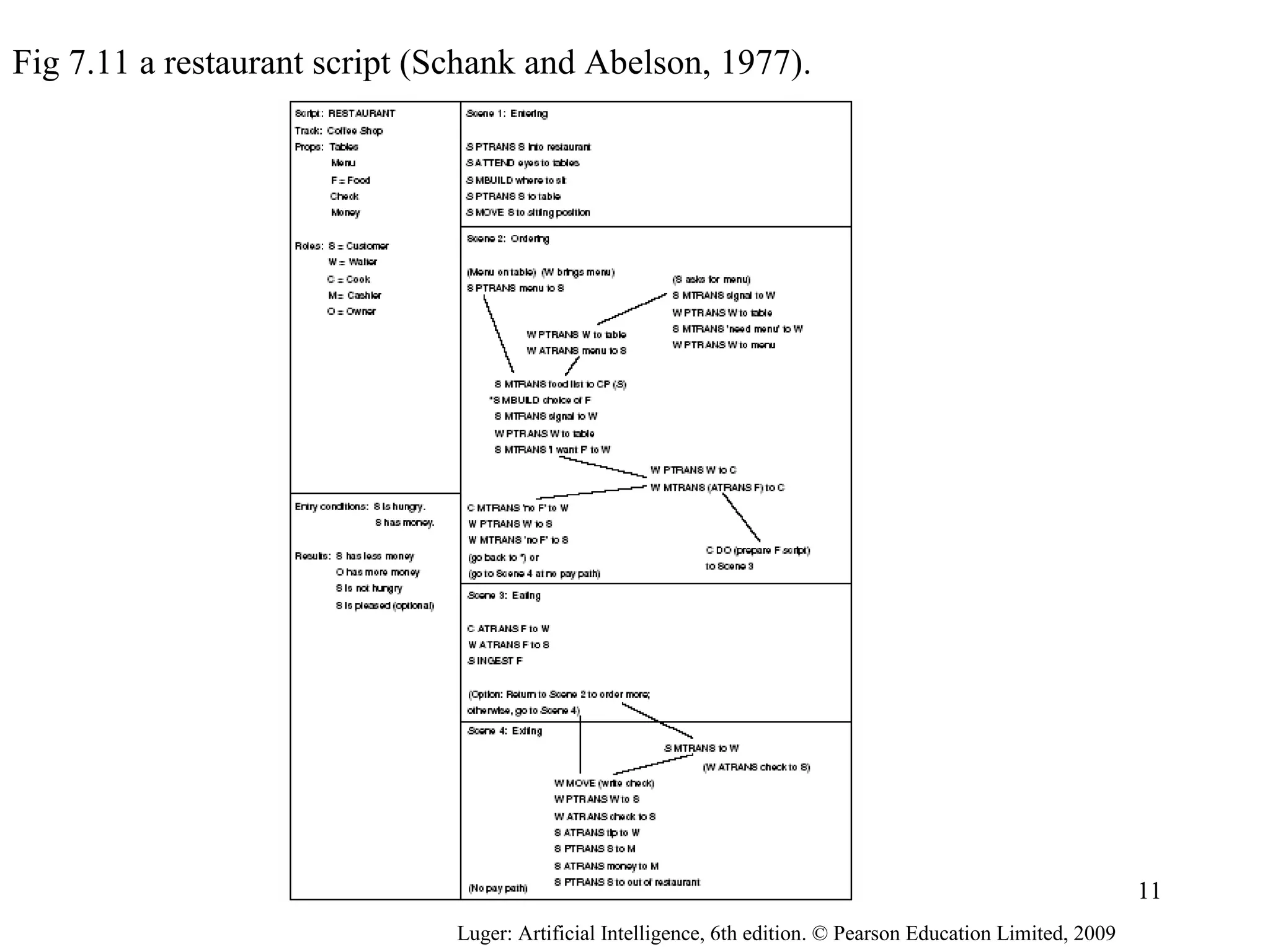

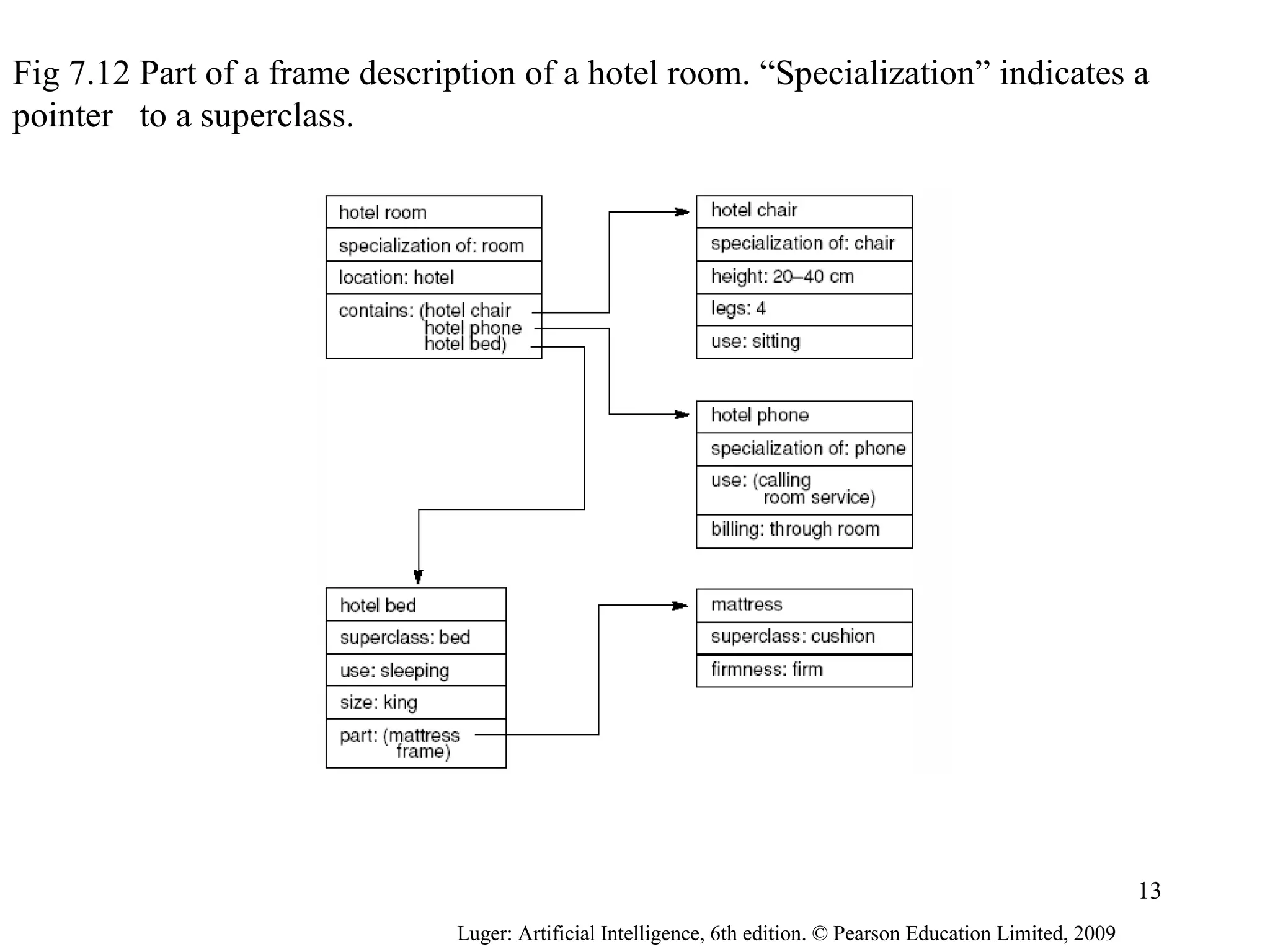

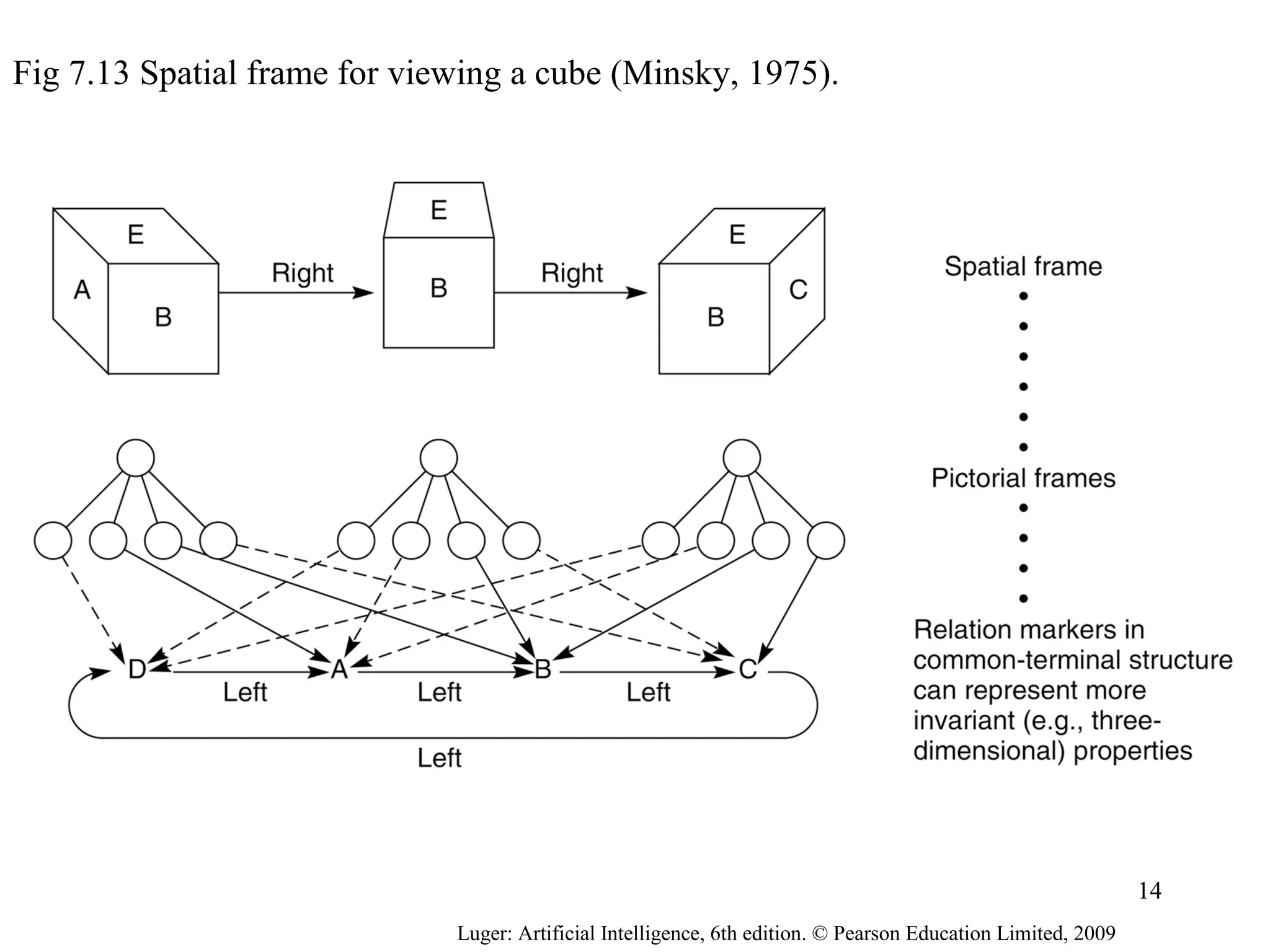

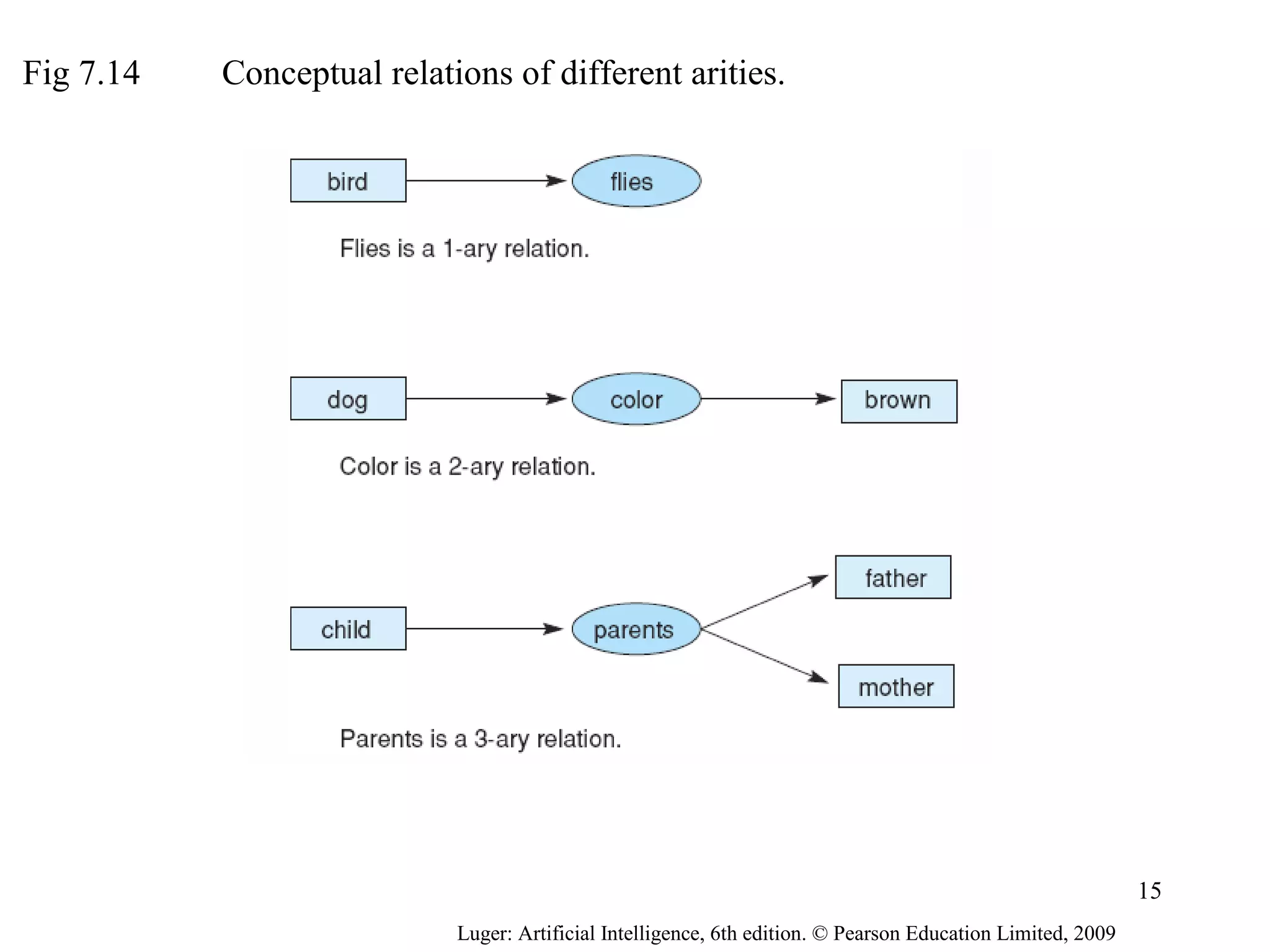

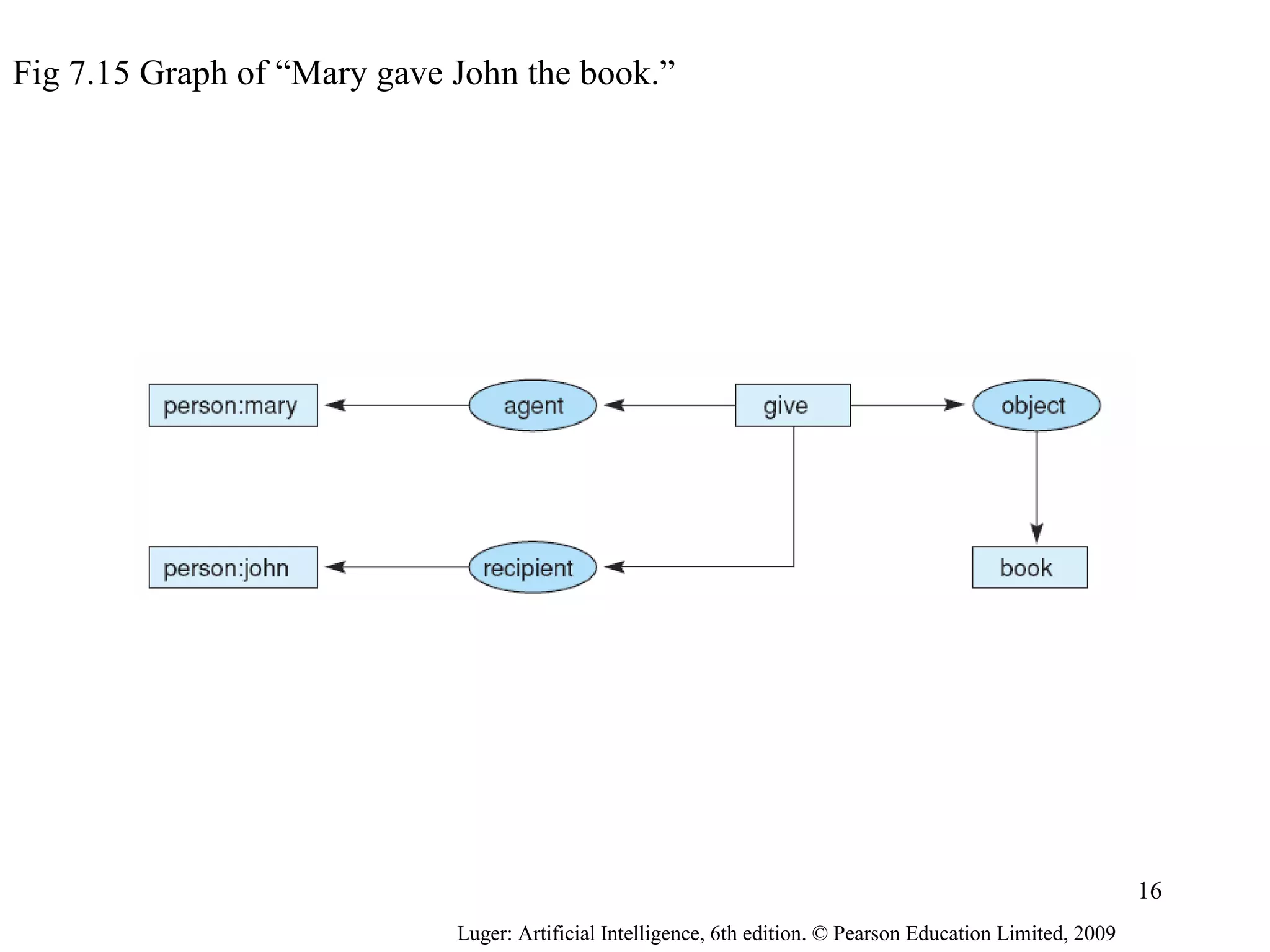

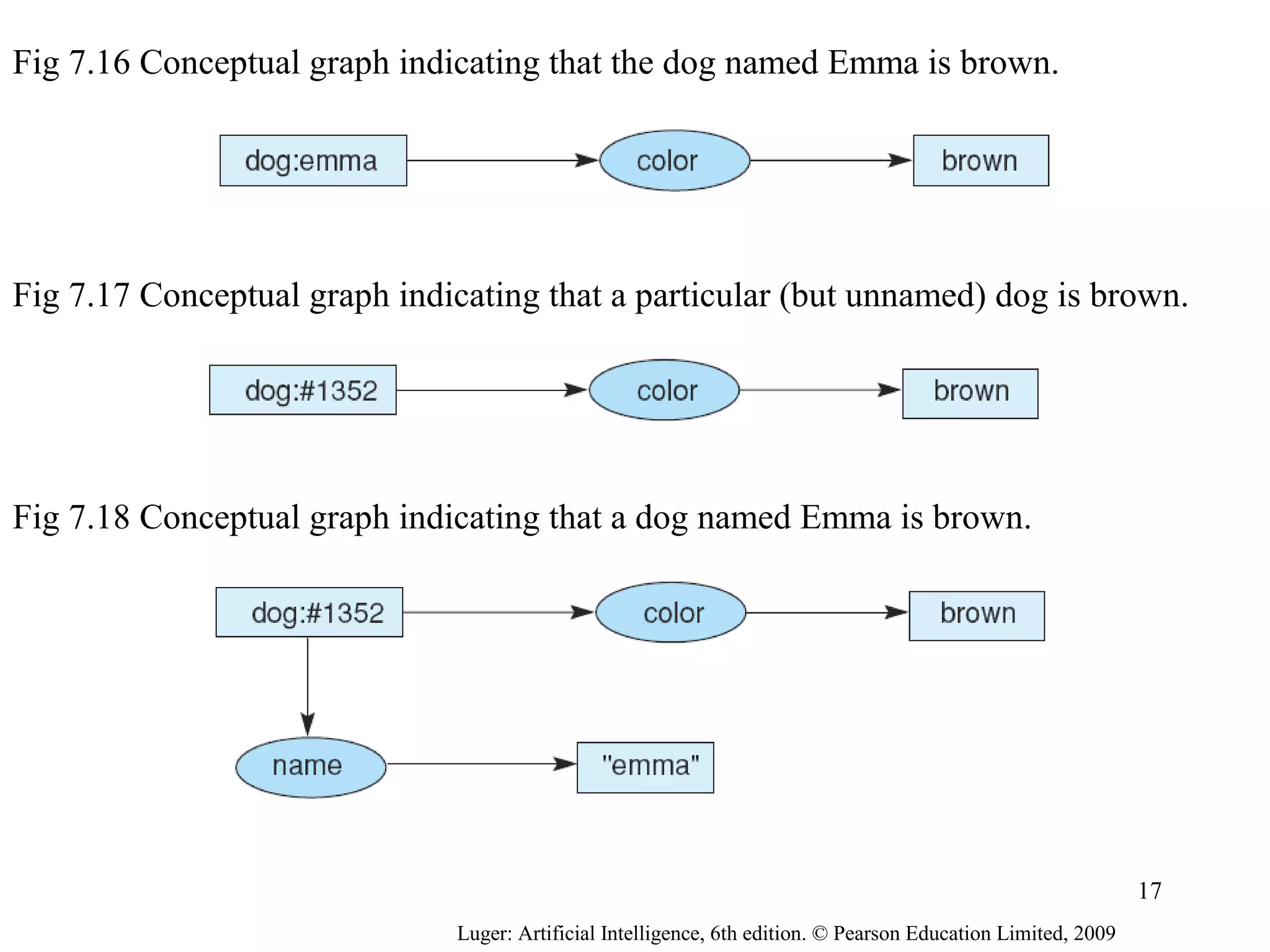

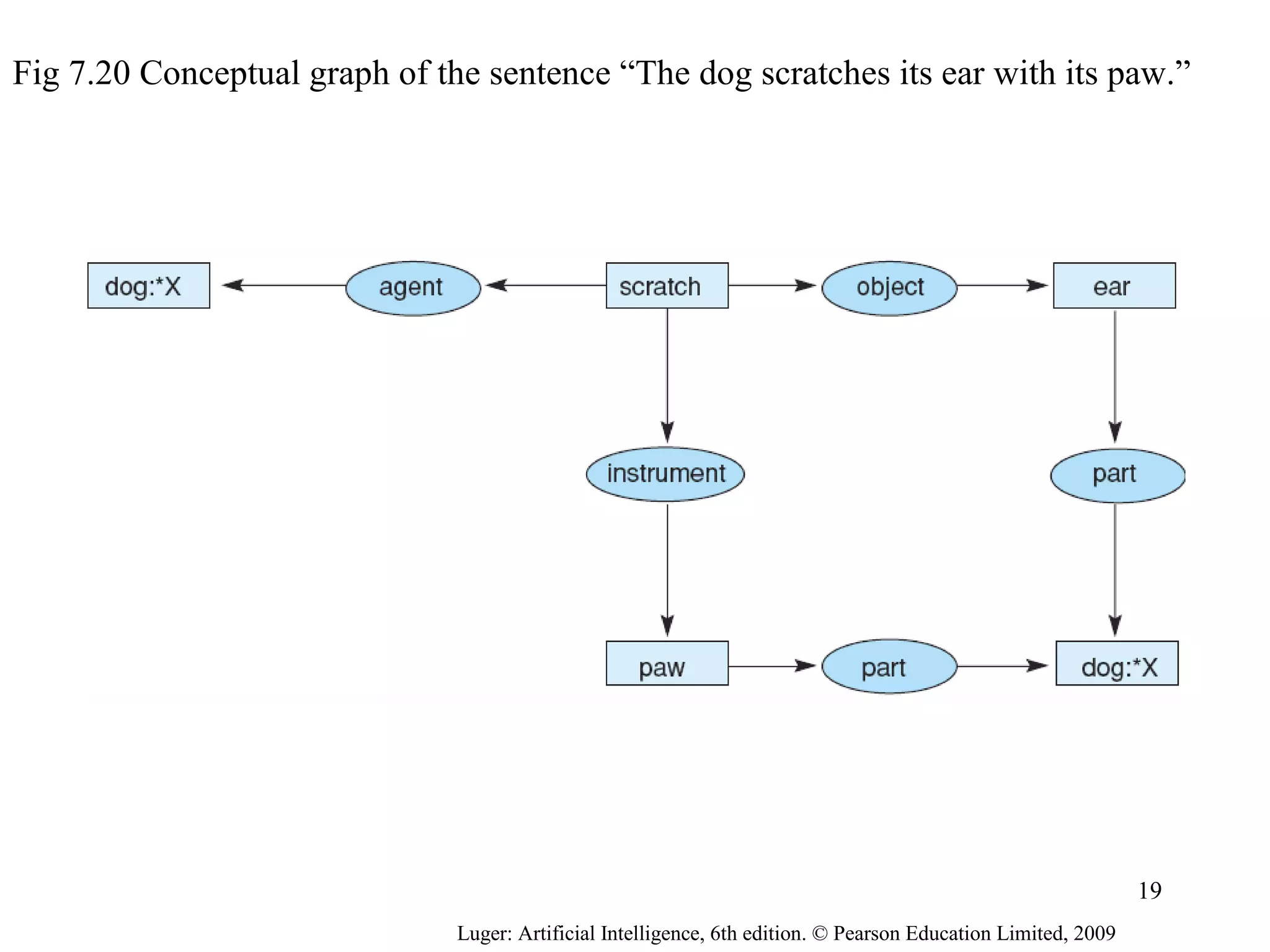

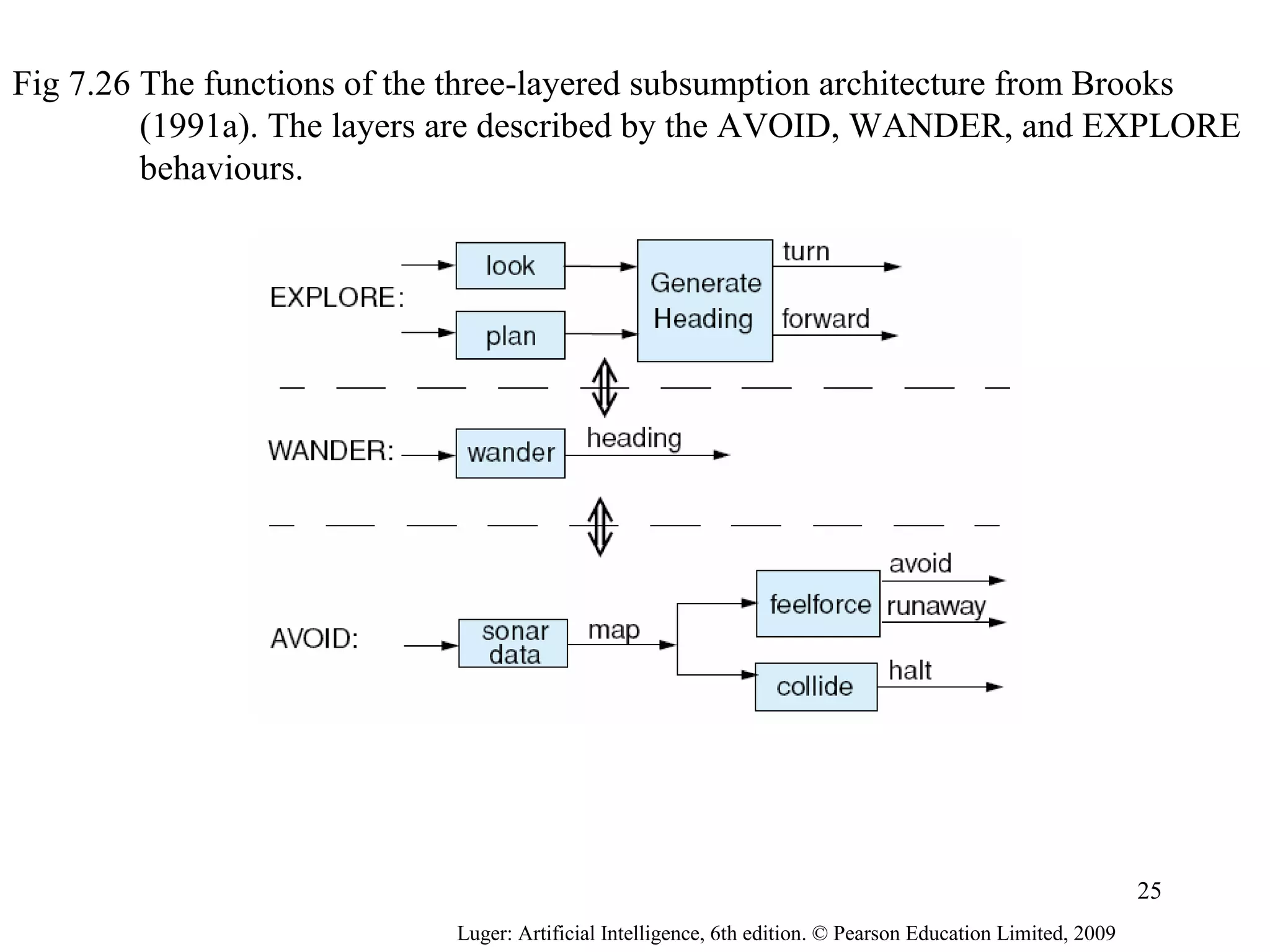

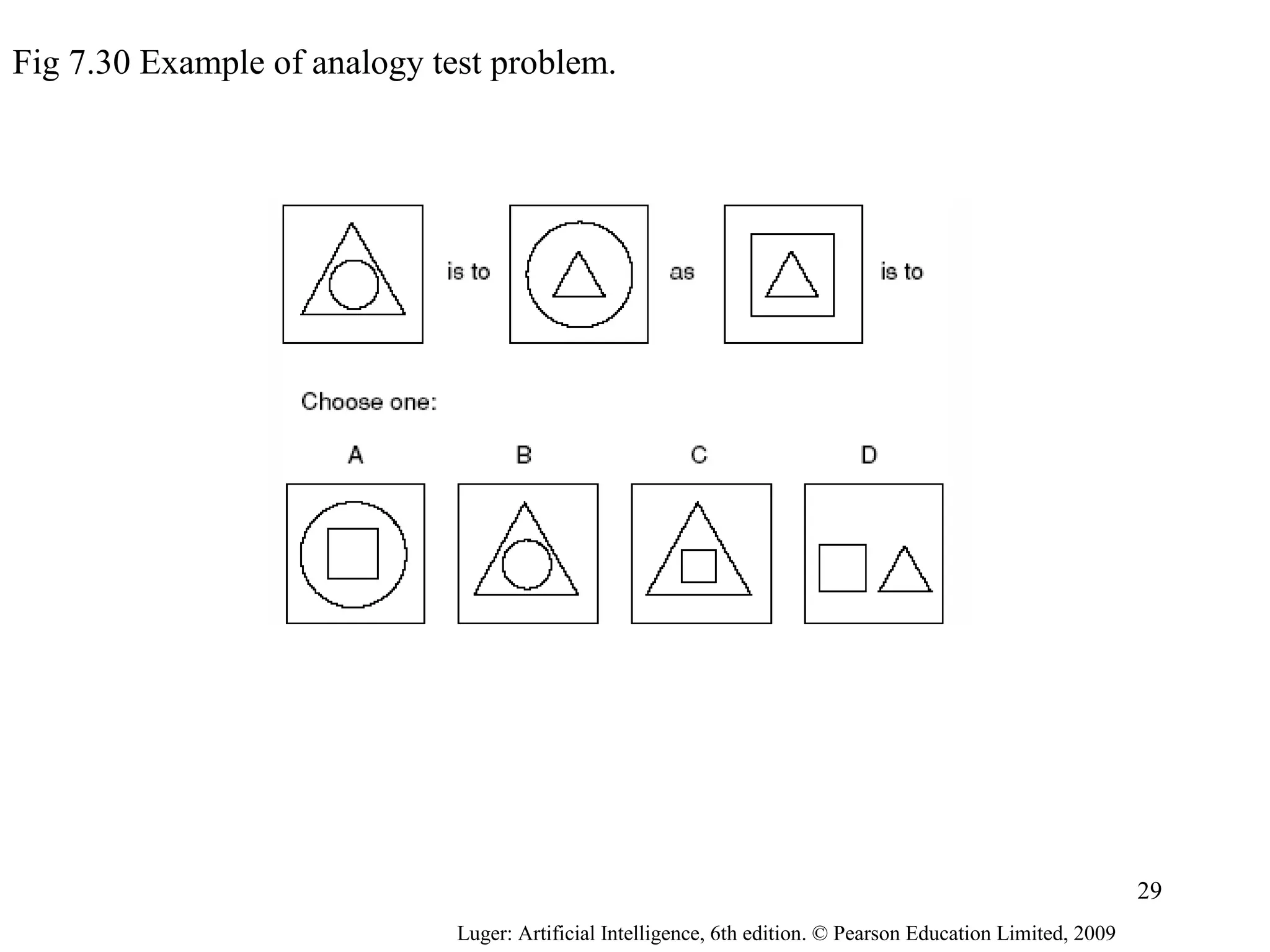

This document discusses methods of knowledge representation in artificial intelligence, including semantic networks, conceptual graphs, frames, and scripts. It illustrates each method with figures and descriptions, and compares the schemes by their suitability for representing different types of knowledge.