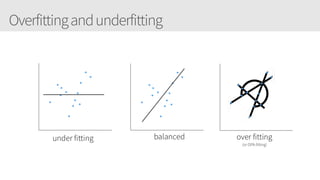

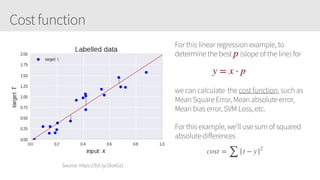

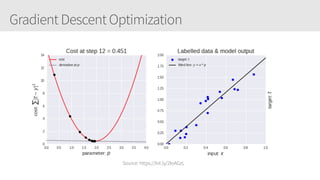

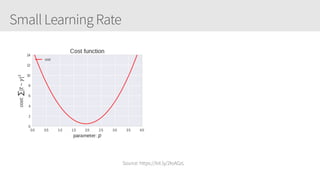

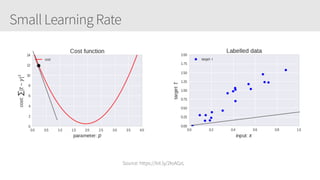

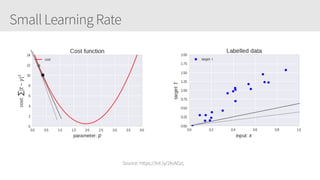

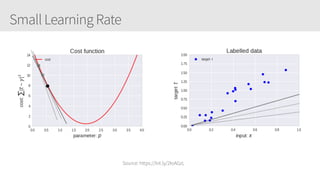

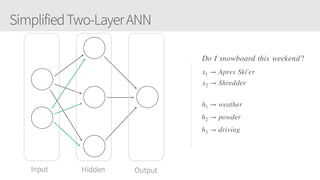

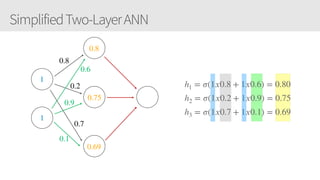

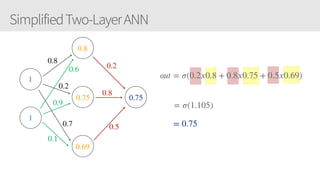

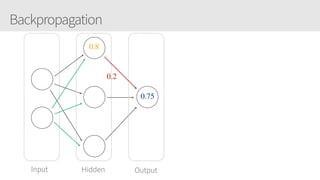

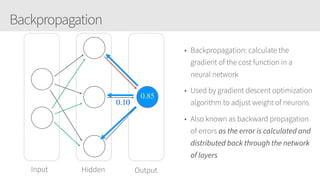

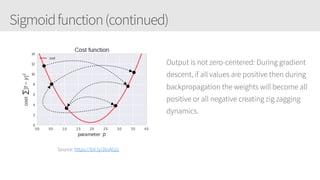

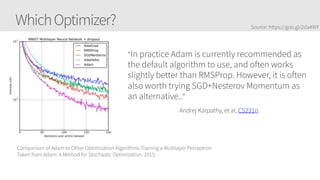

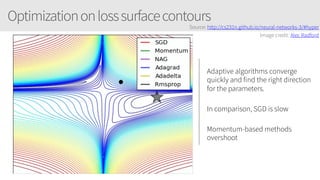

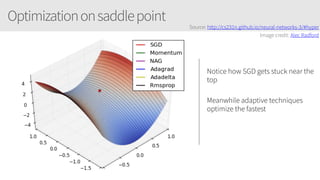

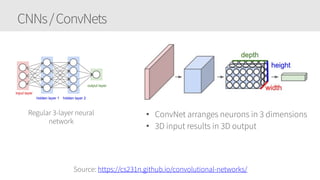

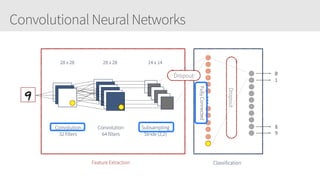

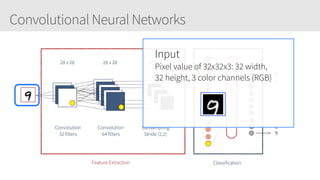

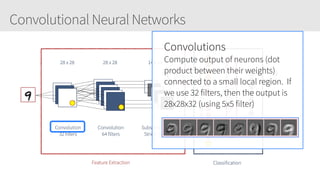

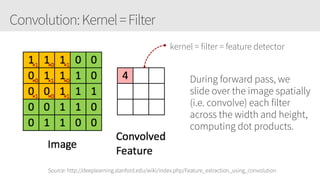

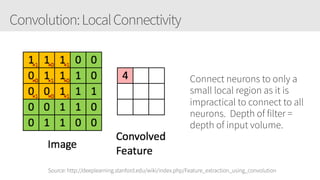

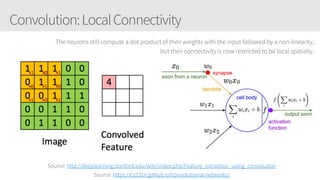

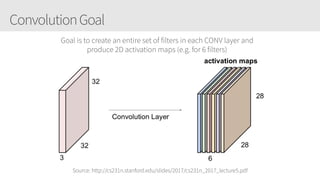

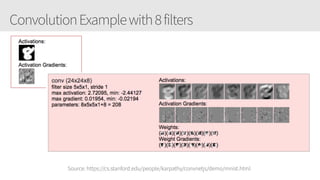

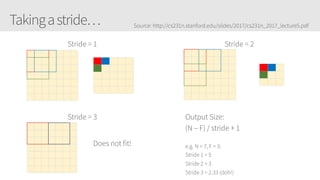

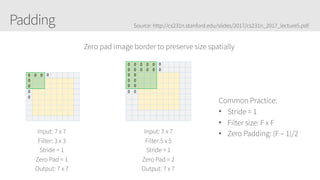

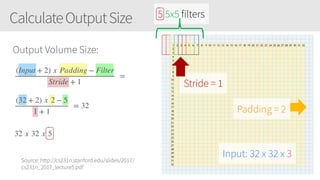

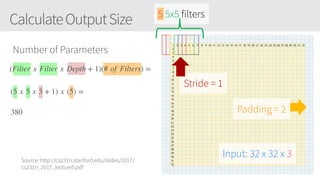

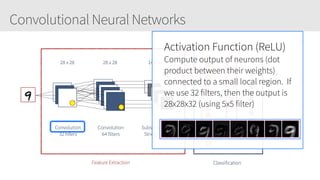

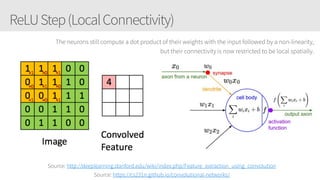

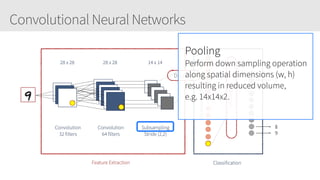

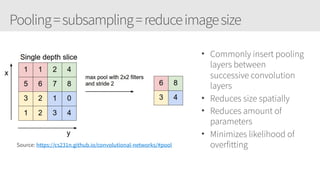

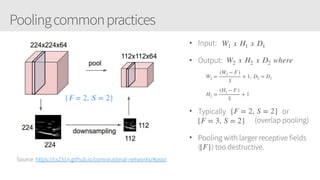

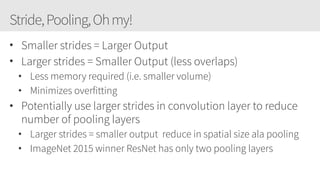

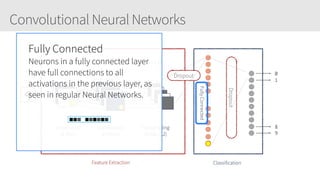

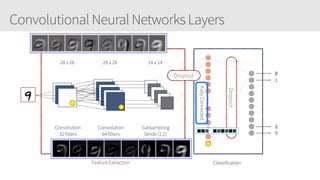

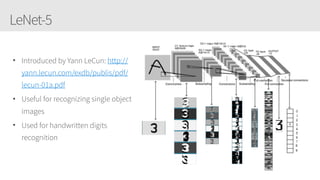

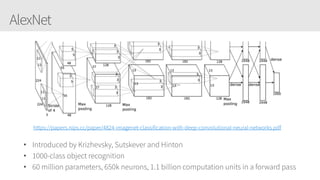

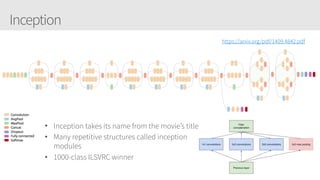

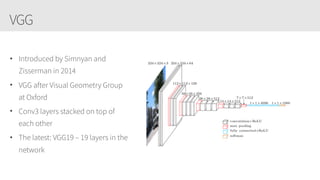

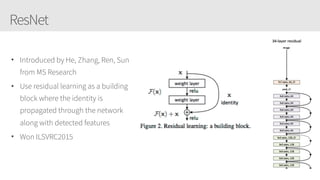

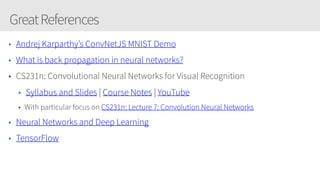

This document gives an overview of applying convolutional neural networks (CNNs), covering CNN architectures, hyperparameters, cost functions, and backpropagation. It stresses unifying data science, engineering, and business with tools such as Keras and Spark, and it features well-known networks such as AlexNet and ResNet. It also offers practical guidance on tuning learning rates and applying convolutions in deep learning tasks.
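
As a rough illustration of the convolution operation mentioned in the summary, here is a minimal pure-Python sketch. It is not taken from the slides: the function name `conv2d_valid`, the sample `image`, and the `kernel` values are all hypothetical, chosen only to show how a small filter slides over an input with no padding. Like most deep learning libraries, it computes cross-correlation, so the kernel is not flipped.

```python
def conv2d_valid(image, kernel, stride=1):
    """Slide `kernel` over `image` with no padding and return the feature map.

    Hypothetical helper for illustration; computes cross-correlation
    (the kernel is not flipped), as CNN layers typically do.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    # Output size for a "valid" convolution: floor((in - k) / stride) + 1
    oh = (ih - kh) // stride + 1
    ow = (iw - kw) // stride + 1
    out = []
    for r in range(oh):
        row = []
        for c in range(ow):
            acc = 0
            # Multiply the kernel elementwise with the current window and sum
            for i in range(kh):
                for j in range(kw):
                    acc += image[r * stride + i][c * stride + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# Made-up 4x4 input and 2x2 diagonal-difference kernel (assumptions)
image = [
    [1, 2, 3, 0],
    [4, 5, 6, 1],
    [7, 8, 9, 2],
    [0, 1, 2, 3],
]
kernel = [
    [1, 0],
    [0, -1],
]

feature_map = conv2d_valid(image, kernel)
strided_map = conv2d_valid(image, kernel, stride=2)
print(feature_map)  # 3x3 feature map
print(strided_map)  # 2x2 feature map
```

With a 4x4 input and a 2x2 kernel, stride 1 yields a 3x3 feature map and stride 2 yields a 2x2 one. In practice a framework layer such as a Keras convolution layer would do this work, with the kernel values learned through backpropagation rather than fixed by hand.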