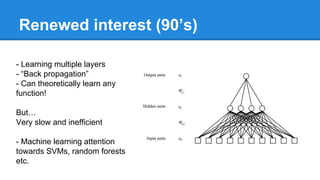

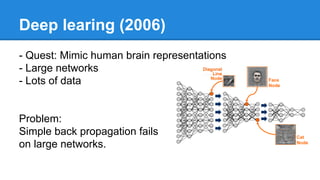

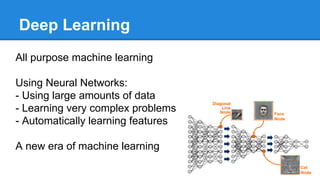

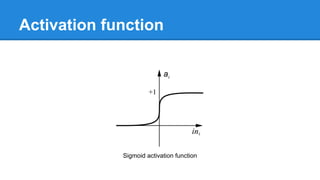

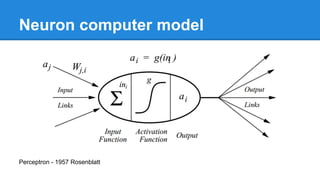

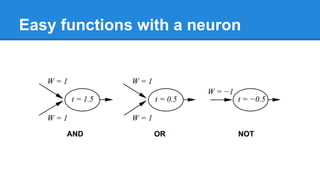

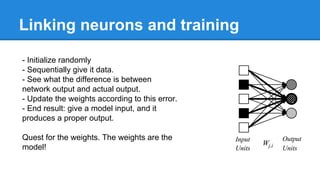

The document discusses the evolution and application of deep learning and neural networks, highlighting their ability to process large amounts of data and learn to solve complex problems. It traces the historical development from early perceptrons to later algorithms and architectures that can handle computationally demanding tasks, a shift facilitated by the rise of GPUs. It also emphasizes the practical applications of deep learning in various fields while acknowledging the challenges and intricacies of implementing it.

![The Perceptron (1958)

“A machine which senses, recognizes, remembers, and responds like the human mind”

“Remarkable machine… [was] capable of what amounts to thought” - The New Yorker](https://image.slidesharecdn.com/neuralnetworksanddeeplearning-140117060742-phpapp02/85/Neural-networks-and-deep-learning-14-320.jpg)