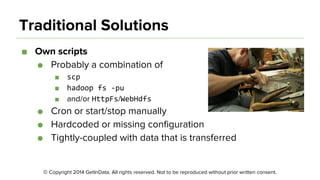

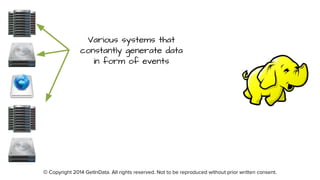

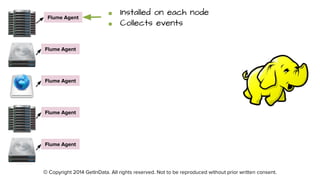

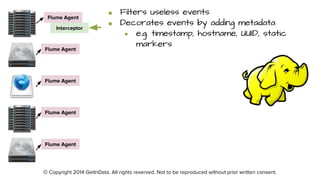

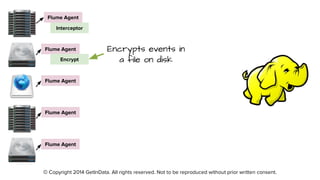

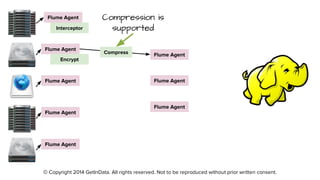

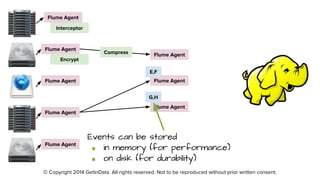

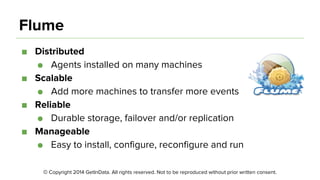

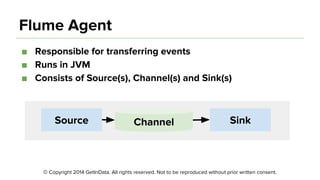

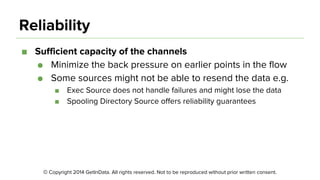

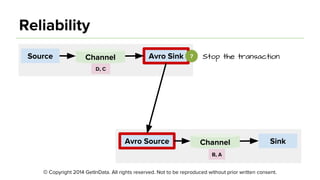

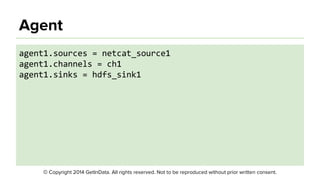

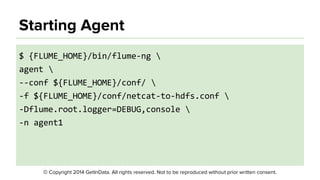

The document discusses Apache Flume, a tool for efficiently moving large volumes of streaming event data (for example, logs generated on many servers) into a Hadoop cluster. It outlines the drawbacks of traditional approaches, such as delays and limited scalability, and highlights Flume's features: a distributed, scalable, and reliable architecture that supports a variety of sources and sinks. It also describes Flume's architecture, components, event handling, and configuration, emphasizing its integration with the Hadoop ecosystem.
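To make the event flow inside a Flume agent concrete, here is a minimal, simplified Java sketch of a source → channel → sink pipeline. It is a conceptual model, not Flume's API: the class `FlumePipelineSketch`, its `Event` record, the `source`/`sinkBatch`/`run` methods and the `host` header value are all hypothetical. A bounded `BlockingQueue` stands in for the channel, and an in-memory list stands in for an HDFS sink. The only assumptions carried over from Flume itself are that an event is a set of string headers plus a byte-array body, and that a sink drains events from the channel in batches.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical, simplified model of one agent: source -> channel -> sink.
// These types are NOT Flume's real API (org.apache.flume.*); they only mirror the roles.
final class FlumePipelineSketch {

    // An event: string headers plus an opaque byte[] body.
    record Event(Map<String, String> headers, byte[] body) {}

    // Source: wraps each raw log line in an event and puts it on the channel.
    // put() blocks while the bounded channel is full (back-pressure).
    static void source(List<String> logLines, BlockingQueue<Event> channel) throws InterruptedException {
        for (String line : logLines) {
            Map<String, String> headers = new HashMap<>();
            headers.put("host", "web-01"); // hypothetical header value
            channel.put(new Event(headers, line.getBytes(StandardCharsets.UTF_8)));
        }
    }

    // Sink: waits for at least one event, then drains up to batchSize events
    // and "writes" their bodies to the destination (a list standing in for HDFS).
    static int sinkBatch(BlockingQueue<Event> channel, List<String> destination, int batchSize)
            throws InterruptedException {
        List<Event> batch = new ArrayList<>();
        batch.add(channel.take());
        channel.drainTo(batch, batchSize - 1);
        for (Event e : batch) {
            destination.add(new String(e.body(), StandardCharsets.UTF_8));
        }
        return batch.size();
    }

    // Runs the source on its own thread and the sink on the caller's thread
    // until every log line has reached the destination.
    static List<String> run(List<String> logLines, int channelCapacity, int batchSize)
            throws InterruptedException {
        BlockingQueue<Event> channel = new ArrayBlockingQueue<>(channelCapacity);
        List<String> hdfs = new ArrayList<>();
        Thread sourceThread = new Thread(() -> {
            try {
                source(logLines, channel);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        sourceThread.start();
        while (hdfs.size() < logLines.size()) {
            sinkBatch(channel, hdfs, batchSize);
        }
        sourceThread.join();
        return hdfs;
    }

    public static void main(String[] args) throws InterruptedException {
        List<String> delivered = run(
                List.of("GET /index.html 200", "GET /missing 404", "POST /login 302"), 2, 2);
        delivered.forEach(System.out::println);
    }
}
```

The small channel capacity (2) with three events shows the design point of the channel: it decouples a fast producer from a slower consumer, and the source simply blocks when the buffer is full instead of dropping data. In an actual Flume deployment these components are not wired in code; they are declared and connected in the agent's properties-based configuration file.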