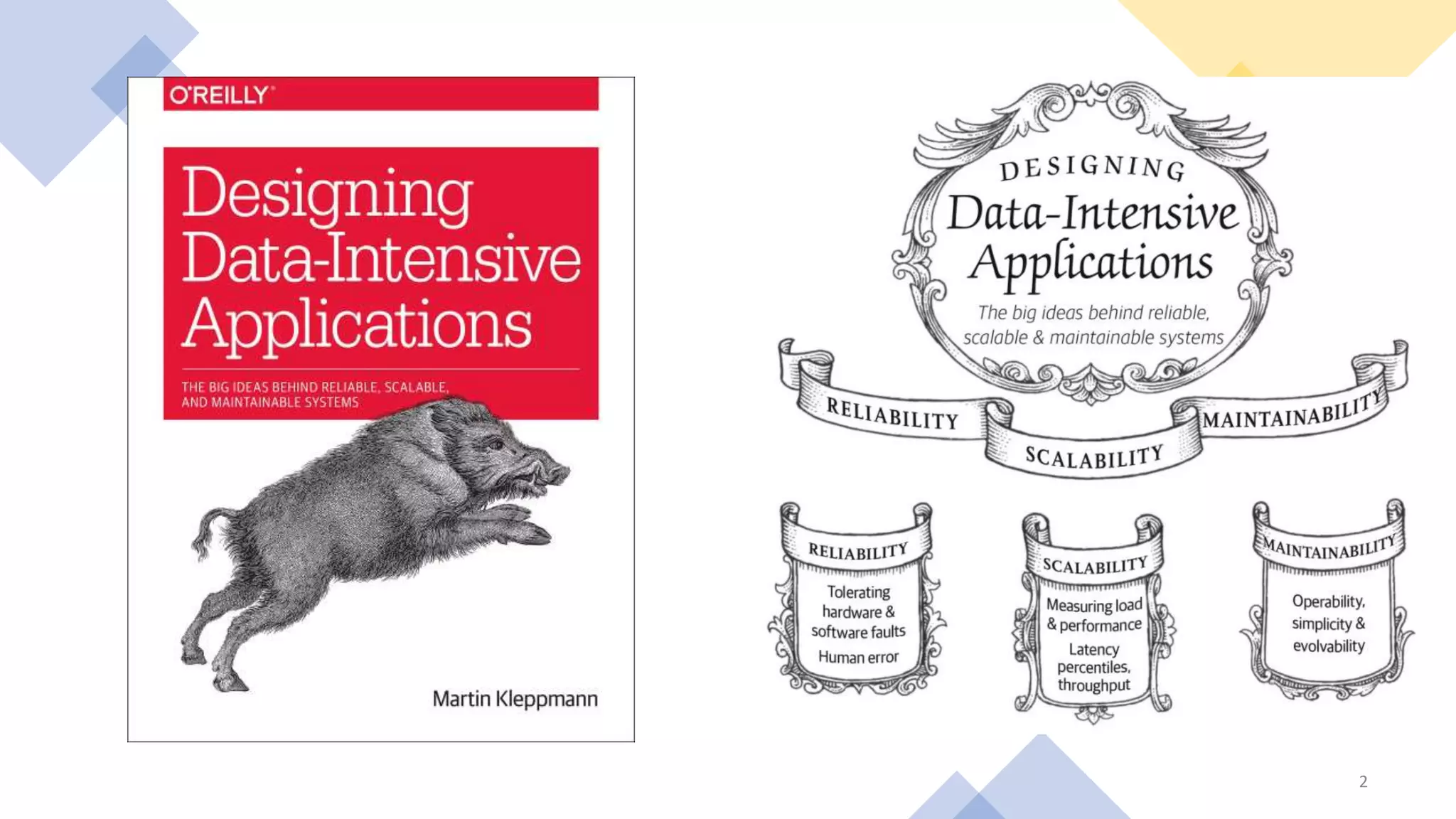

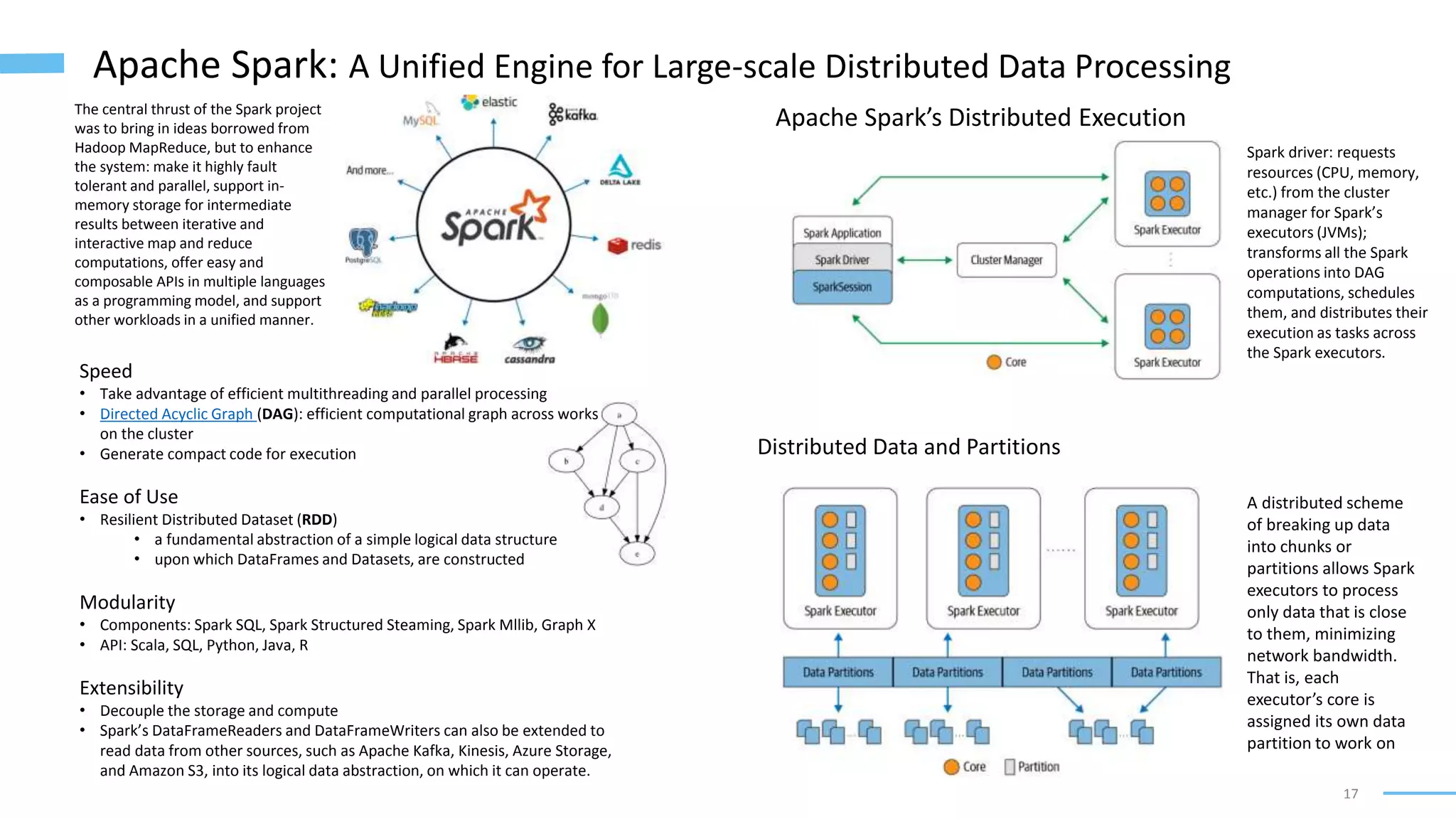

The document provides an overview of software architecture considerations for data applications. It discusses sample data system components like Memcached, Redis, Elasticsearch, and Solr. It covers topics such as service level objectives, data models, query languages, graph models, data warehousing, machine learning pipelines, and distributed systems. Specific frameworks and technologies mentioned include Spark, Kafka, Neo4j, PostgreSQL, and ZooKeeper. The document aims to help understand architectural tradeoffs and guide the design of scalable, performant, and robust data systems.

![13

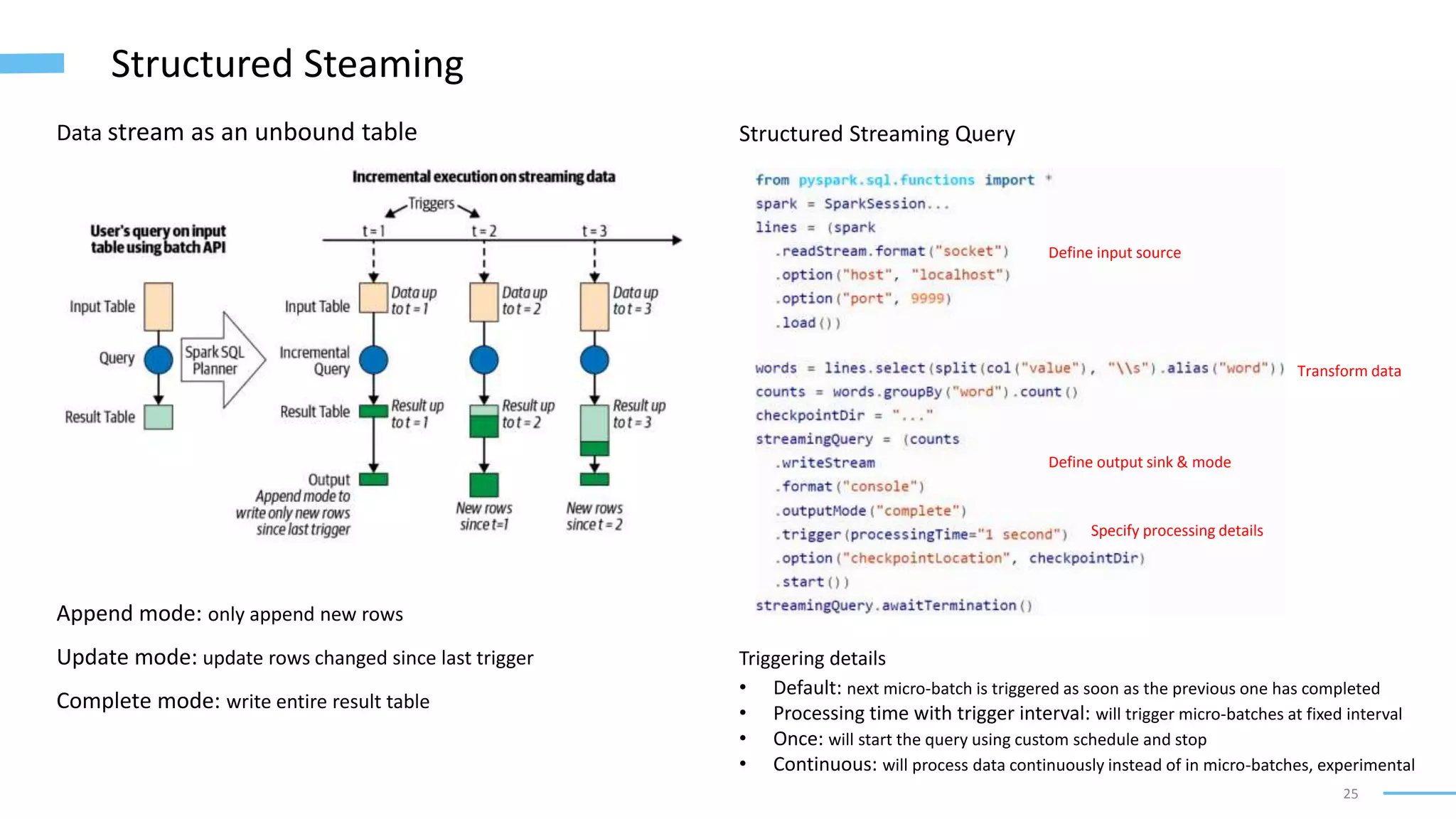

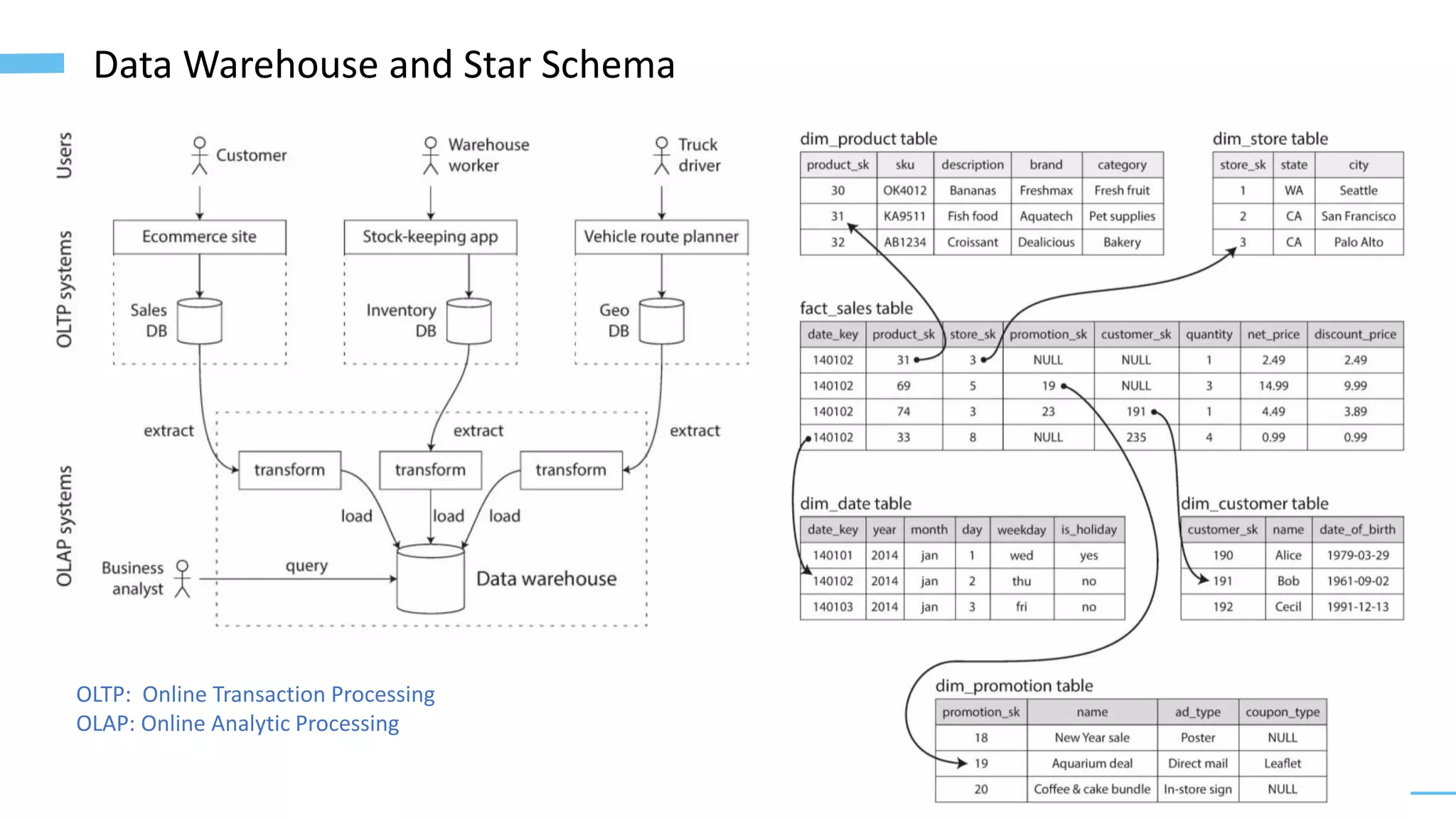

Batch Processing with Unix Tools, MapReduce, and Spark

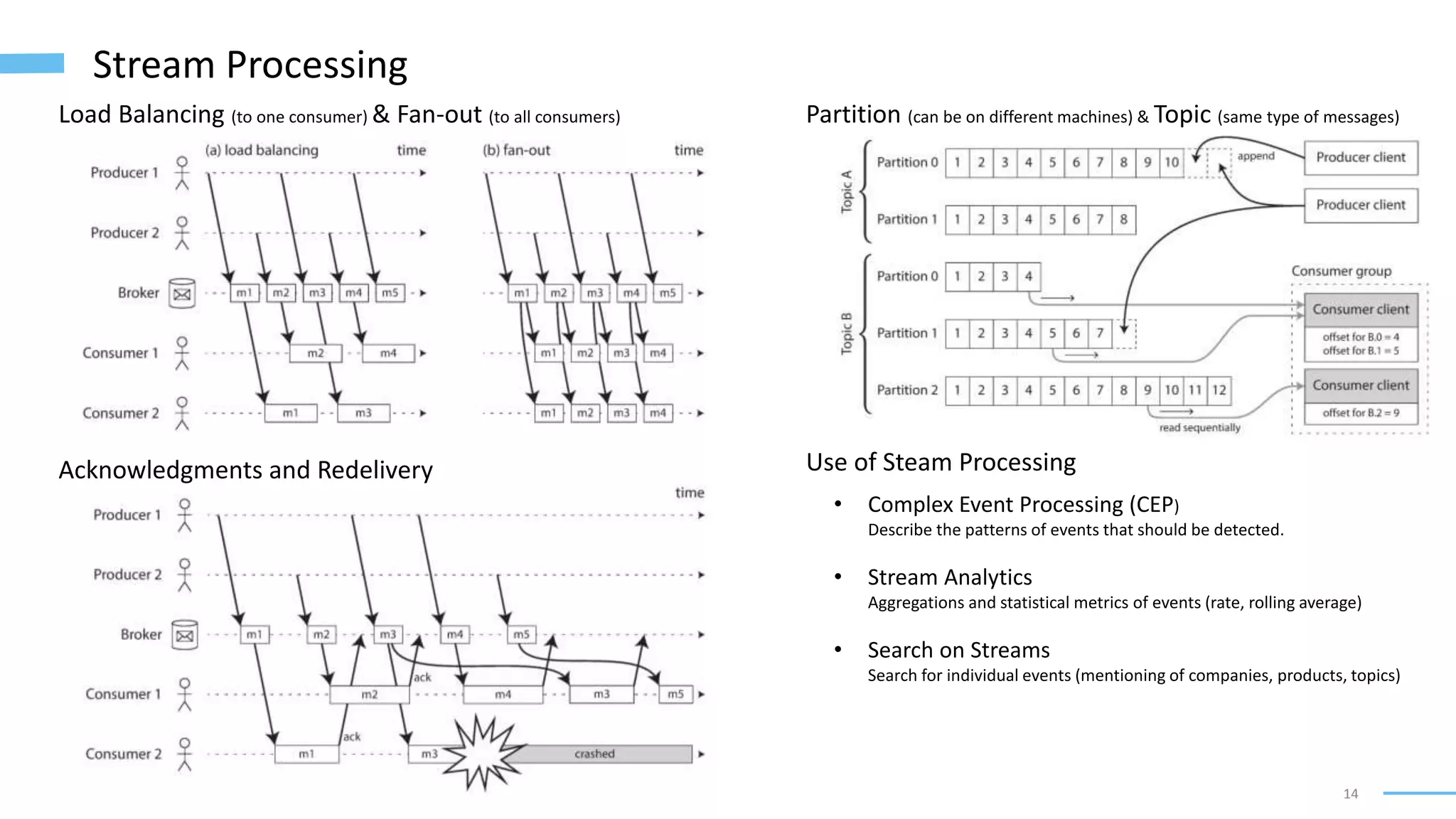

• Services [HTTP/REST-based APIs] (online systems)

• Batch Processing Systems (offline systems)

• Stream Processing Systems (near-real-time systems)

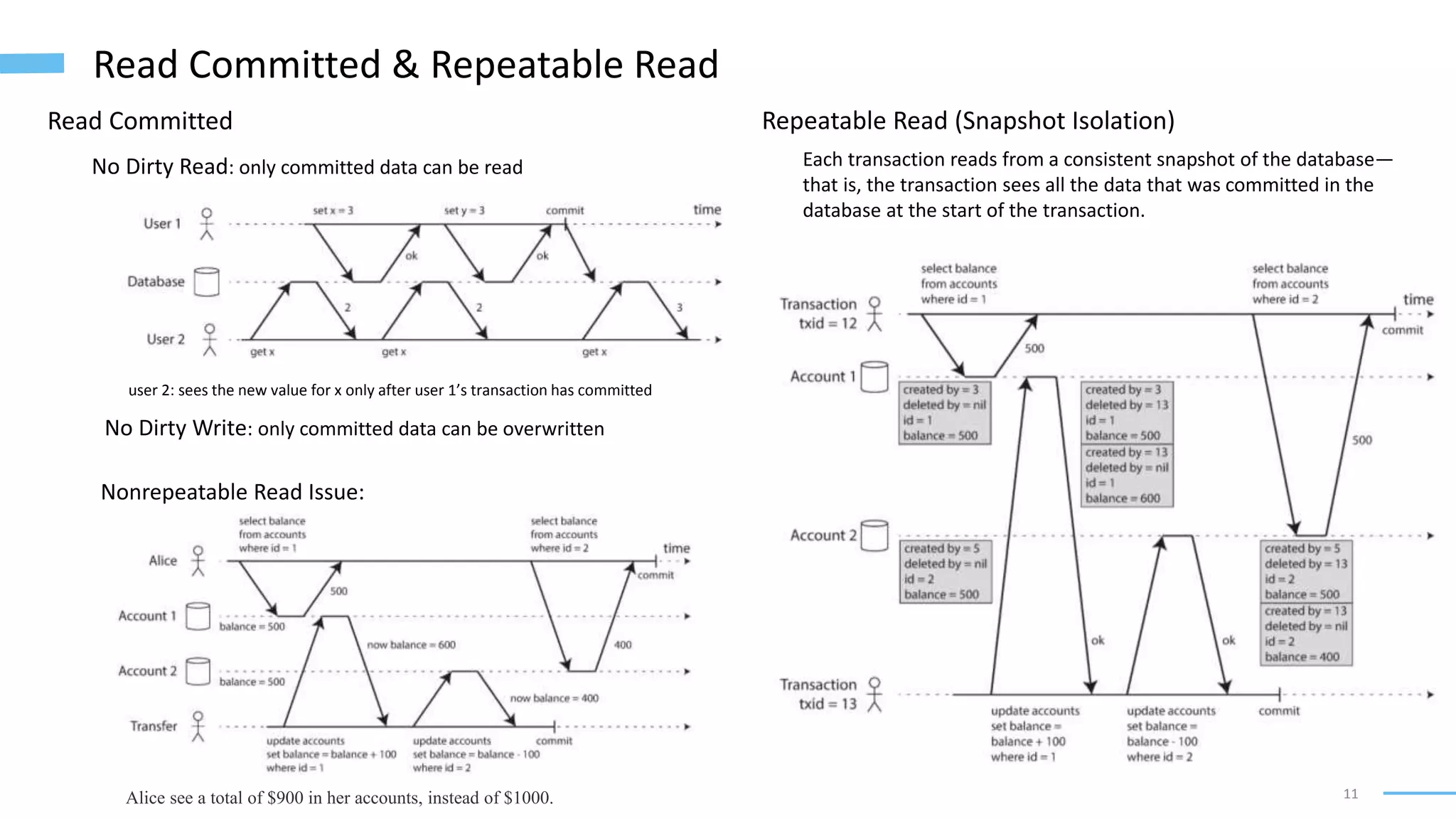

Batch Processing Web Logs with Unix Tools

Output:

MapReduce and Distributed Filesystems

• Read a set of input files and break it up into records.

• Call the mapper function to extract a key and value from each input record.

awk '{print $7}': it extracts the URL ($7) as the key and leaves the value empty.

• Sort all the key-value pairs by key.

This is done by the first sort command.

• Call the reducer function to iterate over the sorted key-value pairs.

uniq -c, which counts the number of adjacent records with the same key.

Read log file

Split each line, get http ($7 token)

Alphabetically sort by URL

Counts of distinct URL

Sort by number in reverse

Output the first 5 lines

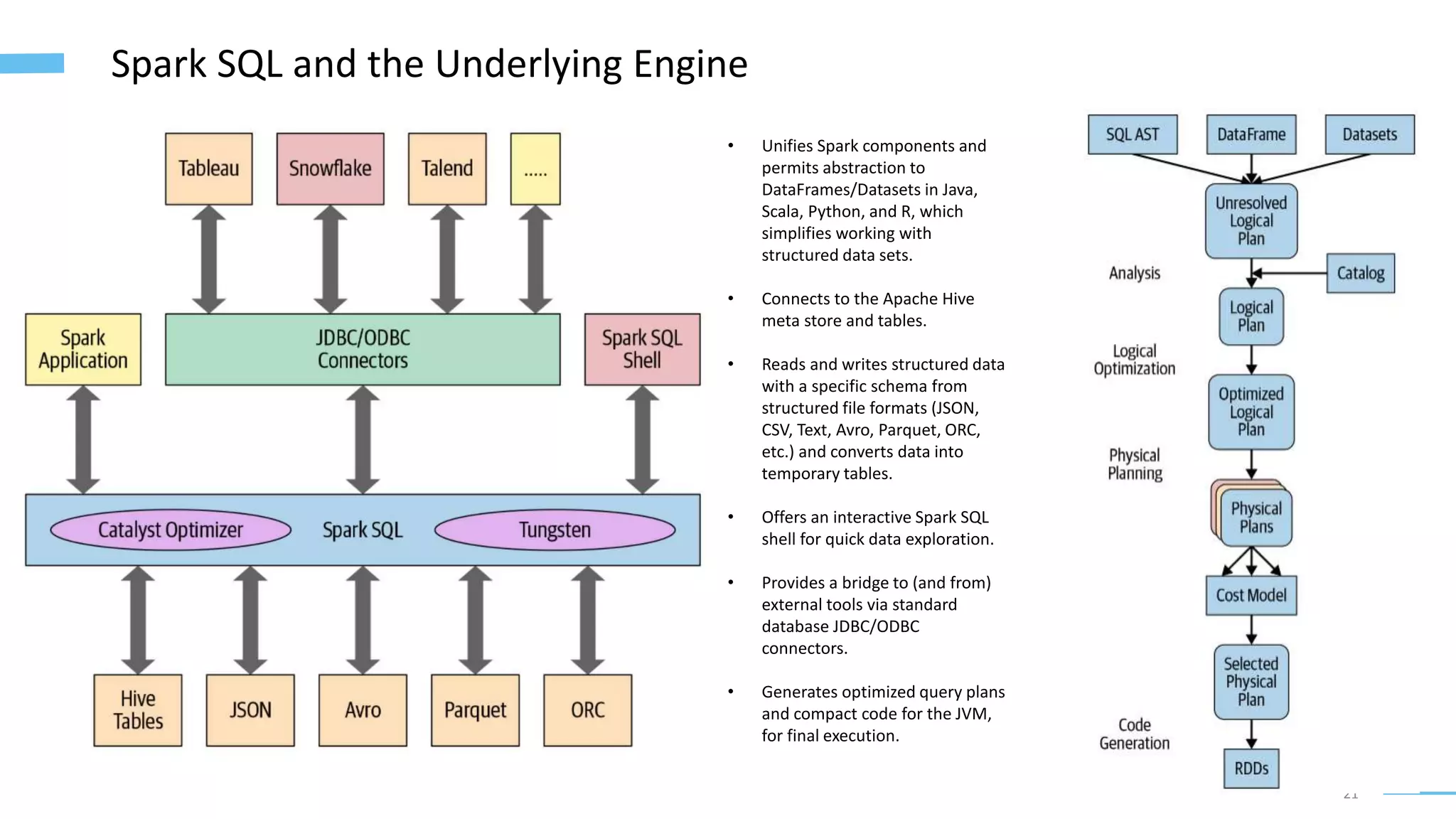

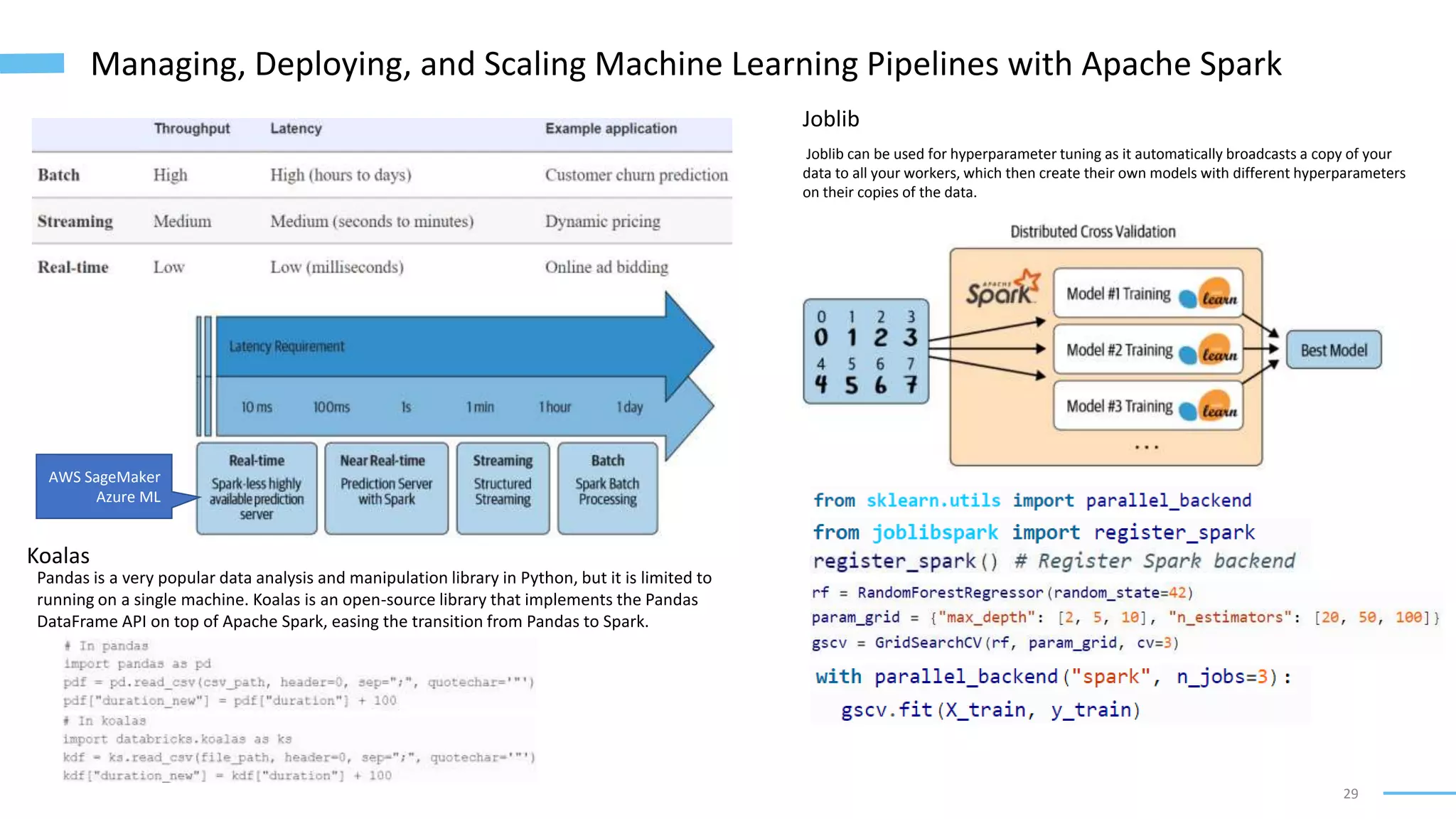

Downsides of MapReduce

• A MapReduce job can only start when all tasks in the preceding jobs (that generate its

inputs) have completed

• Mappers are often redundant: they just read back the same file that was just written

by a reducer and prepare it for the next stage of partitioning and sorting.

• Storing intermediate state in a distributed filesystem means those files are replicated

across several nodes, which is often overkill for such temporary data.

Solution: Dataflow Engines (such as Spark)

• Explicitly model the flow of data through several processing stages

• Parallelize work by partitioning inputs, and they copy the output of one function over

the network to become the input to another function

• All joins and data dependencies in a workflow are explicitly declared, the scheduler

has an overview of what data is required where, so it can make locality optimizations](https://image.slidesharecdn.com/softwarearchitecturefordataapplications-220212133506/75/Software-architecture-for-data-applications-13-2048.jpg)
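The log-analysis steps and their MapReduce counterparts described above can be sketched in plain Python. This is a minimal, single-process illustration, not how Hadoop or Spark implement the pattern: the names `mapper`, `reducer`, `top_urls`, and the `SAMPLE_LOG` lines are hypothetical, and the sketch assumes an access-log format in which the requested URL is the 7th whitespace-separated token.

```python
from itertools import groupby

# Hypothetical sample lines in an nginx-style access-log format (an assumption).
SAMPLE_LOG = [
    '10.0.0.1 - - [27/Feb/2015:17:55:11 +0000] "GET /index.html HTTP/1.1" 200 512',
    '10.0.0.2 - - [27/Feb/2015:17:55:12 +0000] "GET /about.html HTTP/1.1" 200 256',
    '10.0.0.1 - - [27/Feb/2015:17:55:13 +0000] "GET /index.html HTTP/1.1" 200 512',
    '10.0.0.3 - - [27/Feb/2015:17:55:14 +0000] "GET /css/site.css HTTP/1.1" 200 128',
    '10.0.0.2 - - [27/Feb/2015:17:55:15 +0000] "GET /index.html HTTP/1.1" 200 512',
    '10.0.0.3 - - [27/Feb/2015:17:55:16 +0000] "GET /css/site.css HTTP/1.1" 200 128',
]


def mapper(line):
    """Emit (url, None): the role played by awk '{print $7}'."""
    fields = line.split()
    if len(fields) >= 7:          # skip malformed lines
        yield fields[6], None     # $7 in awk is index 6 in Python


def reducer(key, values):
    """Count the records that share a key: the role played by uniq -c."""
    return key, sum(1 for _ in values)


def top_urls(lines, n=5):
    # Map: extract a key-value pair from every input record.
    pairs = [pair for line in lines for pair in mapper(line)]
    # Sort by key so equal keys become adjacent (the first sort command).
    pairs.sort(key=lambda pair: pair[0])
    # Reduce: iterate over each run of adjacent records with the same key.
    counts = [reducer(key, group)
              for key, group in groupby(pairs, key=lambda pair: pair[0])]
    # Sort by count, largest first, and keep the first n results.
    counts.sort(key=lambda item: item[1], reverse=True)
    return counts[:n]


if __name__ == "__main__":
    for url, count in top_urls(SAMPLE_LOG):
        print(count, url)
```

The sort step matters because `groupby`, like `uniq -c`, only merges adjacent records with the same key. In a real MapReduce job the framework performs that sort and distributes the mapper and reducer calls across machines.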

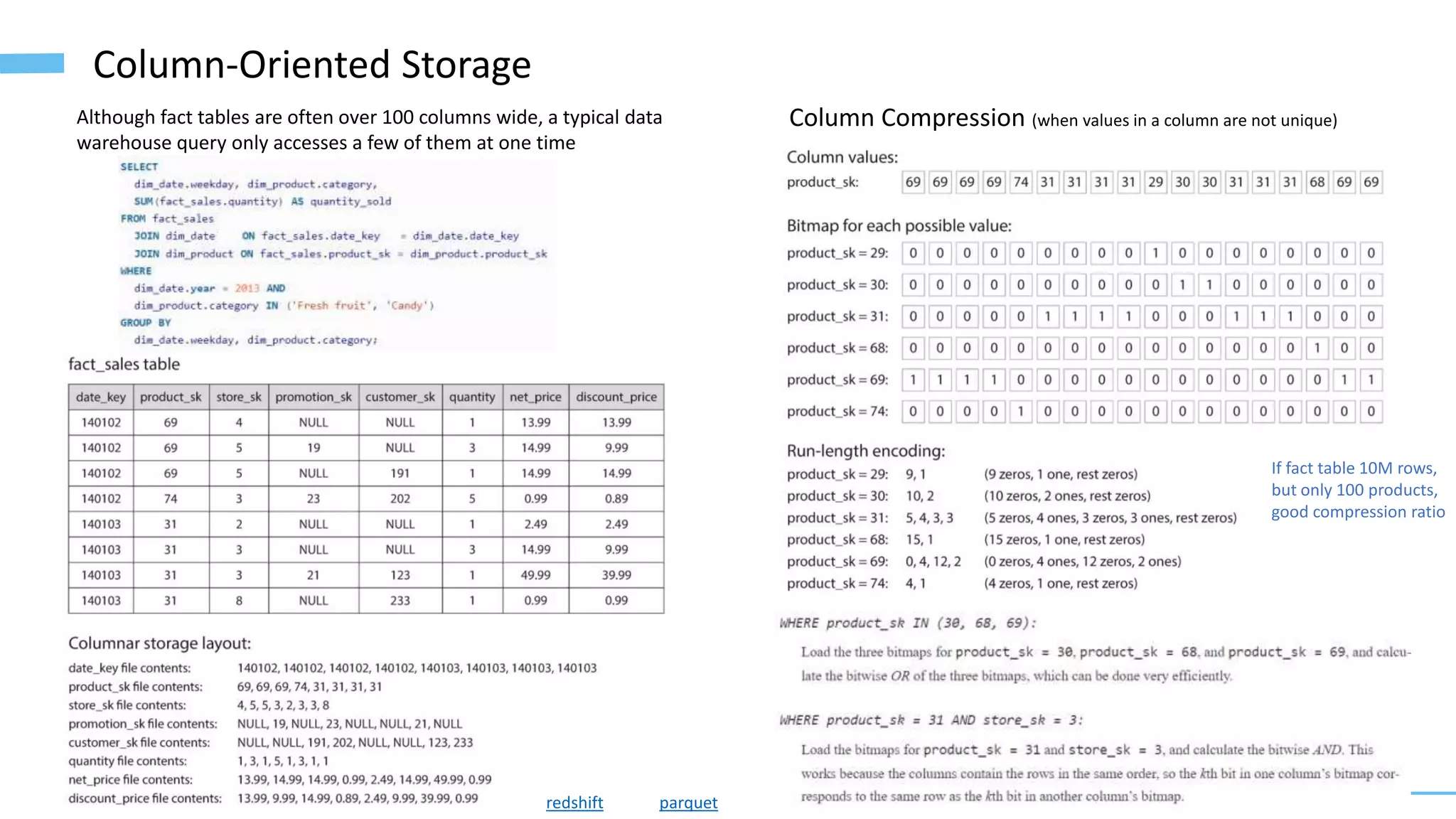

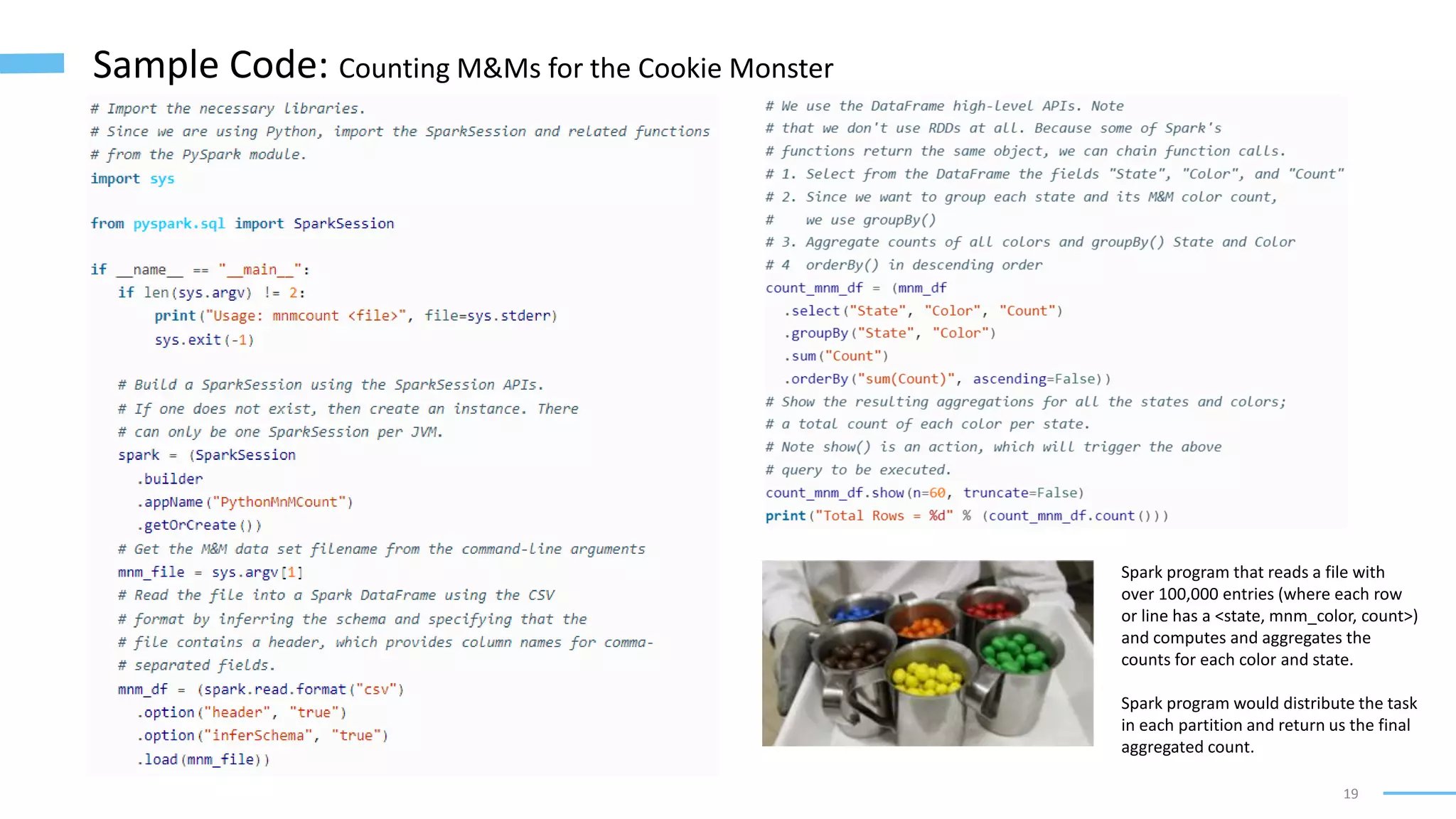

![Slide 20](https://image.slidesharecdn.com/softwarearchitecturefordataapplications-220212133506/75/Software-architecture-for-data-applications-20-2048.jpg)

Apache Spark’s Structured APIs

The RDD is the most basic abstraction in Spark. Three characteristics define an RDD:

• Dependencies

tell Spark how an RDD is constructed from its inputs

• Partitions (with some locality information)

give Spark the ability to parallelize computation on partitions across executors

• Compute function: Partition => Iterator[T]

produces an Iterator[T] for the data that will be stored in the RDD

Basic Python data types in Spark

Python structured data types in Spark

Schemas and Creating DataFrames

A schema defines the column names and associated data types for a DataFrame.

schema = "author STRING, title STRING, pages INT"

blogs_df = spark.createDataFrame(data, schema)
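To make the three RDD characteristics listed above (dependencies, partitions, compute function) more concrete, here is a toy model in plain Python. It is a sketch for intuition only and does not use or reproduce Spark's API: `ToyRDD`, `from_chunks`, and the sample data are hypothetical names introduced for this illustration, and everything runs in a single process.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterator, List


@dataclass
class ToyRDD:
    # Partitions: independent units of work, identified here by index.
    partitions: List[int]
    # Compute function: partition index => iterator over that partition's data.
    compute: Callable[[int], Iterator]
    # Dependencies: the parent datasets this one is derived from.
    dependencies: List["ToyRDD"] = field(default_factory=list)

    def map(self, fn):
        """Return a new dataset that lazily applies fn to every element."""
        parent = self
        return ToyRDD(
            partitions=parent.partitions,
            compute=lambda p: (fn(x) for x in parent.compute(p)),
            dependencies=[parent],
        )

    def collect(self):
        """Evaluate every partition in order and gather the results."""
        return [x for p in self.partitions for x in self.compute(p)]


def from_chunks(chunks):
    """Build a source dataset with one partition per chunk (no parents)."""
    return ToyRDD(
        partitions=list(range(len(chunks))),
        compute=lambda p: iter(chunks[p]),
    )


source = from_chunks([[1, 2], [3, 4], [5]])
doubled = source.map(lambda x: x * 2)

if __name__ == "__main__":
    print(doubled.collect())
```

Nothing is computed until `collect()` is called, and each partition can be evaluated independently of the others. Recording the parent in `dependencies` is what would let an engine recompute a lost partition from its inputs.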