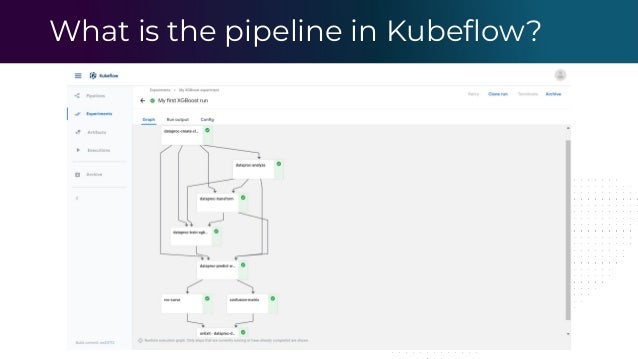

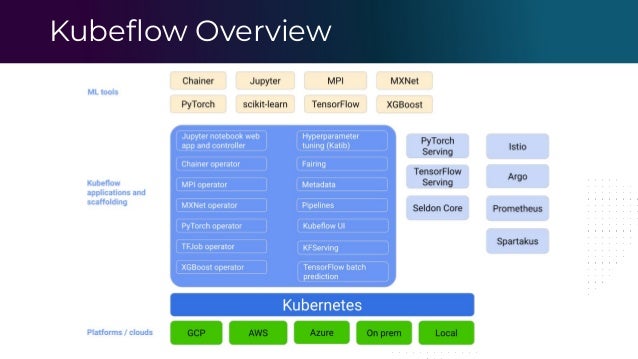

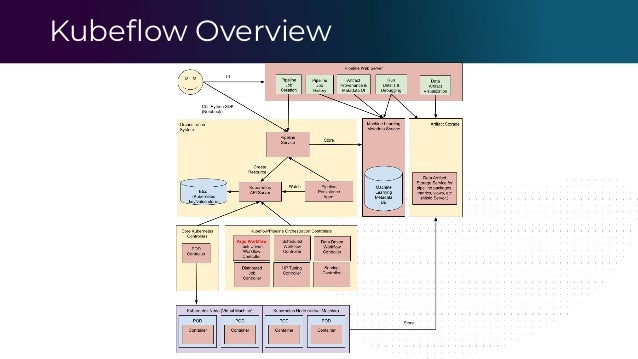

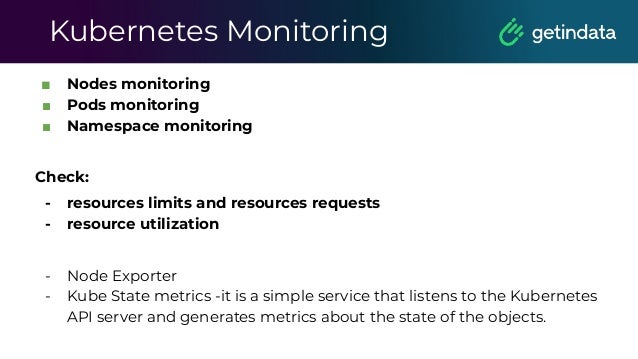

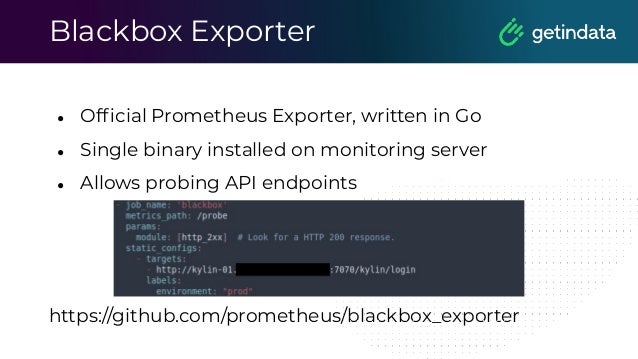

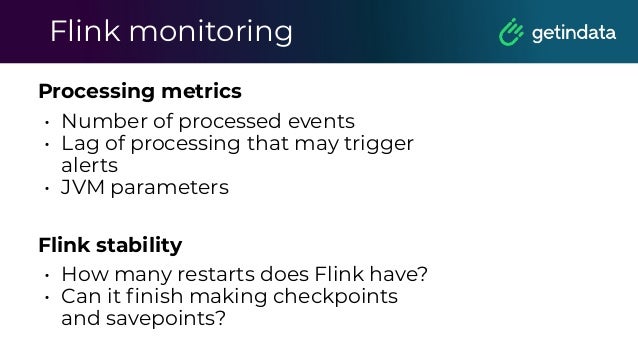

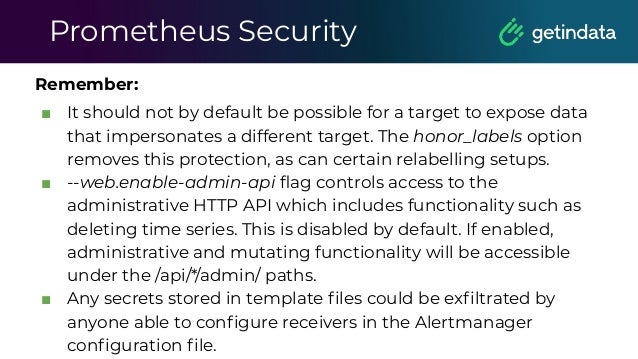

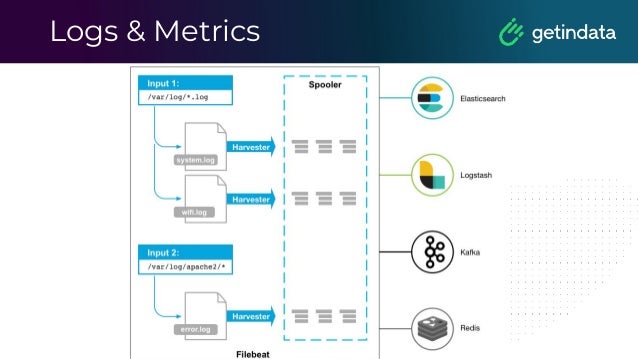

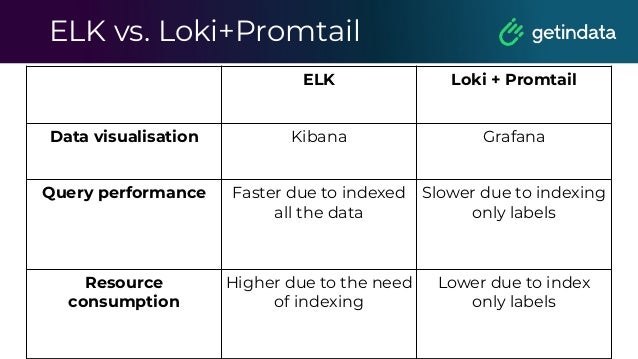

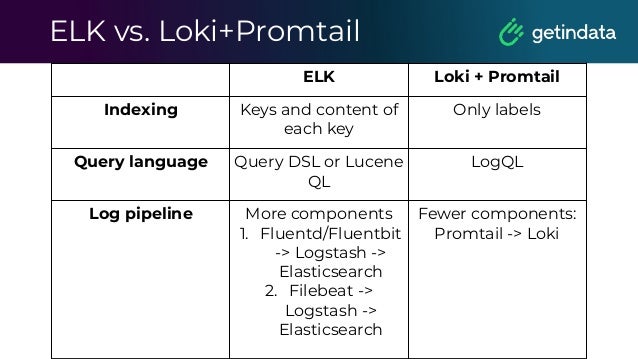

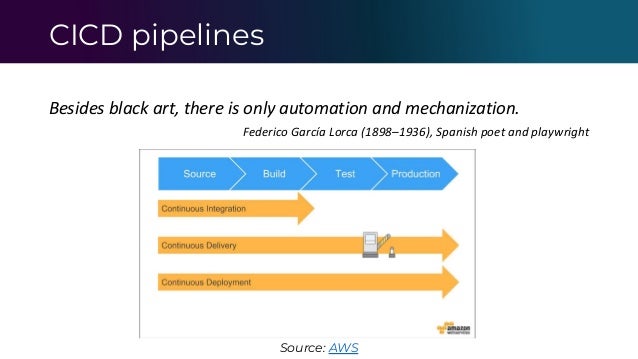

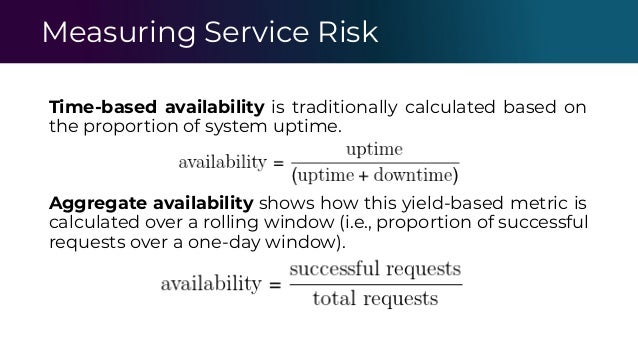

This document describes how a data science platform built on Kubeflow works and what it is designed to achieve, including end-to-end orchestration and easy experimentation. It covers observability through metrics and logs, the role of CI/CD in deploying machine learning models, and the use of Prometheus for metrics monitoring and Loki for log aggregation. It also presents best practices for maintaining infrastructure as code. Finally, it explains how SLIs, SLAs, and postmortem analyses help manage service risk and keep the system reliable.