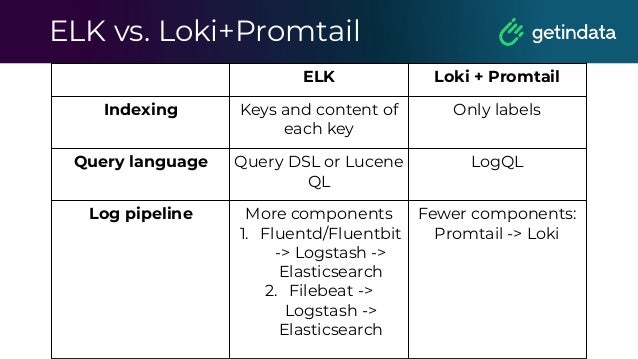

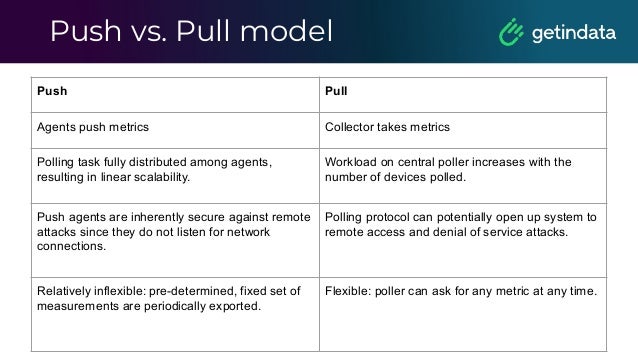

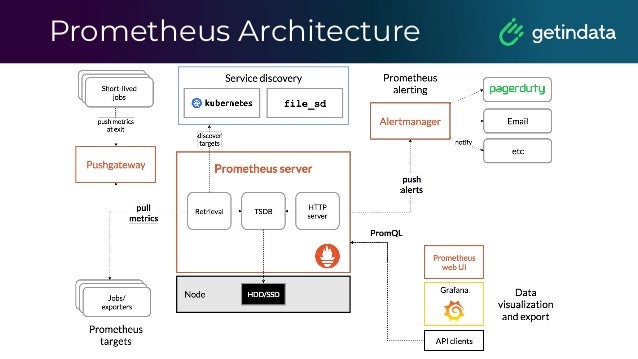

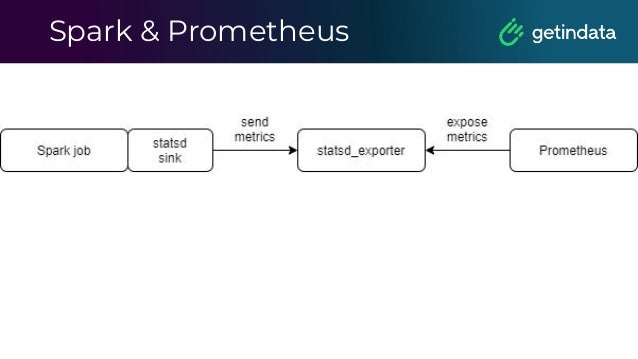

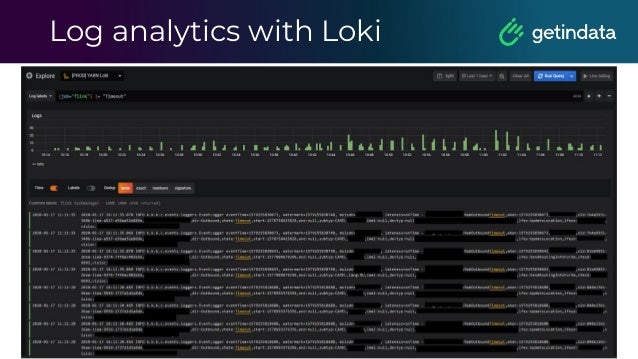

The document discusses monitoring in big data platforms, covering metrics, log analysis, and the Prometheus architecture. It contrasts the push and pull collection models, gives security considerations for Prometheus components, and presents monitoring solutions for systems including Kubernetes, Kafka, and Spark. It also explores log analytics tools such as Elasticsearch and Loki and how to deploy them for monitoring and observability.

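![LogQL](https://image.slidesharecdn.com/2020monitoringinbigdataplatform-220114111648/95/Monitoring-in-Big-Data-Platform-Albert-Lewandowski-GetInData-58-638.jpg)

In LogQL, `count_over_time` counts the entries for each log stream within the given range. This example counts all the log lines within the last five minutes for the MySQL job:

`count_over_time({job="mysql"}[5m])`

`rate` gives the number of entries per second. This example computes the per-second rate of MySQL log lines that contain "error" but not "timeout" over the last ten seconds:

`rate({job="mysql"} |= "error" != "timeout" [10s])`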
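To make the semantics of the two LogQL range functions on this slide concrete, here is a minimal Python sketch that emulates `count_over_time` and `rate` over a handful of in-memory log lines. It is not how Loki implements them. The sample entries, the `NOW` timestamp, and the helper names are all made up for illustration, the window boundaries are a simplification, and a single log stream is assumed (Loki returns one result per stream).

```python
from datetime import datetime, timedelta

# Hypothetical evaluation time and log entries (timestamp, line) for one stream.
NOW = datetime(2020, 1, 1, 12, 0, 0)
LOGS = [
    (NOW - timedelta(seconds=400), "error: outside every window"),
    (NOW - timedelta(seconds=290), "connection error: refused"),
    (NOW - timedelta(seconds=120), "query ok"),
    (NOW - timedelta(seconds=8), "error: timeout waiting for lock"),
    (NOW - timedelta(seconds=5), "error: disk full"),
    (NOW - timedelta(seconds=2), "error: table missing"),
]


def count_over_time(entries, window, now, keep=lambda line: True):
    """Count entries in (now - window, now] whose line passes the filter."""
    start = now - window
    return sum(1 for ts, line in entries if start < ts <= now and keep(line))


def rate(entries, window, now, keep=lambda line: True):
    """Matching entries per second: the count divided by the window length."""
    return count_over_time(entries, window, now, keep) / window.total_seconds()


def error_not_timeout(line):
    """Mimics the line filters |= "error" != "timeout"."""
    return "error" in line and "timeout" not in line


# Analogue of count_over_time({job="mysql"}[5m])
lines_5m = count_over_time(LOGS, timedelta(minutes=5), NOW)

# Analogue of rate({job="mysql"} |= "error" != "timeout" [10s])
errors_per_sec = rate(LOGS, timedelta(seconds=10), NOW, error_not_timeout)

print(lines_5m, errors_per_sec)
```

The sketch shows that `rate` is just the windowed count normalized by the window length in seconds, which is why the two queries differ only in their filters and range.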