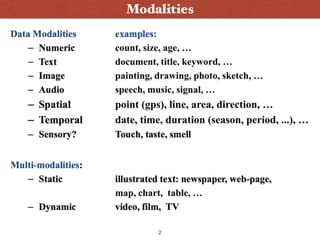

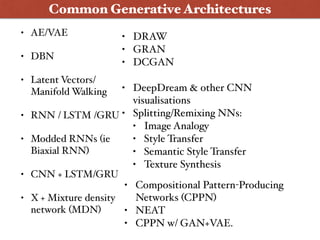

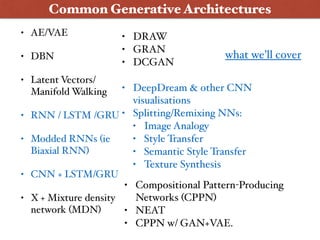

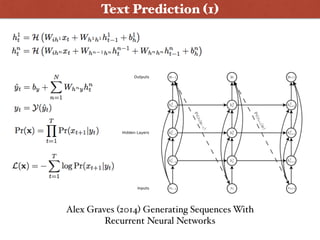

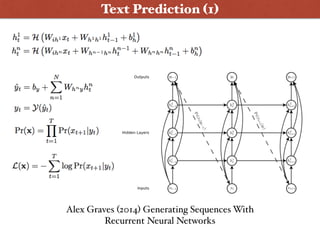

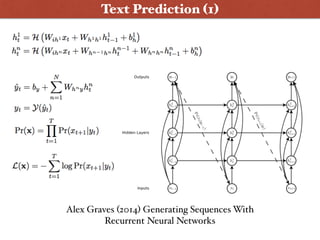

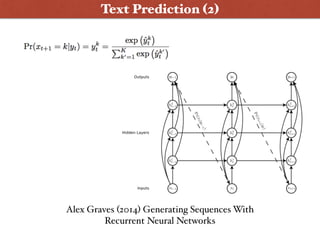

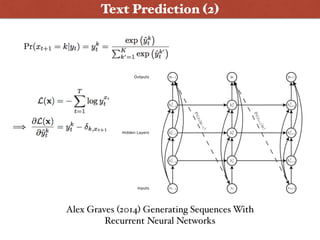

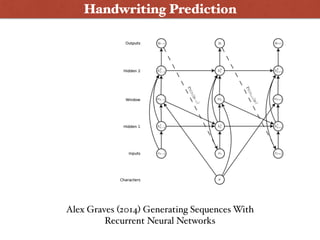

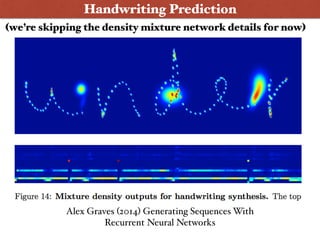

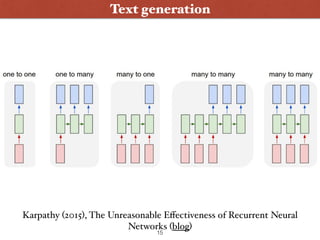

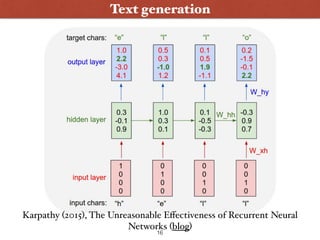

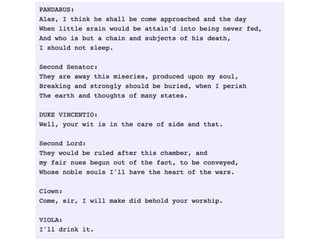

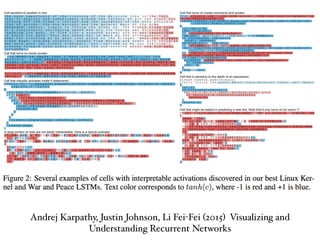

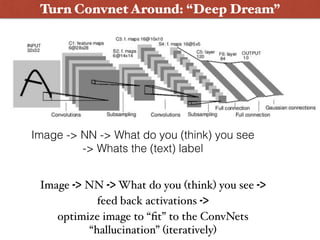

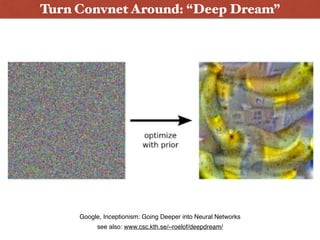

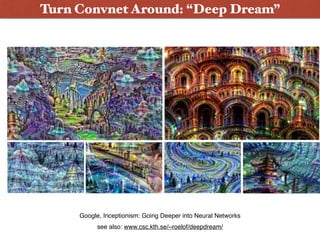

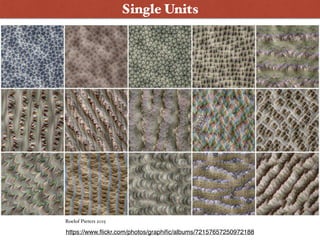

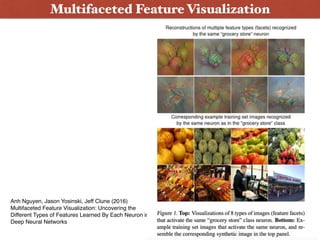

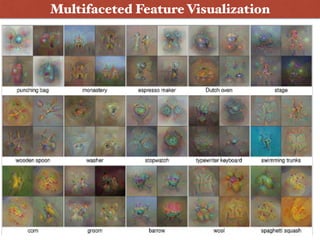

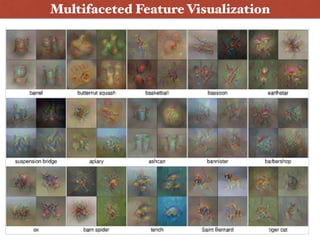

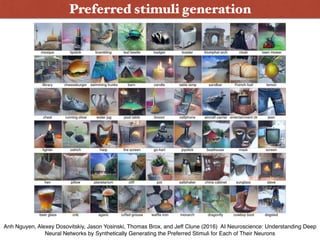

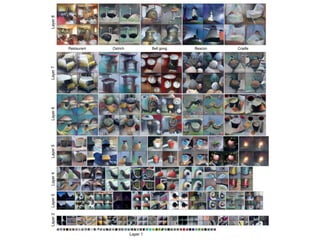

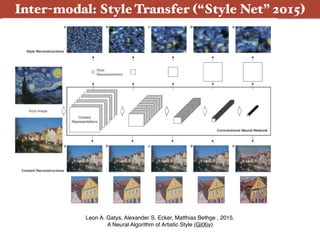

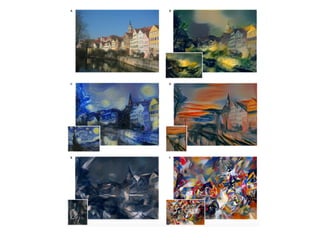

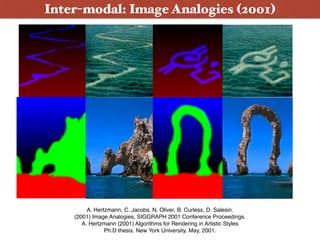

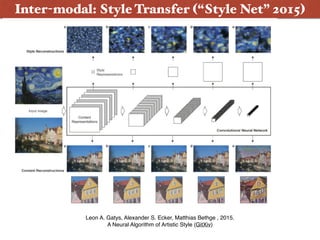

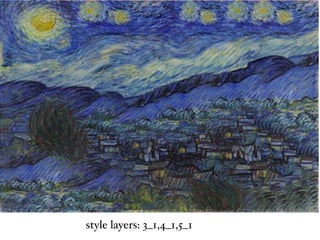

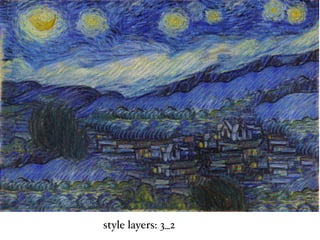

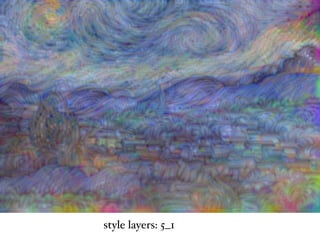

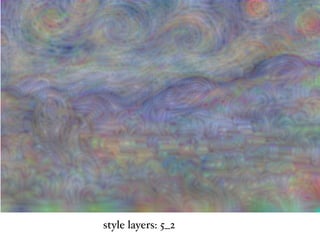

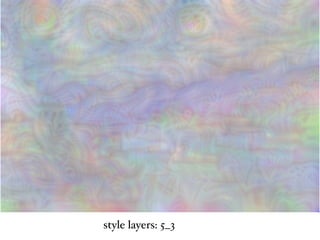

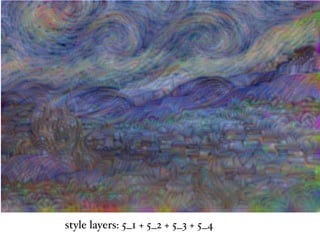

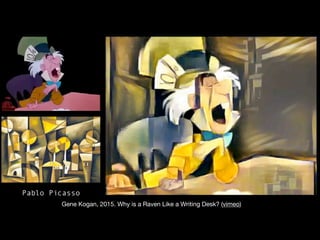

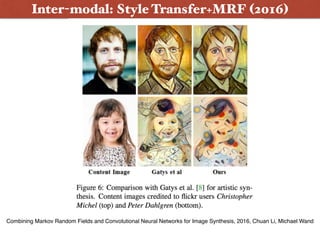

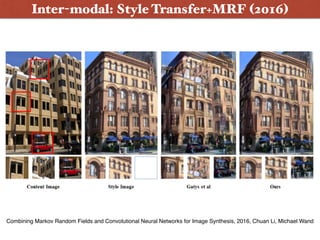

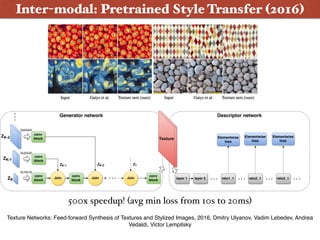

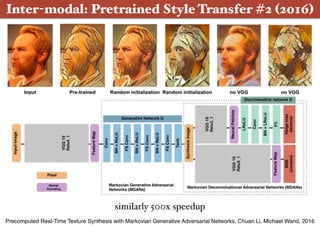

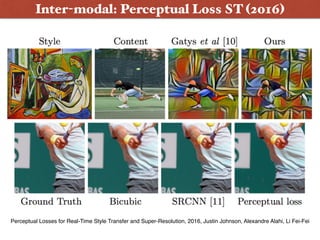

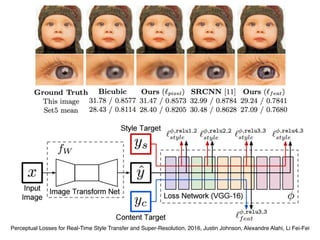

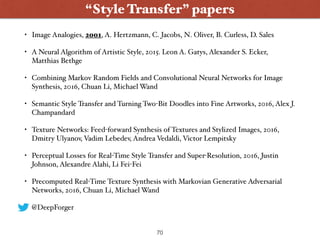

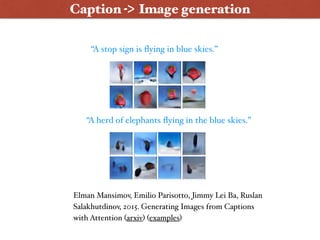

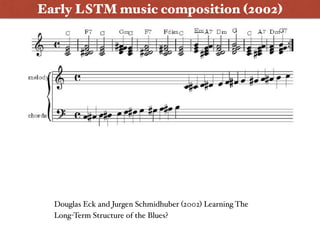

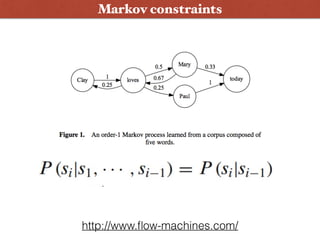

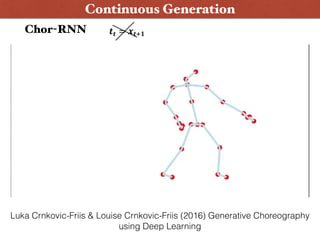

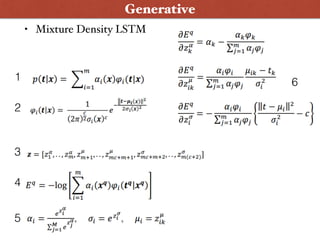

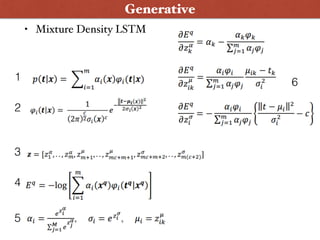

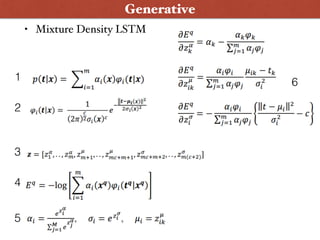

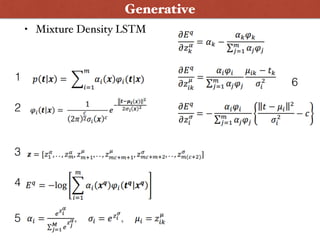

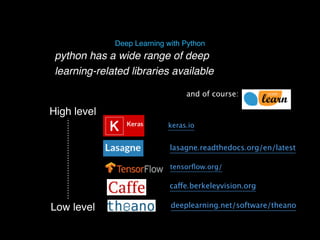

This document discusses multi-modal embeddings and generative models. It begins with common generative architectures such as VAEs, DBNs, RNNs, and CNNs. It then turns to specific applications: text generation with RNNs, image generation with techniques such as DeepDream and style transfer, and audio generation with LSTMs and mixture density networks. The document advocates for creative AI as a "brush" for rapid experimentation in human-machine collaboration.