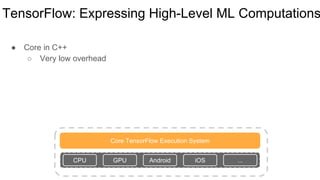

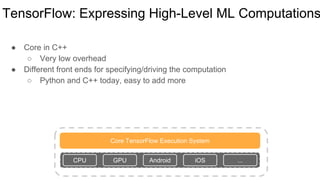

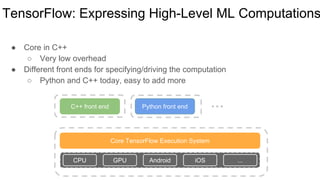

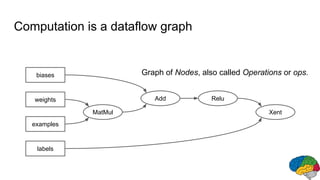

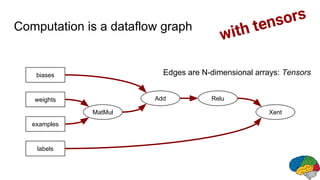

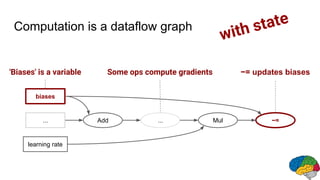

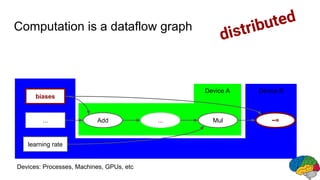

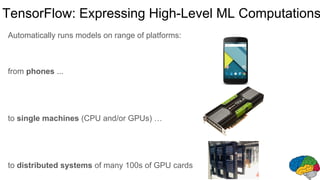

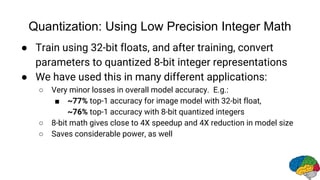

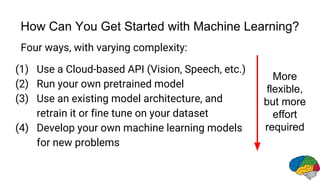

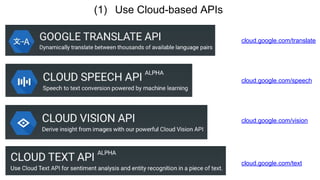

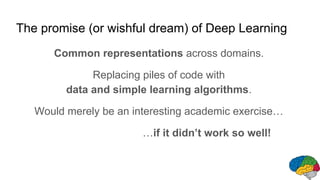

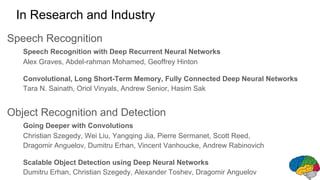

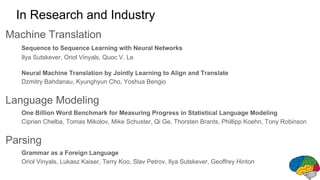

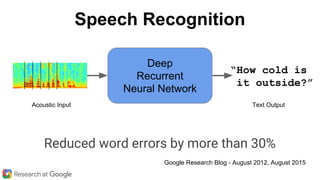

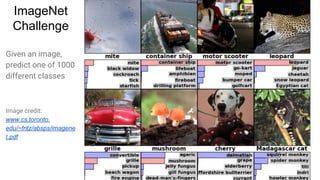

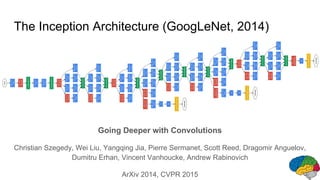

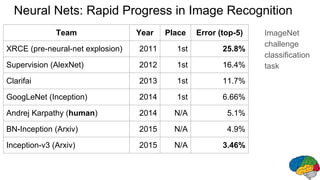

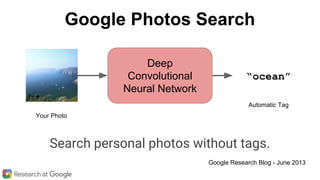

The document discusses advances in and applications of large-scale deep learning, particularly the work of the Google Brain team, and highlights the importance of deep neural networks in domains such as speech recognition, image processing, and natural language understanding. It emphasizes the significance of TensorFlow, an open-source software framework designed to facilitate machine learning and broaden access to deep learning technologies. The outlook for deep learning is optimistic, with growth anticipated across multiple sectors, including robotics and healthcare.

![Image Captions: "A young girl asleep" (Vinyals et al., CVPR 2015)](https://image.slidesharecdn.com/bim-01googledean-160516180952/85/Large-Scale-Deep-Learning-for-Building-Intelligent-Computer-Systems-a-Keynote-Presentation-from-Google-35-320.jpg)